Businesses such as Paxos that issue stablecoin USD tokens want to find a way to identify common token metrics such as top holders, daily active users, daily volume, total number of holders, latest transfers, top Decentralized Finance (DeFi) protocols the tokens have been used on, and more. With Amazon Managed Blockchain (AMB) Query and Amazon QuickSight, businesses can easily analyze Ethereum token data.

Moreover, with QuickSight, you can format dashboards in a visually pleasing way, automate reports, and easily obtain critical information for ERC20 tokens. In this post, we show how to use AMB Query and AWS data services like AWS Glue, Amazon Athena, and QuickSight to generate visualizations that help you analyze data related to ERC20 tokens.

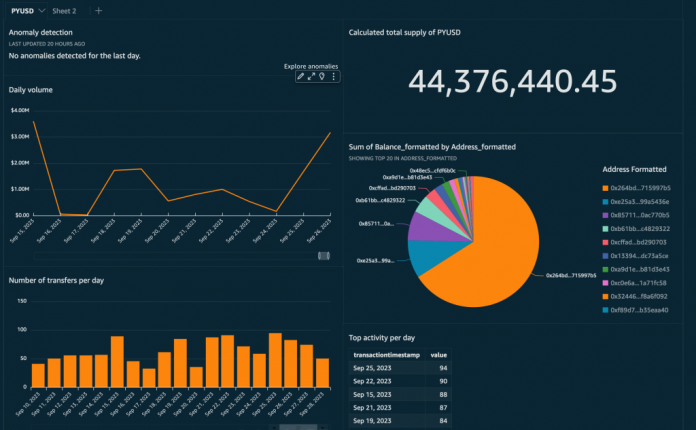

QuickSight dashboard displaying ERC20 token metrics.

How AMB Query helps with token visualization

In this section, we discuss the key features of AMB Query for Ethereum and why it’s the ideal choice for gathering the public blockchain data needed to generate visualizations for ERC20 token metrics.

Continuously updated finalized data

One of the key data points we want to capture on our token visualization dashboards are daily active users, daily volume, latest transfers, and top Ethereum DeFi platforms used by the token users. To display this information, we need a mechanism that can capture token operations. To do that, we can utilize AMB Query’s ListTransactions and ListTransactionEvents APIs.

The ListTransactions API gives you an entire list of all the transactions for Externally Owned Accounts (EOA) on Ethereum or smart contract addresses for ERC20 tokens. Take for example PayPal’s fully backed stablecoin PYUSD issued by Paxos. PayPal can use ListTransactions to get all the transactions of PYUSD. Then, you can call ListTransactionEvents with each transaction hash to query a list of all of the transfers for an address for tokens (ERC20, ERC1155, ERC721) or native coins (BTC, ETH) on a public blockchain. Similarly, for PayPal, they can retrieve a list of all of the balances for PYUSD tokens.

With data related to transactions, balances, and distribution of tokens, you can identify the top DeFi platforms by volume in PayPal’s PYUSD token exchanged. By identifying the smart contract address that holds the PYUSD tokens in a liquidity pool, you can check how many transfers that address does in a span of time.

Historical data

The other set of key data points we want to capture on our token visualization dashboards are top holders and total holders. To do this, the ListTokenBalances API will give you a list of all tokens minted by a smart contract when you pass in the appropriate smart contract address as the input. Moreover, AMB Query will provide historical data via the REST API that would otherwise require specialized indexing infrastructure and extract, transform, and load (ETL) processes to gain access.

Cost efficiency

Working with blockchain data can be expensive, particularly if you must manually run ETL on blockchain data and index it in various ways in order to serve query and analytics workloads. AMB Query, however, allows you to shed the undifferentiated heavy lifting and cost of operating blockchain data infrastructure on your own, and instead query the exact data you need on-demand using a pay-as-you-go model. Not only does this represent an opportunity for cost savings, AMB Query provides predictable pricing according to the complexity or resources used by the API calls, which makes it easy to determine what your costs will be for a given workload.

Solution overview

Now that we have discussed why we want token visualizations and why AMB Query would be a good fit in creating these token visualizations, we discuss how this would be implemented. The following figure shows the architecture of what the AMB Query integration would look like for creating token visibility dashboards.

The architecture uses the following key services:

AMB Query allows you serverless access to standardized, multi-blockchain datasets with developer-friendly APIs. By utilizing the ListTransactions, ListTransactionsEvents and ListTokenBalances APIs from AMB Query, you can gather both historical and latest finalized data about your ERC20 token.

AWS Glue is a serverless data integration service that simplifies the ETL process. In this case, we run a simple Python script in AWS Glue that calls the ListTransactions, ListTransactionsEvents and ListTokenBalances APIs from AMB Query.

Amazon Simple Storage Service (Amazon S3) is an object storage service. This is where we store all of the token information after every AWS Glue job run.

Amazon Athena is a serverless interactive analytics service. We can easily query for the data we want to extract and display on QuickSight dashboards.

Amazon QuickSight is a business analytics service which will help us display ERC20 token data.

In the following sections, we discuss the sets to configure our integration and create visualizations.

Prerequisites

You first need to set up an AWS account and configure your AWS Identity and Access Management (IAM) permissions correctly. For more information, refer to Changing permissions for an IAM user.

Create an S3 bucket

Amazon S3 is an object storage service provided by AWS. You can store and retrieve any amount of data at any time, from anywhere. In this case, we store data coming from our AWS Glue scripts, which means all of the historical and continuously updated finalized data for the token. All you have to do is create an S3 bucket, because all of the uploading is handled by the AWS Glue scripts you copy and paste from GitHub. From there, your data will be read by Athena. To create your S3 bucket, follow the instructions in Create your first S3 bucket.

Query data with AMB Query

AMB Query provides serverless access to standardized, multi-blockchain datasets with developer-friendly APIs that simplify building blockchain applications, including queries related to token balances, transaction details, and on-chain events.

In our solution, we use ListTransactions and ListTransactionEvents to get information about all transfer operations for the token of choice, and we use ListTokenBalances to export a snapshot of all token holders at a given time.

AMB Query has an interactive Query Editor that allows developers to query blockchain data. The following screenshot displays the results for the input parameters:

Blockchain network: ETHEREUM_MAINNET

Query type: ListTransactions

Ethereum address of the PYUSD token: 0x6c3ea9036406852006290770BEdFcAbA0e23A0e8

From date: 2023/03/26

To date: 2023/09/26

Create AWS Glue jobs

In this step, you create two AWS Glue jobs, go to the GitHub repository, copy the files token-snapshot.py and token-transfers.py, and then enter the code into the job code editor.

token-transfers.py captures all transfer operations of said token, so you can get continuously up-to-date finalized data. With token-snapshots.py, you can pass the token address as a job parameter. The data will be saved to Amazon S3.

Capture all token transfers

To capture the token transfers, you create an AWS Glue job called token-transfers. Complete the following steps:

On the AWS Glue console, choose Visual ETL in the navigation pane.

Select Python Shell script editor.

Select Create a new script with boiler plate code.

Choose Create.

Change the default title to token-transfers.

Copy the code from the token-transfers.py file from the token-dashboards code repository and enter it into the code editor.

Choose Job details and Advanced properties.

Under Job parameters, set the key –token to the token contract address of PYUSD: 0x6c3ea9036406852006290770BEdFcAbA0e23A0e8.

Pass the previously created S3 bucket name using –s3_bucket_name as the key and the name of the bucket as the value.

Run the job.

To create recurring jobs, choose Schedules in the navigation pane.

Choose Create schedule.

For Name, enter a name for the schedule (for this post, we use token-transfers-schedule).

For Frequency, choose Hourly.

For Minute of the hour, keep at 0. This way, you can automate your script to run every hour so you can be up to date in terms of daily active users, daily volume, and latest transfers.

Choose Create schedule.

Index all token holders

Now you create an AWS Glue job called token-snapshot, which is responsible for gathering all of the token holders’ balances and storing them in Amazon S3 for further analysis.

Follow the same steps as before to create the token-snapshot script, copy the code from the token-snapshot.py file from the token-dashboards GitHub repository, and enter it into the code editor.

Choose Job details and Advanced properties.

Under Job parameters, set the key –token to the value 0x6c3ea9036406852006290770BEdFcAbA0e23A0e8.

Pass the previously created S3 bucket name using –s3_bucket_name as the key and the name of the bucket as the value.

Run the job.

To create recurring jobs, choose Schedules in the navigation pane.

Choose Create schedule.

For Name, enter a name for the schedule (for this post, we use token_snapshot_schedule).

For Frequency, choose Daily.

For Minute of the hour, keep at 0. This way, you can automate your script to run one time a day so you can get an up-to-date snapshot of the latest list of all tokens minted by your smart contract.

Choose Create schedule.

You have now created two AWS Glue jobs that run on a schedule, feeding Ethereum data based on the ERC20 token selected. The data flows to Amazon S3, which will be read by Athena.

Query data in Athena

Athena is a serverless, highly scalable, and streamlined analytics service that meets data needs. In our solution, AWS Glue saves data to Amazon S3, and Athena acts as a data repository for QuickSight.

Run the following SQL script in Athena for your token-transfers script:

CREATE EXTERNAL TABLE events(

contractaddress string COMMENT ‘from deserializer’,

eventtype string COMMENT ‘from deserializer’,

from string COMMENT ‘from deserializer’,

to string COMMENT ‘from deserializer’,

value string COMMENT ‘from deserializer’,

transactionhash string COMMENT ‘from deserializer’,

transactiontimestamp string COMMENT ‘from deserializer’

)

ROW FORMAT SERDE ‘org.apache.hadoop.hive.serde2.OpenCSVSerde’

WITH SERDEPROPERTIES (

‘escapeChar’=’\’,

‘quoteChar’='”‘,

‘separatorChar’=’,’

)

STORED AS INPUTFORMAT ‘org.apache.hadoop.mapred.TextInputFormat’

OUTPUTFORMAT ‘org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat’

LOCATION ‘s3://BUCKET-NAME/TOKEN-ADDRESS/events‘

TBLPROPERTIES (‘classification’=’csv’, ‘skip.header.line.count’=’1’)

Run the following SQL script in Athena for your token-snapshot script:

CREATE EXTERNAL TABLE `token_snapshot`(

`address` string COMMENT ‘from deserializer’,

`balance` string COMMENT ‘from deserializer’

)

COMMENT ‘Creating a snapshot table from Athena.’

ROW FORMAT SERDE ‘org.apache.hadoop.hive.serde2.OpenCSVSerde’

WITH SERDEPROPERTIES (‘escapeChar’=’\’, ‘quoteChar’='”‘, ‘separatorChar’=’,’)

STORED AS INPUTFORMAT ‘org.apache.hadoop.mapred.TextInputFormat’

OUTPUTFORMAT ‘org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat’

LOCATION ‘s3://BUCKET-NAME/TOKEN-ADDRESS/snapshot‘

TBLPROPERTIES (‘classification’=’csv’, ‘skip.header.line.count’=’1’)

You should now have two tables, token-snapshot and token-events, displaying data from Amazon S3 that we extracted from AMB Query.

Create visualizations in QuickSight

Now that you have your AWS Glue Python script running and Athena configured properly, you can load your token data into an S3 bucket, query the data, and load it into QuickSight for visualizations.

Import your data

To import your data, complete the following steps:

On the QuickSight console, choose Analyses in the navigation pane.

Choose New analysis.

Choose New dataset.

Choose Athena.

In the pop-up that appears, for Data source name, enter AwsDataCatalog and leave Athena workgroup as primary.

Choose Create data source.

Select the appropriate tables (for this post, we use events).

Choose Select.

Choose between Directly query your data, then choose Visualize.

Create visualizations

Now that you have successfully imported all your datasets, you can create a graph in QuickSight.

Make sure the dataset you have chosen is token_snapshot.

Under Fields list, create a calculated field for balance, select address and balance_int.

Under Visual types, choose the pie chart icon.

Now you will be able to see a visual like the one in the following screenshot on your dashboard.

You can truncate the addresses in the pie chart to make it look cleaner.

On the Insert menu, choose Add Calculated Field.

Enter the following code:

concat(substring(address, 1, 6), ‘…’, substring(address, 39, 4))

Now your pie chart will look like the following screenshot. To learn more about QuickSight calculated fields, refer to Adding calculated fields.

With QuickSight, business analysts can easily create rich dashboards using ERC20 token data that gets automatically updated. Dashboards can be shared across the organizations, and reused and shared publicly. Leveraging QuickSight, you can build more elaborate dashboard tailored to your business needs. The following screenshot shows a fully-developed dashboard.

Clean up

To stop incurring costs from the resources you created during this post, remove the scheduled AWS Glue jobs, adjust your subscription plan for QuickSight, and delete all the files created in the target S3 bucket.

Conclusion

In this post, we discussed the challenges associated with extracting blockchain data related to ERC20 tokens and generating visualizations for the token data. We then outlined a solution that provides a cost-efficient way to create visualizations with blockchain data using services like AMB Query, AWS Glue, and QuickSight. We also included a step-by-step tutorial to implement this solution in your environment.

Now that you have learned how you can visualize ERC20 token data on Ethereum using AMB Query and QuickSight, let’s start building!

About the authors

Everton Fraga works as Sr. Blockchain/Web3 Specialist Solutions Architect at AWS. He helps companies worldwide to build Web3 infrastructure and applications. He is a former Software Engineer at Ethereum Foundation.

Varsha Narmat is a Blockchain/Web3 Specialist Solutions Architect at AWS. Prior to AWS, Varsha obtained a BS in Computer Science from Michigan State University and has always been passionate about technology and innovation. Outside of work, Varsha enjoys reading, traveling, and staying active.

Read MoreAWS Database Blog