Applications in the past were monolithic and rigid, and their components were closely coupled with each other, making them difficult to develop, maintain, and adapt to changing business requirements. Today’s businesses need to deliver changes rapidly, frequently and reliably.

To overcome these issues, organizations are moving from development of monolithic applications to microservices architectures for building their modern applications. This new style of architecture has increased the agility, portability, adaptability, and scalability of these applications.

Studies show that a 100-millisecond delay on a website can hurt conversion rates by 7%, and that 53% of mobile users may abandon a site if a page takes longer than 3 seconds to load. Modern applications demand ultra-fast response times. As applications grow in popularity, disk-based databases pose performance challenges for microservices architectures. Amazon MemoryDB for Redis is an in-memory database that provides low latency and durability. In this post, we explain why microservices architectures need a high-performance in-memory database and how MemoryDB can support the latency and persistence needs of modern microservices applications.

Why MemoryDB is a good fit for microservices

To understand why MemoryDB is a good fit for microservices, let’s look at the low-latency transactional data needs of microservices, the durability guarantees of MemoryDB, and the advantages of Redis compatibility.

Transactional data needs: The terms hot, warm, and cold data refer to how frequently data in storage is accessed. Hot data is the application’s transactional data, and it needs to be stored on the fastest storage to meet the requirements of performance-intensive modern applications. Warm data is accessed less frequently and is stored on slightly slower storage. Cold data is rarely accessed and is usually archived for analytical or historical purposes. Microservices put more emphasis on the hot (transactional) data that they are responsible for handling, and they need to manage frequent reads and writes to this data. Unlike disk-based primary databases, MemoryDB stores all of its data in memory, thereby providing microsecond reads and single-digit millisecond writes. MemoryDB is a flexible and versatile database that can serve massive throughput at microsecond data access speeds, even with small resources. This is beneficial for microservices because their latency budgets are often tight and the data they store is often small. MemoryDB can read over 300,000 messages per second with response times under 1 millisecond, and provides zero data loss.

Durability and High Availability: To meet the data durability needs of business-critical microservices applications, MemoryDB stores all data in memory and also in a durable, distributed Multi-AZ transactional log. The distributed transaction log supports strongly consistent append operations and stores data encrypted in multiple Availability Zones (AZs) for both durability and availability. Every write is stored on disk in multiple AZs before it becomes visible to a client, which provides data durability. The MemoryDB Multi-AZ transactional log is powered by the same technology that’s used by services with the highest durability needs, such as Amazon S3. This transaction log is then used as a replication bus: the primary node records its updates to the log, and the replicas consume them. This enables replicas to have an eventually consistent view of the data on the primary and to provide high availability. If the primary node suffers a hardware failure, a failover happens almost immediately, and a replica is promoted to primary without losing any data because it reads the data directly from the Multi-AZ transactional log. Amazon MemoryDB for Redis offers an availability Service Level Agreement (SLA) of 99.99% when using a Multi-AZ configuration.
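To make the replication-bus idea concrete, here is a toy model in Python. It is only a conceptual sketch of the behavior described above (append to the log first, replicas replay the log), not a representation of how MemoryDB is implemented; the `TransactionLog` and `Node` classes are hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TransactionLog:
    """Stand-in for the durable Multi-AZ log: an append-only list."""
    entries: list = field(default_factory=list)

    def append(self, key, value):
        self.entries.append((key, value))
        return len(self.entries)  # position of the newly appended entry


@dataclass
class Node:
    log: TransactionLog
    data: dict = field(default_factory=dict)
    applied: int = 0  # number of log entries already applied locally

    def write(self, key, value):
        """Primary path: append to the log first, then expose the write."""
        position = self.log.append(key, value)
        self.data[key] = value
        self.applied = position

    def catch_up(self):
        """Replica path: apply every log entry not yet seen."""
        for key, value in self.log.entries[self.applied:]:
            self.data[key] = value
        self.applied = len(self.log.entries)


log = TransactionLog()
primary = Node(log)
replica = Node(log)

primary.write("trip:1", "requested")
primary.write("trip:1", "assigned")

stale_view = dict(replica.data)  # the replica has not consumed the log yet
replica.catch_up()               # before promotion, the replica replays the log
```

In this model the replica lags behind the primary until it consumes the log (the eventually consistent view), and after replaying the log it holds every acknowledged write, which is why promotion does not lose data.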

Redis compatibility: Most databases provide very powerful data and query models that enable rich (database-level) server-side processing on behalf of the applications. Conventional key-value stores provide minimal server-side processing in order to store and retrieve data at scale. MemoryDB for Redis provides a scalable key-value model with server-side scripting and rich data structures. This approach provides server-side processing without incurring a performance penalty. MemoryDB is fully compatible with Redis, which has been voted the most-loved database 5 years in a row and has a simple API to store and access data in memory. For modern microservices applications, a high-performance, durable key-value store such as MemoryDB with intelligent Redis data structures is often the best tradeoff.

MemoryDB can support performance-sensitive modern applications, microservices principles such as loose-coupling, and enable low-latency durable asynchronous communication. The combination of features in MemoryDB such as microsecond read performance, durability, consistency, and availability of built-in Redis data structures makes it suitable for usage patterns we see in microservices applications.

Microservices Data Layer

Microservices are the backbone of modern applications that organizations are building today. It is critical to design a good service architecture by correctly identifying the services and defining their responsibilities and communication interfaces. In a microservices architecture, the application is broken down into multiple subdomains based on a business function or a problem space. Each subdomain complies with essential microservices principles, such as loose coupling, to avoid the disadvantages of monolithic applications. Loose coupling allows services to be developed, deployed, and scaled independently. It is a best practice to enforce loose coupling by building microservices that do not maintain state within each service across different client requests. Offloading state management to a persistence layer enables the microservices to be stateless, which makes them much easier to manage and increases development and deployment velocity. This persistence layer needs to be fast, reliable, and scalable.

MemoryDB for the microservices architecture patterns

Microservices need to persist data in a database to implement stateless services and loose-coupling. In a microservices architecture, different services have different data requirements. For some services, a relational database is the best choice and for others, a NoSQL key-value or document database is best. Some business transactions need to enforce certain rules on the data they handle. To support these database requirements, the solution is to implement a Database per Service pattern. Other use cases, such as sharing data across microservices (for shared state) or for durable asynchronous communication, need a centralized shared database.

While building microservices, developers often start with disk-based services like a relational database or a familiar NoSQL database for microservice state and domain data management. As each microservice is responsible for its subdomain and the associated data, each single user action can translate to several microservice and database calls on the backend, resulting in higher latencies. For applications that are performance-sensitive, the following microservices usage patterns will benefit from the high performance, durability, strong consistency, and built-in Redis data structures of MemoryDB:

Database per Service pattern – Use MemoryDB for each individual microservice that is concerned only with the data private to that service. MemoryDB can back a wide range of microservices, each with their own data store.

Shared state and durable session store pattern – Use MemoryDB to store states durably, enabling fast access to this data during multiple independent stateless calls made during an application flow.

Durable Async Cross-service communication pattern – Use MemoryDB to create a highly durable message queue to asynchronously pass messages between microservices, and support high throughput (process thousands of messages per second with millisecond write latency), guaranteed delivery, and acknowledgement of messages with zero maintenance needed for queue administration.

Let’s dive deeper into these microservices patterns.

Database per Service pattern

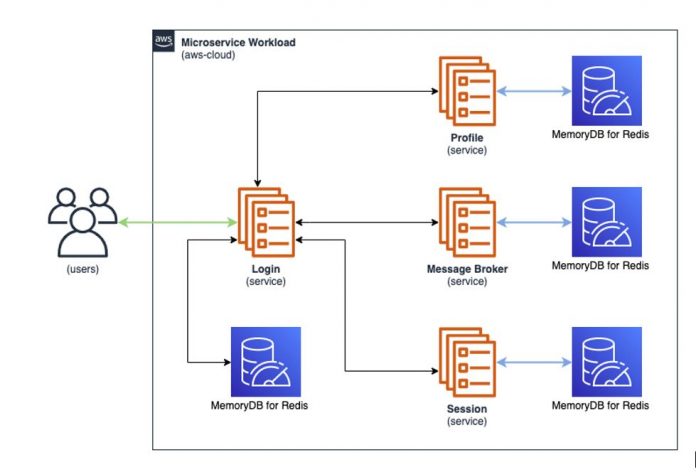

In the preceding illustration, the Login, User Profile, Fraud, and Session (security) microservices each use their own logical instance of MemoryDB to persist that service’s private data. This enforces loose coupling between services, allowing them to be independently scaled and deployed. The User Profile, Security, and Login services, for example, can receive large numbers of read requests during peak times with very low latency requirements. Using MemoryDB, we can scale each microservice linearly by adding shards whenever needed while maintaining consistent sub-millisecond response times.

Let’s look at an example of the User Profile service: a code snippet in Python using the Flask framework and the redis-py library.

We define our business model entity and implement our API endpoints; the CRUD (Create, Read, Update, and Delete) operations use Redis Hashes to store a single User Profile. For simplicity, only the write and read operations are shown here. The Redis OM and Pydantic libraries keep the code simple and perform validation automatically. For production code, you’d need to add logging and error handling.

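    # Assumes memorydb_client is a Redis client already connected to the MemoryDB cluster.
    from typing import Optional

    from flask import Flask, request
    from pydantic import EmailStr, PositiveInt, ValidationError
    from redis_om import HashModel, NotFoundError

    app = Flask(__name__)

    # Create a User class that validates input and maps each property to a field/value in a Redis Hash
    class User(HashModel):
        user_name: str
        first_name: str
        last_name: str
        email: EmailStr
        age: PositiveInt
        bio: Optional[str] = None

        class Meta:
            # Redis OM reads the connection from this attribute, so it must be an assignment
            database = memorydb_client

    @app.route('/user/save', methods=['POST'])
    def save_users():
        try:
            # Pydantic validates the request body when the model is constructed
            new_user = User(**request.json)
            new_user.save()
            return new_user.pk
        except ValidationError:
            return "Bad request.", 400

    @app.route('/user/read/<id>', methods=['GET'])
    def read_user(id: str):
        try:
            user = User.get(id)
            return user.dict()
        except NotFoundError:
            return {}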

This microservice is deliberately simple. The golden rule for a microservice’s private data is share-nothing, so we’ve kept it to a single domain, User, with only read and write operations. Depending on the complexity of your business logic, your code might look quite different; the goal here is to showcase simplicity and efficiency, with the idea of keeping these microservices lean and scalable.
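The snippets in this post reference a `memorydb_client` object without showing how it is created. The following is a minimal sketch of one way to build it with redis-py’s cluster client, assuming the cluster has in-transit encryption (TLS) enabled. The environment variable name `MEMORYDB_ENDPOINT` and both helper function names are hypothetical.

```python
import os


def memorydb_client_kwargs(endpoint: str, port: int = 6379) -> dict:
    """Connection settings for a cluster-mode endpoint with TLS enabled."""
    return {
        "host": endpoint,
        "port": port,
        "ssl": True,               # assumption: in-transit encryption is enabled
        "decode_responses": True,  # return str values instead of bytes
    }


def make_memorydb_client(endpoint: str, port: int = 6379):
    """Create a redis-py cluster client for the given endpoint."""
    # Imported lazily so this module still loads where redis-py is not installed.
    from redis.cluster import RedisCluster

    return RedisCluster(**memorydb_client_kwargs(endpoint, port))


# MEMORYDB_ENDPOINT is a hypothetical environment variable holding the cluster endpoint.
endpoint = os.environ.get("MEMORYDB_ENDPOINT")
memorydb_client = make_memorydb_client(endpoint) if endpoint else None
```

Reading the endpoint from the environment keeps the service stateless and lets each microservice point at its own cluster without code changes.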

Shared state microservices pattern (durable session store)

Microservices are discrete units of compute that need the backing of a persistent data layer. While the Database per Service pattern discusses how microservices can manage their private domain data, the shared state pattern discusses how microservices can share the data that is common to all of them. The applications use a state service to share state between service calls and increase application capabilities. These microservices also need a durable database to store user session information, which may include user profile information, personalized recommendations, and other session-related data. For certain use cases, the session store data can’t be ephemeral because it’s the only source of truth for a live session and needs to be durably stored so that the session can be resumed later. For ephemeral session or shared state use cases, we recommend Amazon ElastiCache. To avoid degrading the end-user experience, the session store needs high availability, high performance, and data durability. As a durable in-memory database, MemoryDB enables low-latency applications and dramatically improves user experience and service-level agreements for performance and throughput.

In the following figure, we use MemoryDB to store that session state and the Trip Management and Driver Management microservices are consuming and persisting states to MemoryDB. This shared state allows for loosely coupled microservices to exchange information within a broader application context. Because MemoryDB stores data in memory, it enables the low-latency applications to meet the high-performance requirements of accessing the session information.

For the state value here, there is flexibility of Redis data structures:

Hash map – This captures the field/value properties consumed or stored by the Trip Management and Driver Management services. These may include trip creation date, requester, location, status, and more. The Driver Management service may add further properties, such as driver and car, to the initial trip request.

String – You can use a string if a particular serialized data format is being exchanged across the different services.

Sorted set – You can use a sorted set to rank incoming trip requests. Data can be sorted by timestamp to indicate the order of trip requests needing attention. The value of a sorted set can also include a reference to another key, such as the hash map containing the trip properties.

JSON – MemoryDB natively supports the JavaScript Object Notation (JSON) format, a simple, schemaless way to encode complex datasets inside Redis clusters. You can store, access, and update JSON data in those clusters without needing to manage custom code to serialize and deserialize it.
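As a rough illustration of how the hash map and sorted set options above can work together, the following sketch stores trip properties in a hash and ranks pending trips by request time in a sorted set. The key names (`trip:<id>`, `trips:pending`) and function names are hypothetical, and `client` is assumed to be any redis-py-compatible client; only the standard `hset`, `zadd`, `zrem`, and `zrange` commands are used.

```python
import time


def create_trip(client, trip_id, requester, location, requested_at=None):
    """Store trip properties in a hash and rank the trip by request time."""
    requested_at = time.time() if requested_at is None else requested_at
    key = f"trip:{trip_id}"
    client.hset(key, mapping={
        "requester": requester,
        "location": location,
        "status": "requested",
        "created": requested_at,
    })
    # The sorted-set member references the hash key; the score is the timestamp.
    client.zadd("trips:pending", {key: requested_at})
    return key


def assign_driver(client, trip_key, driver, car):
    """Add driver details to the shared trip state and remove it from the pending ranking."""
    client.hset(trip_key, mapping={"driver": driver, "car": car, "status": "assigned"})
    client.zrem("trips:pending", trip_key)


def oldest_pending_trips(client, count=10):
    """Return the keys of the trips that have been waiting the longest."""
    return client.zrange("trips:pending", 0, count - 1)
```

In this sketch the Trip Management service would call `create_trip`, and the Driver Management service would call `oldest_pending_trips` and `assign_driver`. Each function issues separate commands, so the hash update and the sorted set update are not atomic together.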

Similar to our previous example, let’s see how we can implement a simple session store with a Flask application. Start by creating a new app in a Python environment:

    # Flask needs to know we want to use MemoryDB for Redis as our backend distributed datastore for sessions
    # Assumes memorydb_client is a Redis client already connected to the MemoryDB cluster.
    from flask import Flask, redirect, render_template, request, session, url_for
    from flask_session import Session

    app = Flask(__name__)
    app.secret_key = 'USE_SUPER_SECRET_KEY'  # Remember to change this value
    app.config['SESSION_TYPE'] = 'redis'
    # …
    app.config['SESSION_REDIS'] = memorydb_client
    server_session = Session(app)

    @app.route('/session/save', methods=['GET', 'POST'])
    def set_session():
        if request.method == 'POST':
            # Save the form data to the session object
            session['user_name'] = request.form['user_name']
            return redirect(url_for('get_session'))
        return render_template('session_form.html', show_form=True)

    @app.route('/session/read')
    def get_session():
        return render_template('session_form.html')

Durable Asynchronous communication pattern

A microservices application is a distributed system, and the communication between microservices needs to happen over the network. To support microservices architecture principles such as loose coupling, independent scalability, and independent operations, the communication between the microservices needs to be loosely coupled as well. Although the communication can occur via REST APIs, request/response over REST is inherently synchronous: the caller needs to wait for the response from the API it calls. REST client APIs offer asynchronous implementations via callbacks, but these introduce complexity to the applications. If there is a complex communication path (resulting in multiple intermediate calls to several microservices and other third-party systems), it can effectively add coupling to the microservices that are communicating with each other. If loose coupling is important to the application, an alternative is asynchronous messaging.

When using asynchronous communication for microservices, it is common to use a message broker, usually implemented using a queue. A broker ensures that the communication between different microservices is reliable and stable, and that messages are managed and monitored within the system and don’t get lost. For applications with asynchronous communication, durability, low latency, and high throughput requirements, MemoryDB can provide a high-performance (more than 300,000 messages per second and microsecond read response times), highly durable queue or message broker.

In the following figure, we use MemoryDB as a priority queue for asynchronous communication between microservices in a media processing application. The video API service communicates asynchronously by placing the message in the durable MemoryDB queue, making it ready for consumption by other chunking services. This guarantees message delivery and consumption, and avoids any data loss.
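One common way to build a priority queue on Redis-compatible data structures is a sorted set, with the priority as the score. The following is a minimal sketch under that assumption, not the implementation used in the architecture described here; the function names and the queue key are hypothetical, and `client` is assumed to be any redis-py-compatible client.

```python
import json


def enqueue_job(client, queue, job, priority):
    """Add a job to the queue; lower priority numbers are served first."""
    # Sorted-set members are unique, so each job should carry its own identifier.
    client.zadd(queue, {json.dumps(job, sort_keys=True): priority})


def dequeue_job(client, queue):
    """Atomically remove and return the highest-priority job, or None if the queue is empty."""
    popped = client.zpopmin(queue, 1)  # list of (member, score) tuples
    if not popped:
        return None
    member, _score = popped[0]
    return json.loads(member)
```

Note that `ZPOPMIN` removes the job before the consumer has processed it, so this sketch on its own does not redeliver a job if the consumer crashes midway. When acknowledgements are required, Redis Streams with consumer groups are a better fit.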

This solution provides the following features:

A modular architecture and container-based processing for media functions

Enhanced in-memory media processing algorithms

A persistent queue with message delivery guarantees

A highly durable queue to asynchronously pass messages between microservices with zero data loss

The ability to read over 300,000 messages per second with microsecond response times

Traceability of the entire message lifecycle

MemoryDB is implemented here for a media workflow and messaging platform that uses a highly durable persistent queue to asynchronously pass messages between microservices with ultra-fast response times.

As mentioned, MemoryDB is Redis compatible and supports Redis data structures:

Message queues – These are built on the Redis LIST data structure. Message queues are ideal for queuing specific work for particular consumers. In this case, a microservice uses a specific key identifier to interact with another user.

Message streams – These are ideal for scaling the consumption of messages across consumers. In a microservices implementation, this could be media content delivered to a valid audience.
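For the message stream option, the sketch below shows how a producer and a group of consumers might exchange messages with acknowledgement, using the Redis Streams commands `XADD`, `XGROUP CREATE`, `XREADGROUP`, and `XACK` through redis-py. The function names are hypothetical, `client` is assumed to be any redis-py-compatible client, and the reply shape assumes redis-py’s default (RESP2) parsing.

```python
def publish(client, stream, fields):
    """Append a message to the stream and return the generated message ID."""
    return client.xadd(stream, fields)


def ensure_group(client, stream, group):
    """Create the consumer group (and the stream) if it does not exist yet."""
    try:
        client.xgroup_create(stream, group, id="0", mkstream=True)
    except Exception as exc:
        # Redis replies with a BUSYGROUP error when the group already exists.
        if "BUSYGROUP" not in str(exc):
            raise


def consume(client, stream, group, consumer, handler, count=10):
    """Read new messages for this consumer and acknowledge each one after handling it."""
    handled = 0
    # ">" asks for messages never delivered to any consumer in the group.
    for _stream_name, messages in client.xreadgroup(group, consumer, {stream: ">"}, count=count):
        for message_id, fields in messages:
            handler(fields)
            client.xack(stream, group, message_id)  # acknowledge only after success
            handled += 1
    return handled
```

A message that is read but never acknowledged (for example, because `handler` raised an exception) stays in the group’s pending entries list, where it can be inspected and claimed by another consumer. For the simpler message queue option, `LPUSH` on the producer side and `BRPOP` on the consumer side give a basic first-in, first-out queue on a LIST.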

Containerize the solution

Microservices are encapsulated using containers, which provide isolation and enable automated deployment, scaling, and improved application management. To manage a large cluster of containers we need to use a container orchestration platform. AWS offers two container orchestration services: Amazon Elastic Container Service (Amazon ECS) for running and managing applications built using Docker containers, and Amazon Elastic Kubernetes Service (Amazon EKS) for running and managing Kubernetes applications on AWS. AWS Fargate is a serverless compute service that can power both EKS and ECS applications.

Developers can containerize and deploy the microservices architecture using the following steps:

Host the Docker images in Amazon Elastic Container Registry (Amazon ECR).

Define the workloads for Amazon EKS as Kubernetes manifests (or, on Amazon ECS, create task definitions).

Connect to MemoryDB for Redis using open-source clients.

The following diagram illustrates this architecture.

Deploy MemoryDB using ACK

AWS Controllers for Kubernetes (ACK) is a tool that lets developers manage AWS services directly from Kubernetes. You can use ACK for MemoryDB to provision and manage MemoryDB clusters.

To learn about the advantages of using ACK and deploying MemoryDB using ACK, refer to Deploy a high-performance database for containerized applications: Amazon MemoryDB for Redis with Kubernetes.

Call to Action

With the advanced features described in this post, MemoryDB simplifies the development of modern applications and fits well into the microservices landscape. In this post, we discussed the requirements of a modern application, why MemoryDB is a good fit for microservices applications, and common microservices data patterns. For more information on how Amazon MemoryDB for Redis can provide ultra-fast performance for your applications, visit https://aws.amazon.com/memorydb and the Developer Guide.

About the authors

Sashi Varanasi is a Global Leader for Specialist Solutions architecture, In-Memory and Blockchain Data services. She has 25+ years of IT Industry experience and has been with AWS since 2019. Prior to AWS, she worked in Product Engineering and Enterprise Architecture leadership roles at various companies including Sabre Corp, Kemper Insurance and Motorola.

Roberto Luna Rojas is an AWS Sr. Solutions Architect specializing in in-memory NoSQL databases, based in NY. He works with customers from around the globe to get the most out of in-memory databases, one bit at a time. When not in front of the computer, he loves spending time with his family, listening to music, and watching movies, TV shows, and sports.

Read more: AWS Database Blog