At AWS re:Invent 2023, we announced the general availability of Knowledge Bases for Amazon Bedrock. With Knowledge Bases for Amazon Bedrock, you can securely connect foundation models (FMs) in Amazon Bedrock to your company data for fully managed Retrieval Augmented Generation (RAG).

In previous posts, we covered new capabilities like hybrid search support, metadata filtering to improve retrieval accuracy, and how Knowledge Bases for Amazon Bedrock manages the end-to-end RAG workflow.

Today, we’re introducing the new capability to chat with your document with zero setup in Knowledge Bases for Amazon Bedrock. With this new capability, you can securely ask questions on single documents, without the overhead of setting up a vector database or ingesting data, making it effortless for businesses to use their enterprise data. You only need to provide a relevant data file as input and choose your FM to get started.

But before we jump into the details of this feature, let’s start with the basics and understand what RAG is, its benefits, and how this new capability enables content retrieval and generation for short-term needs.

What is Retrieval Augmented Generation?

FM-powered artificial intelligence (AI) assistants have limitations, such as providing outdated information or struggling with context outside their training data. RAG addresses these issues by allowing FMs to cross-reference authoritative knowledge sources before generating responses.

With RAG, when a user asks a question, the system retrieves relevant context from a curated knowledge base, such as company documentation. It provides this context to the FM, which uses it to generate a more informed and precise response. RAG helps overcome FM limitations by augmenting its capabilities with an organization’s proprietary knowledge, enabling chatbots and AI assistants to provide up-to-date, context-specific information tailored to business needs without retraining the entire FM. At AWS, we recognize RAG’s potential and have worked to simplify its adoption through Knowledge Bases for Amazon Bedrock, providing a fully managed RAG experience.
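To make the retrieve-then-generate flow concrete, the following is a toy sketch in Python. It is not how Knowledge Bases for Amazon Bedrock is implemented: retrieval here is a simple word-overlap ranking rather than vector search, and the function names (`retrieve`, `build_prompt`) and the sample passages are hypothetical, chosen only for illustration. The resulting prompt is what would be sent to an FM.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: List[str], top_k: int = 1) -> List[str]:
    """Rank passages by word overlap with the question (toy stand-in for vector search)."""
    question_words = tokenize(question)
    ranked = sorted(
        passages,
        key=lambda passage: len(question_words & tokenize(passage)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, context: List[str]) -> str:
    """Augment the user question with the retrieved context before calling an FM."""
    joined = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{joined}\n\n"
        f"Question: {question}"
    )


# Hypothetical company documentation used as the knowledge source.
passages = [
    "Employees accrue 20 vacation days per year.",
    "The office is closed on public holidays.",
    "Expense reports are due by the fifth of each month.",
]
question = "How many vacation days do employees accrue?"
prompt = build_prompt(question, retrieve(question, passages))
```

In a managed setup, the retrieval step and the prompt assembly are handled for you; the sketch only shows where the retrieved context enters the request.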

Short-term and instant information needs

Although a knowledge base does all the heavy lifting and serves as a persistent large store of enterprise knowledge, you might require temporary access to data for specific tasks or analysis within isolated user sessions. Traditional RAG approaches are not optimized for these short-term, session-based data access scenarios.

With a persistent knowledge base, businesses incur ongoing charges for data storage and management. This can make RAG less cost-effective for organizations with highly dynamic or ephemeral information requirements, especially when data is only needed for specific, isolated tasks or analyses.

Ask questions on a single document with zero setup

This new capability to chat with your document within Knowledge Bases for Amazon Bedrock addresses the aforementioned challenges. It provides a zero-setup method to use your single document for content retrieval and generation-related tasks, along with the FMs provided by Amazon Bedrock. With this new capability, you can ask questions of your data without the overhead of setting up a vector database or ingesting data, making it effortless to use your enterprise data.

You can now interact with your documents in real time without prior data ingestion or database configuration. You don’t need to take any further data readiness steps before querying the data.

This zero-setup approach makes it straightforward to use your enterprise information assets with generative AI using Amazon Bedrock.

Use cases and benefits

Consider a recruiting firm that needs to analyze resumes and match candidates with suitable job opportunities based on their experience and skills. Previously, you would have to set up a knowledge base, run a data ingestion workflow, and make sure only authorized recruiters can access the data. Additionally, you would need to manage cleanup when the data was no longer required for a session or candidate. In the end, you could pay more for the vector database storage and management than for the actual FM usage. This new feature in Knowledge Bases for Amazon Bedrock enables recruiters to quickly and ephemerally analyze resumes and match candidates with suitable job opportunities based on the candidate’s experience and skill set.

For another example, consider a product manager at a technology company who needs to quickly analyze customer feedback and support tickets to identify common issues and areas for improvement. With this new capability, you can simply upload a document to extract insights in no time. For example, you could ask “What are the requirements for the mobile app?” or “What are the common pain points mentioned by customers regarding our onboarding process?” This feature empowers you to rapidly synthesize this information without the hassle of data preparation or any management overhead. You can also request summaries or key takeaways, such as “What are the highlights from this requirements document?”

The benefits of this feature extend beyond cost savings and operational efficiency. By eliminating the need for vector databases and data ingestion, this new capability within Knowledge Bases for Amazon Bedrock helps secure your proprietary data, making it accessible only within the context of isolated user sessions.

Now that we’ve covered the feature benefits and the use cases it enables, let’s dive into how you can start using this new feature from Knowledge Bases for Amazon Bedrock.

Chat with your document in Knowledge Bases for Amazon Bedrock

You have multiple options to begin using this feature:

The Amazon Bedrock console

The Amazon Bedrock RetrieveAndGenerate API (SDK)

Let’s see how we can get started using the Amazon Bedrock console:

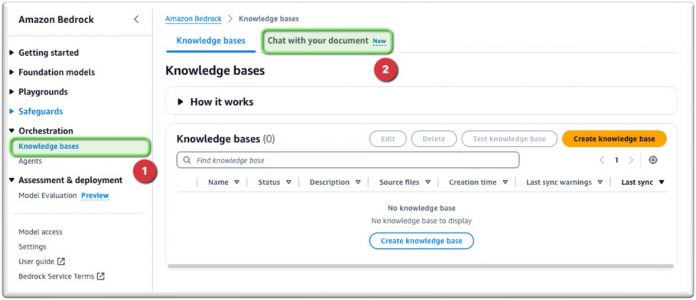

On the Amazon Bedrock console, under Orchestration in the navigation pane, choose Knowledge bases.

Choose Chat with your document.

Under Model, choose Select model.

Choose your model. For this example, we use the Claude 3 Sonnet model (Sonnet is the only model supported at launch).

Choose Apply.

Under Data, you can upload the document you want to chat with or point to the Amazon Simple Storage Service (Amazon S3) bucket location that contains your file. For this post, we upload a document from our computer.

The supported file formats are PDF, MD (Markdown), TXT, DOCX, HTML, CSV, XLS, and XLSX. Make sure that the file size does not exceed 10 MB and that the file contains no more than 20,000 tokens. A token is a unit of text, such as a word, sub-word, number, or symbol, that is processed as a single entity. Because of the preset ingestion token limit, we recommend using a file under 10 MB. However, a text-heavy file that is much smaller than 10 MB can still exceed the token limit.
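If you want to catch obvious problems before uploading, a local pre-check along the following lines may help. This is a sketch under stated assumptions: the helper name `check_document` is hypothetical, 10 MB is treated as 10 × 1024 × 1024 bytes, and the token count is only approximated at roughly four characters per token, which is a rule of thumb and not the tokenizer the service uses. The estimate is applied to plain-text formats only, because binary formats such as PDF or DOCX need text extraction first.

```python
from pathlib import Path
from typing import List

# File formats and limits as listed in this post.
SUPPORTED_EXTENSIONS = {".pdf", ".md", ".txt", ".docx", ".html", ".csv", ".xls", ".xlsx"}
MAX_FILE_BYTES = 10 * 1024 * 1024  # assumption: 10 MB interpreted as 10 MiB
MAX_TOKENS = 20_000

# Assumption: a rough heuristic, not the service's actual tokenizer.
CHARS_PER_TOKEN = 4
PLAIN_TEXT_EXTENSIONS = {".md", ".txt", ".csv", ".html"}


def check_document(file_name: str, data: bytes) -> List[str]:
    """Return a list of likely problems; an empty list means no issue was detected."""
    problems: List[str] = []
    extension = Path(file_name).suffix.lower()

    if extension not in SUPPORTED_EXTENSIONS:
        problems.append(f"Unsupported file format: {extension or '(none)'}")

    if len(data) > MAX_FILE_BYTES:
        problems.append(f"File is {len(data)} bytes; the limit is {MAX_FILE_BYTES}")

    if extension in PLAIN_TEXT_EXTENSIONS:
        text = data.decode("utf-8", errors="ignore")
        estimated_tokens = len(text) // CHARS_PER_TOKEN
        if estimated_tokens > MAX_TOKENS:
            problems.append(
                f"Estimated {estimated_tokens} tokens; the limit is {MAX_TOKENS}"
            )

    return problems
```

Passing this check does not guarantee the service accepts the file, since the real token count can differ from the estimate.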

You’re now ready to chat with your document.

As shown in the following screenshot, you can chat with your document in real time.

To customize your prompt, enter your prompt under System prompt.

Similarly, you can use the AWS SDK in any major programming language to call the RetrieveAndGenerate API. In the following example, we use the AWS SDK for Python (Boto3):
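A minimal sketch of such a call might look like the following. It assumes the `bedrock-agent-runtime` Boto3 client and the `EXTERNAL_SOURCES` configuration type of `retrieve_and_generate`. The Region (`us-east-1`), the helper names (`build_request`, `chat_with_document`), and the bucket and file names in the usage comment are placeholders for illustration, and your credentials need access to Claude 3 Sonnet in the chosen Region.

```python
from typing import Any, Dict, Optional

# Assumption: Claude 3 Sonnet in us-east-1; adjust the Region to your account setup.
MODEL_ARN = (
    "arn:aws:bedrock:us-east-1::foundation-model/"
    "anthropic.claude-3-sonnet-20240229-v1:0"
)


def build_request(
    question: str,
    *,
    s3_uri: Optional[str] = None,
    file_bytes: Optional[bytes] = None,
    file_name: Optional[str] = None,
    content_type: Optional[str] = None,
    model_arn: str = MODEL_ARN,
) -> Dict[str, Any]:
    """Build retrieve_and_generate arguments for a single document.

    Provide either an S3 URI or the raw bytes of a local file, not both.
    """
    if (s3_uri is None) == (file_bytes is None):
        raise ValueError("Provide exactly one of s3_uri or file_bytes")

    if s3_uri is not None:
        source: Dict[str, Any] = {"sourceType": "S3", "s3Location": {"uri": s3_uri}}
    else:
        source = {
            "sourceType": "BYTE_CONTENT",
            "byteContent": {
                "identifier": file_name,      # for example "resume.pdf"
                "contentType": content_type,  # MIME type, for example "application/pdf"
                "data": file_bytes,           # raw bytes; Boto3 handles the encoding
            },
        }

    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "EXTERNAL_SOURCES",
            "externalSourcesConfiguration": {
                "modelArn": model_arn,
                "sources": [source],
            },
        },
    }


def chat_with_document(request: Dict[str, Any], region_name: str = "us-east-1") -> str:
    """Send the request to Amazon Bedrock and return the generated answer text."""
    import boto3  # imported here so the request can be built without AWS access

    client = boto3.client("bedrock-agent-runtime", region_name=region_name)
    response = client.retrieve_and_generate(**request)
    return response["output"]["text"]


# Example usage (placeholder bucket and key):
# request = build_request(
#     "What are the highlights from this requirements document?",
#     s3_uri="s3://my-example-bucket/requirements.pdf",
# )
# print(chat_with_document(request))
```

Separating request construction from the network call keeps the payload easy to inspect and lets you switch between an S3 location and a locally uploaded file without changing the calling code.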

Conclusion

In this post, we covered how Knowledge Bases for Amazon Bedrock now simplifies asking questions on a single document. We explored the core concepts behind RAG, the challenges this new feature addresses, and the various use cases it enables across different roles and industries. We also demonstrated how to configure and use this capability through the Amazon Bedrock console and the AWS SDK, showcasing the simplicity and flexibility of this feature, which provides a zero-setup solution to gather information from a single document, without setting up a vector database.

To further explore the capabilities of Knowledge Bases for Amazon Bedrock, refer to the following resources:

Knowledge bases for Amazon Bedrock

Getting started with Amazon Bedrock, RAG, and Vector database in Python

Vector Embeddings and RAG Demystified: Leveraging Amazon Bedrock, Aurora, and LangChain (Part 1 and Part 2)

Share and learn with our generative AI community at community.aws.

About the authors

Suman Debnath is a Principal Developer Advocate for Machine Learning at Amazon Web Services. He frequently speaks at AI/ML conferences, events, and meetups around the world. He is passionate about large-scale distributed systems and is an avid fan of Python.

Sebastian Munera is a Software Engineer in the Amazon Bedrock Knowledge Bases team at AWS where he focuses on building customer solutions that leverage Generative AI and RAG applications. He has previously worked on building Generative AI-based solutions for customers to streamline their processes and Low code/No code applications. In his spare time he enjoys running, lifting and tinkering with technology.
