XA transactions are not a very familiar concept to lots of people and therefore hardly used. An XA transaction is a two-phase commit protocol that supports distributed transactions that updates multiple relational databases. It involves a transaction manager that monitors this global transaction. XA makes sure that transactional updates are committed in all of the participating databases in this global transaction, or are fully rolled back out of all of the databases, reverting to the state prior to the start of the transaction. XA transactions are also useful when you need to coordinate a transaction between different databases.

MySQL uses replication to copy data asynchronously from one MySQL database server (source) to another (replica). MySQL replication uses the binary log file to keep changes (UPDATE, DELETE, INSERT) from the source server. Each replica that is connected to this source server requests a copy of this binary log file via replication to run on the replica database server.

XA transactions, when used with a MySQL binary log, can cause instability and leave the server and binary log in an inconsistent state if there is an unexpected halt to the server in the middle of this XA transaction.

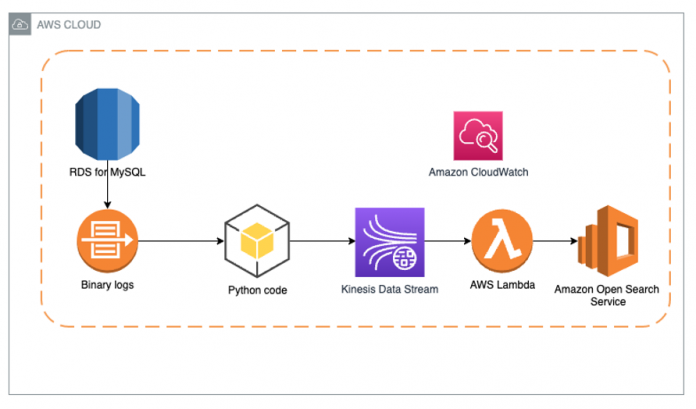

In this post, we present a solution to safely replicate data with XA transactions from Amazon Relational Database Service (Amazon RDS) for MySQL applications to Amazon OpenSearch Service using Amazon Kinesis Data Streams and AWS Lambda.

Solution overview

In our solution we implement a system that reads the MySQL binary logs, extracts the transactions, and pushes the transactions to a downstream analytics system like Amazon OpenSearch Service or Amazon Redshift. The solution also needs to be suitable for high transaction loads and be scalable and highly available.

The following diagram illustrates the solution of implementing continuous data replication from Amazon RDS for MySQL to Amazon OpenSearch Service using change data capture (CDC).

The following are the high level procedures to setup this solution:

Prepare the source RDS for MySQL instance

Create the Amazon Kinesis Data Stream

Create the Python code in Amazon Elastic Compute Cloud (Amazon EC2)

Create the OpenSearch Service Domain

Create the Lambda function

Prepare the source RDS for MySQL instance

Enable the following parameters in the RDS instance parameter group:

Ensure that binary logging is enabled in the parameter group (binlog_format = ROW). Enable automated backup for Amazon RDS for MySQL, Amazon RDS for MariaDB and set binlog_format = ROW for Amazon Aurora MySQL-Compatible Edition.

Ensure that binlog_row_image = FULL.

The reason for this parameter is because all events generated by the CDC code contain the database image before and after the change. For example, if a row contains the column table.a = 10 and the update sets the column table.a = 20, the binary log and Kinesis event contain table.a = 10 as the before image and table.a = 20 as after image.You can verify these parameters from the database as follows by using a mysql client:

The database user that connects to the source database must have the REPLICATION SLAVE role. You can run the following code on the database to create the relevant user and grant the roles:

Create the Amazon Kinesis Data Stream

The binary logs are captured from MySQL by using a Python script that reads the MySQL binary log stream and creates a Kinesis event from each binary log event, which is stored in the Kinesis data stream. We use the Kinesis Data Streams for high availability and scalability of the event queue. You can Create a Kinesis Data Stream using the AWS console.

Create the Python code in Amazon EC2

We can run the Python code, which we also call the fetcher script, on Amazon Elastic Kubernetes Service (Amazon EKS), Amazon Elastic Container Service (Amazon ECS), or on a very small Amazon Elastic Compute Cloud (Amazon EC2) instance like t4g.micro. We don’t recommend running the MySQL replication CDC (Python) code on a Lambda function because this code needs to run continuously to monitor all changes from binary logs and Lambda functions have timeouts.

The first Python code reads the binary log stream from the MySQL. We used the Python package python-mysql-replication, which provides the ability to read the MySQL binary log stream directly from the MySQL source database or replica.

After we read the event from the binary logs, we can stream it to Kinesis Data Streams. This is where we can create conversions and data manipulations if needed. We use the Boto3 package to connect to Kinesis Data Streams and create the record.

install python3

install boto3 and mysql-replication

The following link to GitHub is the sample fetcher Python script

To run the code use:

Create an OpenSearch Service Domain

The target of this solution is Amazon OpenSearch. Create an OpenSearch Service Domain.

Select name for the domain.

Create master user

Select “Create Master User”

Enter user name and password

Configure the Access policy to “Only use fine-grained access control” to allow Lambda to access the Amazon OpenSearch Service

For more information, refer to the Amazon OpenSearch Service Developer Guide.

Create the Lambda function

To deploy the AWS Lambda function we use AWS Serverless Application Model (SAM).

You can install SAM on your local server/laptop or on an AWS EC2 instance with windows Linux or MacOS.

The next step is to deploy the code to the AWS Lambda with SAM:

Download the source code from GitHub.

Go to change-data-capture-mysql-opensearch/opensearch-writer

Edit the file template.yaml. In line number 39 add your AWS Kinesis Data Stream ARN for example

Edit the file samconfig.toml and add the appropriate AWS region name

Run the fallowing commands to build, validate and deploy the code on Lambda:

This code creates the Lambda function and a trigger to the Kinesis Data Stream. Events in the Kinesis Data Stream will trigger the Lambda function.

Getting results

The following test was use to demonstrate sample XA transaction been replicated from the source RDS for MySQL to Amazon OpenSearch target:

We can see the document on the Amazon OpenSearch Service being successfully replicated using the following command:

System analysis of the solution

Although the solution we presented introduced an additional component (Kinesis Data Streams) to the overall application, it provides a safe option for replicating data change events from a MySQL database using XA transactions. If you’re running MySQL workloads on fully managed services like Amazon RDS and Amazon Aurora, you need to provision a separate EC2 instance to run the fetcher Python code that reads the binary log stream from MySQL and the streamer Python code that pushes the change events to Kinesis Data Streams.

AWS Database Migration Service (AWS DMS) uses its change data capture (CDC) feature to continuously replicate changes from a MySQL source database to a variety of target databases. This is made possible through the MySQL binary log. AWS DMS uses MySQL binary log replication to stream these changes to the target database.

However, XA transactions in MySQL can’t be used safely with MySQL binary log replication due to engine limitations. As a result, AWS DMS is unable to replicate XA transactions. For more information about the limitations of using a MySQL database with AWS DMS, refer to Limitations on using a MySQL database as a source for AWS DMS.

Clean up

If you were running this solution in your own AWS Account for test purpose, don’t forget to clean up the resources after your test, by deleting the Amazon RDS for MySQL instance, EC2 instance and Amazon OpenSearch Service Domain you used during setup.

Summary

In this post, we presented a solution to safely replicate change streams from a MySQL database using XA transactions. There are many standard replication tools like AWS DMS that system architects and developers can choose from when designing systems that use the CQRS pattern. However, it’s common to encounter features on the source database that aren’t supported by available replication tools. This is where you can use custom solutions like the one presented in this post. You can use the solution as is or further customize the architecture and code to replicate database change events from Amazon RDS for MySQL to downstream services like Amazon Redshift or Amazon OpenSearch Service for analytics use cases.

If you have comments about this post, submit them in the comments section.

About the authors

Baruch Assif (Osoveskiy) is a Database Sr. Solutions Architect. He helps customers with performance architecture and migrate their database solutions to AWS.

Stanley Chukwuemeke is a Database Solutions Architect. He helps customers architect and migrate their database solutions to AWS.

Kehinde Otubamowo is a Sr. NoSQL Solutions Architect. He is passionate about database modernization and enjoys sharing best practices for building cost effective database solutions that perform at scale.

Read MoreAWS Database Blog