At OCP Summit 2022, we’re announcing Grand Teton, our next-generation platform for AI at scale that we’ll contribute to the OCP community.

We’re also sharing new innovations designed to support data centers as they advance to support new AI technologies:

A new, more efficient version of Open Rack.

Our Air-Assisted Liquid Cooling (AALC) design.

Grand Canyon, our new HDD storage system.

You can view AR/VR models of our latest hardware designs at: https://metainfrahardware.com

Empowering Open, the theme of this year’s Open Compute Project (OCP) Global Summit, has always been at the heart of Meta’s design philosophy. Open-source hardware and software are, and will always be, pivotal tools to help the industry solve problems at large scale.

Today, some of the greatest challenges our industry is facing at scale are around AI. How can we continue to facilitate and run the models that drive the experiences behind today’s innovative products and services? And what will it take to enable the AI behind the innovative products and services of the future? As we move into the next computing platform, the metaverse, the need for new open innovations to power AI becomes even clearer.

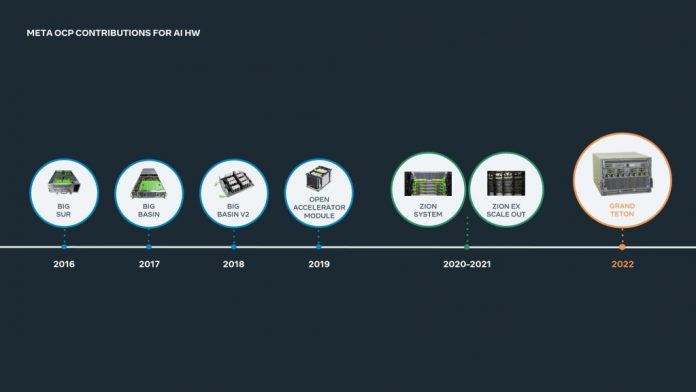

As a founding member of the OCP community, Meta has always embraced open collaboration. Our history of designing and contributing next-generation AI systems dates back to 2016, when we first announced Big Sur. That work continues today and is always evolving as we develop better systems to serve AI workloads.

After 10 years of building world-class data centers and distributed compute systems, we’ve come a long way from developing hardware independent of the software stack. Our AI and machine learning (ML) models are becoming increasingly powerful and sophisticated and need more high-performance infrastructure to match. Deep learning recommendation models (DLRMs), for example, have on the order of tens of trillions of parameters and can require a zettaflop of compute to train.
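To make the scale concrete, here is a back-of-envelope sketch in Python. It assumes 10 trillion parameters as a lower bound for "tens of trillions," 4 bytes per fp32 parameter (2 bytes for fp16/bf16), reads "a zettaflop of compute" as roughly 10^21 floating-point operations in total, and uses a hypothetical sustained throughput of 1 PFLOP/s. None of these are published Meta figures; they only illustrate why storage and compute for such models have to be spread across many machines.

```python
# Back-of-envelope sizing for a model with "tens of trillions" of parameters.
# Assumptions (illustrative only): 10 trillion parameters as a lower bound,
# 4 bytes per fp32 parameter, and 1e21 floating-point operations of total
# training compute ("a zettaflop").

BYTES_PER_FP32 = 4
TERABYTE = 10**12  # decimal terabyte


def parameter_footprint_tb(num_params: int, bytes_per_param: int = BYTES_PER_FP32) -> float:
    """Memory needed just to store the parameters, in decimal terabytes."""
    return num_params * bytes_per_param / TERABYTE


def training_days(total_flops: float, sustained_flops_per_s: float) -> float:
    """Wall-clock days to execute total_flops at a given sustained throughput."""
    return total_flops / sustained_flops_per_s / 86_400


params = 10 * 10**12   # hypothetical: 10 trillion parameters
total_flops = 1e21     # hypothetical: one zettaFLOP of training compute

print(parameter_footprint_tb(params))               # 40.0 (TB in fp32)
print(parameter_footprint_tb(params, 2))            # 20.0 (TB in fp16/bf16)
print(round(training_days(total_flops, 1e15), 1))   # 11.6 (days at 1 PFLOP/s sustained)
```

Even at the lower bound, the parameters alone are far larger than the memory of any single accelerator, before counting optimizer state or activations.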

At this year’s OCP Summit, we’re sharing our journey as we continue to enhance our data centers to meet the large-scale AI needs of Meta and the industry. From new platforms for training and running AI models, to power and rack innovations that help our data centers handle AI more efficiently, to new developments with PyTorch, our signature machine learning framework, we’re releasing open innovations to help solve industry-wide challenges and push AI into the future.

Grand Teton: AI platform

We’re announcing Grand Teton, our next-generation, GPU-based hardware platform, a follow-up to our Zion-EX platform. Grand Teton has multiple performance enhancements over its predecessor, Zion, such as 4x the host-to-GPU bandwidth, 2x the compute and data network bandwidth, and 2x the power envelope. Grand Teton also has an integrated chassis in contrast to Zion-EX, which comprises multiple independent subsystems.

As AI models become increasingly sophisticated, so will their associated workloads. Grand Teton has been designed with greater compute capacity to better support memory-bandwidth-bound workloads at Meta, such as our open-source DLRMs. Grand Teton’s expanded operational compute power envelope also optimizes it for compute-bound workloads, such as content understanding.
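The distinction between memory-bandwidth-bound and compute-bound workloads can be illustrated with a simple roofline model: attainable throughput is the lesser of peak compute and memory bandwidth multiplied by arithmetic intensity (FLOPs performed per byte moved). The Python sketch below is illustrative only; the peak throughput, bandwidth, and intensity values are hypothetical and are not Grand Teton specifications. Embedding lookups in recommendation models do very little math per byte fetched, whereas large dense matrix multiplications reuse each byte many times.

```python
# Simple roofline model: attainable throughput is capped either by peak compute
# or by memory bandwidth times arithmetic intensity (FLOPs per byte moved).
# All hardware numbers below are hypothetical, not Grand Teton specifications.


def attainable_tflops(intensity_flops_per_byte: float,
                      peak_tflops: float,
                      mem_bw_tb_per_s: float) -> float:
    """Roofline bound in TFLOP/s for a kernel with the given arithmetic intensity."""
    return min(peak_tflops, mem_bw_tb_per_s * intensity_flops_per_byte)


def classify(intensity: float, peak_tflops: float, mem_bw_tb_per_s: float) -> str:
    """Label a kernel by which side of the roofline's ridge point it falls on."""
    ridge_point = peak_tflops / mem_bw_tb_per_s  # intensity where both limits meet
    return "memory-bandwidth-bound" if intensity < ridge_point else "compute-bound"


PEAK_TFLOPS = 300.0   # hypothetical accelerator peak compute
MEM_BW_TB_S = 2.0     # hypothetical memory bandwidth in TB/s

# Hypothetical intensities: embedding lookups move many bytes per FLOP,
# while large dense matmuls perform many FLOPs per byte.
for name, intensity in [("embedding lookup", 0.5), ("large dense matmul", 400.0)]:
    print(name,
          attainable_tflops(intensity, PEAK_TFLOPS, MEM_BW_TB_S),
          classify(intensity, PEAK_TFLOPS, MEM_BW_TB_S))
```

Under these assumptions the embedding lookup reaches only 1 TFLOP/s because bandwidth limits it, while the dense matmul saturates the 300 TFLOP/s peak. Raising either ceiling helps only the workloads that are pinned against it.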

The previous-generation Zion platform consists of three boxes: a CPU head node, a switch sync system, and a GPU system, and requires external cabling to connect everything. Grand Teton integrates this into a single chassis with fully integrated power, control, compute, and fabric interfaces for better overall performance, signal integrity, and thermal performance.

This high level of integration dramatically simplifies the deployment of Grand Teton, allowing it to be introduced into data center fleets faster and with fewer potential points of failure, while providing rapid scale with increased reliability.

Rack and power innovations

Open Rack v3

The latest edition of our Open Rack hardware is here to offer a common rack and power architecture for the entire industry. To bridge the gap between present and future data center needs, Open Rack v3 (ORV3) is designed with flexibility in mind, with a frame and power infrastructure capable of supporting a wide range of use cases, including Grand Teton.

ORV3’s power shelf isn’t bolted to the busbar. Instead, the power shelf installs anywhere in the rack, which enables flexible rack configurations. Multiple shelves can be installed on a single busbar to support 30 kW racks, while 48 VDC output will support the higher power transmission needs of future AI accelerators.
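One reason a higher distribution voltage helps: for a fixed power P, busbar current is I = P / V and resistive loss is I²R, so quadrupling the voltage cuts current by 4x and loss by 16x. The Python sketch below works this through for the 30 kW rack case. The 12 V comparison point and the 0.1 milliohm busbar resistance are assumptions chosen for illustration, not ORV3 measurements.

```python
# Why a 48 V busbar helps: for a fixed power P, current is I = P / V, and
# resistive loss in the distribution path is I**2 * R. The 12 V comparison and
# the busbar resistance below are illustrative assumptions, not ORV3 data.


def busbar_current_a(power_w: float, voltage_v: float) -> float:
    """Current drawn through the busbar, in amperes."""
    return power_w / voltage_v


def resistive_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Power dissipated as heat in the busbar, in watts."""
    current = busbar_current_a(power_w, voltage_v)
    return current ** 2 * resistance_ohm


RACK_POWER_W = 30_000    # the 30 kW rack configuration
R_BUSBAR_OHM = 0.0001    # hypothetical end-to-end busbar resistance (0.1 milliohm)

for volts in (12, 48):
    print(f"{volts} V: {busbar_current_a(RACK_POWER_W, volts):.0f} A, "
          f"{resistive_loss_w(RACK_POWER_W, volts, R_BUSBAR_OHM):.1f} W lost")
```

With these assumptions, 12 V needs 2,500 A and loses about 625 W in the busbar, while 48 V needs 625 A and loses about 39 W.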

It also features an improved battery backup unit (BBU), which extends backup time to four minutes, compared with the previous model’s 90 seconds, and provides a power capacity of 15 kW per shelf. Like the power shelf, this backup unit installs anywhere in the rack for customization and provides 30 kW when installed as a pair.
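The energy a backup unit must hold follows directly from E = P × t. The short Python sketch below applies that to the figures above (15 kW per shelf, four minutes of backup, two shelves for 30 kW). It assumes the load runs at full rated power for the whole backup window, which is a simplifying assumption. The 90-second case applies the same 15 kW load for comparison only, since the previous model’s power rating isn’t given here.

```python
# Energy a battery backup unit (BBU) must deliver: E = P * t.
# Uses the figures from the text (15 kW per shelf, 4-minute backup, two shelves
# for 30 kW). Assumes full rated load for the entire backup window.


def holdup_energy_kwh(power_kw: float, minutes: float) -> float:
    """Energy in kWh needed to carry power_kw for the given number of minutes."""
    return power_kw * minutes / 60.0


per_shelf = holdup_energy_kwh(15, 4)     # one shelf at full load for 4 minutes
pair = holdup_energy_kwh(30, 4)          # two shelves carrying a 30 kW rack
previous = holdup_energy_kwh(15, 1.5)    # same 15 kW load for 90 seconds, for comparison

print(per_shelf, pair, previous)  # 1.0 2.0 0.375
```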

Meta chose to develop almost every component of the ORV3 design through OCP from the beginning. While an ecosystem-led design can result in a lengthier design process than that of a traditional in-house design, the end product is a holistic infrastructure solution that can be deployed at scale with improved flexibility, full supplier interoperability, and a diverse supplier ecosystem.

You can join our efforts at: https://www.opencompute.org/projects/rack-and-power

Machine learning cooling trends vs. cooling limits

With higher socket power comes increasingly complex thermal management overhead. The ORV3 ecosystem has been designed to accommodate several liquid cooling strategies, including air-assisted liquid cooling and facility water cooling. It also includes an optional blind-mate liquid cooling interface design, which provides dripless connections between the IT gear and the liquid manifold and makes the IT gear easier to service and install.

In 2020, we formed a new OCP focus group, the ORV3 Blind Mate Interfaces Group, with other industry experts, suppliers, solution providers, and partners, where we are developing interface specifications and solutions, such as rack interfaces and structural enhancements to support liquid cooling, blind mate quick (liquid) connectors, blind mate manifolds, hose and tubing requirements, blind mate IT gear design concepts, and various white papers on best practices.

You might be asking yourself why Meta is so focused on all these areas. The increases in power we are seeing, and the need for advances in liquid cooling, are forcing us to think differently about every element of our platform, rack and power, and data center design. The chart below shows projections of increased high-bandwidth memory (HBM) and training module power growth over several years, as well as how these trends will require different cooling technologies over time and the limits associated with those technologies.

You can see that with facility water cooling strategies, we can accommodate sockets of more than 1,000 W, with as much as 50 W of HBM per stack.
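A steady-state heat balance, Q = ṁ · c_p · ΔT, gives a feel for what liquid cooling a 1,000 W socket involves: solving for the mass flow ṁ tells you how much coolant must pass through the cold plate. The Python sketch below is a rough estimate under stated assumptions. It assumes water as the coolant (c_p ≈ 4186 J/(kg·K), density ≈ 1 kg/L) and a hypothetical 10 K temperature rise across the cold plate. Real loops use different fluids, temperature rises, and safety margins.

```python
# Steady-state heat balance for a liquid loop: Q = m_dot * c_p * delta_T.
# Solving for mass flow gives the coolant needed to carry a socket's heat away.
# Assumptions (illustrative): water coolant, c_p ~= 4186 J/(kg*K),
# density ~= 1 kg/L, and a 10 K temperature rise across the cold plate.

WATER_CP_J_PER_KG_K = 4186.0
WATER_DENSITY_KG_PER_L = 1.0


def required_flow_l_per_min(heat_w: float, delta_t_k: float) -> float:
    """Coolant volume flow (L/min) needed to remove heat_w at a delta_t_k rise."""
    mass_flow_kg_s = heat_w / (WATER_CP_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0


print(round(required_flow_l_per_min(1000.0, 10.0), 2))  # 1.43 (L/min for a 1,000 W socket)
```

Halving the allowed temperature rise doubles the required flow, which is one reason manifold, quick-connector, and hose specifications matter as socket power grows.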

You can join our efforts at: https://www.opencompute.org/projects/cooling-environments

Grand Canyon: Next-gen storage for AI infrastructure

Supporting ever-advancing AI models also means having the best possible storage solutions for our AI infrastructure. Grand Canyon is Meta’s next-generation storage platform, featuring improved hardware security and future upgrades of key commodities. The platform is designed to support higher-density HDDs without performance degradation and with improved power utilization.

Launching the PyTorch Foundation

Since 2016, when we first partnered with the AI research community to create PyTorch, it has grown to become one of the leading platforms for AI research and production applications. In September of this year, we announced the next step in PyTorch’s journey to accelerate innovation in AI. PyTorch is moving under the Linux Foundation umbrella as a new, independent PyTorch Foundation.

While Meta will continue to invest in PyTorch, and use it as our primary framework for AI research and production applications, the PyTorch Foundation will act as a responsible steward. It will support PyTorch through conferences, training courses, and other initiatives. The foundation’s mission is to foster and sustain an ecosystem of vendor-neutral projects with PyTorch that will help drive industry-wide adoption of AI tooling.

We remain fully committed to PyTorch. And we believe this approach is the fastest and best way to build and deploy new systems that will not only address real-world needs, but also help researchers answer fundamental questions about the nature of intelligence.

The future of AI infrastructure

At Meta, we’re all-in on AI. But the future of AI won’t come from us alone. It’ll come from collaboration: the sharing of ideas and technologies through organizations like OCP. We’re eager to continue working together to build new tools and technologies to drive the future of AI, and we hope that you’ll join us in our various efforts. Whether it’s developing new approaches to AI today or radically rethinking hardware design and software for the future, we’re excited to see what the industry has in store next.

The post OCP Summit 2022: Open hardware for AI infrastructure appeared first on Engineering at Meta.
