Many AWS customers are starting to modernize architectures and move toward open-source databases. Babelfish for Aurora PostgreSQL makes it easier to migrate applications from SQL Server to Amazon Aurora PostgreSQL-Compatible Edition. With Babelfish, Aurora PostgreSQL-Compatible supports commonly used T-SQL language and semantics, which reduces the amount of code changes related to database calls in an application.

In this post, we show how to migrate a Microsoft SQL Server database to Babelfish, including data migration using AWS Database Migration Service (AWS DMS) and will use SQL Server Northwind sample database for the migration. Additionally, we look into some common issues and their potential resolution.

Babelfish overview

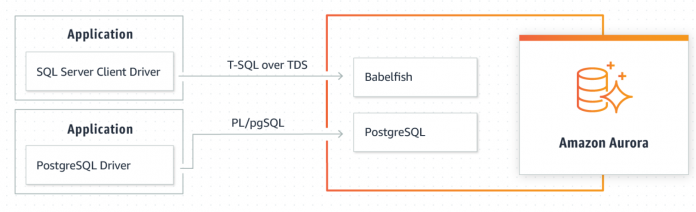

Before we discuss the step-by-step migration process, let’s look at the Babelfish architecture. Babelfish is a migration accelerator for moving SQL Server applications to Aurora PostgreSQL-Compatible with minimal changes to your T-SQL code or client connectivity drivers. It allows you to move from a licensed proprietary on-premises database management system (DBMS) to an open-source DBMS in the cloud and enables the path for modernization.

Babelfish provides the capability for PostgreSQL to understand queries from applications written for SQL Server. Babelfish understands the SQL Server Wire Protocol and T-SQL, the SQL Server query language, so you don’t have to switch database drivers or rewrite all of your application queries. You can create TDS connections using the SQL Server connectivity libraries and also standard PostgreSQL connections, as shown in the following diagram.

The following are some benefits of Babelfish:

Keep existing queries – Language extensions enable Aurora PostgreSQL-Compatible to work with T-SQL

Accelerate migration – You can complete migration faster, saving months to years of work

Freedom to innovate – You can run T-SQL code side by side with open-source functionality and continue to develop with familiar tools

Solution overview

The following are the high-level steps for Babelfish migration:

Generate DDL and perform analysis using the Babelfish Compass tool. We can generate DDL using the following options:

Use SQL Server Management Studio (SSMS) to export the database schema.

As of Babelfish Compass v.2023-08, Compass may optionally perform the DDL generation directly for the SQL Server databases for which analysis needs to be performed.

Create an Aurora PostgreSQL instance with the Babelfish extension.

Recreate the schema in Babelfish by running the DDL script against port 1433.

Migrate data from SQL Server to Babelfish using AWS DMS and reconfigure the client application to connect to the Babelfish TDS port instead of SQL Server.

For .NET applications with a backend as Babelfish, little to no changes are required in application code. Changes are required in the application code for the features not supported by Babelfish.

In the following sections, we provide the step-by-step details to migrate from SQL Server to Babelfish for Aurora PostgreSQL.

Babelfish Compass assessment tool

Babelfish Compass is a standalone tool that runs on Windows, Mac, and Linux, and assesses compatibility for Babelfish. This tool looks at the DDL and SQL code and provides a detailed report listing all supported and unsupported features.

To install and run Babelfish Compass, complete the following steps:

Download the latest version of Babelfish Compass (.zip file) from GitHub.

Unzip the file to the required folder. There are three zip files in the folder. You should unzip the file BabelfishCompass_v.2023-08.zip.

Babelfish Compass requires 64-bit Java/JRE 8 or later; it must be installed prior to running the Compass report.

Open CMD (Windows) and navigate to the path where you unzipped the zip file.

To verify installation, run BabelfishCompass with -help option.

Generate Compass report for Northwind database

Use the Compass tool capability to generate DDLs with Compass v.2023-08 or later. This option is useful when there are many SQL Server instances or many databases in a SQL Server. To let Compass, perform the DDL generation, the following command-line options must be specified. Compass will then connect to the SQL Server, generate the DDL files, and run a Compass analysis on the generated files.

–sqlendpoint – The hostname or IP address of the SQL Server. Optionally, the port number can also be specified (for example, 10.123.45.67,1433 or mybigbox,1433).

–sqllogin – The login name to connect to the SQL Server. This login should typically be a member of the sysadmin role so as to have permission to generate the DDL.

–sqlpasswd – The corresponding password.

–sqldblist – This is optional; when omitted or when all is specified, DDL is generated for all user databases in the server. Alternatively, a comma-separated list of database names can be specified.

The speed of DDL generation highly depends on the network proximity to the SQL Server. If it takes too long, you can cancel the process using CTRL+C and do manual DDL generation through SSMS, which may be faster.

Above script generates, Babelfish compass report and by default it goes in your user’s Documents\BabelfishCompass folder. The script also generates separate DDL files for each database you specify and stores it in your computers TEMP directory as shown in below screen shot.

Other option to generate compass report is to generate DDL using SSMS first and run Babelfish compass commands to generate report, refer to Deep dive into Babelfish Compass to learn more details.

Analyze the results

To assess the overall migration efforts, the first thing to do is to look at the summary section at the top of the report, where the number of objects and the number of lines of SQL code are reported. This will give a first impression of how big is application and much effort it may take.

Next, focus on the following Summary of SQL Features sections: Not Supported, Review Manually, Review Semantics, Review Performance, and Ignored.

Based on the issues, either develop the possible workaround or descope the migration by scaling down the features not required in migrated application. The descope process requires collaboration between developers and the application owner. When we use Optimistic option in the command, then some of the commands will list under Ignored rather than not supported. If we generate the report without Optimistic clause, below listed features will be reported under not supported. The features ignored with the Optimize option can generally be ignored for purposes of getting the application migrated. Please note that when we generate compass report for Northwind schema using the optimistic setting, there are no unsupported features.

Create a Babelfish for Aurora PostgreSQL cluster

Creating a Babelfish cluster is similar to creating an Aurora PostgreSQL cluster, except we expand the filter options under Engine version and select Show versions that support the Babelfish for PostgreSQL feature. It is recommended to choose the latest major release version of Aurora PostgreSQL which has the most recent features and performance improvements.

When you create a Babelfish cluster, you choose between using a single migrated T-SQL user database or multiple migrated T-SQL user databases together. If you specify single-db, you can create only a single T-SQL database in Babelfish, and T-SQL schemas are created as regular PostgreSQL schemas in your Babelfish database. In multiple-database mode, the schema names of user databases become dbname_schemaname when accessed from PostgreSQL. The schema names remain the same when accessed from T-SQL. See Using Babelfish with a single database or multiple databases for more information.

We recommend using multi-db for the migration mode since this provides support for multiple T-SQL user databases in your Babelfish cluster. You cannot change this value after you create the cluster, so it’s better to have the flexibility even if you initially need only one T-SQL user database.

To stay closer to the SQL Server, you can choose sa as the primary user name.

After you create the Babelfish cluster, it will provide two endpoints, a reader and writer, and it will also support both PostgreSQL and T-SQL on different ports.

Connect to Babelfish Cluster

The next step is to make the connection with the Babelfish cluster and create a schema in the new database using SQL Server Management Studio (SSMS). SSMS is a GUI-based client tool to connect to SQL Server with limited Object Explorer support for viewing and scripting objects.

Enter your Babelfish endpoint cluster and credentials, then choose Connect.

Recreate the schema in Babelfish by running the DDL script in SSMS

To recreate schema in Babelfish, follow below steps:

Check Babelfish as well as Aurora PostgreSQL versions using the following query:

Configure Babelfish escape hatches to ignore – Babelfish mimics SQL behavior for control flow and transaction state whenever possible. When Babelfish encounters an error, it returns an error code similar to the SQL Server error code. To handle statements that might fail, Babelfish defines certain options called escape hatches. An escape hatch is an option that specifies Babelfish behavior when it encounters an unsupported feature or syntax. Use below statement to set all escape hatches to ignore strict behavior:

Create Northwind database and verify using T-SQL catalog view:

Check schema mapping between Babelfish and PostgreSQL, this information will be useful for creating DMS task for data loading.

Depending on multi-db or single-db configuration selected for your Babelfish cluster, you will see different results because PostgreSQL internally maps database and schema names differently for each of these options.

Results of multi-db Babelfish cluster:

Results of single-db Babelfish cluster:

Create schema objects in Babelfish by running the finalized scripts.

Babelfish currently supports migrating BINARY, VARBINARY, and IMAGE data types using the BYTEA data type with DMS. Make changes in Northwind database script to change any IMAGE datatype to BYTEA. In the original Northwind database the Category table Picture column uses the IMAGE data type and Employee table Photo column uses the IMAGE data type. In the final script, find and replace as shown below:

Run scripts with only primary key and unique keys on the tables, this will speed up the data loading process and we can create additional indexes and constraints once data load process finishes.

Validate the tables created in previous step using below scripts:

Migrate data from SQL Server to Babelfish using AWS DMS

AWS DMS version 3.5.1 provides better support data type support for Aurora PostgreSQL with Babelfish minor versions 14.8 and 15.3 or later. Here is how to get started with using DMS with the SQL Server source and Aurora PostgreSQL target endpoints.

On the AWS DMS console, create a replication instance by choosing the size of the instance depending upon your workload.

Create the source and target endpoints.

Source endpoint – The Source endpoint should point to the SQL Server.

Target endpoint – The Aurora PostgreSQL target endpoint is the preferred way to migrate data to Babelfish. When you create your AWS DMS target endpoint using the AWS DMS console, API, or CLI commands, keep following things in mind:

Specify the target engine as Amazon Aurora PostgreSQL

Name the database, babelfish_db.

In the Endpoint Settings section, add settings to set DatabaseMode to Babelfish and BabelfishDatabaseName to the name of the target Babelfish T-SQL database. Please note that If you are using Aurora PostgreSQL with Babelfish prior to minor versions 15.3 and 14.8, do not use these endpoint settings.

Create and start the replication task to load the required tables in the target database. For more information about Babelfish as an AWS DMS endpoint, refer to Using Babelfish as a target for AWS Database Migration Service.

For migrating Northwind database, below are task settings:

Target table preparation – “Do Nothing”. In this mode AWS DMS will not make any changes in the target database. you should select Full LOB mode to take advantage of the new capability with DMS 3.5.1 with Babelfish 3.2.0 and 2.5.0 releases.

Table Mapping Rule – Add following rule to include all tables in the dbo schema where schema name is like ‘dbo’ and Source table name is like ‘%’ or specific table from your source SQL Server database.

Transformation rules – The following are the transformation rules needed depending on multi-db or single-db configurations:

If you use multi-db mode rename the dbo schema to match your PostgreSQL schema which is northwind_dbo

For both the modes, rename the tables to lowercase. Below screen shot displays the transformation rule:

Review the table statistics to confirm data load. Once the DMS replication task completes successfully, we can view the table statistics.

Alternatively, we can run query the database to validate the data.

Change the BYTEA data types back to IMAGE – Most of the applications works fine with BYTEA but if we need to change it back to IMAGE, use below script to add new column with IMAGE data-type, then copy data and drop original column and finally rename to match original column name.

Reset your IDENTITY columns after loading data from DMS. In SQL Server, it is very common to use IDENTITY columns for the primary key or other columns which needs auto incremented values. After migrating data with tables that use IDENTITY columns or the SERIAL data type, reset the PostgreSQL-based sequence object based on the maximum value for the column using below script:

The follwoing statements are generated by the previous queries. Run them against Northwind database:

Using the Generate Script wizard in SSMS, you will regenerate the create scripts as follows:

Script just the database tables, to include check constrains, indexes, triggers, etc. in separate file. Comment out the table creation section as we already migrated the tables. Final script should only contain indexes, constrains and triggers. Run the script against Northwind database in Babelfish cluster.

Script just the views and run it against Northwind database in Babelfish cluster.

Script just the stored procedures, fix any non-supported features and run it against Northwind database in Babelfish cluster.

Migration issues and potential resolution

In this section, we discuss some of the issues and potential resolutions for Babelfish as version 3.2

that we have seen with customers in previous migration efforts.

Unsupported features with stored procedures and functions

In order to start testing your application faster, one technique is to comment out the unsupported stored procedure bodies and throw an exception/raise an error. We will still maintain the stored procedure signature (name, input & output). Theoretically, you can write a parameterized regular expression (with group capture) to do this. Below is an example:

This way, you can isolate unsupported issues and tackle them separately release to get these fixed rather than holding up all testing efforts due to subset of unsupported features which may take some time to resolve.

Troubleshooting queries

This is the most common issue after migration to Babelfish. Many of these issues are often attributed to different index strategies between SQL Server and PostgreSQL that can be diagnosed with EXPLAIN ANALYZE on the PostgreSQL side or using SET BABELFISH_STATISTICS PROFILE.

A common issue is that SQL Server can still use an indexed column when the predicate has an expression on the column, but PostgreSQL may not. The solution is to create an expression index in PostgreSQL matching the predicate expression. Once you analyze the queries, apply the potential fixes by ensuring appropriate indexes and join conditions. Use the following code:

Use SET BABELFISH_STATISTICS PROFILE {ON|OFF} to display the query plan used to run a statement

Use SET BABELFISH_SHOWPLAN_ALL {ON|OFF} to display estimated explain plans for a statement without performing the command

In the following example, BABELFISH_SHOWPLAN_ALL provides details of the access path, index scan (if any), and cost of the operation, which will help in performance tuning:

In this example, to improve performance, we create an index on test2 on column c; this is reflected in the explain plan and the cost of the operation will also reduce:

For more information, refer to Reviewing a Query Plan.

Update statistics

Statistics of database objects is critical for the performance of the queries. Database optimizers use these statistics to determine the best execution plan and use it for data retrieval.

Gathering and updating stats is not supported in Babelfish as of this writing. As a workaround solution, use PostgreSQL features to analyze the table:

SQL Server command – UPDATE STATISTICS

PostgreSQL command – ANALYZE

For more information, refer to ANALYZE.

Rebuild table indexes

Indexes are important for query performance. In T-SQL, we use either the DBCC command or ALTER INDEX command for the re-indexing:

These commands are not supported in Babelfish; instead, we can use the PostgreSQL statement REINDEX TABLE from the PostgreSQL connection.

For more details, refer to Rebuilding Table Indexes.

Unsupported code features in Babelfish

There are some differences in Babelfish functions or code syntax and SQL Server. The following table provides a few examples of workarounds.

SQL Feature

Babelfish Workaround

MERGE statement

The Compass report rewrite option generates a workaround for the MERGE statement:

–Replace MERGE with UPDATE and Add Insert if requires.

UPDATE LOCATIONS SET LocationName=S.LocationName FROM Locations T, Locations_stage S WHERE T.LocationID=S.LocationID AND ( T.LocationID =3 )

Date functions, for example EOMONTH()

Most of the unsupported date functions are written by the Compass rewrite option using DATEADD, DATEPART, and so on.

PIVOT clause

Rewrite the statement using the SUM (WHEN…) clause.

UPDATE, WITH (Common Table Expression) as target

This statement is not supported in Babelfish. Manually rewrite the UPDATE statement against underlying table by putting all the required conditions in the WHERE clause.

DBCC commands

DBCC are SQL Server native commands for database maintenance. For Babelfish, use PostgreSQL commands for your specific purposes because DBCC is not supported by the PostgreSQL database engine, which is the underlying database for Babelfish.

Calling a procedure or function with DEFAULT parameter values

Babelfish doesn’t support the DEFAULT keyword in procedure or function calls. Compass rewrites the calls with the actual default parameter values in place of the DEFAULT keyword. The following is an example:

–Before rewrite

dbo.stored_procedure_1( @variable1, parameter_value, DEFAULT, DEFAULT, DEFAULT)

–After Babelfish Compass rewrite

dbo.stored_procedure_1( @variable1, parameter_value, /*REWRITTEN*/ ‘N/A’ /*DEFAULT*/,

/*REWRITTEN*/ NULL /*DEFAULT*/, /*REWRITTEN*/ 0 /*DEFAULT*/)

Summary

In this post, we showed the steps involved in migrating a SQL Server application to Babelfish for Aurora PostgreSQL, including using the Babelfish Compass tool and AWS DMS. We discussed which sections to focus on in the Babelfish Compass report to get more details about unsupported features. We also showed details about loading data into the Babelfish cluster using AWS DMS. Lastly, we presented common migration issues and potential resolutions that can help accelerate Babelfish migrations.

The AWS Babelfish team is making continuous improvements to the product and adding new features on a regular basis. Check out the Babelfish for Aurora PostgreSQL updates with each quarterly release for the latest improvements

For more information about Babelfish for Aurora PostgreSQL, refer to Babelfish for Aurora PostgreSQL.

About the Author

Amit Arora is a Solutions Architect with a focus on database and analytics at AWS. He works with our financial technology, global energy customers and AWS certified partners to provide technical assistance and design customer solutions on cloud migration projects, helping customers migrate and modernize their existing databases to the AWS Cloud.

Read MoreAWS Database Blog