Customers on Google Kubernetes Engine (GKE) use Local SSD for applications that need to rapidly download and process data, e.g., AI/ML, analytics, batch, and in-memory caches. These applications download data to the Local SSD from object storage such as Google Cloud Storage (GCS) and process it in pods running on GKE. As data is processed, it moves between local storage and RAM, which makes input/output operations per second (IOPS) performance important. Previously, GKE Local SSD usage was either on older SCSI-based technology or using beta (and alpha) APIs. Now you can use generally available APIs for creating ephemeral or raw-block storage volumes that are all based on NVMe, yielding up to 1.7x better performance than SCSI.

Local SSD is a Compute Engine product that allows ephemeral access to high-performance SSDs directly attached to the physical host. Local SSDs give better performance than PD SSDs and Filestore in exchange for less durability; the data is lost if the Compute Engine instance is stopped or encounters various error conditions. If your workload values higher IOPS and lower latency over durability, you’ll find this a reasonable trade-off.

GKE recently released built-in Local SSD support to become the first managed Kubernetes platform to offer applications ephemeral and raw-block storage that can be provisioned with familiar Kubernetes APIs. This represents the culmination of work across the upstream Kubernetes community to add isolation for ephemeral volumes and to make Google Cloud APIs generally available for configuring Local SSD for performant local storage.

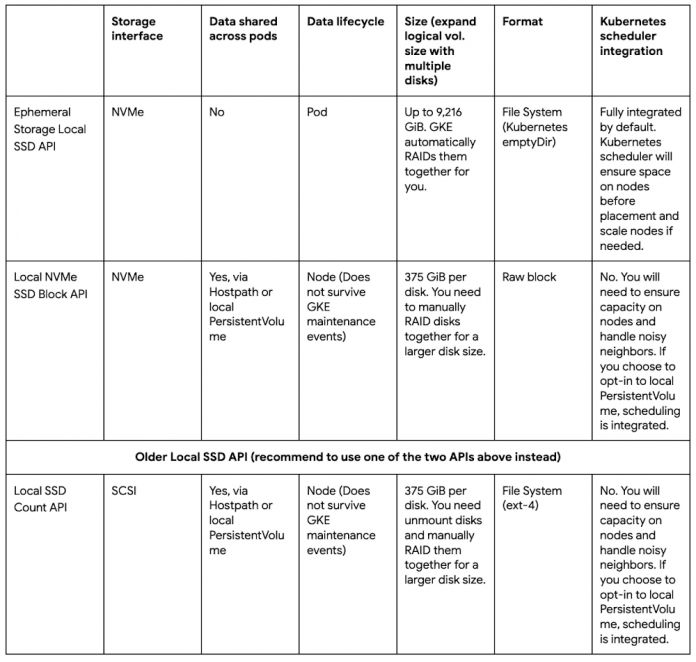

Now Local SSD can be used through GKE with two different options:

1) The ephemeral storage Local SSD option is for those who want fully managed local ephemeral storage that is backed by Local SSDs and tied to the lifecycle of the pod. When pods request ephemeral storage, they are automatically scheduled on nodes that have Local SSDs attached. With cluster autoscaling enabled, nodes are added when the cluster runs short of ephemeral storage space.

2) The Local SSD block option is for those who want more control over the underlying storage and who want to build their own node-level cache for their pods to drive better application performance. Customers can also customize this option by running a DaemonSet that RAIDs the Local SSDs and formats them with a file system as needed.

We recommend customers migrate from the previous (GA) Local SSD Count API to the newer GA APIs to benefit from better performance.

Let’s take a look at some examples of using the new APIs.

Example #1: Using Ephemeral Storage Local SSD API with emptyDir

Let’s say you have an AI/ML application managed by Kubernetes, and data is downloaded to local storage for processing. All pods run the same machine learning model, and each pod is responsible for training on a part of the data. Since no data is shared across pods, you can use an emptyDir volume as the scratch space and provision a node pool with local ephemeral storage backed by Local SSDs to optimize IOPS performance.

To create such a node pool, you can use the --ephemeral-storage-local-ssd count=<N> option in the gcloud CLI.
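As a minimal sketch, a cluster-creation command using this option might look like the following. The cluster name, zone, machine type, and disk count are placeholder assumptions, not values from this post; pick a count that your machine type and region actually support.

```shell
# Placeholder values (assumptions for illustration only).
CLUSTER_NAME="example-cluster"
ZONE="us-central1-a"
MACHINE_TYPE="n2-standard-8"
LOCAL_SSD_COUNT=2

# Create a cluster whose default node pool uses Local SSDs
# as the backing store for node ephemeral storage.
gcloud container clusters create "${CLUSTER_NAME}" \
  --zone "${ZONE}" \
  --machine-type "${MACHINE_TYPE}" \
  --ephemeral-storage-local-ssd count="${LOCAL_SSD_COUNT}"
```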

This command will:

create a cluster whose default node pool has N Local SSDs attached to each node. The maximum number of disks varies by machine type and region.

create and add the label cloud.google.com/gke-ephemeral-storage-local-ssd=true to each node

combine all Local SSDs into a RAID0 array at /dev/md/0, format it with an ext4 file system, and mount it at /mnt/stateful_partition/kube-ephemeral-ssd

bind mount the ephemeral storage, which consists of the container runtime root directory, the kubelet root directory, and the pod logs root directory, to the Local SSD mount point /mnt/stateful_partition/kube-ephemeral-ssd

The --ephemeral-storage-local-ssd option is immutable. After a node pool is created, the number of Local SSDs attached to each node cannot be changed. If the option is unspecified, GKE initializes node local ephemeral storage with a Persistent Disk boot disk by default, either pd-standard, pd-ssd, or pd-balanced. When N Local SSDs are attached to a node, the local ephemeral storage available for pods is:

Node Allocatable = 375 GiB * N - (kube-reserved + system-reserved + eviction-threshold)
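To make the formula concrete, here is a small shell sketch that evaluates it. The reservation values passed in are hypothetical numbers chosen only to illustrate the arithmetic; the real kube-reserved, system-reserved, and eviction-threshold values depend on your node configuration.

```shell
# Node Allocatable (GiB) = 375 * N - (kube-reserved + system-reserved + eviction-threshold)
# Arguments: N, kube-reserved, system-reserved, eviction-threshold (all integers, GiB).
node_allocatable_gib() {
  echo $(( 375 * $1 - ($2 + $3 + $4) ))
}

# Hypothetical example: 2 Local SSDs with 50, 25, and 75 GiB reserved.
node_allocatable_gib 2 50 25 75   # prints 600
```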

Now that you have a node pool, the next step is to create workloads that use emptyDir volumes as scratch space. In Kubernetes, pods can access and write temporary data via emptyDir volumes, logs, and the container writable layer. The data written here is ephemeral and will be deleted when the container or Pod is deleted. In the node pool you just created, these directories are backed by Local SSDs. For Kubernetes version 1.25+, the local storage capacity isolation feature is GA. It provides capacity isolation of shared storage between pods: a pod's consumption of shared storage can be limited, and the pod is evicted if its consumption exceeds that limit. It also allows setting ephemeral storage requests for resource reservation. The limits and requests for shared ephemeral-storage are similar to those for memory and CPU consumption. Before this feature was introduced, pods could be evicted because other pods filled the local storage, since local storage was a best-effort resource.

Here is an example of a pod that uses an emptyDir volume backed by Local SSDs, with local storage capacity requests and limits configured.
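A manifest consistent with the field descriptions in this section might look like the following sketch. The pod name, container name, image, command, volume name, mount path, and file name are placeholder assumptions; only the node selector label and the storage values come from the text.

```shell
# Write a Pod manifest to a local file (file name is a placeholder).
cat > l-ssd-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: l-ssd-pod              # placeholder name
spec:
  nodeSelector:
    # Schedule only onto nodes with Local SSD-backed ephemeral storage.
    cloud.google.com/gke-ephemeral-storage-local-ssd: "true"
  containers:
  - name: worker               # placeholder container
    image: busybox             # placeholder image
    command: ["sleep", "3600"]
    resources:
      requests:
        ephemeral-storage: "200Gi"
      limits:
        ephemeral-storage: "300Gi"
    volumeMounts:
    - mountPath: /scratch      # placeholder mount path
      name: scratch-volume
  volumes:
  - name: scratch-volume
    emptyDir:
      sizeLimit: 50Gi
EOF
```

You could then create the pod with `kubectl apply -f l-ssd-pod.yaml`.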

With the node selector cloud.google.com/gke-ephemeral-storage-local-ssd: "true", the pod is scheduled to a node with local ephemeral storage backed by Local SSDs. The node label is generated at cluster creation time.

With spec.containers[].resources.requests.ephemeral-storage: "200Gi", the pod can be assigned to a node only if the node's available local ephemeral storage (allocatable resource) is more than 200GiB.

With spec.containers[].resources.limits.ephemeral-storage: "300Gi", the kubelet eviction manager measures the disk usage of all containers plus emptyDir usage, and evicts the pod if the total exceeds the 300GiB limit.

With spec.volumes[].emptyDir.sizeLimit: 50Gi, the pod is evicted from the node if its emptyDir uses more than 50GiB of local ephemeral storage.

Example #2: Using Local NVMe SSD Block API with local PersistentVolume

Continuing with the AI/ML application example, suppose you now have pods running different machine learning models against the same data set. You want to download the data to local storage, and multiple pods need to access the same data. In this scenario, you can provision a node pool with raw block Local SSDs attached, use a DaemonSet to RAID the disks, and use the local static provisioner to provision a local PersistentVolume that can be used as a node-level cache.

The first step is to create a node pool with raw block Local SSDs attached. To create such a node pool, you can use the --local-nvme-ssd-block count=<N> option in the gcloud CLI.
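As a minimal sketch, a cluster-creation command using this option might look like the following. As before, the cluster name, zone, machine type, and disk count are placeholder assumptions rather than values from this post.

```shell
# Placeholder values (assumptions for illustration only).
CLUSTER_NAME="example-block-cluster"
ZONE="us-central1-a"
MACHINE_TYPE="n2-standard-8"
LOCAL_SSD_COUNT=2

# Create a cluster whose default node pool has raw block
# NVMe Local SSDs attached to each node.
gcloud container clusters create "${CLUSTER_NAME}" \
  --zone "${ZONE}" \
  --machine-type "${MACHINE_TYPE}" \
  --local-nvme-ssd-block count="${LOCAL_SSD_COUNT}"
```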

This command will:

create a cluster whose default node pool has N Local SSDs attached to each node

create and add the label cloud.google.com/gke-local-nvme-ssd=true to each node

at node initialization time, the host OS creates a symbolic link (symlink) to access the disk under an ordinal path, and a symlink with a universally unique identifier (UUID). The ordinal path doesn’t expose the underlying storage interface, e.g., NVMe. It can be used when an application can tolerate the data being deleted on a node repair or upgrade. The UUID symlink is more suitable when your application has special recovery steps that need to run when the data is deleted. In this example, we assume the former.

The next step is to run a DaemonSet to RAID the Local SSDs. Here is an example. This DaemonSet sets up a RAID0 array across all Local SSDs and formats the device with an ext4 file system. After the disks have been combined into the RAID array, you can use this example YAML file to generate the local static provisioner DaemonSet. The static provisioner creates local PersistentVolumes for your RAID0 array and the corresponding StorageClass. Once all of this is done, you can create a PVC and a Pod that use the local PersistentVolumes. The commands can be found here.
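As a rough sketch of that last step, a PersistentVolumeClaim and a Pod consuming a local PersistentVolume might look like this. The StorageClass name nvme-ssd-block, the object names, the image, the mount path, and the 50Gi request are all assumptions for illustration; use the StorageClass that your static provisioner configuration actually creates.

```shell
# Write a PVC and a Pod manifest to a local file (file name is a placeholder).
cat > local-cache.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: local-cache-pvc                # placeholder name
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: "nvme-ssd-block"   # assumed StorageClass name
  resources:
    requests:
      storage: 50Gi                    # placeholder size
---
apiVersion: v1
kind: Pod
metadata:
  name: local-cache-pod                # placeholder name
spec:
  nodeSelector:
    # Label added to nodes created with raw block Local SSDs.
    cloud.google.com/gke-local-nvme-ssd: "true"
  containers:
  - name: worker                       # placeholder container
    image: busybox                     # placeholder image
    command: ["sleep", "3600"]
    volumeMounts:
    - mountPath: /cache                # placeholder mount path
      name: local-cache
  volumes:
  - name: local-cache
    persistentVolumeClaim:
      claimName: local-cache-pvc
EOF
```

You could then create both objects with `kubectl apply -f local-cache.yaml`.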

Resources

To get more information about using GKE Local SSD, please refer to:
