Proper estimation of predictive uncertainty is fundamental in applications that involve critical decisions. Uncertainty can be used to assess the reliability of model predictions, trigger human intervention, or decide whether a model can be safely deployed in the wild.

We introduce Fortuna, an open-source library for uncertainty quantification. Fortuna provides calibration methods, such as conformal prediction, that can be applied to any trained neural network to obtain calibrated uncertainty estimates. The library further supports a number of Bayesian inference methods that can be applied to deep neural networks written in Flax. The library makes it easy to run benchmarks and will enable practitioners to build robust and reliable AI solutions by taking advantage of advanced uncertainty quantification techniques.

The problem of overconfidence in deep learning

If you have ever looked at class probabilities returned by a trained deep neural network classifier, you might have observed that the probability of one class was much larger than the others. Something like this, for example:

p = [0.0001, 0.0002, …, 0.9991, 0.0003, …, 0.0001]

If this is the case for the majority of the predictions, your model might be overconfident. In order to evaluate the validity of the probabilities returned by the classifier, we may compare them with the actual accuracy achieved over a holdout data set. Indeed, it is natural to assume that the proportion of correctly classified data points should approximately match the estimated probability of the predicted class. This concept is known as calibration [Guo C. et al., 2017].

Unfortunately, many trained deep neural networks are miscalibrated, and typically in one direction: the estimated probability of the predicted class is much higher than the proportion of correctly classified input data points. In other words, the classifier is overconfident.
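The comparison between predicted probabilities and holdout accuracy can be sketched in a few lines of plain Python. This is only an illustration and not Fortuna's implementation: the function name, the number of bins, and the toy data are assumptions. The sketch groups predictions into confidence bins and averages the gap between mean confidence and accuracy in each bin, a quantity commonly called the expected calibration error (ECE).

```python
def expected_calibration_error_sketch(confidences, correct, n_bins=10):
    """Weighted average gap between confidence and accuracy over equal-width bins.

    confidences: probability of the predicted class for each holdout point.
    correct: 1 if the prediction was right, 0 otherwise.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    bins = [[] for _ in range(n_bins)]
    for conf, hit in zip(confidences, correct):
        # A confidence of exactly 1.0 falls into the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, hit))
    n = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(h for _, h in members) / len(members)
        ece += len(members) / n * abs(avg_conf - accuracy)
    return ece


# Toy holdout set (hypothetical): the classifier reports 0.99 confidence
# on every point but is right only 7 times out of 10.
confidences = [0.99] * 10
correct = [1, 1, 1, 1, 1, 1, 1, 0, 0, 0]
ece = expected_calibration_error_sketch(confidences, correct)
print(f"ECE = {ece:.2f}")
```

A well-calibrated classifier would give a value close to zero. Here the gap between a confidence of 0.99 and an accuracy of 0.70 shows up directly in the score.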

Being overconfident might be problematic in practice. A doctor may not order relevant additional tests as a result of an overconfident diagnosis of good health produced by an AI. A self-driving car may decide not to brake because it confidently assessed that the object in front was not a person. A governor may decide to evacuate a town because the probability of an imminent natural disaster estimated by an AI is too high. In these and many other applications, calibrated uncertainty estimates are critical to assess the reliability of model predictions, fall back to a human decision-maker, or decide whether a model can be safely deployed.

Fortuna: A library for uncertainty quantification

There are many published techniques to either estimate or calibrate the uncertainty of predictions, e.g., Bayesian inference [Wilson A.G., 2020], temperature scaling [Guo C. et al., 2017], and conformal prediction [Angelopoulos A.N. et al., 2022] methods. However, existing tools and libraries for uncertainty quantification have a narrow scope and do not offer a breadth of techniques in a single place. This results in significant overhead, hindering the adoption of uncertainty quantification in production systems.

In order to fill this gap, we launch Fortuna, a library for uncertainty quantification that brings together prominent methods across the literature and makes them available to users with a standardized and intuitive interface.

As an example, suppose you have training, calibration, and test data loaders in tensorflow.Tensor format, namely train_data_loader, calib_data_loader and test_data_loader. Furthermore, you have a deep learning model written in Flax, namely model. Then you can use Fortuna to:

fit a posterior distribution;

calibrate the model outputs;

make calibrated predictions;

obtain uncertainty estimates;

compute evaluation metrics.

The following code does all of this for you.

The code above makes use of several default choices, including SWAG [Maddox W.J. et al., 2019] as a posterior inference method, temperature scaling [Guo C. et al., 2017] to calibrate the model outputs, and a standard Gaussian prior distribution, as well as the configuration of the posterior fitting and calibration processes. You can easily configure all of these components, and you are highly encouraged to do so if you are looking for a specific configuration or if you want to compare several ones.

Usage modes

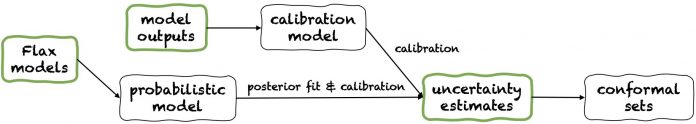

Fortuna offers three usage modes: 1/ Starting from uncertainty estimates, 2/ Starting from model outputs, and 3/ Starting from Flax models. Their pipelines are depicted in the figure above, each starting from one of the green panels. The code snippet above is an example of using Fortuna starting from Flax models, which allows training a model using Bayesian inference procedures. Alternatively, you can start either from model outputs or directly from your own uncertainty estimates. Both of these latter modes are framework independent and help you obtain calibrated uncertainty estimates starting from a trained model.

1/ Starting from uncertainty estimates

Starting from uncertainty estimates has minimal compatibility requirements, and it is the quickest level of interaction with the library. This usage mode offers conformal prediction methods for both classification and regression. These take uncertainty estimates in numpy.ndarray format and return rigorous prediction sets that contain the true target with a user-specified probability. In one-dimensional regression tasks, conformal sets may be thought of as calibrated versions of confidence or credible intervals.
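To give a feel for how conformal prediction works in classification, here is a minimal sketch of the standard split conformal recipe in plain Python. It is not Fortuna's API: the function names, the choice of score (one minus the probability of the true class), and the toy data are assumptions made for illustration.

```python
import math


def conformal_threshold(calib_probs, calib_labels, error=0.1):
    """Score threshold such that prediction sets cover the true class with
    probability at least 1 - error (assuming exchangeable data)."""
    # Conformity score: one minus the probability assigned to the true class.
    scores = sorted(1.0 - probs[label]
                    for probs, label in zip(calib_probs, calib_labels))
    n = len(scores)
    # Finite-sample corrected quantile rank.
    rank = math.ceil((n + 1) * (1.0 - error))
    # With too few calibration points, only the trivial set is valid.
    return scores[rank - 1] if rank <= n else float("inf")


def conformal_set(probs, threshold):
    """All classes whose score does not exceed the calibrated threshold."""
    return [k for k, p in enumerate(probs) if 1.0 - p <= threshold]


# Hypothetical calibration data: class probabilities and true labels.
calib_probs = [
    [0.90, 0.05, 0.05], [0.10, 0.80, 0.10], [0.85, 0.10, 0.05],
    [0.20, 0.10, 0.70], [0.03, 0.95, 0.02], [0.60, 0.30, 0.10],
    [0.15, 0.75, 0.10], [0.40, 0.50, 0.10], [0.25, 0.10, 0.65],
    [0.30, 0.60, 0.10],
]
calib_labels = [0, 1, 0, 2, 1, 0, 1, 0, 2, 0]

threshold = conformal_threshold(calib_probs, calib_labels, error=0.2)
print(conformal_set([0.90, 0.07, 0.03], threshold))  # confident prediction
print(conformal_set([0.50, 0.45, 0.05], threshold))  # ambiguous prediction
```

A confident prediction yields a small set, while an ambiguous one yields a larger set. The size of the set is itself a usable measure of uncertainty.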

Mind that if the uncertainty estimates that you provide as input are inaccurate, conformal sets might be large and unusable. For this reason, if your application allows it, please consider the Starting from model outputs and Starting from Flax models usage modes detailed below.
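For one-dimensional regression, the dependence on the quality of the input estimates can be sketched as follows. This is one common conformal recipe (residuals scaled by the provided standard deviation), written in plain Python. It is not necessarily the method Fortuna applies, and all names and numbers are hypothetical.

```python
import math


def conformal_interval_scale(calib_means, calib_stds, calib_targets, error=0.1):
    """Factor by which the provided standard deviations must be multiplied
    so that intervals cover the target with probability at least 1 - error."""
    # Conformity score: absolute residual in units of the provided std.
    scores = sorted(abs(y - m) / s
                    for m, s, y in zip(calib_means, calib_stds, calib_targets))
    n = len(scores)
    rank = math.ceil((n + 1) * (1.0 - error))
    return scores[rank - 1] if rank <= n else float("inf")


def conformal_interval(mean, std, scale):
    """Calibrated interval around a new prediction."""
    return mean - scale * std, mean + scale * std


# Hypothetical calibration data: the model claims std = 1.0 everywhere,
# but several residuals are much larger than that.
calib_means = [0.0] * 10
calib_stds = [1.0] * 10
calib_targets = [0.1, -0.3, 0.5, -0.8, 1.2, -1.5, 2.0, -2.4, 3.0, 0.2]

scale = conformal_interval_scale(calib_means, calib_stds, calib_targets, error=0.2)
lower, upper = conformal_interval(mean=10.0, std=0.5, scale=scale)
print(f"scale = {scale:.1f}, interval = [{lower:.1f}, {upper:.1f}]")
```

In this toy case the provided standard deviations are too small, so the calibration step has to stretch them by a large factor to reach the requested coverage. The less informative the input estimates are, the wider the resulting intervals become.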

2/ Starting from model outputs

This mode assumes you have already trained a model in some framework and arrive at Fortuna with model outputs in numpy.ndarray format for each input data point. This usage mode allows you to calibrate your model outputs, estimate uncertainty, compute metrics, and obtain conformal sets.
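As an illustration of what calibrating model outputs can mean, the sketch below implements temperature scaling [Guo C. et al., 2017] in plain Python: a single scalar divides the logits, and its value is chosen to minimize the negative log-likelihood on calibration data. The grid search, function names, and toy logits are assumptions for illustration, not Fortuna's implementation.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax of logits divided by a temperature."""
    scaled = [z / temperature for z in logits]
    shift = max(scaled)
    exps = [math.exp(s - shift) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def negative_log_likelihood(logits_batch, labels, temperature):
    """Average negative log-probability of the true labels."""
    return -sum(math.log(softmax(logits, temperature)[label])
                for logits, label in zip(logits_batch, labels)) / len(labels)


def fit_temperature(logits_batch, labels, candidates=None):
    """Pick the candidate temperature with the lowest calibration NLL."""
    if candidates is None:
        candidates = [0.1 * k for k in range(1, 101)]  # 0.1, 0.2, ..., 10.0
    return min(candidates,
               key=lambda t: negative_log_likelihood(logits_batch, labels, t))


# Hypothetical calibration outputs: the model is very confident in class 0
# on every point, yet one out of four labels is actually class 1.
calib_logits = [[5.0, 0.0]] * 4
calib_labels = [0, 0, 0, 1]

temperature = fit_temperature(calib_logits, calib_labels)
uncalibrated = softmax([5.0, 0.0])
calibrated = softmax([5.0, 0.0], temperature)
print(f"temperature = {temperature:.1f}")
print(f"confidence before = {uncalibrated[0]:.3f}, after = {calibrated[0]:.3f}")
```

A fitted temperature above 1 softens the probabilities, bringing the confidence close to the 75% accuracy observed on the calibration data without changing which class is predicted.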

Compared to the Starting from uncertainty estimates usage mode, Starting from model outputs provides better control, as it can make sure uncertainty estimates have been appropriately calibrated. However, if the model was trained with classical methods, the resulting quantification of model (aka epistemic) uncertainty may be poor. To mitigate this problem, please consider the Starting from Flax models usage mode.

3/ Starting from Flax models

Starting from Flax models has higher compatibility requirements than the Starting from uncertainty estimates and Starting from model outputs usage modes, as it requires deep learning models written in Flax. However, it enables you to replace standard model training with scalable Bayesian inference procedures, which may significantly improve the quantification of predictive uncertainty.

Bayesian methods work by representing uncertainty over which solution is correct, given limited information, through uncertainty over model parameters. This type of uncertainty is called “epistemic” uncertainty. Because neural networks can represent many different solutions, corresponding to different settings of their parameters, Bayesian methods can be especially impactful in deep learning. We provide many scalable Bayesian inference procedures, which can often be used to provide uncertainty estimates, as well as improved accuracy and calibration, with essentially no training-time overhead.
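To make the notion of epistemic uncertainty more tangible, suppose you have class probabilities predicted under several parameter settings sampled from a posterior. The sketch below, in plain Python, averages them (Bayesian model averaging) and splits the total predictive entropy into an expected per-sample entropy and a remainder that grows when the samples disagree. This is a standard decomposition shown under assumed names and toy numbers. It is not taken from Fortuna's code.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def decompose_uncertainty(sample_probs):
    """sample_probs: one class-probability vector per posterior sample.

    Returns the averaged prediction, total entropy, expected entropy of the
    individual samples (aleatoric part), and their difference (epistemic part).
    """
    n_samples = len(sample_probs)
    n_classes = len(sample_probs[0])
    mean = [sum(p[k] for p in sample_probs) / n_samples
            for k in range(n_classes)]
    total = entropy(mean)
    aleatoric = sum(entropy(p) for p in sample_probs) / n_samples
    return mean, total, aleatoric, total - aleatoric


# Hypothetical predictions for one input under different parameter samples.
agreeing = [[0.9, 0.1], [0.9, 0.1], [0.9, 0.1]]
disagreeing = [[0.99, 0.01], [0.01, 0.99]]

_, _, _, epistemic_agree = decompose_uncertainty(agreeing)
mean_disagree, _, _, epistemic_disagree = decompose_uncertainty(disagreeing)
print(f"epistemic (agreeing samples)    = {epistemic_agree:.3f}")
print(f"epistemic (disagreeing samples) = {epistemic_disagree:.3f}")
```

When all parameter samples agree, the epistemic part vanishes. When each sample is individually confident but they contradict each other, the averaged prediction is close to uniform and nearly all of the uncertainty is epistemic, which a single trained network cannot reveal.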

Conclusion

We announced the general availability of Fortuna, a library for uncertainty quantification in deep learning. Fortuna brings together prominent methods across the literature, e.g., conformal methods, temperature scaling, and Bayesian inference, and makes them available to users with a standardized and intuitive interface. To get started with Fortuna, you can consult the following resources:

GitHub repository

Official documentation

Try Fortuna out, and let us know what you think! You are encouraged to contribute to the library or share your suggestions: just create an issue or open a pull request. On our side, we will keep improving Fortuna, increasing its coverage of uncertainty quantification methods and adding further examples that showcase its usefulness in several scenarios.

About the authors

Gianluca Detommaso is an Applied Scientist at AWS. He currently works on uncertainty quantification in deep learning. In his spare time, Gianluca likes practicing sports, eating great food, and learning new skills.

Alberto Gasparin has been an Applied Scientist within Amazon Community Shopping since July 2021. His interests include natural language processing, information retrieval, and uncertainty quantification. He is a food and wine enthusiast.

Michele Donini is a Sr Applied Scientist at AWS. He leads a team of scientists working on Responsible AI and his research interests are Algorithmic Fairness and Explainable Machine Learning.

Matthias Seeger is a Principal Applied Scientist at AWS.

Cedric Archambeau is a Principal Applied Scientist at AWS and Fellow of the European Lab for Learning and Intelligent Systems.

Andrew Gordon Wilson is an Associate Professor at the Courant Institute of Mathematical Sciences and Center for Data Science at New York University, and an Amazon Visiting Academic at AWS. He is particularly engaged in building methods for Bayesian and probabilistic deep learning, scalable Gaussian processes, Bayesian optimization, and physics-inspired machine learning.

Read more: AWS Machine Learning Blog