In Part 1 of this series, we addressed failures related to the source, network, task, and replication instance when migrating from Oracle to Amazon Relational Database Service (Amazon RDS) for PostgreSQL using AWS Database Migration Service (AWS DMS). In this post, we discuss failure scenarios pertaining to validation and target database failure, and share mitigation strategies and error handling settings. This post focuses on common validation scenarios, namely drift of records because of missing data on the source and target. We also discuss common failure scenarios of the target database, their impact on replication tasks, and remediation strategies.

AWS DMS data validation

AWS DMS supports data validation, which helps you make sure that your data was correctly migrated from the source to the target. If enabled, validation begins soon after the full load phase is complete. Validation also compares the incremental changes that occur for a change data capture (CDC) task. AWS DMS compares each row in the source to its matching row in the target and reports any mismatches. If you encounter errors during data validation, you need to check the replication task log for failures, or you can check the awsdms_validation_failures_v1 table present in the target database.

AWS DMS data validation can encounter the following types of errors:

Missing source

Missing target

Record differences

The following code snippet shows an example error in the AWS DMS logs:

The error in the task logs is the same for a missing source, missing target, or record differences. However, the error details in awsdms_validation_failures_v1 are different, which we explore in the following examples.

Missing source

If an additional record is present in the target database but the same row is not present in the source, then this error will be encountered as MISSING_SOURCE in control table (awsdms_validation_failures_v1). See the following code:

During revalidation, if rows are missing from the source, this could be because of the following:

Partitions are removed on the source and available on the target

Additional data is inserted on the target outside of AWS DMS or your application

Missing target

If any record wasn’t migrated to the target database, you see this error as MISSING_TARGET in the control table (awsdms_validation_failures_v1):

Record differences

When the replication option is enabled on the task automatically, data will be validated from source to target and vice versa.

If any record doesn’t match between the source and target database based on the primary key or unique key, then you will see the following error as RECORD_DIFF in the control table (awsdms_validation_failures_v1):

This may be caused by the following:

Latency on the target – Batch bulk loads on the source may have a potential impact on the target database. When an AWS DMS task lags, it can cause data validation errors and report failures due to missing records on the target.

Frequent updates on the source – During a high volume of concurrent updates on the source, you will notice that AWS DMS data validation doesn’t accurately validate the data in the target.

Suggested remediation strategies

In this section, we discuss possible strategies to mitigate these data validation issues.

Monitor validation failures

You can deploy a custom AWS Lambda function to parse the Amazon CloudWatch logs and based on error matching you can send notification to appropriate teams. In near-real time, you may refer to the awsdms_validation_failures_v1 control table residing in the target database created by the DMS and notify the appropriate teams for corrective action. You can use the AWS DMS describe task API to get validation failure statistics in addition to an extract of awsdms_validation_failures_v1.

Query the table in the database with below command

Monitor source and target latency

You can set up CloudWatch alerts for source and target latency. When latency reaches a certain value, CloudWatch triggers an alert via Amazon Simple Notification Service (Amazon SNS) and sends a notification to the appropriate teams.

Adjust AWS DMS task

Consider adjusting the AWS DMS task with the following suggested validation configuration:

Additionality, you may experience the following problems during data validation:

Data types mismatch between source and target – If you encounter a data type issue, you can fix it in the validation only (or data replication) task using an AWS DMS transformation rule with override-validation-function. You may observe a data type error even though there is no actual data replication issue. This is because of ASCII characters, in which case the AWS DMS validation task reports a validation issue.

LOB truncation – In some cases, you may observe a mismatch in LOB columns. To address this issue, you can manually check the data for failed primary or unique key values between the source and target database. If there are matching values, you can ignore the validation error. If it’s indeed a data issue, you need to fix the replication task.

Table errors due to target database failure/connection timeout – If the validation failure count reaches the validation threshold, then the table will be suspended from validation. A validation query may also be stopped due to the timeout parameter set in the database, or an unexpected restart of the target instance, which can suspend table validation.

The following table shows an example of table statistics on the AWS DMS task console.

TableName

Validation state

ValidationPendingRecords

ValidationState

PPT_8

Table error

8,20,000

SSL SYSCALL error:

EOF detected ; No query has been executed with that handle

PPT_9

Table error

90,000

SSL SYSCALL error:

EOF detected ; No query has been executed with that handle

PPT_10

Table error

79,000

SSL SYSCALL error:

EOF detected ; No query has been executed with that handle

The following code snippet shows the example AWS DMS log when the target database has restarted.

In case of a connection timeout or database restart during the validation, consider the following:

Restart the validation task, if it’s a validation-only task.

Try the revalidation option in the task if the task type is full data load and CDC.

If the task type is CDC only and validation is enabled, then you need to create a separate validation-only task for the failed table.

Collation differences Due to collation differences in PostgreSQL and Microsoft SQL Server databases as the source or target, validation might return false negatives. To mitigate this kind of issue, set “HandleCollationDiff“: true in the validation settings. The default is false.

To learn more about the limitations of data validation, refer to Limitations.

Target database failure

Amazon Aurora is a managed service that supports MySQL and PostgreSQL. In this post, we use an Amazon Aurora PostgreSQL-Compatible Edition database as the target database. If the target database is unavailable due to contention, restart, networking, communication, or other reasons, this results in a replication task failure between the source and target database.

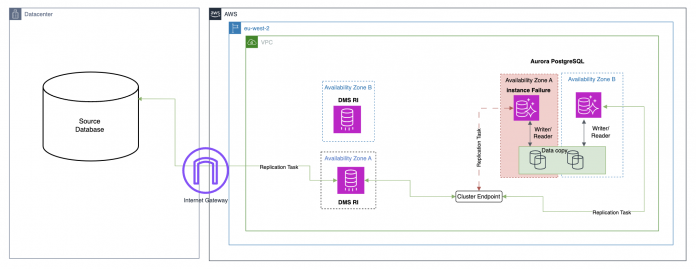

The following diagram illustrates a target database failure scenario.

Connectivity lost between AWS DMS and target database

In the event of target database unavailability because of hardware or network issues, the AWS DMS tasks can’t connect to the target, resulting in an increase in target latency.

The following code snippet shows an error in AWS DMS while loading the data to the target with a full load:

The following code snippet shows an error in AWS DMS while loading the data to the target with CDC:

This loss of connectivity may be because you’re using an instance endpoint for configuring the target endpoints in AWS DMS. In rare cases, it could be caused by changes in security groups.

To mitigate this issue, consider the following strategies:

Check the AWS DMS task logs to understand more about the error.

Check the CloudWatch metrics, event subscription, and database log to understand more about the cause of the target database failure or failover.

For high availability, we recommend a Multi-AZ configuration by placing a read instance in a separate Availability Zone. An available reader instance will be promoted to the writer and inherit the cluster writer endpoint, which AWS DMS will attempt to reconnect.

Configure the target endpoint to use RDS cluster endpoints, which can help mitigate network connectivity incidents.

Resource contention on the target database

Resource contention on the target database may be caused by the following:

Incorrect sizing of the target database instance

High resource usage (CPU, memory, or I/O) by other services

No proper indexes (primary, unique, or secondary) on tables, causing full table scans

High OLTP workloads on the source database

If nothing is connecting to the target database apart from AWS DMS, then you need to first understand where exactly contention is happening with the help of Enhanced Monitoring, CloudWatch, or Amazon RDS Performance Insights. If the error is because of instance sizing, consider the following:

Change the instance type of the primary database to a higher class with more compute or memory capacity and rerun the AWS DMS task.

Modify the read replica instance type and increase the size of it. When you initiate a failover of the current writer, AWS DMS automatically connects to the new writer instance.

During the ongoing replication phase, you might observe that AWS DMS is using more resources on the target database because of poor indexing or full table scans. In such cases, you need to generate the explain plan of the underlying queries and fix the root cause, either by adding secondary indexes on target tables.

If the target database is PostgreSQL, then huge DML operations during peak business hours can cause the autovacuum daemon process to run (to perform maintenance activity). This uses more resources and makes the AWS DMS apply process slow. To address this, tune your autovacuum parameters and consider the strategies we discussed for instance sizing.

Error handling settings and monitoring

In this section, we discuss default behavior and possible solutions to avoid any data errors, such as SUSPEND_TABLE and STOP_TASK. For more information, refer to Error handling task settings.

The default behavior of the task during an error is to log the issue into the log file and continue with replication. To avoid critical business loss due to data corruption, missing data, or partial data replication, consider using the STOP_TASK option.

Most error handling is based on the following settings:

LOG_ERROR – The task continues and the error is written to the task log

SUSPEND_TABLE – The task continues but data from the table with the error record is moved into an error state and the data isn’t replicated

STOP_TASK – The task stops and manual intervention is required

If you’re using limited LOB mode with some value in CDC and any new LOB value is beyond the limited size set on the task, then only partial data will replicate. To mitigate this issue, you can use the parameter DataTruncationErrorPolicy, which determines the action AWS DMS takes for the truncated data. The default is LOG_ERROR – it will log the message in the task log and move forward. However, we suggest using STOP_TASK to stop the task, fix the error, and then resume the task.

In case of DML, if any operation fails, there will be an issue with data in the target tables. To address this, check awsdms_apply_exceptions for exceptions and then adjust the error handling settings. You can set ApplyErrorDeletePolicy, ApplyErrorInsertPolicy, and ApplyErrorUpdatePolicy according to your requirements.

For information about other task controls and behaviors, refer to Error handling task settings. This can help you take the necessary action as needed instead of encountering table suspension or canceled tasks. To learn more, refer to How do I modify the error handling task settings for an AWS DMS task?

For more information about troubleshooting migration tasks, refer to Troubleshooting migration tasks in AWS Database Migration Service.

Conclusion

In this post, we discussed various failure scenarios and their mitigation steps pertaining to each component in the data replication setup. Make sure that each test case is thoroughly tested with a viable solution acceptable to both the customer, business, and technical stakeholders as per the business SLA prior to starting the data migration.

We welcome your feedback. If you have any questions or suggestions, leave them in the comments section.

About the Authors

Vivekananda Mohapatra is a Lead Consultant with the Amazon Web Services ProServe team. He has deep expertise in database development and administration on Amazon RDS for Oracle, Amazon RDS for PostgreSQL, Amazon Aurora PostgreSQL, and Amazon Redshift databases. He is also a subject matter expert in AWS DMS. He works closely with customers to help migrate and modernize their databases and applications to AWS.

Jitender Kumar is a Senior Lead Consultant with the Professional Services team at Amazon Web Services. He has worked on multiple databases as DBA and is skilled in SQL performance tuning and database migrations. He focuses on database migration to AWS and works with customers from assessment to implementation.

HariKrishna Boorgadda is a Senior Consultant with the Professional Services team at Amazon Web Services. He focuses on database migrations to AWS and works with customers to design and implement Amazon RDS and Amazon Aurora architectures.

Rajesh Madiwale is a Lead Consultant with Amazon Web Services. He has deep expertise on database development and administration on Amazon RDS for PostgreSQL, Amazon Aurora PostgreSQL, Amazon Redshift, MySQL, and Greenplum databases. He is an ardent member of the PostgreSQL community and has been working on PostgreSQL his entire tenure. He has also delivered several sessions at PostgreSQL conferences.

Read MoreAWS Database Blog