When you’re considering migrating from on premises to the AWS Cloud, the spotlight is firmly on database transitions. After you migrate the schemas and business logic, data migration strategies are determined by factors such as available downtime, database size, and data complexity. Homogeneous migrations, like those within Oracle, often rely on tools such as Oracle Data Pump or RMAN. However, when migrating between different databases, like from Oracle to Amazon Relational Database Service (Amazon RDS) for PostgreSQL, the go-to solutions typically involve AWS Database Migration Service (AWS DMS), Oracle GoldenGate, or third-party utilities like Shareplex.

Choosing the right cutover strategy is pivotal. Whether it’s the all-at-once “big bang” or the more gradual phased approach, each comes with its nuances. Extended replications in the phased method can span weeks to months, necessitating rigorous resilience planning against potential hitches. It’s imperative to communicate with stakeholders: although replication methods aren’t foolproof, a solid mitigation plan paves the way for seamless migration. Deciding on the approach also entails weighing the inherent risks of the “big bang” method against the controlled progression of phased migrations. You need to carefully evaluate the risk criteria between various cutover approaches.

In the complex terrain of heterogeneous database migrations, AWS DMS stands out as the preferred choice for many. Its adaptability, integration capabilities, and the backing of AWS make it a trusted tool for bridging database disparities. However, like all technologies, AWS DMS isn’t immune to challenges during the migration process. Despite its robustness, unforeseen issues can emerge.

In this two-part series, we examine common failure causes for components like the source database, replication instance, migration task, and target database. We delve into investigative steps, provide error log samples, and offer mitigation guidance. Although this series primarily focuses on migrating from Oracle to Amazon RDS for PostgreSQL or Amazon Aurora PostgreSQL-Compatible Edition, the discussed scenarios can apply to other database combinations. In this post, we address failures related to the source, network, task, and replication instance. In Part 2, we cover validation-related issues, target database failures, and error handling strategies.

AWS DMS failure use cases

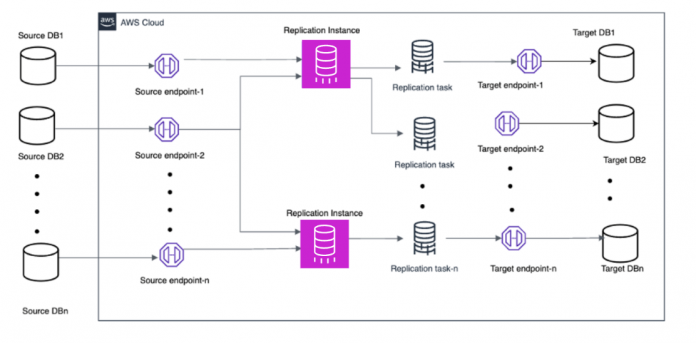

A migration setup using AWS DMS is comprised of different components, namely the source database, network infrastructure, replication instance, replication task, and target database. The source and target components are configured as endpoints, and the AWS DMS task is a workflow to migrate data between the source and target. You can create different types of AWS DMS tasks depending on the ongoing replication requirements, such as full load, change data capture (CDC) only, or full load plus CDC. You can split the source database schema and tables across multiple tasks depending on the characteristic of data, namely tables with a primary key, non-primary key, large objects (LOBs), and so on. Each component in this setup is susceptible to failures; we discuss the reasons for these failures later in this post.

Recommended testing approach for failure scenarios

It’s essential to establish a comprehensive testing strategy to identify and address potential failure scenarios during database migrations with AWS DMS. The test environment should match the production environment in terms of both volumetric and representative datasets. You should ideally reproduce each of the discussed failure scenarios in the test environment to develop the solution and mitigations.

For example, the failure of a task might lead to a full data load for a table or several tables, causing an outage. You need to evaluate the following failure scenarios and design the mitigation in alignment with Service Level Agreements (SLAs):

Source database failure

Network failure

AWS DMS failures (replication instances and tasks)

Target database failure

You should build a comprehensive testing strategy with various test cases, including expected and actual outcomes. Compare these with the business SLAs to obtain agreement from business stakeholders when designing your data migration strategy.

Resources involved

The resources used in this post include a source database, AWS DMS replication instance, AWS DMS endpoints, AWS DMS replication jobs, target database, and your network.

The following diagram illustrates the AWS DMS data migration architecture and its various components.

Source database failure

The first component of failure in the AWS DMS setup is challenging for the AWS team to investigate due to limited or no access to the customer’s database environment. The database logs, ASM logs, and AWS DMS logs serve as the primary sources of information for investigation. The scenarios discussed in this section represent the most common failures for the Oracle source database.

The following diagram illustrates the source database failure scenarios, such as source database disruption due to underlying host failure.

Single instance failure or RAC node eviction

AWS DMS interacts with the Oracle instance, even in a RAC configuration, because AWS DMS doesn’t support scan IPs, if there’s an instance failure or, in a RAC cluster setup, one of the instances restarts or gets evicted, AWS DMS stops reading the live data and archive logs necessary for replication.

The following is an example error log:

To mitigate this issue, start the Oracle instance and ensure it returns gracefully. For a RAC setup, configure the source endpoint to utilize multiple nodes of the cluster using Amazon Route 53.

ASM restart or crash due to known bug

In this scenario, AWS DMS frequently disconnects from the source database, causing pauses in reading changes and increasing latency. Due to a known bug of ASM, Oracle instance may restart or crash.

The following code snippet is an error from the AWS DMS logs:

The following code snippet is an error from the ASM logs:

A temporary workaround is to restart ASM with a memory flushing script.

Unavailability of redo or archive logs

This error may be caused for the following reasons:

The archive logs are missing

The archive logs are available but unable to read from the additional archive destinations

The following code snippet is an error from the AWS DMS logs:

If the necessary archives are missing because of the retention policy, restore the archives from available backups, whether from disks or tapes. This restore allows AWS DMS to continue the replication.

You can also set up additional archive destinations using a parameter. Configure an additional archive destination to keep a copy of the redo and archive files on a shared file system. This system should be accessible by all standby nodes of RAC, as shown in the following configuration:

archivedLogDestId=1;

Source endpoint ECA – ExtraArchivedLogDestIds=[3,4];

For information about other issues, such as if AWS DMS stops replicating data changes due to reset logs when the standby database is transitioned to the primary database during a failover of the primary database, refer to How to handle AWS DMS replication when used with Oracle database in fail-over scenarios.

UNDO and TEMP tablespaces

When using Oracle as a source for AWS DMS, sometimes the full load task fails due to a small tablespace size, resulting in the following error:

To address this issue, follow the best practices and add more space to the TEMP tablespaces. If you see a similar issue with the UNDO tablespaces, you can also add more space.

Network failure

Sometimes, AWS DMS tasks or endpoint connectivity fails because of network-related issues. These problems can stem from network bandwidth, network packet loss, or incorrect security group configurations between the source or target and the AWS DMS replication instance.

Troubleshooting network failures becomes complex due to strict security policies and resource governance. Although there isn’t a straightforward troubleshooting method, basic networking tools and utilities can help pinpoint the issue. You can also consider configuring the AWS DMS diagnostic support AMI to perform the network testing at various source and target data stores. Initial investigations at the network component level can identify obvious network problems. However, for more complex issues related to firewall rules, configurations, and network devices, consulting with network SMEs and DBAs is recommended to isolate the problem.

The following diagram illustrates a network failure scenario.

Network disconnections

AWS DMS may experience frequent disconnections from the source database, leading to pausing the reads of the changes and resulting in an increase of latency. The client (AWS DMS) log data lists ORA-03113 and ORA-03135 as the encountered errors.

The following code snippet is an example error message:

To address this issue, consider the following:

Adjust the sqlnet.expire_time value in the sqlnet.ora file – Changing this to an appropriate value could reduce or eradicate disconnection errors during longer scans, especially when including LOB values.

Adjust the MTU size – To find the Maximum Transmission Unit (MTU) size, you can use the tracepath command. The MTU size should be the same across the entire network from source to destination host. When the MTU size is different on the gateway device, update the MTU value in the AWS DMS settings. Aligning these values will increase stability. Note that this value is host-specific and, if the AWS DMS instance is changed, this value needs to be set again.

Network packet loss

AWS DMS may experience frequent disconnections from the source database, leading to pausing the reads of the changes and resulting in an increase of latency. The following code snippet is an example error message:

To optimize the network throughput for the tasks, add the extra connection attribute (ECA) asmUsePLSQLArray=TRUE on the source endpoint to be incorporated in the task. This ECA will instruct the AWS DMS task to cache 50 reads before transmitting over the network, resulting in fewer round trips over the network.

AWS DMS replication instance failure

The AWS DMS replication instance performs the actual data movement between the source and target. The replication instance plays a major role because all replication activities originate from it. However, there are specific instances where replication failures occur:

Disk space is full

AWS DMS is set up in a single Availability Zone

There’s contention on CPU or memory

The following diagram illustrates a replication instance failure scenario.

Cases have occurred where old, unused tasks led to increased storage requirements. There should be a default log cleanup or maintenance process to manage log storage space usage. When detailed debugging is enabled on heavy-duty transaction workloads, it can result in storage being filled. To address this, always set an alarm for storage. If it reaches a threshold, take the necessary action. You can find an event for low storage in the event subscription. You can enable this, or if preferred, set an alarm using Amazon CloudWatch metrics.

If a failure occurs due to full storage, consider the following strategies:

Examine the task log file size. If possible, delete it to make space available. If not, disable any unnecessary additional logging. Use the AWS DMS console for log management to immediately free up space. Although logs will remain in CloudWatch, they’ll be removed from the replication instance. For more details, refer to Introducing log management in AWS Database Migration Service.

Modify the replication instance to add more storage.

As a last resort, consider creating a new replication instance and moving the existing task to new instance.

Set a CloudWatch alarm for metrics on CPU and memory. This way, if issues arise, you’ll be alerted. To prevent contention on the replication instance, select the right type of instance for your replication workloads. Also, reviewing replication instance forensics best practices can provide insights into the impact of AWS DMS tasks on replication instance resources.

Always enable Multi-AZ. In the event of an Availability Zone failure, a replication instance from another Availability Zone will step in and continue data replication.

AWS DMS task failure

A replication task is a component of AWS DMS that reads the data from the source database. The data is formatted for consumption by the target data store before loading into target databases. Replication tasks can be used for three migration types:

Migration of existing data (full load)

Ongoing replication (CDC)

Migration of existing data and ongoing replication (full load and CDC)

The following diagram illustrates an AWS DMS task failure scenario.

During data replication, you may come across various failure scenarios during different migration types.

The following scenarios are common in full load tasks:

Data duplication

Connection timeout

LOB truncation

UNDO or TEMP tablespaces on the source database

The following are common in CDC tasks:

Table errors

Swap files on the AWS DMS replication instance

Update or delete failed because row doesn’t exist

DDL changes on the source or target database

Auto generated columns

The following are common for full load and CDC tasks:

Tasks running concurrently

Insert failed due to existing data on the target

Full load tasks

This section discusses some of the common issues observed during a full load migration (migrating existing data).

Data duplication

Sometimes, we encounter tables without primary keys in older legacy databases. During migration, we redesign these target tables to include primary keys. Yet, when running a full load, there’s a possibility that the replication task might fail due to duplicate data in the source tables. When using the AWS DMS setting DO_NOTHING, and if duplicate data exists in the target, the task could fail.

The following screenshot shows the error in the table statistics on the AWS DMS console.

The following code snippet shows the error in the AWS DMS logs:

The following code snippet shows the error in the database log files:

To mitigate this issue, consider the following:

If duplicates exist in the source table, remove them before reloading the data.

If you can’t remove duplicates from the source table, ensure the target has the same structure. However, you should include these types of tables in only full load tasks.

If no duplicate data exists in the source tables but duplicates exist on the target due to an earlier failed full load job, enable TRUNCATE_BEFORE_LOAD in the target table preparation mode.

Connection timeout

The replication task handles data replication. It connects to the source, reads the data, and writes it to the target table. If a process locks the target table during writing, the replication task will wait. If this wait lasts more than 60 seconds, the task times out.

This could be caused by the following:

The replication task attempts a DML/DDL operation on the target table, and it takes an unusually long time to finish.

If the target table has a lock, the AWS DMS task will cancel the statement. If a full load task encounters this error, the table load fails, and table statistics will display an error.

The following code snippet shows the error in the AWS DMS logs:

To mitigate this issue, you need to adjust the ECA in the target endpoint with an acceptable time. A bigger value will hold off cancellation for a longer duration. See the following code:

It sets the client statement timeout for the PostgreSQL instance, in seconds. The default value is 60 seconds.

Additionally, check if any query is holding the lock on the target table using a locking query. If the locks are legitimate, then you need to wait until query completion or cancel the query and restart the AWS DMS replication task to load the data by excluding the fully loaded tables. See the following code:

Locking Query

The connection can also be interrupted between the source and the AWS DMS replication instance by either a firewall or general network issues. For more details, refer to the previous section in this post regarding network failure.

LOB truncation

During a full load, if tables have LOB columns and you select LimitedLobmode, and the LOB size of a column exceeds the maximum LOB size set in the task settings, the data will truncate. By default, AWS DMS logs the LOB truncation and continues. However, if the end-user forgets or overlooks the logs, this can lead to truncated data. For more information about data types supported in AWS DMS for LOB columns, refer to Data types for AWS Database Migration Service.

Let’s look at an example of the LOB truncation issue. In the following code, the value is initially less than 1 KB, and the replication task setting for the LOB size is set to 1 KB:

On the AWS DMS console, you can set the maximum LOB size using Limited LOB mode, as shown in the following screenshot. For more information, refer to Setting LOB support for source databases in an AWS DMS task.

The following screenshot shows the AWS DMS task status on the table statistics page.

The following code snippet shows the warning from the AWS DMS logs:

The table load completed successfully, but there is warning in AWS DMS for the LOB column.

After investigating the task log, we recheck the table LOB size and observe the mismatch in size because of truncation of data:

To mitigate this, add the ECA FailTasksOnLobTruncation=true in the source endpoint. This attribute causes a task to fail if the actual size of a LOB column is greater than the specified size and avoids truncating the data.

The following code shows an example error message:

You can also adopt an alternate approach of running a full load with the help of maximum LOB size and inline LOB mode. This approach might help speed up migrating LOB tables.

CDC tasks

During the replication phase, the replication task reads data from transaction log files, accumulates it on the replication instance in serial transaction order, and then replays it on the target in the same sequence. During this phase, a variety of possible failures can occur.

Table errors

When there are data issues, the task will, by default, log the error and continue with the replication. Additionally, an exception will appear in the control table. When this error count reaches a threshold, the table will be suspended from replication. The default count for TableFailureMaxCount is 1000.

Consider the following mitigation strategies:

It’s recommended to have proper monitoring in place on the task log files, which will notify stakeholders of any error. For more information on setting up monitoring at the task level, refer to Automating database migration monitoring with AWS DMS.

As soon as a table error is encountered, check the control table (awsdms_apply_exceptions) in the target database.

For critical business tables, it’s recommended to understand the data and set the error settings accordingly, so you can stop the task if any data issues are encountered. For more information, refer to Error handling task settings.

Swap file on the AWS DMS replication instance

When running a CDC task, you may encounter a swap file issue. In this scenario, swap files reading from the source database are paused. This issue leads to latency or a huge number of transaction files being generated.

The following code snippet shows the error in the AWS DMS logs:

If this error occurs, consider the following strategies:

Check the health of the target database.

Change the logging parameter in PostgreSQL( ) to log all long-running AWS DMS queries in the PostgreSQL logs. Generate an explain plan to drill down the issue (for missing or improper indexes and full table scans).

If there are no issues on the target database, try to optimize the task by splitting the task into small chunks to run a parallel data load. Also, you can tune the AWS DMS task by enabling batch apply mode in the replication task. For more details, refer to Change processing tuning settings.

Update or delete failed because row doesn’t exist

When running an update or delete, you may encounter the following error:

This is caused by missing data in the target database. This issue is often observed in non-primary key tables.

To mitigate the issue, consider the following steps:

Check the error message in the awsdms_apply_exceptions table present in the target database under the control schema.

Visit the task log files to understand more about the error.

Compare the data between the source and target manually for the same primary key value. If it’s a really data issue, then you need to fix the AWS DMS replication task.

DDL changes on the source or target database

If a DDL is run on the source (such as adding a column or changing a data type) and the change isn’t replicated or applied to the target, the table may be suspended from replication. Here are the limitations with regards to DDL changes.

To address the issue, you can enable the ChangeProcessingDdlHandlingPolicy settings so that AWS DMS will take care of replicating the DDL to target. You should refer to the individual documentation for the source or target as per your use case.

Make sure to stop the replication task and apply the changes on the target first, then on the source. Then you can resume the replication task.

Auto generated columns

There is a chance of table failure if there are auto generated columns in the source database tables. For example, SQL Server and Oracle support computed columns and virtual columns with “generated always” clause respectively.

There are two use cases regarding this error:

Virtual column with “generate by column” – If a column is not visible to AWS DMS, then verify if the target database supports a similar functionality (for example, Amazon Aurora PostgreSQL 12 and later supports the “generate by column” feature).

Non-virtual column – If a column’s data is generated using “generated always” based on another column, then the column is visible to AWS DMS. Verify if the target database supports this functionality and exclude the column from replication, allowing the target functionality to handle the column. You can also alter the target column to be non-generated and allow AWS DMS to input values. During cutover, manage this in the target using methods such as triggers or “generated by.”

Full load and CDC tasks

In this section, we discuss issues you may encounter during full load and CDC tasks.

Tasks running concurrently

AWS DMS employs a lightweight database to store metadata for all AWS DMS tasks and related activities. Occasionally, issues linked to this database may surface, though they are intermittent.

While rare, this problem might arise due to contention from launching too many replication tasks simultaneously, which can lead to a locking situation in the metadata.

The following code snippet shows an example error message in the AWS DMS logs:

A straightforward way to approach this issue is to start only a few tasks at time, wait until the tasks start replicating, and then start the next batch of tasks with a gap of time between.

Insert failed due to existing data on the target

To avoid downtime, end-users often use the full load and CDC option with

replication tasks. With this option, AWS DMS loads existing data from the source to the target, caching all incoming changes to apply after the full load. If any data changes occur on a table before migrating to the target, these changes will be migrated as part of the full load. Additionally, these changes are cached for CDC. When applying the cached changes to the target, AWS DMS may begin to throw errors for duplicate rows.

For a workaround for open transactions, refer to How AWS DMS handles open transactions when starting a full load and CDC task. Note that this issue has been fixed in AWS DMS version 3.5.1.

The following code snippet shows an example error message in the AWS DMS logs:

Consider the following mitigation strategies:

A thorough review is required to investigate duplicate rows. If there is a primary key on both the source and target database, then AWS DMS will manage it by logging an error message in the task log file and continuing.

If the table has a non-primary key in the source database and a primary key in the target database, verify whether those are actual values. If not, AWS DMS will handle it because there’s a primary key on the target table.

If there’s a non-primary key on both the source and target table, AWS DMS will insert duplicate data. If rows exist in the target but not in the source, you should manually remove them from the target table.

Conclusion

In this post, we explored various failure scenarios and their respective mitigation steps related to each component in the data replication setup. In Part 2 of this series, we will delve into validation errors, target database failure scenarios and handling data related errors through error handling settings.

We value your feedback. Share any questions or suggestions in the comments section.

About the Authors

Vivekananda Mohapatra is a Lead Consultant with the Amazon Web Services ProServe team. He has deep expertise in database development and administration on Amazon RDS for Oracle, Amazon RDS for PostgreSQL, Amazon Aurora PostgreSQL, and Amazon Redshift databases. He is also a subject matter expert in AWS DMS. He works closely with customers to help migrate and modernize their databases and applications to AWS.

Jitender Kumar is a Senior Lead Consultant with the Professional Services team at Amazon Web Services. He has worked on multiple databases as DBA and is skilled in SQL performance tuning and database migrations. He focuses on database migration to AWS and works with customers from assessment to implementation.

HariKrishna Boorgadda is a Senior Consultant with the Professional Services team at Amazon Web Services. He focuses on database migrations to AWS and works with customers to design and implement Amazon RDS and Amazon Aurora architectures.

Rajesh Madiwale is a Lead Consultant with Amazon Web Services. He has deep expertise on database development and administration on Amazon RDS for PostgreSQL, Amazon Aurora PostgreSQL, Amazon Redshift, MySQL, and Greenplum databases. He is an ardent member of the PostgreSQL community and has been working on PostgreSQL his entire tenure. He has also delivered several sessions at PostgreSQL conferences.

Read MoreAWS Database Blog