Enterprises both large and small, across diverse industries and with varying levels of cloud maturity, recognize the importance and value of deploying active/active database configurations. An active/active system is a network of independent processing nodes, each having access to a common replicated database so all nodes can participate in a common application. Some enterprises are simply looking to implement their existing on-premises database deployment patterns on AWS. Others are looking to improve the performance and availability of database access to their users, sometimes within a single Region but increasingly across the globe, in a variety of business and operational scenarios.

A common reason for active/active database deployment is to deploy a single application across many Regions to improve query response times but still have all the data available to all users. In this scenario, user requests are routed to the closest Region and any data changes are asynchronously replicated to the other Regions by the database layer, ensuring both visibility of these changes globally as well as acting as disaster recovery copies in case of outages.

Another common scenario is the operational need to reduce, if not eliminate, downtime from new application deployments or database version upgrades and patching. This technique is commonly called blue-green deployment, and reduces downtime and risk by running two identical production-class environments (blue and green). Typically, at any time only one of the environments is live, with the live environment serving all production traffic. For this example, blue is currently live and green is used for testing purposes (such as a new database version, or application or schema changes). As you prepare a new version of your software, deployment and the final stage of testing takes place in the environment that is not live (in this example, green). After you have deployed and fully tested the software in green, you switch the application routing so all incoming requests now go to green instead of blue. This technique can eliminate downtime due to application deployment. In addition, blue-green deployment reduces risk: if something unexpected happens with your new version on green, you can immediately roll back to the last version by switching back to blue. To learn more about blue-green deployments with Amazon Aurora, see Automate the Amazon Aurora MySQL blue/green deployment process.

In this post, we show you how to set up multiple Amazon Aurora PostgreSQL-Compatible Edition clusters and use Oracle GoldenGate bi-directional replication to synchronize databases either within a single Region or across multiple Regions to support these different scenarios. Oracle GoldenGate is a software product that allows you to replicate, filter, and transform data from one database to another database. Due to their existing Oracle GoldenGate licenses or DBA expertise, many AWS customers are looking to use their existing GoldenGate software and knowledge to augment and enhance their Aurora database deployments.

In this post, we test Aurora PostgreSQL 3.1 (compatible with PostgreSQL 11.6) and Oracle GoldenGate 19c for PostgreSQL, although you can use the same approach with Amazon Relational Database Service (Amazon RDS) for PostgreSQL as well as later versions of Amazon RDS for PostgreSQL or Aurora PostgreSQL as well as Oracle GoldenGate. With that as an introduction, let’s dig in.

Prerequisites

You must have the following prerequisites:

Two Amazon Elastic Compute Cloud (Amazon EC2) instances (RHEL 7.7 AMI) configured as a GoldenGate hub instance in the source and target database Regions

The hub instances have Oracle GoldenGate 19c for PostgreSQL configured

The hub instances also have PostgreSQL 11 client libraries installed

The software Oracle GoldenGate PostgreSQL is also available on OCI Marketplace. For detailed steps on how to implement this OCI image, see PostgreSQL and AWS Aurora Capture using GoldenGate for PostgreSQL on OCI Marketplace.

Set up an Aurora PostgreSQL database cluster

The database configuration prerequisites are minimal:

Create your Aurora PostgreSQL DB clusters. For instructions on creating publisher and subscriber database instances, see Creating a DB cluster and connecting to a database on an Aurora PostgreSQL DB cluster.

Create a new DB cluster parameter group to use for logical replication.

To use logical replication with Aurora PostgreSQL, modify the cluster parameter group and set the static cluster parameter rds.logical_replication to 1.

Create a replication user and grant necessary privileges to the replication user in both the database clusters (for our example, we use postgres, but it may be different for your environment):

Create the corresponding publisher database and tables (with primary keys), using a PostgreSQL authenticated user with super user privileges and load tables with sample data. For instructions, see “Setting up the environment” Step 9 in Achieving minimum downtime for major version upgrades in Amazon Aurora for PostgreSQL using AWS DMS.

There are a few unsupported features in Oracle GoldenGate for PostgreSQL to consider in this setup:

PostgreSQL system databases aren’t supported for remote capture and delivery. For more information about what capture and delivery modes are available, see Choosing Capture and Apply Modes.

The Aurora PostgreSQL database version must be version 2.0 or higher, compatible with PostgreSQL 10.4+.

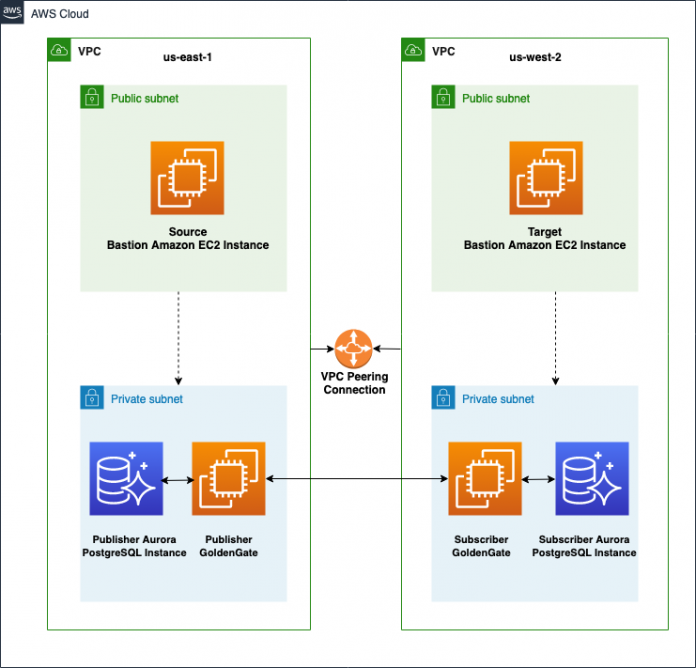

The following shows the deployment diagram for our solution.

The deployment diagram consists of the following components:

Aurora PostgreSQL instances in two Regions (us-east-1 and us-west-2). You can choose an instance type depending on your workloads.

Oracle GoldenGate on Amazon EC2 instances in the same Regions as the databases.

VPC peering between the source and target Amazon EC2 instances’ VPCs for establishing communication between the two Regions. This allows VPC resources including Amazon EC2 instances that run in different Regions to communicate with each other using private IP addresses, without requiring gateways, VPN connections, or separate network appliances. The traffic remains in the private IP space. All inter-Region traffic is encrypted with no single point of failure or bandwidth bottleneck.

Configure Oracle GoldenGate on the source database

In this section, we describe the tasks for configuring and running Oracle GoldenGate for PostgreSQL.

Log in to the Amazon EC2 host.

Create an ODBC.in file under the Oracle GoldenGate installation directory and specify the source database connection details:

Set the following environment parameters in .bash_profile:

Start GGSCI (Oracle GoldenGate command-line interface) and run the commands to create the necessary subdirectories:

Create the manager parameter file, listing an unused port for the manager to use:

Create the GoldenGate wallet file for storing the primary key for encrypting trail files:

Create Credentialstore for securely storing database user passwords:

Add a heartbeat table and checkpoint table:

Connect to the source database from GGSCI and enable supplemental logging for the user tables to be used for remote capture:

Create an extract group to fetch incremental changes. The extract group name can be eight characters long and needs to be unique across a single deployment.

To learn more about GoldenGate bi-directional replication support for PostgreSQL, see Oracle GoldenGate PostgreSQL Capture is Available now. This support is provided using the TRANLOGOPTIONS FILTERTABLE parameter. This parameter is set up in the extract files for both the source and target table and prevents data loopback.

Before starting the Oracle GoldenGate PostgreSQL extract, you need to register it with the corresponding database of interest and unregister when done (see the following code).

On registering, GoldenGate automatically creates a replication slot in the Aurora PostgreSQL database.

Configure the Oracle GoldenGate PostgreSQL Data Pump process to send the extracted trail files to the target Amazon EC2 instance.

We have decoupled the Extract and Data Pump processes to allow the extract to continue to pull changes even if there is network issue between source and target Regions. To create the data pump file, specify the target Amazon EC2 GoldenGate Hub instance host name in the RMTHOST section:

Add the data pump file to the source instance:

Repeat these steps on the target Amazon EC2 instance. Make sure to keep following points in mind:

Create the odbc.ini file with the apgtarget section and specify the target database parameters

Use TRG instead of SRC in the GoldenGate process names

Skip Step 5, and copy the cwallet.so file under the $GG_HOME/dirwlt directory to the target Amazon EC2 GoldenGate hub $GG_HOME/dirwlt directory

Start the Extract and Data Pump processes on the source and target and verify that the processes are running:

If the status shows ABENDED, check the process logs to see what’s wrong and revalidate your configuration.

In earlier steps, we configured GoldenGate to pull change data capture (CDC) changes. The next step is to copy the existing data available in the source tables.

Create a parameter file for data load as follows:

We send these files directly to the target GoldenGate hub in this step.

Start the Extract process. Unlike earlier processes, this process has to be started from the command line:

Set up the initial data load on the target and set up PostgreSQL replication

We use a Replicat process to load the initial data on the target. To do this, use an initial-load extract to extract source records from the source tables and write them to an extract file in canonical format. From the file, an initial-load Replicat loads the data using the database interface. During the load, the change-synchronization groups extract and replicate incremental changes, which are then reconciled with the results of the load.

Perform an initial data load using an initial-load Replicat on the subscriber:

Add the Replicat by logging in to the target database:

Start the Replicat process from the command line:

Set up CDC on the target

After a successful initial data load, we need to apply the CDC changes on the target.

Connect to the subscriber database and configure the Replicat for CDC:

You should use the HANDLECOLLISIONS parameter during initial loads to resolve duplicate and missing records. If a row is missing on the target, Oracle Goldengate converts an update into an insert statement. Similarly, if a row already exists on the target, it updates the row. We recommend turning off HANDLECOLLISIONS after the Replicat has caught up post initial load. During normal operations, these errors should stop the processing because it might indicate a potential problem with application. For more information, see HANDLECOLLISIONS | NOHANDLECOLLISIONS.

Log in to the subscriber database using GGSCI and add the Replicat:

Start the Replicat process using GGSCI:

Repeat these steps on the source database to copy CDC changes from the target database back to the source database. Because the data is already available, we don’t need to perform an initial data load.

Configure conflict detection and resolution between two Aurora PostgreSQL instances

When two systems are processing data transactions and the activity is shared across both systems, detecting and resolving conflicts across them becomes an essential requirement for any active/active replication configuration.

Oracle GoldenGate provides a conflict detection and resolution system that you can configure in an active/active bi-directional setup. In our example, we show you how to set up a time-based conflict resolution method, in which GoldenGate persists transactions with the latest timestamp.

To enable this, we need to make a few changes to the Extract and Replicat configuration.

Extract changes

Edit the extract parameter file and set the GetBeforeCols option of the Extract Table parameter to specify columns for which you want the extract to capture the before image of an update. In the following code, we tell GoldenGate to extract a before image for all columns on update:

Replicat changes

We add the COMPARECOLS option to specify which columns are used to detect update and delete conflicts. We use the RESOLVECONFLICT option to specify the conflict resolution method. In the following example, GoldenGate persists the record with the latest transaction_date_time value.

For more information about these rules, see CDR Example 1: All Conflict Types with USEMAX, OVERWRITE, DISCARD.

Prerequisites for test setup

We begin our test setup by establishing connectivity to the Amazon EC2 instances and checking the status of GoldenGate processes on the publisher and subscriber Aurora PostgreSQL databases. We then check the tables on both the source and target databases.

Connect to the publisher and subscriber Amazon EC2 instances

Connect to two Amazon EC2 instances so you can see the transactions replicating from source and target.

Check the status of the GoldenGate processes on the publisher and subscriber instances. The Extract, Data Pump, and Replicat processes should be in the RUNNING state:

If the status shows ABENDED, check the process logs to see what’s wrong and revalidate your configuration.

Check tables on the publisher and subscriber databases

Connect to the publisher and subscriber Aurora PostgreSQL databases to check the tables:

Make sure that we’re at a consistent starting point with four sample tables (mlb_data, sporting_event, sporting_event_ticket, ticket_purchase_hist) loaded in our sample schema that we now use for replication testing in the next section.

Basic replication test

In this step, we use the dms_sample.mlb_data table for our one-way replication tests.

Insert test

On the publisher, enter the following code:

Verify the data is replicated at the subscriber:

Now, we test the reverse, and insert data at the subscriber side and check if it gets replicated to the publisher.

On the subscriber, enter the following code:

Verify the data on the publisher:

The insert test is successful.

Update test

On the publisher, enter the following code:

Verify the data is updated at the subscriber:

Now, let’s test the reverse, and update data at the subscriber and check if it gets updated at the publisher.

On the subscriber, enter the following code:

Verify the data is updated at the publisher:

The update test is successful.

Delete test

On the publisher, enter the following code:

Verify the data on the subscriber:

Now, let’s test the reverse, and delete data in the subscriber and check if it gets updated in the publisher.

On the subscriber, enter the following code:

Verify the data on the publisher:

The delete test is successful.

Conflict detection and resolution test

In this step, we use the dms_sample.ticket_purchase_hist table for our conflict detection and resolution tests.

On the publisher and subscriber databases, set AUTOCOMMIT off:

Connect to the publisher database and make some changes:

Connect to the subscriber database and make some changes:

Because the extract and data pump processes on the publisher are in the RUNNING state, changes should flow from the subscriber to the publisher. For our conflict resolution mechanism, we used USEMAX (transaction_date_time). Because the timestamp for the UPDATE statement on the subscriber is most recent, the Replicat process applies these changes on the publisher.

Run a COMMIT on the publisher and subscriber databases, respectively.

The row on the source is instantly updated with the latest change:

Oracle GoldenGate monitoring

The primary tool for monitoring in AWS is Amazon CloudWatch. We can set up CloudWatch alarms based on certain thresholds or use the unified CloudWatch monitoring agent. The primary way to view processing information in GoldenGate is through the Oracle GoldenGate Software Command Interface (GGSCI). For more information about these commands, see Using the Information Commands in GGSCI.

Set up CloudWatch alarms for monitoring CPU utilization and disk usage, and specify an appropriate threshold. Additionally, you can deploy a custom script to send database replication lag metrics to CloudWatch for monitoring lag.

Oracle GoldenGate maintains an error file called ggserr.log under the home directory. This file contains information about the start and stop of GoldenGate processes, error messages (for example, process abend), GGSCI commands run, and other information and warning messages. You can install the unified CloudWatch monitoring agent on Amazon EC2 machines to ship GoldenGate error logs (ggserr.log) to CloudWatch logs. You can then use CloudWatch to monitor incoming log entries for any desired patterns and raise alarms. For more information about CloudWatch, see Store and Monitor OS & Application Log Files with Amazon CloudWatch.

The Replicat process writes statistics for conflict detection and resolution to a report file. You can view conflict detection and resolution statistics from the GGSCI by using the STATS REPLICAT command with the REPORTCDR option:

Aurora PostgreSQL monitoring

We can use a predefined catalog view in PostgreSQL, pg_stat_replication, to view statistics related to replication on the Aurora PostgreSQL database. We can also monitor the replication slots that currently exist on the database cluster, along with their current state using the pg_replication_slots view:

High availability for GoldenGate Amazon EC2 servers

In this post, the Oracle GoldenGate for PostgreSQL software is installed in a Single-AZ configuration in each Region on an Amazon EC2 instance utilizing Amazon Elastic Block Store (Amazon EBS) volumes as its storage. The data stored in EBS volumes is automatically replicated within its Availability Zone to prevent data loss due to failure of any single hardware component. To implement a highly available architecture, we recommend deploying your workloads to multiple Availability Zones.

Check out some related posts:

Implement Oracle GoldenGate high availability in the AWS Cloud

Implementing cross-region disaster recovery using Oracle GoldenGate for Amazon RDS for Oracle

Conclusion

This post covered how to implement active/active, bi-directional replication for Aurora PostgreSQL databases running in two different Regions, using Oracle GoldenGate 19c. If you want to try out GoldenGate for PostgreSQL, see Using Oracle GoldenGate for PostgreSQL.

If you have questions or suggestions, leave a comment.

About the Authors

Amit Bansal is a Senior Consultant with Professional Services team at Amazon Web Services. He focuses on database migrations to AWS and works with customers to design and implement AWS RDS, Aurora and Redshift architectures.

Shayon Sanyal is a Sr. Solutions Architect specializing in databases at AWS. His day job allows him to help AWS customers design scalable, secure, performant and robust database architectures on the cloud. Outside work, you can find him hiking, traveling or training for the next half-marathon.

Read MoreAWS Database Blog