Global organizations that operate and do business in many countries need to be compliant with data sovereignty and other compliance rules like GDPR. For example, you may want to replicate data to other Regions while at the same time removing certain columns to adhere to privacy laws within a country.

In this post, we demonstrate how you can replicate data across Regions while transforming it using AWS Database Migration Service (AWS DMS) and Amazon Aurora PostgreSQL-Compatible Edition.

Amazon Aurora is a MySQL and PostgreSQL-compatible relational database built for the cloud that combines the performance and availability of traditional enterprise databases with the simplicity and cost-effectiveness of open-source databases. This solution also works with Amazon Relational Database Service (Amazon RDS) for PostgreSQL.

AWS DMS helps you migrate databases to AWS quickly and securely. The source database remains fully operational during the migration, which minimizes downtime to applications that rely on the database. AWS DMS can migrate your data to and from most widely used commercial and open-source databases. For more information, see Sources for data migration.

Solution overview

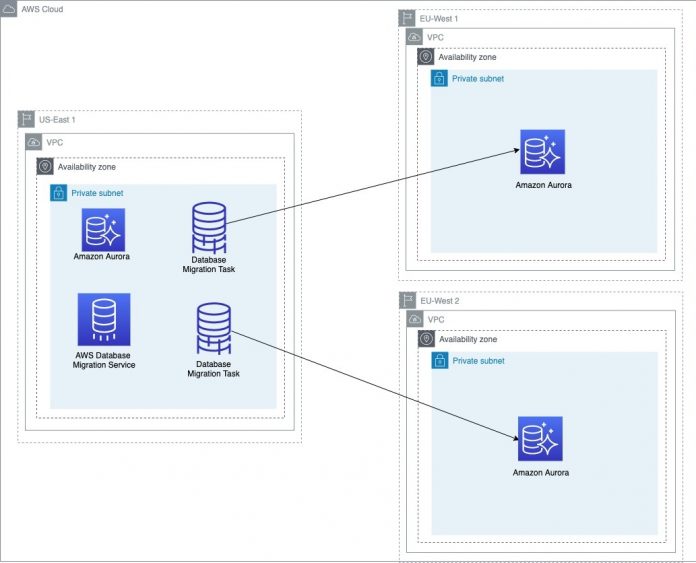

For our use case, we have a global product catalog with a requirement to replicate across multiple Regions while at the same time removing various columns due to data privacy compliance. Also, when the catalog is updated, the target copies need to be updated. We can use AWS DMS with ongoing replication to solve this challenge.

The following diagram illustrates our architecture used in this post.

Prerequisites

For this walkthrough, you should have the following prerequisites:

Amazon Virtual Private Cloud (Amazon VPC) in three Regions with Aurora PostgreSQL, version 10.6 or higher, for the purpose of this demonstration connected via a transit gateway or VPC peering.

You can use the following AWS Quick Start to deploy Aurora PostgreSQL in new or existing VPCs as needed using an AWS CloudFormation template.

A sample table DDL is available on GitHub.

The Aurora PostgreSQL source database needs to have logical replication configured for CDC (change data capture). Specifically, you need to modify the following two RDS DB cluster parameters:

rds.logical_replication = 1

wal_sender_timeout = 0

An AWS DMS replication instance configured.

AWS DMS endpoints created for the source endpoint as well as the targets.

Don’t choose Select RDS DB instance when creating the target endpoints because it prevents you from selecting endpoints in other Regions.

Select Provide access information manually under Endpoint Configuration and enter the server name accordingly as shown in the following screenshots for each target endpoint.

Deploying Aurora PostgreSQL and AWS DMS and replicating across Regions incurs costs in your account.

Replicate and transform

Now that the services are configured, we can create the AWS DMS migration task, which is where the data can be transformed via a transformation rule. For this post, we use the action of removing a column based on a compliance use case. You can use transformations to rename a schema or table, add a prefix or suffix to a schema or table, or include a specific table column. For more information about the transformation actions supported, see Transformation rules and actions.

For our demonstration, we use the following table.

Source

us-east-1

column_name

employee_id

first_name

last_name

email

phone_number

social_security

hire_date

age

job_id

salary

manager_id

department_id

When you create the migration task, select Migrate existing data and replicate ongoing changes for the Migration type for ongoing replication.

Under Table mappings, specify the database schema and tables from the source database.

Under Transformation rules, choose Add transformation rule.

For Target, choose Column.

For Schema name, enter a name.

For Table name, enter the table to transform.

For Column name, enter the column to remove.

For Action, choose Remove Column.

Choose Create task.

Because this is a CDC (change data capture) task, the task continues to run with a status of Load complete, replication ongoing until the task is manually stopped. This allows all changes on the source to be replicated continuously.

You can repeat these steps for other Regions with different transformation rules based on your requirements.

Transformation results

For this demonstration, we removed the social_security column from both target Regions and age only in eu-west-1, as depicted in the following table.

Source

Target 1

Target 2

us-east-1

eu-west-2

eu-west-1

column_name

column_name

column_name

employee_id

employee_id

employee_id

first_name

first_name

first_name

last_name

last_name

last_name

email

email

email

phone_number

phone_number

phone_number

social_security

hire_date

hire_date

hire_date

age

age

job_id

job_id

job_id

salary

salary

salary

manager_id

manager_id

manager_id

department_id

department_id

department_id

In the output from the source Region, us-east-1, the employee table has 12 rows.

In the output from the target Regions, eu-west-2 has the social_security column removed, and eu-west-1 has the social_security and age column removed via the AWS DMS transformation rule.

Clean up

To clean up any provisioned resources used in this post, delete the following items:

The CloudFormation stack if you used the Quick Start

Any Aurora PostgreSQL databases created if you didn’t use the Quick Start

AWS DMS resources in the following order:

Any ongoing migration tasks

The migration tasks once it has stopped

The replication instance

Conclusion

In this post, we showed you how to use AWS DMS to replicate an Aurora PostgreSQL database to multiple Regions while performing a transformation on a specific table. Also, by using an AWS DMS CDC migration task, all changes on the source database are replicated to the target endpoints.

Let us know what you think by sharing your comments.

For further reading, see Best practices with Amazon Aurora PostgreSQL.

About the Authors

Raj Dandu is a Solutions Architect at Amazon Web Services. He helps customers build solutions using AWS services. His favorite technical domains are data management and containers. In his spare time Raj likes running and spending time with his family.

Tom Santuccio is a Solutions Architect at Amazon Web Services and is based out of Charlotte, NC. He has a focus on security and enjoys leveraging technology to solve business problems for large enterprise organizations. When he’s not working, he enjoys running and spending time with his family.

Suresh Patnam is a Solutions Architect at AWS. He helps customers innovate on the AWS platform by building highly available, scalable, and secure architectures on Big Data and AI/ML. In his spare time, Suresh enjoys playing tennis and spending time with his family.

Read MoreAWS Database Blog