In scenarios such as consolidating or merging multiple departments with separate AWS accounts into a single AWS account, splitting a single account or divisions into multiple AWS accounts for better management, or duplicating an AWS account across Regions, you often need to migrate the database from one AWS account to another with minimal downtime and application disruption.

In this post, we explore migrating an Amazon Relational Database Service (Amazon RDS) for Oracle database from one AWS account to another using RDS storage-level snapshots and change data capture (CDC) using AWS Database Migration Service (AWS DMS) to minimize the downtime window. Depending on the database size, migration needs to be planned in accordance with application availability requirements to minimize the downtime and cut-over window.

Solution overview

In this post, we discuss the steps and best practices for a successful migration of an RDS for Oracle database from one account to another, which includes two main phases:

The initial data load using an RDS snapshot

CDC from the source and continuous replication using AWS DMS until the cut-over or go-live

Initial data load

Amazon RDS for Oracle offers multiple options to complete the initial load. For more information, refer to Importing data into Oracle on Amazon RDS. As part of selecting the initial load options, how fast the initial load takes to complete is an important factor.

How many changes that need to be applied in the CDC phase depends on how long the initial load takes to complete. All change logs of the source need to be preserved during the time to complete the first phase because the second phase needs those logs to sync the target database. Make sure that your source storage has enough space to retain archived redo logs during the specified retention period. As a side note, if you plan to increase storage for retention need, increase the size of instance after the snapshot is taken, so that snapshot reflects only original size and not the size with additional storage that you added temporarily to retain archive logs for phase2.

Refer the query below to see daily archive log generation volume (in GB) for last 15 days.

Oracle Data Pump with Amazon S3 integration or Amazon EFS integration – This approach is a logical migration of the database and useful when the database is of small to medium size. It’s also a good option when you have to migrate only selected schemas or tables within the schema and when you need to migrate across database versions. For databases, when migration is time constrained, Data Pump might not be the best option if the delta built up is huge during the time that the export and import is completed. For more details, refer to Importing using Oracle Data Pump and Strategies for best practice for very large database migration.

Data pump can be used with Amazon S3 or Amazon EFS to transfer the data files and metadata. For more information, refer to RDS for Oracle integration with S3 and RDS for Oracle integration with Amazon EFS.

RDS snapshot restore – This approach is recommended for cross-account migration if the source and target AWS accounts are in the same or different Regions. The source and target databases need to be the same Oracle version in this method. Because the snapshot is taken at the storage level, it’s a much faster approach for migration and there is no Amazon S3 integration required while sharing a manual snapshot across accounts. This is a physical database migration for when you have to migrate the entire database and not selected schemas or tables. For more information, refer to Restoring from a DB snapshot.

Traditional export and import – This can be useful for very small databases but isn’t recommended.

We discuss and use the RDS snapshot method to perform the initial data load in Amazon RDS for Oracle because it’s much faster and more cost-effective compared to other approaches. It doesn’t require Amazon S3 integration and the snapshot can be shared across AWS accounts easily and effectively.

Data replication (Change Data Capture – CDC)

In Amazon RDS for Oracle, after the initial data load, you need to migrate the delta of changes until the cut-over to keep the source and target RDS instances in sync. In this post, we focus on using AWS database migration service (DMS), which uses Oracle redo logs for CDC and replication.

In AWS DMS, there are two methods for reading Oracle redo logs while doing CDC: Oracle LogMiner and AWS DMS Binary Reader. By default, AWS DMS uses Oracle LogMiner for CDC. For more information, refer to Using Oracle LogMiner or AWS DMS Binary Reader for CDC.

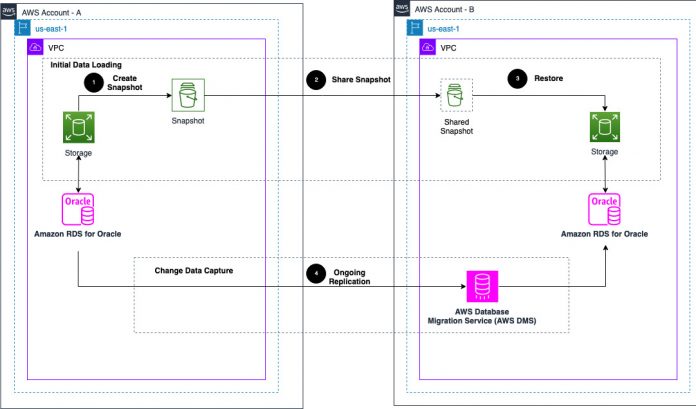

Architecture overview

The following diagram illustrates the solution architecture.

The high-level steps are as follows:

Take a manual snapshot from the source.

Share and copy the snapshot with the target account.

Restore the target RDS database from the source snapshot in the target account.

Create an AWS DMS replication instance in the target account.

Create AWS DMS endpoints to connect the source and target RDS instances.

Create an AWS DMS task for ongoing replication (CDC-only task).

Prerequisites

Before you get started, make sure you meet the following prerequisites:

The AWS DMS setup will be on the target side, and AWS DMS needs to be connected to the source and target RDS instances, so the source and target network connectivity needs to be established between AWS accounts using either VPC peering or AWS Transit Gateway. For more information, see Create a VPC peering connection.

You need Amazon Virtual Private Cloud (Amazon VPC) security groups with inbound rules to allow connections from the source to target. For more information, see Control traffic to your AWS resources using security groups.

Archive log mode needs to be enabled in the source and target databases. You enable archive log mode in Amazon RDS for oracle by setting backup retention period to 1 or more days. Please check backup retention period of source and target databases. You can check current archive log retention period setting using below commands.

The output shows the current setting for archive log retention in hours. The following sample output shows that archived redo logs are kept for 48 hours.

How long you need to retain archive log depends on time it takes to complete initial load as second phase of change data capture will start after initial load is complete. As a best practice, give some buffer time as well. As an example, if you anticipate initial load to complete in 24 hours, then retain archive log for 36 hours with 12 hours of buffer to accommodate any unexpected time delay. Retention period can be modified using below command. I used sample value of 24 hours as an example.

Depending on the method you use for CDC, the Oracle database user/login used by AWS DMS requires some privileges in the source database. Use the following table to configure an AWS-managed Oracle source database instance and apply required privileges.

Workflow step

Using LogMiner

Using Binary Reader

Grant Oracle account privileges.

For more information, see User account privileges required on an AWS-managed Oracle source for AWS DMS.

For more information, see User account privileges required on an AWS-managed Oracle source for AWS DMS.

Prepare the source database for replication using CDC.

For more information, see Configuring an AWS-managed Oracle source for AWS DMS.

For more information, see Configuring an AWS-managed Oracle source for AWS DMS.

Grant additional Oracle user privileges required for CDC.

No additional account privileges are required.

For more information, see Configuring a CDC task to use Binary Reader with an RDS for Oracle source for AWS DMS.

If you haven’t already done so, configure the task to use LogMiner or Binary Reader for CDC.

For more information, see Using Oracle LogMiner or AWS DMS Binary Reader for CDC.

For more information, see Using Oracle LogMiner or AWS DMS Binary Reader for CDC.

Configure Oracle Standby as a source for CDC.

AWS DMS doesn’t support Oracle Standby as a source.

For more information, see Using an Amazon RDS for Oracle Standby (read replica) as a source with Binary Reader for CDC in AWS DMS.

Perform the initial data load

Complete the following steps for your RDS for Oracle snapshot restore:

Connect to the source Oracle database and note down the CURRENT_SCN from the source database:

If possible, run the preceding query to capture the CURRENT_SCN during a time of minimal activity or an idle period in the database. It’s best if the source database is in read-only mode because it will be used as a start position for the CDC phase. If this isn’t possible, there might be some overlap of transactions in the second phase, which shouldn’t be an issue as long as you have a proper error handling policy and the proper constraints to avoid duplicates in the target database (for example, primary keys or unique keys on a table). Also make sure to retain archive logs for an extended period until cut-over or go-live so that all changes after the captured SCN are available to sync up during the second phase.

Create a manual snapshot of the source RDS instance.

Share the manual snapshot with the target AWS account after the snapshot is available. For target AWS account to copy an encrypted DB snapshot shared from your account, the account that you share your snapshot with must have access to the AWS KMS key that encrypted the snapshot. To allow another AWS account access to a KMS key, update the key policy for the KMS key. If target account wants to use different KMS key, it can only be set during snapshot copy. For more information, refer “Sharing encrypted snapshot” section in Sharing a DB snapshot.

Restore from the RDS snapshot to create a new RDS instance in the target account.

Verify the target database schema, table structures, and object count against the source.

Configure the target database with required privileges and settings. There are some limitations you need to review. For more information, refer to Using an Oracle database as a target for AWS Database Migration Service.

If you have active triggers on tables in you Oracle database application schema that perform actions of data changes based on operations like Insert or Update or Delete, disable them in the schemas of the target database after restore to avoid duplication of transactions because AWS DMS CDC is going to capture all changes, including transactions by triggers. The output of the following query generates a list of commands that you can put in the script to disable all triggers in the target database:

Save the script you use to disable triggers because you need this script during the cut-over phase while going live on the target. You can replace the keyword DISABLE with ENABLE in the script during cut-over and run it in the target to only enable the list of triggers that you disabled. The triggers that are already disabled in the source will not be affected by this.

Configure AWS DMS CDC and ongoing replication

To set up CDC and ongoing replication, complete the following steps:

Create an AWS DMS replication instance.

Prepare source database when using AWS managed Oracle database as source for AWS DMS. Also refer to limitations of using Oracle as source.

Prepare target database when using Oracle as target database. Also refer to privileges needed and limitations of using Oracle as target.

Create AWS DMS endpoints for the source and target RDS for Oracle instances.

Create an AWS DMS replication task and for Migration Type, choose Replicate data changes only using the SCN captured earlier as the starting point for the CDC.

The following are some important points to keep in mind while creating the task:

LOB data types (BLOB, CLOB, NCLOB) are supported only on tables that have a primary key defined during the CDC phase.

Limited LOB mode is much faster than full LOB mode, so use limited LOB mode whenever possible and specify a size big enough to accommodate the largest LOB in the source database. For example, if the largest LOB in database is 110 MB, use limited LOB mode with 128 MB size. Use the following query to find the largest LOB in the database schema:

In Oracle, schema equals to database user. For example, when you log in as MIG_LOGIN in the Oracle database, you are connected to MIG_LOGIN schema. When you use Oracle as a target, AWS DMS migrates the data to the schema which is target endpoint’s login user. For example, if you’re migrating a schema named DMS_SAMPLE to an Oracle target database, and the target endpoint login you use to connect to database is MIG_LOGIN, then AWS DMS connects to the Oracle target as MIG_LOGIN and populates the MIG_LOGIN schema with database objects from DMS_SAMPLE schema (transfers DMS_SAMPLE objects to MIG_LOGIN by default instead of DMS_SAMPLE objects to DMS_SAMPLE schema). To override this behavior, you need to map the DMS_SAMPLE schema at the source to the DMS_SAMPLE schema at the target by providing a schema transformation rule. For example, to migrate the DMS_SAMPLE schema objects to a DMS_SAMPLE schema at the target endpoint, use the following transformation rule (JSON editor view):

Start the task and let it sync the delta of changes from the SCN.

You can monitor the AWS DMS tasks for any errors or warnings, either manually or using Amazon CloudWatch. For more information about using CloudWatch, refer to Monitoring replication tasks using Amazon CloudWatch.

As a best practice, if possible, avoid deploying schema and schema structure changes (DDLs) in the source database during the replication phase until cutover. If there are requirements for DDL changes in the source, suspend the AWS DMS task for CDC, apply changes to the source and target databases, and resume the AWS DMS task for CDC for continuous replication.

Perform the cut-over

During cut-over time, stop the application pointing to the source database, stop the source database or put the source database in read-only mode, and let the final redo log changes be synched by the replication task. Validate the target database and then point the application to the target database.

Wait until the desired cut-over time while monitoring replication lag using CloudWatch.

Stop the applications connected to the source database when the CDCLatencySource and CDCLatencyTarget metrics are close to 0 and allow the final changes to flow through.

Put all future scheduled batch jobs using the source database on hold.

Verify the remaining active user connections present in the source database using the following query. See if any other application-related sessions are active, such as active batch jobs that need to finish.

If no more important active sessions need to complete, shut down the source database using the SQL command shutdown immediate for a graceful shutdown or put the source database in read-only mode using the following three steps in sequence:

Verify CDCLatencySource and CDCLatencyTarget metrics are 0 after the final changes flow through.

Enable the triggers in the target database that were disabled during the initial data load, after the target database restore, and before CDC started. You can replace the keyword DISABLE with ENABLE in the script generated earlier and run it in the target database.

Run the following statement in the source RDS for Oracle DB instance to generate the DDL to reset sequences:

Run the generated DDL statements in the target RDS Oracle instance.

Monitor the target instance by configuring the required CloudWatch alerts (refer to Monitoring Amazon RDS metrics with Amazon CloudWatch) and Amazon RDS events.

Point applications to the target database and start the applications.

Verify and test that the applications and database work as expected before going live.

Clean up

After you cut over to the target instance, you can stop the source instance to avoid instance charges. After the migration is confirmed to be successful, you can delete the instance. For instructions, refer to Deleting a DB instance.

Conclusion

In this post, we described how to migrate Amazon RDS for Oracle from one AWS account to another AWS account by using EBS storage-level snapshot and AWS DMS to speed up migration and reduce downtime. We provided references for accomplishing the migration setup, including the knowledge base, configuration requirements, and limitations.

Let us know what you think in the comments section. We’re always looking forward to your feedback.

About the Authors

Jinesh Shah is a Database Consultant with AWS Professional Services. He has more than 20 years of experience working with databases. In AWS, he works with customers in their journey to the cloud and helps them successfully achieve cost-effective, scalable, optimized, secure, and highly-available database migration and modernization solutions.

Pramod Yadav works as Cloud Support Engineer in Amazon Web Services. He has 12 years of experience working with relational databases. At AWS, he works primarily on Premium Support tickets and internal escalations created for Amazon RDS for Oracle, Amazon RDS for PostgreSQL, Amazon Aurora PostgreSQL, and AWS DMS.

Read MoreAWS Database Blog