Database migration is a multi-step process comprised of assess, mobilize and modernize phases with different tools and technologies involved. You can use tools such as AWS Schema Conversion Tool (AWS SCT) and AWS Database Migration Service (AWS DMS) to accelerate each of these phases.

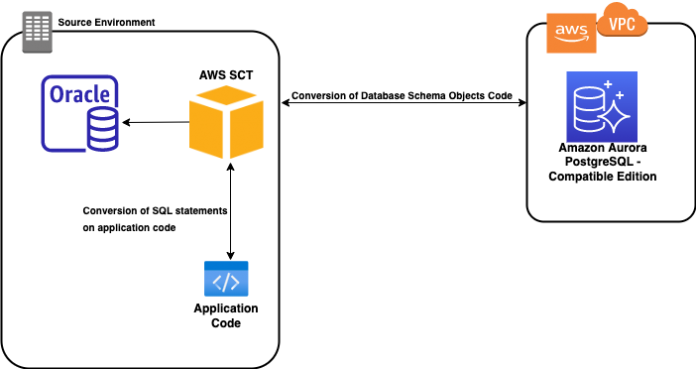

In this post, we demonstrate how to use the AWS SCT CLI to convert database schema object code and application SQL and PL/SQL code into PSQL in application files, as part of the migration process from an Oracle database hosted on Amazon Elastic Compute Cloud (Amazon EC2) to Amazon Aurora PostgreSQL-Compatible Edition.

AWS DMS helps you migrate databases to AWS quickly and securely. The source database remains fully operational during the migration, minimizing downtime to applications that rely on the database. AWS DMS can migrate your data to and from the most widely used commercial and open-source databases.

AWS SCT makes heterogeneous database migrations predictable by automatically converting the source database schema and a majority of the database code objects, including views, stored procedures, and functions, to a format compatible with the target database. You can use AWS SCT to convert the SQL code in your C++, C#, Java, or other application code.

Solution overview

In this post, we detail the AWS SCT CLI method to convert database schema object code and SQL or PL/SQL code from application files. For this you must create an AWS SCT CLI input file with an .scts extension. This file contains the AWS SCT CLI commands and required configuration. You must run the constructed .scts file in one step, which we demonstrate later in this post. However, for clarity, we have outlined all components of the .scts file individually.

At a high level, the solution steps are:

Download and install the latest version of AWS SCT.

Add the source and target connections and call the source and target connection functions.

Map the source and target schemas, then load the object tree for the source schema.

Generate and view assessment reports.

Convert the schema object code and save the DDL statements.

Apply the converted schema object code to the target.

Convert and save the application code.

Trigger the conversion with the constructed .scts input file.

Migrate the data using AWS DMS.

The following diagram illustrates the architecture.

Download and install the latest version of AWS SCT

First, you must download and install AWS SCT either on your local computer, a server in your data center, or an EC2 instance. Make sure your AWS SCT version is build 656 or higher. For step-by-step instructions, refer to Installing, verifying, and updating AWS SCT.

In our use case, we install AWS SCT on Amazon Linux hosted on Amazon EC2.

Add the source and target connections

Then create an input file with the .scts extension. The first call, CreateProject creates an AWS SCT project. Followed by AddSource to add Oracle as source and the Aurora PostgreSQL target with AddTarget. This will also establish connections to respective source and target. The following snippet shows the implementation:

Map the source and target schemas and load the respective object trees

The next step establishes the server mapping, which is used for conversion using the command AddServerMapping.

There are two ways of using this command:

Using a tree path, such as Servers.<sourceName>.Databases.<database_name>.Schemas.<schema_name>

Using a name path, such as <sourceName>.<schema_name>

For this post, we use a tree path:

Generate and view assessment reports

You use the CreateReport command to generate a migration assessment report in the current project.

After you create the migration assessment report, you can save it to a PDF file using the SaveReportPDF command or save it to CSV using SaveReportCSV. In the following code, we continue to use treePath for these calls, which provides a path to a specific type of object or object name:

The following screenshot shows an example of our migration assessment report PDF.

Convert the schema object code

The most interesting and challenging phase of the entire flow is when AWS SCT converts the database and schema object code from source to target. We save the converted DDL statements in .sql files. As part of the process, we make multiple AWS SCT CLI calls.

To begin with, we call the Convert function, which performs object conversion on the scope defined by one of its parameters (we use treePath for defining the scope). We have an option to save the converted DDLs from the source using SaveSourceSQL to save it to a single file or SaveSourceSQLbyStage to save to different .sql files for different object types.

Similarly, you can save the converted .sql files for the target database. We use both the SaveTargetSQL and SaveTargetSQLbyStage commands to save the converted files into a single .sql file and different .sql files for different object types, respectively:

The following screenshot shows the files that we created under the /home/sct-user/blog/output/target/split/ folder when we used the SaveTargetSQLbyStage function to create multiple files.

Apply the converted schema object code to the target

As a best practice, it’s always recommended to review the converted .sql files to see if the conversion done by AWS SCT is as expected. If you see any discrepancies, fix the code manually and then run them on the target database.

If the AWS SCT can’t automatically convert the SQL code, you see an error message like the following in your .sql file:

The preceding error is specific when you try to convert object code that uses ROWID from Oracle to Aurora PostgreSQL.

For the scope of this post, we apply the converted schema object code using the ApplyToTarget command, because the intention is to show that we can automate this step:

Validation is a crucial phase of any migration. For more information, refer to Validating database objects after migration using AWS SCT and AWS DMS.

Convert the application code

Now that our schema objects are on our target database (Aurora PostgreSQL), we’re ready to move on to the application conversion step.

We can use AWS SCT to convert the SQL code in our C++, C#, Java, XML, SQL or other application code.

For our use case (an Oracle to Aurora PostgreSQL conversion), we use the AWS SCT CLI to convert SQL statements in a file to PSQL statements, and save the application assessment report using the following functions. AWS SCT overwrites the existing application file that we mentioned in the -file argument of the ConvertGenApp, ApplyGenApp, and SaveGenApp functions. The source application file that we mentioned in the -file argument of ConvertGenApp (application_sql.java) is saved in the project directory with the extension *.old. For our case, it’s saved in /home/sct-user/blog/blog_trial_1/AppConversion/App_convert/ApplicationFiles/application_sql.java.old.

We used the following sample JAVA application file with SQLs for our use case:

CreateGenAppProject – Creates the generic application conversion project

SaveGenAppReportToPDF – Saves the conversion report to PDF

ConvertGenApp – Converts the conversion project script

ApplyGenApp – Applies the converted or changed code to the generic application sources

SaveGenApp – Saves the converted or changed generic application sources on the file system

See the following code:

Trigger the conversion with the constructed .scts input file

The .scts file includes all the mentioned functions, which can be called with a single command:

For example:

You can also use the GUI mode of AWS SCT to convert database schema object code and convert the SQL to PSQL code from the application file.

Migrate the data using AWS DMS

This final step requires careful planning. Data movement is a significant phase of any database migration.

For instructions on automating migration using the AWS Command Line Interface (AWS CLI), refer to Automating AWS DMS Migration Tasks.

Best practices while using AWS SCT CLI.

Use the SSL connection while connecting to source and target database. You must configure the SSL encryption on your source and target databases as prerequisites

Either remove ”scts” input file after the execution or anonymize the database credentials from the “scts” input file

Summary

The AWS SCT CLI provides a way to automate and manage database and application migrations. In this post, we showed you how to use the AWS SCT CLI with .scts files to convert Oracle database schema objects and SQL code from an application file to Amazon Aurora PostgreSQL-Compatible Edition.

For additional details, refer to Command-Line Interface and Interactive Mode Reference: AWS Schema Conversion Tool.

About the Authors

Narendra Tawar is a Database Migration Specialist Solutions Architect on the Amazon Database Migration Accelerator team, with a strong background in database migration. He has carried out many large-scale database migrations and modernizations. As a Solutions Architect, he enjoys providing technical assistance to customers that are looking to migrate and modernize their existing databases.

Dwarka Rao is a Database Migration Specialist Solutions Architect with the Amazon Database Migration Accelerator team at Amazon Web Services. He helps customers and partners build highly scalable, available, and secure databases solutions on cloud migration projects from on-premises to AWS.

Alex Anto is a Data Migration Specialist Solutions Architect with the Amazon Database Migration Accelerator team at Amazon Web Services. He works as an Amazon DMA Advisor to help AWS customers to migrate their on-premises data to AWS Cloud database solutions.

Read MoreAWS Database Blog