In this post, we demonstrate how you can use AWS CloudFormation, open-source Apache JMeter, and Python to generate a performance testing framework for Amazon Aurora PostgreSQL-Compatible Edition databases. This performance testing model can help you run multiple iterations of load testing on Aurora PostgreSQL and make data-driven decisions regarding the database version, configuration, and instance type.

This post reuses CloudFormation templates from the post Deploy an Amazon Aurora PostgreSQL DB cluster with recommended best practices using AWS CloudFormation and the aws-samples GitHub repository to build the solution resources.

Solution overview

This performance testing framework provides the following resources:

CloudFormation templates to create Amazon Virtual Private Cloud (Amazon VPC) resources, Amazon Elastic Compute Cloud (Amazon EC2) instances, and an Aurora PostgreSQL database

A Python script to create database objects in the test database

A preconfigured Apache JMeter test plan that you can import and run on any server

You might need to be readjust and optimize this testing framework according to individual use cases.

Amazon Aurora is a relational database service that combines the speed and availability of high-end commercial databases with the simplicity and cost-effectiveness of open-source databases. Aurora is fully compatible with MySQL and PostgreSQL, allowing existing applications and tools to run without requiring modification.

Apache JMeter is a widely used open-source load testing tool that supports load testing of various applications and can simulate heavy load on servers, databases, networks, and web applications. You can create virtual users and add “think time” that can simulate real-time production load. For more details, refer to Apache JMeter.

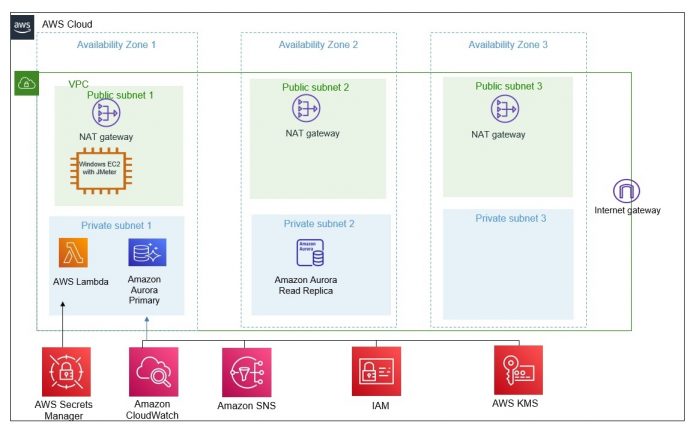

The following diagram illustrates our solution architecture.

Prerequisites

Make sure you have the following prerequisites:

An AWS account with permissions to create CloudFormation stacks and to create Amazon VPC resources, EC2 instances, and an Aurora database

The prerequisites as mentioned in the post Deploy an Amazon Aurora PostgreSQL DB cluster with recommended best practices using AWS CloudFormation

Please consider the cost incurred for some resources during their use.

Set up Amazon VPC and networking components

For full instructions on setting up your Amazon VPC and networking components, refer to Deploy an Amazon Aurora PostgreSQL DB cluster with recommended best practices using AWS CloudFormation. Note that building the Unix bastion host is not required.

Choose Launch Stack to build the Amazon VPC and the networking components with AWS CloudFormation:

Enter the following parameter values:

Stack name – Enter a meaningful name for the stack

ClassB 2nd Octet – Specify the 2nd Octet of IPV4 CIDR Block

Estimated time to create the stack is 5 minutes.

Build an Amazon EC2 Windows machine

Choose Launch Stack to build your Amazon EC2 Windows machine with AWS:

Enter the following parameter values:

Stack name – Enter a meaningful name for the stack

InstanceType – t3.2xlarge (Default)

KeyName – Key pair for Windows Amazon EC2

ParentVPCStack – Stack name used to build Amazon VPC components from the previous step

SourceCidrForRDP – Customer’s IP to log in to Amazon EC2 using RDP

Estimated time to create the stack is 5 minutes.

The CloudFormation stack creates a Windows EC2 machine that you later use to install JMeter and launch the JMeter load testing. On the Amazon EC2 console, you should see an EC2 instance called JmeterApp in a Running state.

Set up an Aurora PostgreSQL database

Choose Launch Stack to create the Aurora database infrastructure:

For full instructions, refer to Deploy an Amazon Aurora PostgreSQL DB cluster with recommended best practices using AWS CloudFormation.

Estimated time to create the stack is 10 minutes.

Because the Unix bastion host in the linked post isn’t required, for ParentSShBastionStack, enter the stack name used to build the Windows Amazon EC2 instance in the previous section.

After the database has been created successfully, log in to the AWS Secrets Manager console and note down the database user name, password, port, and host name.

Set up Apache JMeter

Log in to the Amazon EC2 Windows machine and perform the following steps to configure JMeter:

Download and install the latest Java on the Amazon EC2 machine.

Download and install Apache JMeter.

Download the Apache JMeter Plugins Manager and place it under %JMETER_HOME%/lib/ext.

JMeter Plugins Manager manages the plugins required to perform this load testing. For more details, refer to JMeter Plugins Manager.

Increase the heap size of Apache JMeter to 4G:

Edit jmeter.bat and update the following:

Download the PostgreSQL JDBC connection JAR file in the EC2 machine.

Install Python ( 3.xx or above) in the EC2 machine.

Install pip and psycopg2:

Download DBeaver Community Edition client to the EC2 machine.

Use the database connection details retrieved from AWS Secrets Manager and verify the database connectivity using DBeaver.

Download the Apache JMeter JMX file (TestPlan_Jmeter_insert_update.jmx) attached to perform load testing and place it under any directory <testdata_directory> in the EC2 machine.

Prepare the test data

To prepare the test data, use the database credentials retrieved from AWS Secrets Manager to run the Python script (python_create_testdata.py) on the test database.

Open command prompt, and then run the following:

This script creates the schema, tables, partitions, indexes, and functions in preparation for load testing. You can run the script multiple times, and it will drop the existing database objects and recreate the database objects for performance testing.

Apache JMeter test plan description

The Apache JMeter test plan used in this post has three thread groups:

150kb_update_insert – This thread group performs 150 KB payload inserts and updates to the test table. It has the following parameters:

Number of users – 2

Ramp-up period – 300 seconds

Loop count – 288000

Throughput controller – 90% insert, 10% update

2MB_Insert – This thread group performs 2 MB inserts to the test table. It has the following parameters:

Number of users – 1

Ramp-up period – 1 seconds

Loop count – 8

Throughput controller – 100% insert

75MB Payload Insert – This thread group performs inserts to the table created by the Python script. It has the following parameters:

Number of users – 1

Ramp-up period – 1 second

Loop Count – 2

Throughput controller – 100% insert

For better understanding about the different JMeter components, please refer to the JMeter documentation on building a database test plan.

Performance test run

To run a performance test, complete the following steps:

In the Amazon EC2 Windows machine, start Apache JMeter in GUI mode by choosing %JMETER_HOME%/bin/jmeter.bat

Choose the Apache JMeter executable file to open the console.

Choose File, Open, and choose TestPlan_Jmeter_insert_update.jmx

Choose Testplan_to_insert_Update and choose the PostgreSQL driver from Amazon EC2 machine.

Choose Database and JMeter Configuration and update the Aurora PostgreSQL database details with the cluster host, database name, user name, and password.

Right click on the thread group and confirm that all thread groups are enabled.

Expand each thread group and right click and disable the listeners.

Once all the above steps have completed, close the JMeter GUI screen to run the load test in cli mode.

Open command prompt, and then run the load testing in the cli mode:

Performance test results

You can review the results on Apache JMeter by opening the output file (<output directory>/<output_filename>.jtl) from the Apache JMeter GUI, as shown in the following screenshot.

You can also generate dashboard reports by using the following command:

This report is generated as HTML format and can be viewed by choosing the following file, <reportDirectory>/index.html.

The dashboard reports provide the following metrics of the load testing:

Start time and end time of the run

APDEX (Application Performance Index)

Request summary graph that displays all failed and success requests

Statistics table that provides information about response time, throughput, and network speed

An error summary table that provides types of error encountered during the run

On top of all these metrics, this dashboard also drills down on other important charts:

Over time

Throughput

Response times

The following graph depicts “Over time” by displaying the average response time in milliseconds over time for the different thread groups.

The following graph depicts “Throughput” chart by displaying the number of hits/second over time for the different thread groups.

The following graph depicts “Response time” chart by displaying the response time percentile over time for the different thread groups.

For more details about the JMeter dashboards and charts, refer to Generating Report Dashboard.

Additionally, you can review OS and database performance using Amazon CloudWatch metrics and Amazon RDS Performance Insights.

The following graph displays the OS metrics, database load and top running SQLs during the test run as shown by Amazon RDS Performance Insights.

Run multiple iterations

To rerun a test for a different database configuration, complete the following steps:

On the AWS CloudFormation console, update the stack with the configuration changes (database instance, database version) and update the stack.

Retrieve the database details and credentials from Secrets Manager.

Run the Python script again to reload the data.

Update Apache JMeter with the database credentials and cluster information and then rerun the test.

Clean up

To avoid unwanted charges, delete the resources created via the CloudFormation stacks when you’re done using this solution. For a clean deletion, delete the CloudFormation stacks in the following order via the AWS CloudFormation console:

Delete the stack for database.

Delete the stack for Amazon EC2 JMeter.

Delete the stack for the VPC.

Conclusion

In this post, we provided a testing framework built on AWS CloudFormation, Python scripts, and Apache JMeter that you can use to run performance testing. This framework can provide insights into how to proceed with performance testing and how easily test data can be created, and you can evaluate the results for any chosen database configuration.

If you have any questions or suggestions, leave a comment.

About the Authors

Ishwar Adhikary is a Database Consultant at Amazon Web Services (AWS). He works closely with customers to modernize their database and application infrastructures. His focus area is migration of relational databases from On-premise datacenter to AWS Cloud.

Jagrit Shrestha is a Database consultant at Amazon Web Services (AWS). He works as a database specialist helping customers migrate their on-premises database workloads to AWS and provide technical guidance.

John Lonappan is a Senior Database Specialist Consultant / Solutions Architect at Amazon Web Services (AWS) with a focus on relational databases. Prior to AWS, John has worked as Database Architect for large Data center providers across the Globe. Outside of work, he is passionate about, Long drives, EV Conversion, play chess and travel.

Bharath Kumar is a Lead Database Consultant with the AWS Professional Services Team.He has been supporting and enabling customers to migrate their database from OnPrem data center to AWS cloud and also in migration from commercial database engines to open source database in Amazon.

Read MoreAWS Database Blog