Observability is the ability to understand the state of a database, including its performance, health, and security. It is critical for any database, and especially so when you work at scale with a database service like Cloud Bigtable.

Bigtable is a managed service, so you have access to the suite of monitoring tools and metrics we provide. We are always listening to feedback to learn what problems you’re trying to solve and what tooling we can build to improve the development and debugging experience.

In this blog post, we’ll look at several new tools and metrics available for Bigtable that supplement established tools like Key Visualizer and Cloud Monitoring. We’ll see what problems they can help diagnose, and how to solve those problems to get the best performance from Bigtable.

Identify inefficient queries with query stats

One key feature of Bigtable is its low latency: at the 99th percentile, queries can complete in single-digit milliseconds. However, with any large-scale system, there will always be some high-latency queries. These fall into two categories: queries that are inherently slow due to the amount of data processed, and queries that are slow due to external circumstances.

Queries that are inherently slow can’t be optimized by adding more nodes or processing power. A common example with Bigtable is counting all the rows in a table, which requires scanning the entire table. Queries that are otherwise performant can still be slowed by external circumstances, such as heavy traffic on the table at the time they run.

We have introduced Bigtable query stats to help you diagnose those queries that are inherently slow. You can get more information about the query results by running your queries via the cbt CLI or the Go client library with query stats enabled.

One core piece of information included in query stats is the ratio of data seen to data returned. If your query sees significantly more data than it returns, the query is less performant than it could be, because it reads rows it doesn’t need.
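To make the ratio concrete, here is a minimal sketch of the arithmetic. The names `rows_seen` and `rows_returned` are placeholders chosen for this example and are not necessarily the field names that query stats reports, and the counts are made up.

```python
def seen_to_returned_ratio(rows_seen: int, rows_returned: int) -> float:
    """Return how many rows the query read for each row it returned."""
    if rows_returned == 0:
        # Nothing returned: any row that was read was wasted work.
        return float("inf") if rows_seen > 0 else 1.0
    return rows_seen / rows_returned


# Hypothetical counts, for illustration only.
full_scan = seen_to_returned_ratio(rows_seen=1_000_000, rows_returned=250)
prefix_scan = seen_to_returned_ratio(rows_seen=300, rows_returned=250)

print(f"full scan:   {full_scan:.1f} rows seen per row returned")
print(f"prefix scan: {prefix_scan:.1f} rows seen per row returned")
```

A ratio close to 1 suggests the query reads little beyond what it needs, while a large ratio points to a scan that is worth narrowing.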

A few solutions to address these slower queries include:

Narrow your query scope – Using a prefix scan or setting the start and end keys for a query is the best way to limit the number of rows seen and will speed up your queries.

Change your schema – If you have a complex query that is used infrequently, you might accept slower performance, but if your common queries are doing slow table scans, then you might want to rework your schema to better fit those queries.

Denormalize data – One way to rework your schema is to put the same data in multiple rows or tables with different row key designs to accommodate multiple access patterns. This can add complexity to your application, but depending on your use case, it might be straightforward to incorporate.

Cache your common queries – A final approach to dealing with slow queries is to cache them. You could keep the results of your queries in a cache service for 30 minutes and send queries there instead of Bigtable to reduce the response time. This works best when you have slow queries whose results are needed multiple times.
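The caching approach in the last item can be sketched as a small time-to-live cache. This is an in-memory illustration only, not a recommendation of a particular cache service: `TTLCache`, `run_slow_query`, and the cache key are hypothetical names, and the stand-in query returns a fixed value rather than reading from Bigtable.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_compute(self, key: Hashable, compute: Callable[[], Any]) -> Any:
        now = self._clock()
        hit = self._entries.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # Fresh entry: skip the slow query.
        value = compute()  # Miss or expired: run the query and store the result.
        self._entries[key] = (now, value)
        return value


def run_slow_query() -> int:
    """Hypothetical stand-in for a slow Bigtable read, such as a full row count."""
    run_slow_query.calls += 1
    return 42


run_slow_query.calls = 0

cache = TTLCache(ttl_seconds=30 * 60)  # 30 minutes, as in the text above.
first = cache.get_or_compute("row_count:my-table", run_slow_query)
second = cache.get_or_compute("row_count:my-table", run_slow_query)
print(first, second, run_slow_query.calls)  # the slow query ran only once
```

The trade-off is staleness: with a 30-minute lifetime, a cached result can lag the table by up to 30 minutes, so this fits queries where slightly old answers are acceptable.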

Solve spiky workloads with high-granularity metrics

Applications using Bigtable perform best when traffic is regular or changes slowly. However, given the large scale that Bigtable supports, irregularities may occur and affect performance. One example is spiky traffic, which can be too sudden to scale for and difficult to diagnose.

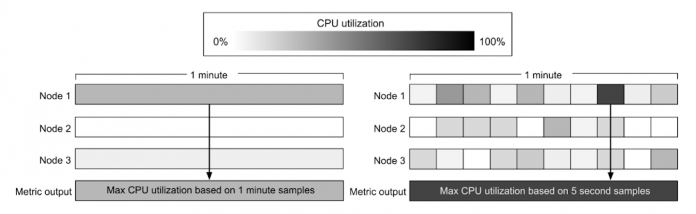

We have introduced new high-granularity metrics for CPU utilization and request count that give, for each minute, the maximum value seen in a five-second span. The visualization above can help you see how these metrics expose spikes.

CPU

The high-granularity max CPU utilization metric lets you recognize spiky workloads that were previously averaged out. If your application experienced unexpected latency while the CPU looked fine, processing spikes might have created hot tablets. Now you can more easily identify hot activity and then use tools like the hot tablet tool and Key Visualizer to diagnose the issue with precision.
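The sketch below illustrates why a one-minute average can hide a short burst that a five-second maximum exposes. It is an illustration of the idea, not a description of how Bigtable computes the metric: it assumes one CPU sample per second and non-overlapping five-second windows, and the sample values are invented.

```python
from statistics import mean
from typing import List


def max_of_window_means(samples: List[float], window: int) -> float:
    """Average each consecutive `window`-sample span, then return the largest average."""
    spans = [samples[i:i + window] for i in range(0, len(samples), window)]
    return max(mean(span) for span in spans)


# Hypothetical per-second CPU utilization (%) for one minute:
# a steady 20% load with a five-second burst at 95%.
cpu = [20.0] * 60
cpu[30:35] = [95.0] * 5

minute_average = mean(cpu)
five_second_max = max_of_window_means(cpu, window=5)

print(f"one-minute average:      {minute_average:.2f}%")   # 26.25%
print(f"max five-second average: {five_second_max:.2f}%")  # 95.00%
```

In this made-up minute the average suggests plenty of headroom, while the five-second maximum shows the burst that could explain a latency blip.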

Request count

Request count is another metric you can use to explain latency changes. With the high-granularity metric, you can see the peaks in your request count and more accurately diagnose the change in latency.

If your bursty traffic is rare, you can add some rate limiting – perhaps a request is being spammed and should be limited regardless of the latency impacts. If this occurrence is more regular, you might need to modify your row key format to spread the load across the database better. Sometimes, your workload will just be a bit spiky, and you won’t be able to change that, but this metric will provide confidence that the services you’re expecting are working as intended.
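As one way to picture the rate-limiting option, here is a minimal client-side token bucket. It is a generic sketch and not a Bigtable feature: `TokenBucket`, the rate, and the capacity are all assumptions for illustration, and a simulated clock keeps the example deterministic.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self._rate = rate
        self._capacity = capacity
        self._clock = clock
        self._tokens = capacity
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill for the elapsed time, never beyond the bucket capacity.
        refill = (now - self._last) * self._rate
        self._tokens = min(self._capacity, self._tokens + refill)
        self._last = now
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False


# Simulated clock so the example does not depend on wall time.
fake_now = [0.0]
bucket = TokenBucket(rate=2.0, capacity=5.0, clock=lambda: fake_now[0])

burst = [bucket.allow() for _ in range(8)]  # 8 requests arrive at the same instant
fake_now[0] += 1.0                          # one second later, 2 tokens have refilled
later = [bucket.allow() for _ in range(3)]

print(burst.count(True), later.count(True))  # 5 2
```

Requests that are refused can be dropped, queued, or retried later, depending on what the application can tolerate.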

Access metadata with table stats

Table stats provide summary information about a Bigtable table. They can be useful when you are troubleshooting issues with performance or when you want to determine the source of storage costs.

Table stats give you a quick, high-level view of how data is being stored, which is especially useful if your company has multiple developers working within one instance. A few of the new metrics provided include logical data in bytes, average number of columns per row, and row count.

The number of columns per row can help you see whether the table is being used more as a key-value store or a wide-table store. Row count can be a useful data point, especially if you have time-series data. Note that this metric isn’t a live data point and is usually delayed by about a week, so you won’t see it on your fresher tables.

Next steps

We’ve looked at a breadth of new metrics for Cloud Bigtable observability in this piece. Use these new tools to build and debug your applications at scale, and check out the existing resources that pair well with them.

Read about another new Bigtable observability tool: client-side metrics. These metrics give you transparency into each layer of latency, so you can determine whether it is coming from the servers or from your own application processing.

Learn about Bigtable performance to ensure your database design isn’t causing high Bigtable server latency and that you’re getting the best usage out of your tools.

Read the best practices for high-performance schema design.

We thank the Google Cloud Bigtable team members who provided support for the blog and these new features: Humberto Díaz Suárez, Software Engineer; Ibrahim Kettaneh, Software Engineer; and Alex O’Neill, Software Engineer.
