Database object validation plays a key role in the database migration process. It’s the process of determining whether all the source database objects have been successfully migrated to the target database by comparing their types and counts. If you skip the validation phase, you may encounter runtime errors due to missing database objects, which can be a blocker for unit testing and code deployments.

In Validate database objects post-migration from Microsoft SQL Server to Amazon RDS for PostgreSQL and Amazon Aurora PostgreSQL we discussed validating migration from Microsoft SQL Server to Amazon Relational Database Service (Amazon RDS) for PostgreSQL and Amazon Aurora PostgreSQL-Compatible Edition. In this post, we discuss database object validations when migrating from Microsoft SQL Server to Amazon RDS for MySQL or Amazon Aurora MySQL-Compatible Edition.

Database object validation

The following list contains the various types of database objects that we validate using their counts and detail-level information, which helps us identify missing or partially migrated database objects. We ignore the system schemas in the source and target databases.

Schemas

Tables

Views

Stored procedures

Functions

Indexes

Triggers

Constraints

Let’s dive deep into each object type and its validation process. We can use SQL Server Management Studio (SSMS) to connect to the Microsoft SQL Server database and MySQL Workbench to connect to our RDS for MySQL or Aurora MySQL database and run the following queries to validate each object.

Schemas

Schemas are used to represent a collection of database objects that serve a related functionality in an application or a microservice. Let’s validate the schemas at the source and target database with the following queries.

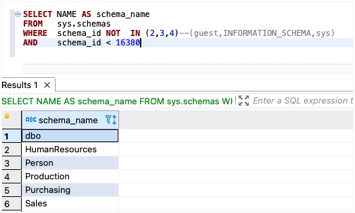

For SQL Server, use the following query:

The following screenshot shows our output.

For Amazon RDS for MySQL or Aurora MySQL, you have the following code option:

Tables

AWS Schema Conversion Tool (AWS SCT) converts source SQL Server tables to the equivalent target (MySQL) tables with appropriate data types and relative table definitions using the default or custom mapping rules applied. The following scripts return the counts and detail-level information for all the tables, assuming the source database doesn’t have any partitioned tables.

For SQL Server, use the following code:

The following screenshot shows our output.

For Amazon RDS for MySQL or Aurora MySQL, use the following code for counts:

The following screenshot shows our output.

Views

You can validate the views count converted by AWS SCT by using the following queries on the source and target databases.

For SQL Server, use the following code:

The following screenshot shows our output.

For Amazon RDS for MySQL or Aurora MySQL, you have the following code option:

The following screenshot shows our output.

Stored procedures

For SQL Server, use the following code for counts:

Use the following code for detail-level information:

The following screenshot shows our output.

For Amazon RDS for MySQL or Aurora MySQL, use the following code for counts:

The following screenshot shows our output.

Use the following query for detail-level information:

The following screenshot shows our output.

Functions

For SQL Server, use the following code for counts:

The following screenshot shows our output.

Use the following code for detail-level information:

The following screenshot shows our output.

For Amazon RDS for MySQL or Aurora MySQL, use the following code for counts:

The following screenshot shows our output.

Use the following code for detail-level information:

The following screenshot shows our output.

Indexes

Indexes play a key role in improving query performance. Because tuning methodologies differ from database to database, the number of indexes and their types vary with SQL Server and MySQL databases based on different use cases, so index counts also may differ.

With the following scripts, you can get the counts of indexes and their types in both SQL Server and MySQL databases.

For SQL Server, use the following code:

The following screenshot shows our output.

For Amazon RDS for MySQL or Aurora MySQL, use the following code for counts:

The following screenshot shows our output.

Use the following code for detail-level information:

Triggers

Triggers help you audit the DML or DDL changes in the database. They can also impact performance based on the usage in appropriate areas. The following queries give you the count and details of triggers for both the source and target databases.

For SQL Server, use the following code for counts:

The following screenshot shows our output.

Use the following code for detail-level information:

The following screenshot shows our output.

For Amazon RDS for MySQL or Aurora MySQL, use the following code for counts:

The following screenshot shows our output.

Use the following code for detail-level information:

These keys should be turned off before performing migration with AWS Database Migration Service (AWS DMS).

Constraints

Along with database object validation, you need to ensure the data is consistent and bound to integrity. Different types of constraints provide you with the flexibility to control and check the data during insertion to avoid runtime data integrity issues.

Primary keys

Primary keys allow you to have unique values for columns, which prevents information from being duplicated, following the normalization process.

This key helps improve the search based on the key values and avoid table scans.

The following queries help you extract the counts and details of primary keys in the source and target databases.

For SQL Server, use the following code for counts:

The following screenshot shows our output.

Use the following code for detail-level information:

The following screenshot shows our output.

For Amazon RDS for MySQL or Aurora MySQL, use the following code for counts:

The following screenshot shows our output.

Use the following for detail-level information:

The following screenshot shows our output.

Foreign keys

Foreign keys help you identify the relations between the tables, which you can use to form database-normalized forms that store relevant data in appropriate tables. These keys should be turned off before performing migration with AWS DMS.

With the following queries, you get the counts and detail-level information about the foreign keys in both the source and target databases.

For SQL Server, use the following code for counts:

The following screenshot shows our output.

Use the following code for detail-level information:

The following screenshot shows our output.

For Amazon RDS for MySQL or Aurora MySQL, use the following code for counts:

The following screenshot shows our output.

Unique keys

Using unique keys restricts the uniqueness of data in the column and prevents duplicate values. You can use this key to avoid data redundancy, which indirectly helps with appropriate data storage and retrieval. With the following queries, you get the counts and detail-level information about the unique keys in both the source and target databases.

For SQL Server, use the following code for counts:

The following screenshot shows our output.

Use the following code for detail-level information:

The following screenshot shows our output.

For Amazon RDS for MySQL or Aurora MySQL, use the following code for counts:

Useful MySQL catalog tables

The following table summarizes a few of the SQL Server and MySQL system tables and views that are useful for database object validation. These tables and views hold the metadata about various database objects and their details, which you can use for the validation.

SQL Server

MySQL

sys.tables/sys.objects/information_Schema

information_schema.tables

sys.views/ sys.objects/information_Schema

information_schema.views

sys.triggers/ sys.objects

information_schema.triggers

sys.indexes

information_schema.STATISTICS

information_schema.table_constraints/sys.objects

sys.objects

sys.objects/sys.procedures

information_schema.routines

Identify and fix the missing objects

As you can see, some of query output doesn’t match between SQL Server and MySQL because AWS SCT doesn’t convert everything. The queries in this post can help you identify missing database objects during migration from the source to the target database. You can use the query results to perform a comparison to identify the gaps in database objects. This narrows down the focus on the missing objects of the migration. You can use the queries iteratively after you fix the missing objects until you achieve a desired state.

Conclusion

Validating database objects is essential to providing database migration accuracy and confirming that all objects have been migrated correctly. Validating all the database objects helps you ensure target database integrity, thereby allowing your application to function as seamlessly as it did on the source database.

In this post, we discussed post-migration validation of database objects. Following the database migration from SQL Server to Amazon RDS for MySQL or Aurora MySQL, we clarified the significance of the validation process and types of database objects validated, boosting the confidence level of the migrated databases. In case of errors, this solution also helps you identify errors and assists you in identifying the missing or unmatched objects post-migration.

If you have any questions or suggestions about this post, leave a comment.

About the authors

Shyam Sunder Rakhecha is a Database Consultant with the Professional Services team at AWS based out of Hyderabad, India and specializes in database migrations. He is helping customers in migration and optimization in AWS cloud. He is curious to explore emerging technology in terms of Databases. He is fascinated with RDBMS and Big Data. He also love to organize team building events and regalement in team.

Pradeepa Kesiraju is a Senior Engagement Manager with the AWS Professional Services team based out of Hyderabad, India. She specializes in managing large scale database migrations to AWS cloud. She brings with her vast experience working on database technologies, that she leverages to identify potential risks and issues proactively. She strives to keep herself up to date with new database technologies and aligning them with customer business outcomes.

Sai Krishna Namburu is a Database Consultant with AWS Professional Services based out of Hyderabad, India. With good knowledge on relational databases adding hands-on in homogenous and heterogenous database migrations, he helps the customers in migrating to AWS cloud and their optimizations. Enthusiastic about new technologies around the databases and process automation areas. Interested in open source technologies, data analytics and ML which enhances the customer outcome.

Read MoreAWS Database Blog