Amazon SageMaker Serverless Inference is an inference option that enables you to easily deploy machine learning (ML) models for inference without having to configure or manage the underlying infrastructure. SageMaker Serverless Inference is ideal for applications with intermittent or unpredictable traffic. In this post, you’ll see how to use SageMaker Serverless Inference to reduce cost when you deploy an ML model as part of the testing phase of your MLOps pipeline.

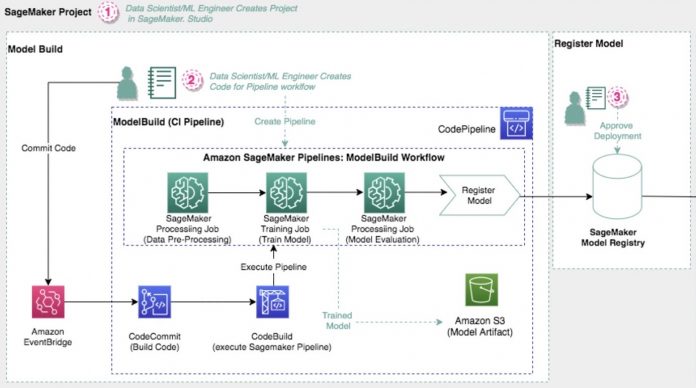

Let’s start with the scenario described in the SageMaker project template called “MLOps template for model building, training, and deployment”. In this scenario, our MLOps pipeline goes through two main phases: model building and training (Figure 1), followed by model testing and deployment (Figure 2).

In Figure 2, you can see that we orchestrate the second half of the pipeline using AWS CodePipeline. We deploy a staging ML endpoint, wait for a manual approval, and then deploy a production ML endpoint.

Let’s say that our staging environment is used inconsistently throughout the day. When we run automated functional tests, we get over 10,000 inferences per minute for 15 minutes. Experience shows that we need an ml.m5.xlarge instance to handle that peak volume. At other times, the staging endpoint is only used interactively, for an average of 50 inferences per hour. Using the on-demand SageMaker pricing information for the us-east-2 Region, the ml.m5.xlarge instance would cost approximately $166 per month. If we switched to a serverless inference endpoint, and our tests can tolerate the cold-start time of serverless inference, then the cost would drop to approximately $91 per month, a savings of about 45%. If you host numerous endpoints, then the total savings will increase accordingly, and a serverless inference endpoint also reduces the operational overhead.

| Item | Value |
| --- | --- |
| Price per second for a 1 GB serverless endpoint | $0.0000200 |
| Number of inferences during peak testing times (1 run per day, 15 minutes per run, 10,000 inferences per minute) | 4,500,000 |
| Number of inferences during steady state use (50 per hour) | 35,625 |
| Total inference time (1 second per inference) | 4,535,625 |
| Total cost (total inference time multiplied by price per second) | 4,535,625 * 0.0000200 = $91 |
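To make the arithmetic easier to adapt to your own traffic pattern, here is a minimal Python sketch of the same estimate. The price, traffic figures, and the $166 instance cost come from this post. The 30-day month, and the assumption that steady-state traffic fills the 23.75 hours per day outside the test run, are our reading of the totals above (they reproduce the figures exactly). The function and constant names are illustrative only.

```python
# Sketch of the monthly cost estimate; constant names are illustrative.
PRICE_PER_SECOND = 0.0000200          # USD, 1 GB serverless endpoint (from the post)
DAYS_PER_MONTH = 30                   # assumption: implied by the totals in the post
PEAK_MINUTES_PER_DAY = 15             # one test run per day
PEAK_INFERENCES_PER_MINUTE = 10_000
STEADY_INFERENCES_PER_HOUR = 50
SECONDS_PER_INFERENCE = 1
INSTANCE_COST_PER_MONTH = 166.0       # USD, ml.m5.xlarge estimate quoted in the post


def estimate_monthly_serverless_cost() -> dict:
    """Return the inference counts, cost, and savings for one month."""
    peak = PEAK_INFERENCES_PER_MINUTE * PEAK_MINUTES_PER_DAY * DAYS_PER_MONTH

    # Assumption: steady-state traffic covers the hours outside the test run.
    steady_hours_per_day = 24 - PEAK_MINUTES_PER_DAY / 60
    steady = round(STEADY_INFERENCES_PER_HOUR * steady_hours_per_day * DAYS_PER_MONTH)

    total_seconds = (peak + steady) * SECONDS_PER_INFERENCE
    cost = total_seconds * PRICE_PER_SECOND
    savings_fraction = (INSTANCE_COST_PER_MONTH - cost) / INSTANCE_COST_PER_MONTH

    return {
        "peak_inferences": peak,
        "steady_inferences": steady,
        "total_seconds": total_seconds,
        "serverless_cost": cost,
        "savings_fraction": savings_fraction,
    }
```

Calling `estimate_monthly_serverless_cost()` and rounding `serverless_cost` gives the monthly figure in the table. Changing the constants lets you check whether serverless inference still wins for a different workload.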

To make it easier for you to try using a serverless inference endpoint for your test and staging environments, we modified the SageMaker project template to use a serverless inference endpoint for the staging environment. Note that it still uses a regular inference endpoint for production environments.

You can try this new custom project template by following the instructions in the GitHub repository, which include setting the sagemaker:studio-visibility tag to true.

Compared to the built-in “MLOps template for model building, training, and deployment”, the new custom template has a few minor changes that let it use a serverless inference endpoint for non-production stages. Most importantly, the AWS CloudFormation template used to deploy endpoints adds a condition tied to the stage and uses that condition to drive the endpoint configuration.

This section of the template sets a condition based on the deployment stage name.

This section of the template lets us specify the allocated memory for the serverless endpoint, as well as the maximum number of concurrent invocations.

In the endpoint configuration, we use the condition to set or unset certain parameters based on the stage. For example, we don’t set an instance type value or use data capture if we use serverless inference, but we do set the serverless memory and concurrency values.
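The stage-dependent logic described above can be sketched in Python. This is not the CloudFormation template from the repository. It is an illustration that builds the same kind of endpoint configuration as a dictionary, using the field names of the SageMaker `CreateEndpointConfig` API. The function name, the `"staging"` stage name, and the default memory, concurrency, and instance values are assumptions chosen for the example.

```python
from typing import Any, Dict, Optional


def build_endpoint_config(
    stage_name: str,
    model_name: str,
    config_name: str,
    serverless_stage: str = "staging",        # hypothetical stage name
    memory_size_in_mb: int = 1024,            # 1 GB, matching the pricing example
    max_concurrency: int = 5,                 # illustrative value
    instance_type: str = "ml.m5.xlarge",
    instance_count: int = 1,
    data_capture_s3_uri: Optional[str] = None,
) -> Dict[str, Any]:
    """Build a CreateEndpointConfig-style request that depends on the stage."""
    use_serverless = stage_name == serverless_stage

    variant: Dict[str, Any] = {"VariantName": "AllTraffic", "ModelName": model_name}
    if use_serverless:
        # Serverless: set memory and concurrency, and leave instance settings unset.
        variant["ServerlessConfig"] = {
            "MemorySizeInMB": memory_size_in_mb,
            "MaxConcurrency": max_concurrency,
        }
    else:
        # Instance-based: set the instance type and count instead.
        variant["InstanceType"] = instance_type
        variant["InitialInstanceCount"] = instance_count

    config: Dict[str, Any] = {
        "EndpointConfigName": config_name,
        "ProductionVariants": [variant],
    }

    # Data capture is only configured for the instance-based endpoint.
    if not use_serverless and data_capture_s3_uri:
        config["DataCaptureConfig"] = {
            "EnableCapture": True,
            "InitialSamplingPercentage": 100,
            "DestinationS3Uri": data_capture_s3_uri,
            "CaptureOptions": [{"CaptureMode": "Input"}, {"CaptureMode": "Output"}],
        }
    return config
```

In the CloudFormation template, the same branching is driven by the condition on the stage name. The sketch shows which settings end up present or absent in each case.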

Once we deploy the custom project template and exercise the deployment CodePipeline pipeline, we can double check that the staging endpoint is a serverless endpoint using the AWS Command Line Interface (AWS CLI).
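If you prefer to script this check rather than use the AWS CLI, the following sketch does the equivalent lookup in Python. It uses boto3 in place of the CLI. It relies on the `describe_endpoint` and `describe_endpoint_config` SageMaker calls and treats an endpoint as serverless when every production variant carries a `ServerlessConfig` block. The function name is ours, and the client is passed in so that the logic can be tested without AWS access.

```python
def is_serverless_endpoint(sagemaker_client, endpoint_name: str) -> bool:
    """Return True if every production variant of the endpoint is serverless.

    `sagemaker_client` is expected to behave like boto3.client("sagemaker").
    """
    # Look up which endpoint configuration the endpoint currently uses.
    endpoint = sagemaker_client.describe_endpoint(EndpointName=endpoint_name)
    config = sagemaker_client.describe_endpoint_config(
        EndpointConfigName=endpoint["EndpointConfigName"]
    )

    # Serverless variants carry a ServerlessConfig block instead of an instance type.
    variants = config.get("ProductionVariants", [])
    return bool(variants) and all("ServerlessConfig" in v for v in variants)
```

With boto3 installed and credentials configured, you could call `is_serverless_endpoint(boto3.client("sagemaker"), "<your-staging-endpoint-name>")`, substituting the name of the staging endpoint that the pipeline created.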

Feature exclusions

Before using a serverless inference endpoint, make sure that you review the list of feature exclusions. Note that, at the time of writing, serverless inference doesn’t support GPUs, AWS Marketplace model packages, private Docker registries, Multi-Model Endpoints, data capture, Model Monitor, or inference pipelines.

Conclusion

In this post, you saw how to use SageMaker Serverless Inference endpoints to reduce the costs of hosting ML models for test and staging environments. You also saw how to use a new custom SageMaker project template to deploy a full MLOps pipeline that uses a serverless inference endpoint for the staging environment. By using serverless inference endpoints, you can reduce costs by avoiding charges for a sporadically used endpoint, and also reduce the operational overhead involved in managing inference endpoints.

Give the new serverless inference endpoints a try using the code linked in this blog, or talk to your AWS solutions architect if you need help making an evaluation.

About the Authors

Randy DeFauw is a Principal Solutions Architect. He’s an electrical engineer by training who’s been working in technology for 23 years at companies ranging from startups to large defense firms. A fascination with distributed consensus systems led him into the big data space, where he discovered a passion for analytics and machine learning. He started using AWS in his Hadoop days, where he saw how easy it was to set up large complex infrastructure, and then realized that the cloud solved some of the challenges he saw with Hadoop. Randy picked up an MBA so he could learn how business leaders think and talk, and found that the soft skill classes were some of the most interesting ones he took. Lately, he’s been dabbling with reinforcement learning as a way to tackle optimization problems, and re-reading Martin Kleppmann’s book on data intensive design.
