At Google Cloud I/O, we announced the public preview of Cloud Run jobs. Unlike Cloud Run services, which run continuously to respond to web requests or events, Cloud Run jobs run code that performs some work and quits when the work is done. Cloud Run jobs are a good fit for administrative tasks such as database migration, scheduled work such as nightly reports, and batch data transformation.

In this post, I show you a fully serverless, event-driven application to take screenshots of web pages, powered by Cloud Run jobs, Workflows, and Eventarc.

Architecture overview

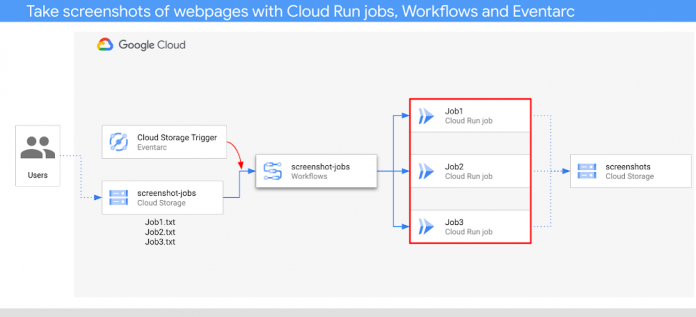

Here’s an architectural overview of the application:

1. A user uploads a job (a text file with a list of URLs) to a Cloud Storage bucket.
2. This triggers a Cloud Storage event that gets picked up by Eventarc and forwarded to Workflows.
3. Workflows extracts the file from the event and parses the URLs from the file.
4. Workflows creates a new Cloud Run job with a unique task (container) for each URL.
5. Workflows runs the job and waits for all of its tasks to complete.
6. Each task of the job captures a screenshot of its URL and saves it to the Cloud Storage bucket.
7. If all the tasks of the job are successful, Workflows deletes the job text file and the job. If not, Workflows keeps the job for post-mortem analysis.

In this application, Cloud Run jobs captures screenshots of web pages in parallel tasks, Workflows manages the lifecycle of Cloud Run jobs, and Eventarc makes the application event-driven by forwarding Cloud Storage events to Workflows.

Screenshot application with Cloud Run jobs

Caution: Only use this application to screenshot web pages that you trust.

The application is written in Node.js (source code). It has a main function that receives a list of URLs. Each task (container) reads the CLOUD_RUN_TASK_INDEX (passed by Cloud Run jobs) environment variable to determine which URL it needs to process from the list. It also reads the BUCKET_NAME environment variable, so it knows where to store its screenshot:

```js
async function main(urls) {
  console.log(`Passed in urls: ${urls}`);

  const taskIndex = process.env.CLOUD_RUN_TASK_INDEX || 0;
  const url = urls[taskIndex];
  if (!url) {
    throw new Error(
      `No url found for task ${taskIndex}. Ensure at least ${
        parseInt(taskIndex, 10) + 1
      } url(s) have been specified as command args.`
    );
  }
  const bucketName = process.env.BUCKET_NAME;
  if (!bucketName) {
    throw new Error(
      "No bucket name specified. Set the BUCKET_NAME env var to specify which Cloud Storage bucket the screenshot will be uploaded to."
    );
  }
```
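The index lookup can also be isolated into a small helper. The following is a minimal sketch, not the application's actual code: `selectUrlForTask` is a hypothetical name, and the environment is passed in as a parameter so the function can be exercised without Cloud Run. Environment variables always arrive as strings, so the sketch parses the index explicitly.

```javascript
// Hypothetical helper (not in the original source): pick the URL for this task.
function selectUrlForTask(urls, env = process.env) {
  // CLOUD_RUN_TASK_INDEX is a string when set; default to task 0 when unset.
  const taskIndex = parseInt(env.CLOUD_RUN_TASK_INDEX ?? "0", 10);
  if (Number.isNaN(taskIndex) || taskIndex < 0) {
    throw new Error(`Invalid CLOUD_RUN_TASK_INDEX: ${env.CLOUD_RUN_TASK_INDEX}`);
  }
  const url = urls[taskIndex];
  if (!url) {
    throw new Error(`No url found for task ${taskIndex}.`);
  }
  return { taskIndex, url };
}

// Local run simulating the second task of a three-task job.
const picked = selectUrlForTask(
  ["https://example.com", "https://cloud.google.com", "https://web.dev"],
  { CLOUD_RUN_TASK_INDEX: "1" }
);
console.log(picked);
```

Passing the environment as an argument is only a convenience for local testing; inside a real task the default `process.env` would be used.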

It uses the Puppeteer library to take a screenshot of the web page:

```js
  const browser = await initBrowser();
  const imageBuffer = await takeScreenshot(browser, url).catch(async (err) => {
    // Make sure to close the browser if we hit an error.
    await browser.close();
    throw err;
  });
  await browser.close();
```
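The same cleanup guarantee can be written with `try`/`finally`, which closes the browser on both the success path and the error path in one place. This is a sketch of an alternative, not the application's code: `withBrowser` is a hypothetical helper, and the launcher and the work function are passed in as parameters. A stub stands in for Puppeteer below so the snippet runs on its own.

```javascript
// Hypothetical helper: run `work` with a browser and always close it afterwards.
async function withBrowser(launch, work) {
  const browser = await launch();
  try {
    return await work(browser);
  } finally {
    // Runs whether `work` resolved or threw.
    await browser.close();
  }
}

// Stub standing in for a real browser so the sketch is self-contained.
function makeFakeBrowser(log) {
  return { close: async () => log.push("closed") };
}

async function demo() {
  const log = [];
  // Success path: the result of `work` is returned after the browser closes.
  const result = await withBrowser(
    async () => makeFakeBrowser(log),
    async () => "image-bytes"
  );
  // Error path: the browser is still closed, then the error propagates.
  const failure = await withBrowser(
    async () => makeFakeBrowser(log),
    async () => {
      throw new Error("navigation failed");
    }
  ).catch((err) => err.message);
  return { result, failure, log };
}

demo().then((out) => console.log(out));
```

With the application's own helpers, the call would read roughly `withBrowser(initBrowser, (browser) => takeScreenshot(browser, url))`.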

Once the screenshot is captured, the app saves it to the Cloud Storage bucket:

```js
  console.log("Initializing Cloud Storage client");
  const storage = new Storage();
  const bucket = await createStorageBucketIfMissing(storage, bucketName);
  await uploadImage(bucket, taskIndex, imageBuffer);
  console.log("Upload complete!");
```

Note that there’s no server to handle HTTP requests, as it’s not needed. There is simply a containerized application (see Dockerfile) that Workflows creates and executes as a task of a Cloud Run job.

Orchestration with Workflows

Workflows is used to manage the lifecycle of Cloud Run jobs (see workflow.yaml).

Workflows receives the Cloud Storage event from Eventarc and parses out the URLs from the file:

```yaml
main:
  params: [event]
  steps:
    - init:
        assign:
          - bucket: ${event.data.bucket}
          - name: ${event.data.name}
          # ...
    - read_from_gcs:
        call: http.get
        args:
          url: ${"https://storage.googleapis.com/download/storage/v1/b/" + bucket + "/o/" + name}
          auth:
            type: OAuth2
          query:
            alt: media
        result: file_content
    - prepare_job_args:
        assign:
          - urls: ${text.split(file_content.body, "\n")}
```
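To make the parsing step concrete, here is a rough Node.js equivalent of what the workflow does with the event. It is only an illustration: the function names and the sample bucket and file names are hypothetical, and the trimming and blank-line filtering are additions that the workflow step does not perform. Two details motivate the sketch. In JavaScript, a job file that ends with a newline yields a trailing empty entry when split on `\n`; if the workflow's split behaves the same way, the job would get one task more than there are URLs. Also, the Cloud Storage JSON API expects the object name in the URL path to be percent-encoded, which matters for names that contain `/` or spaces.

```javascript
// Hypothetical helpers mirroring the read_from_gcs and prepare_job_args steps.

// Build the JSON API download URL; the object name must be percent-encoded.
function buildDownloadUrl(bucket, name) {
  return (
    "https://storage.googleapis.com/download/storage/v1/b/" +
    encodeURIComponent(bucket) +
    "/o/" +
    encodeURIComponent(name) +
    "?alt=media"
  );
}

// Split the job file into URLs, dropping blank lines and stray whitespace.
function parseUrls(fileContent) {
  return fileContent
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line.length > 0);
}

// Minimal event with only the fields the workflow reads
// (event.data.bucket and event.data.name); the values are made up.
const event = { data: { bucket: "my-jobs-bucket", name: "jobs/job1.txt" } };
const downloadUrl = buildDownloadUrl(event.data.bucket, event.data.name);
const urls = parseUrls("https://example.com\nhttps://cloud.google.com\n");
console.log(downloadUrl);
console.log(urls);
```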

Then, it creates a Cloud Run job with the list of URLs. Note that taskCount is set to the number of URLs. This ensures that each URL is processed in a separate task (container) in parallel:

```yaml
    - create_job:
        call: googleapis.run.v1.namespaces.jobs.create
        args:
          location: ${location}
          parent: ${"namespaces/" + project_id}
          body:
            apiVersion: run.googleapis.com/v1
            metadata:
              name: ${job_name}
              labels:
                cloud.googleapis.com/location: ${location}
              annotations:
                run.googleapis.com/launch-stage: ALPHA
            kind: "Job"
            spec:
              template:
                spec:
                  taskCount: ${len(urls)}
                  template:
                    spec:
                      containers:
                        - image: ${job_container}
                          args: ${urls}
                          env:
                            - name: BUCKET_NAME
                              value: ${bucket_output}
                      timeoutSeconds: 300
                      serviceAccountName: ${"screenshot-sa@" + project_id + ".iam.gserviceaccount.com"}
        result: create_job_result
```
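The shape of the request body may be easier to see as a plain object. The sketch below rebuilds the same structure in Node.js purely for illustration; `buildJobBody`, its parameter names, and the sample values are hypothetical, and the workflow itself sends this body through the Workflows connector rather than through code like this. The point it demonstrates is that `taskCount` is derived from the URL list, so the number of tasks and the number of container arguments cannot drift apart.

```javascript
// Hypothetical builder for the Cloud Run job body used in the create_job step.
function buildJobBody({ jobName, location, projectId, image, urls, bucketOutput }) {
  return {
    apiVersion: "run.googleapis.com/v1",
    kind: "Job",
    metadata: {
      name: jobName,
      labels: { "cloud.googleapis.com/location": location },
      annotations: { "run.googleapis.com/launch-stage": "ALPHA" },
    },
    spec: {
      template: {
        spec: {
          // One task per URL; each task selects its URL by task index.
          taskCount: urls.length,
          template: {
            spec: {
              containers: [
                {
                  image,
                  args: urls,
                  env: [{ name: "BUCKET_NAME", value: bucketOutput }],
                },
              ],
              timeoutSeconds: 300,
              serviceAccountName: `screenshot-sa@${projectId}.iam.gserviceaccount.com`,
            },
          },
        },
      },
    },
  };
}

// Sample values are placeholders, not taken from the original post.
const body = buildJobBody({
  jobName: "screenshot-job-1",
  location: "us-central1",
  projectId: "my-project",
  image: "gcr.io/my-project/screenshot",
  urls: ["https://example.com", "https://cloud.google.com"],
  bucketOutput: "my-output-bucket",
});
console.log(JSON.stringify(body, null, 2));
```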

Once the job is created, Workflows executes the job:

```yaml
    - run_job:
        call: googleapis.run.v1.namespaces.jobs.run
        args:
          location: ${location}
          name: ${"namespaces/" + project_id + "/jobs/" + job_name}
        result: run_job_result
```

If everything is successful, the job and the job text file are deleted:

```yaml
    - delete_job:
        call: googleapis.run.v1.namespaces.jobs.delete
        args:
          location: ${location}
          name: ${"namespaces/" + project_id + "/jobs/" + job_name}
        result: delete_job_result
    - delete_from_gcs:
        call: googleapis.storage.v1.objects.delete
        args:
          bucket: ${bucket}
          object: ${name}
```

The output of the workflow is the list of processed URLs:

```yaml
    - return_result:
        return:
          processed_urls: ${urls}
```

Trigger with Eventarc

The last step is to execute the workflow when there’s a new job request. A new job request is simply a new text file in a Cloud Storage bucket. This event is captured by Eventarc and forwarded to the workflow with a Cloud Storage trigger:

```sh
gcloud eventarc triggers create screenshot-jobs-trigger \
  --location=us \
  --destination-workflow=$WORKFLOW_NAME \
  --destination-workflow-location=$REGION \
  --event-filters="type=google.cloud.storage.object.v1.finalized" \
  --event-filters="bucket=$BUCKET" \
  --service-account=screenshot-sa@$PROJECT_ID.iam.gserviceaccount.com
```

Try it out

To try out the application, upload some job files (job1.txt, job2.txt) to the bucket:

```sh
gsutil cp job1.txt gs://$BUCKET
gsutil cp job2.txt gs://$BUCKET
```

Each file should trigger a new Workflows execution.

Each Workflows execution should in turn trigger a new Cloud Run job with a unique task for each URL, running in parallel.

After a few seconds, you should see a new output bucket with screenshots of the web pages.

Cloud Run jobs is a new way of running code that performs some work and quits when the work is done. This blog post demonstrated how to use Cloud Run jobs, Workflows, and Eventarc to create a fully serverless, event-driven application to take screenshots of web pages. As always, feel free to reach out to me on Twitter @meteatamel for any questions or feedback.
