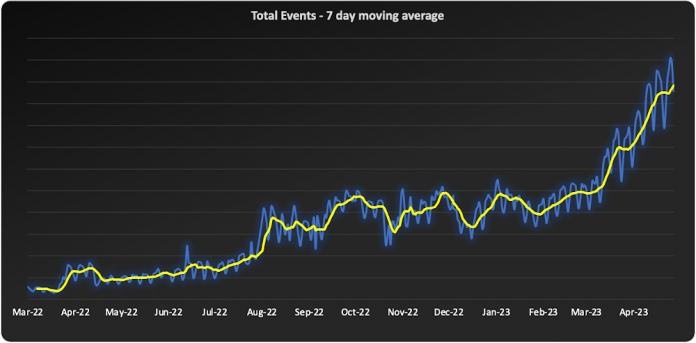

Statsig is a modern feature management and experimentation platform used by hundreds of organizations. Statsig’s end-to-end product analytics platform simplifies and accelerates experimentation with integrated feature gates (flags), custom metrics, real-time analytics tools, and more, enabling data-driven decision-making and confident feature releases. Companies send their real-time event-stream data to Statsig’s data platform. On average, that adds up to more than 30 billion events a day, and the volume has been growing 30-40% month over month.

With these fast-growing event volumes, our Spark-based data processing regularly ran into performance issues, pipeline bottlenecks, and storage limits, which in turn hurt the team’s ability to deliver product insights on time. The constant Spark tuning made it hard to keep up with our feature backlog. Instead of building new features for our customers, our engineering team was busy trying to contain steep increases in Spark runtimes and cloud costs.

Adopting BigQuery pulled us out of Spark’s recurring spiral of re-architecture and into a Data Cloud, enabling us to focus on our customers and develop new features to help them run scalable experimentation programs.

The growing data dilemma

At Statsig, our processing volumes were growing rapidly. The assumptions and optimizations we made one month would become irrelevant the next. While our team was knowledgeable in Spark performance tuning, the benefits of each change were short-lived and became obsolete almost as quickly as they were implemented. As the months passed, our data teams were dedicating more time to optimizing and tuning Spark clusters instead of building new data products and features. We knew we needed to change our data cloud strategy.
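To see why a month-old optimization went stale so quickly, it helps to compound the growth figures quoted at the top of this post. The sketch below assumes the 30-40% month-over-month rate holds steady and starts from roughly 30 billion events a day; both are simplifications, and the function names are illustrative.

```python
import math


def doubling_time_months(monthly_growth: float) -> float:
    """Months for volume to double at a constant month-over-month growth rate."""
    return math.log(2) / math.log(1 + monthly_growth)


def projected_daily_events(start_billions: float, monthly_growth: float, months: int) -> float:
    """Compound a starting daily event volume forward by `months` months."""
    return start_billions * (1 + monthly_growth) ** months


# Assumption: growth stays constant at the low and high ends of the quoted range.
for rate in (0.30, 0.40):
    print(
        f"{rate:.0%} growth: volume doubles every {doubling_time_months(rate):.1f} months; "
        f"~{projected_daily_events(30, rate, 12):,.0f}B events/day after a year"
    )
```

At those rates the daily volume doubles roughly every 2.1 to 2.6 months and grows about 23x to 57x in a year, so a cluster sized and tuned for one month's load is handling more than twice that load within a quarter.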

We started seeking advice from companies and startups that had faced similar data scaling challenges, and the resounding recommendation was “Go with BigQuery.” Initially, our team was reluctant: for us, a BigQuery migration meant adopting an entirely new data warehouse and a cross-cloud migration to GCP.

However, during a company-wide hackathon, a pair of engineers decided to test BigQuery with our most resource-intensive job. Shockingly, this unoptimized job finished much faster and at a lower cost than our finely tuned Spark setup. This outcome made it impossible for us to ignore a BigQuery migration any longer. We set out to learn more.

Investigating BigQuery

When we started our BigQuery journey, the first and obvious thing we had to understand was how to actually run jobs. With Spark, we were accustomed to daunting configurations, mapping concepts like executors back to virtual machines, and creating orchestration pipelines for all the tracking and variations required.

When we approached running jobs on BigQuery, we were surprised to learn that the only thing we needed to configure was the number of slots (BigQuery's units of compute capacity) allocated to the project. Suddenly, there were no longer hundreds of settings affecting query performance. Even before we ran our first migration test, BigQuery had eliminated a long, expensive, and tedious list of tuning tasks our team would typically need to complete.

Digging deeper, we learned about additional serverless optimizations that BigQuery offers out of the box and that addressed many of the issues we had with Spark. For example, with Spark we often had a single task get stuck because virtual machines were lost or the right VM shape wasn't available. With BigQuery’s autoscaling, SQL jobs are defined much more granularly, and resources can move between multiple jobs as needed. As another example, we sometimes ran into storage issues with Spark because shuffled data overwhelmed a machine’s disk. BigQuery uses a separate in-memory shuffle service, so our team no longer has to predict and size shuffle disks.
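The idea of moving resources between jobs can be illustrated with a simplified model: a fixed pool of slots divided among concurrent jobs using max-min fairness, where small jobs get everything they ask for and the remainder is split evenly among larger ones. This is a sketch of the general technique, not a description of BigQuery's internal scheduler; the function name and job names are hypothetical.

```python
def fair_share(total_slots: int, demands: dict[str, int]) -> dict[str, int]:
    """Max-min fair allocation of a fixed slot pool across concurrent jobs.

    Jobs that need less than an equal share get exactly what they need; the
    leftover is redistributed among the jobs that still want more.
    """
    allocation = {job: 0 for job in demands}
    remaining = total_slots
    unsatisfied = {job for job, demand in demands.items() if demand > 0}
    while remaining > 0 and unsatisfied:
        share = remaining // len(unsatisfied)
        if share == 0:
            # Fewer slots than hungry jobs: hand out the last slots one at a time.
            for job in sorted(unsatisfied)[:remaining]:
                allocation[job] += 1
            break
        for job in sorted(unsatisfied):
            grant = min(share, demands[job] - allocation[job])
            allocation[job] += grant
            remaining -= grant
        unsatisfied = {job for job in unsatisfied if allocation[job] < demands[job]}
    return allocation


# Three concurrent jobs share 100 slots; the small job is fully served.
print(fair_share(100, {"small": 10, "medium": 50, "large": 80}))
# {'small': 10, 'medium': 45, 'large': 45}

# When "large" finishes, its slots return to the pool and "medium" is fully served.
print(fair_share(100, {"small": 10, "medium": 50}))
# {'small': 10, 'medium': 50}
```

In this model the allocation is recomputed whenever jobs start or finish, so capacity is never tied to a particular machine.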

At this point, it was clear that the migration away from the DevOps of Spark and into the serverless BigQuery architecture would be worth the effort.

Spark to BigQuery migration

When migrating our pipelines, we ran into situations where we had to rewrite large blocks of code, which made it easy to introduce new bugs. We needed a way to stage the migration incrementally without committing to huge rewrites up front. Dataproc is a very useful tool for this purpose: it provides a simple yet flexible API to spin up Spark clusters and gives us access to the full range of configurations and optimizations we were accustomed to from our previous Spark deployments.
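A Dataproc cluster spec can carry existing Spark settings over unchanged, so a job that hasn't been rewritten yet can keep its tuning while it runs on GCP. A minimal sketch in the shape of the public Dataproc Python client quickstart (`google-cloud-dataproc`); the project, cluster name, machine types, instance counts, and Spark property values are placeholders, not Statsig's configuration.

```python
def build_cluster_config(project_id: str, cluster_name: str, spark_properties: dict[str, str]) -> dict:
    """Cluster spec in the dict form accepted by the Dataproc Python client."""
    return {
        "project_id": project_id,
        "cluster_name": cluster_name,
        "config": {
            # Placeholder sizing; real values depend on the workload.
            "master_config": {"num_instances": 1, "machine_type_uri": "n1-standard-4"},
            "worker_config": {"num_instances": 4, "machine_type_uri": "n1-standard-4"},
            # Dataproc cluster properties use a "spark:" prefix for spark-defaults.conf keys.
            "software_config": {
                "properties": {f"spark:{key}": value for key, value in spark_properties.items()}
            },
        },
    }


def create_cluster(project_id: str, region: str, cluster: dict):
    """Submit the spec; requires google-cloud-dataproc and credentials."""
    from google.cloud import dataproc_v1 as dataproc  # imported lazily so the builder runs anywhere

    client = dataproc.ClusterControllerClient(
        client_options={"api_endpoint": f"{region}-dataproc.googleapis.com:443"}
    )
    operation = client.create_cluster(
        request={"project_id": project_id, "region": region, "cluster": cluster}
    )
    return operation.result()  # blocks until the cluster is running


if __name__ == "__main__":
    spec = build_cluster_config(
        "my-project",
        "migration-staging",
        {"spark.executor.memory": "8g", "spark.sql.shuffle.partitions": "400"},
    )
    print(spec["config"]["software_config"]["properties"])
```

The helper adds the `spark:` prefix to each key because that prefix is how Dataproc routes a cluster property into `spark-defaults.conf`.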

Additionally, BigQuery offers a direct Spark integration through stored procedures with Apache Spark, which provides a fully managed and serverless Spark experience native to BigQuery and allows you to call Spark code directly from BigQuery SQL. It can be configured as part of the BigQuery autoscaler and called from any orchestration tool that can execute SQL, such as dbt.
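A stored procedure for Apache Spark is created with BigQuery DDL that names a Spark connection and embeds the PySpark code, and it is then invoked with a plain `CALL` statement. A minimal sketch that builds both statements in Python; the project, dataset, connection, and table names are hypothetical, and the only option set is `engine="SPARK"`, so check the current documentation for any other required `OPTIONS`.

```python
def spark_procedure_ddl(procedure: str, connection: str, pyspark_body: str) -> str:
    """Build a CREATE PROCEDURE statement for a stored procedure for Apache Spark."""
    return (
        f"CREATE OR REPLACE PROCEDURE `{procedure}`()\n"
        f"WITH CONNECTION `{connection}`\n"
        'OPTIONS (engine="SPARK")\n'
        f'LANGUAGE PYTHON AS R"""\n{pyspark_body}\n"""'
    )


def call_statement(procedure: str) -> str:
    """Plain SQL that any SQL-capable orchestrator can issue to run the procedure."""
    return f"CALL `{procedure}`();"


def run_sql(sql: str) -> None:
    """Execute a statement; requires google-cloud-bigquery and credentials."""
    from google.cloud import bigquery  # imported lazily so the builders above run anywhere

    bigquery.Client().query(sql).result()


# Hypothetical PySpark job body that reads a BigQuery table through the Spark connector.
PYSPARK_BODY = """\
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("event-counts").getOrCreate()
events = spark.read.format("bigquery").option("table", "my-project.analytics.events").load()
events.groupBy("event_name").count().show()"""

if __name__ == "__main__":
    print(spark_procedure_ddl("my-project.analytics.event_counts", "my-project.us.spark-conn", PYSPARK_BODY))
    print(call_statement("my-project.analytics.event_counts"))
```

Because the invocation is just SQL, a scheduler such as dbt can run Spark logic and BigQuery SQL side by side without a separate Spark submission path.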

This ability to mix and match BigQuery SQL and multiple options for Spark gave us the flexibility to move to BigQuery immediately but roll out the entire migration on our timeline.
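The post doesn't describe how rewritten pipelines were validated. One common safeguard during a staged migration is a parity check: run the legacy and migrated implementations on the same input and diff their outputs before switching over. A minimal in-memory sketch of that technique; in practice each side would be a Spark job or a BigQuery query writing to a table, and every function name here is hypothetical.

```python
from collections import Counter
from typing import Callable, Iterable


def compare_outputs(
    legacy: Callable[[list[dict]], Iterable[tuple]],
    migrated: Callable[[list[dict]], Iterable[tuple]],
    events: list[dict],
) -> tuple[Counter, Counter]:
    """Run both implementations on the same input and return rows unique to each.

    Rows are compared as multisets, so ordering differences are ignored but
    missing or duplicated rows are still reported.
    """
    legacy_rows = Counter(legacy(events))
    migrated_rows = Counter(migrated(events))
    return legacy_rows - migrated_rows, migrated_rows - legacy_rows


def legacy_counts(events: list[dict]) -> Iterable[tuple]:
    """Stand-in for the old pipeline: event counts per name, built by hand."""
    counts: dict[str, int] = {}
    for event in events:
        counts[event["name"]] = counts.get(event["name"], 0) + 1
    return counts.items()


def migrated_counts(events: list[dict]) -> Iterable[tuple]:
    """Stand-in for the rewritten pipeline (e.g. a GROUP BY in SQL)."""
    return Counter(event["name"] for event in events).items()


events = [{"name": "click"}, {"name": "click"}, {"name": "view"}]
only_legacy, only_migrated = compare_outputs(legacy_counts, migrated_counts, events)
print("parity OK" if not (only_legacy or only_migrated) else (only_legacy, only_migrated))
```

Returning the differing rows, rather than a single boolean, makes it quicker to see which part of a rewrite introduced a bug.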

With BigQuery, we’re back to building features

With BigQuery, we were able to tap into performance improvements and direct cost savings, and we saw lower error rates in our data pipelines. However, what really changed our business was that BigQuery unlocked new real-time features we didn’t have before. A couple of examples are:

1. Fresh, fast data results

On our Spark cluster, we had to pre-compute tens of thousands of possible results each day in case a customer wanted to look at a specific detail. Only a small percentage of those results were viewed on any given day, but we couldn’t predict which ones would be needed, so we had to pre-compute them all. With BigQuery, queries run fast enough that we now compute specific results when customers request them. For us, that means avoiding expensive pre-compute jobs; for our customers, it means fresher data.
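The post doesn't say whether on-demand results are reused between views. A minimal sketch of one reasonable design, assuming a short time-to-live (TTL) cache: each result is computed only when someone first asks for it, repeat views within the TTL reuse it, and staleness is bounded by the TTL. `metric_summary` is a hypothetical placeholder for an on-demand BigQuery query, and the five-minute TTL is an arbitrary example.

```python
import time
from typing import Callable, Hashable


class TTLCache:
    """Compute a result the first time it is requested and reuse it for `ttl_seconds`.

    Nothing is precomputed: a key that is never requested never costs anything.
    """

    def __init__(
        self,
        compute: Callable[..., object],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._compute = compute
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[Hashable, tuple[float, object]] = {}

    def get(self, *key: Hashable) -> object:
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]  # fresh enough: reuse without re-running the query
        value = self._compute(*key)  # first request or expired: compute on demand
        self._entries[key] = (now, value)
        return value


# Hypothetical stand-in for an on-demand warehouse query; records each real run.
calls: list[tuple[str, str]] = []


def metric_summary(experiment: str, metric: str) -> float:
    calls.append((experiment, metric))
    return 0.0  # placeholder result


fake_now = [0.0]  # injectable clock so the example is deterministic
cache = TTLCache(metric_summary, ttl_seconds=300, clock=lambda: fake_now[0])
cache.get("checkout_test", "revenue")  # computed on first view
cache.get("checkout_test", "revenue")  # reused within five minutes
fake_now[0] = 301.0
cache.get("checkout_test", "revenue")  # expired, so it is recomputed
print(len(calls))  # 2
```

The TTL is the knob that trades freshness against cost: a shorter TTL re-runs queries more often but keeps results closer to real time.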

2. Real-time decision features

Since our migration to BigQuery began, we have rolled out several new features powered by BigQuery’s ability to compute things in near real-time, enhancing our customers’ ability to make real-time decisions.

1) A metrics explorer that lets our customers query their metric data in real-time.

2) A deep dive experience that lets our customers instantly dig into a specific user’s details instead of waiting on a 15-minute Spark job to process.

3) A warehouse-native solution that lets our customers use their own BigQuery project to run analysis.
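A per-user deep dive like the one described above boils down to a narrow, parameterized lookup that runs when the customer opens the page rather than on a schedule. A minimal sketch using the BigQuery Python client's named query parameters; the table, columns, seven-day lookback, and row limit are hypothetical, not Statsig's schema. Passing `user_id` as a parameter instead of formatting it into the SQL string also keeps user input out of the query text.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical table and columns; @user_id and @since are BigQuery named query parameters.
DEEP_DIVE_SQL = """
SELECT event_name, event_time
FROM `my-project.analytics.events`
WHERE user_id = @user_id
  AND event_time >= @since
ORDER BY event_time DESC
LIMIT 100
"""


def deep_dive_params(user_id: str, lookback: timedelta, now: datetime) -> list[tuple[str, str, object]]:
    """Return (name, BigQuery type, value) triples for the query's named parameters."""
    return [("user_id", "STRING", user_id), ("since", "TIMESTAMP", now - lookback)]


def run_deep_dive(user_id: str, lookback: timedelta = timedelta(days=7)) -> list:
    """Run the lookup on demand; requires google-cloud-bigquery and credentials."""
    from google.cloud import bigquery  # imported lazily so the helpers above run anywhere

    params = deep_dive_params(user_id, lookback, datetime.now(timezone.utc))
    job_config = bigquery.QueryJobConfig(
        query_parameters=[
            bigquery.ScalarQueryParameter(name, type_, value) for name, type_, value in params
        ]
    )
    return list(bigquery.Client().query(DEEP_DIVE_SQL, job_config=job_config).result())
```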

Migrating from Spark to BigQuery has simplified many of our workflows and saved us significant money. But equally importantly, it has made it easier to work with massive data, reduced the strain on our perpetually stretched-thin data team, and allowed us to build awesome products faster for our customers.

Getting started with BigQuery

There are a few ways to get started with BigQuery. New customers get $300 in free credits to spend on BigQuery. All customers get 10 GB of storage and up to 1 TB of queries free per month, not charged against their credits. You can get these credits by signing up for the BigQuery free trial. Not ready yet? You can use the BigQuery sandbox without a credit card to see how it works.
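A practical way to stay within the free query allowance is to dry-run a query first: BigQuery then reports how many bytes the query would process without running it. A minimal sketch with the Python client; treating "1 TB" as 2^40 bytes is an assumption, so check the current pricing page.

```python
# Assumption: the free monthly query allowance is measured in binary units (1 TiB = 2**40 bytes).
FREE_QUERY_BYTES_PER_MONTH = 2**40


def free_tier_share(bytes_processed: int) -> float:
    """Fraction of the monthly free query allowance a query would use."""
    return bytes_processed / FREE_QUERY_BYTES_PER_MONTH


def dry_run_bytes(sql: str) -> int:
    """Ask BigQuery how many bytes a query would scan without running it.

    Requires google-cloud-bigquery and credentials (a sandbox project works).
    """
    from google.cloud import bigquery  # imported lazily so free_tier_share runs anywhere

    job_config = bigquery.QueryJobConfig(dry_run=True, use_query_cache=False)
    job = bigquery.Client().query(sql, job_config=job_config)
    return job.total_bytes_processed


if __name__ == "__main__":
    # Example with a hypothetical 50 GiB scan.
    print(f"{free_tier_share(50 * 2**30):.2%} of the monthly allowance")
```

With credentials configured, `free_tier_share(dry_run_bytes("SELECT word FROM `bigquery-public-data.samples.shakespeare`"))` would estimate how much of the allowance a query against a public dataset uses before you run it for real.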

The Built with BigQuery advantage for ISVs

Google is helping tech companies like Statsig build innovative applications on Google’s data cloud with simplified access to technology, helpful and dedicated engineering support, and joint go-to-market programs through the Built with BigQuery initiative.

More on Statsig

Companies want to understand the impact of their new features on the business, test different options, and identify optimal configurations that balance customer success with business impact. But running these experiments is hard. Simple mistakes can lead to wrong decisions, and a lack of standardization can make results hard to compare and reduce user trust. When implemented poorly, an experimentation program can become a bottleneck, causing feature releases to slow down or, in some cases, causing teams to skip experimentation altogether. Statsig provides an end-to-end product experimentation and analytics platform that makes it simple for companies to leverage best-in-class experimentation tooling.

If you’re looking to improve your company’s experimentation process, visit statsig.com and explore what our platform can offer. We have a generous free tier and a community of experts on Slack to help ensure your efforts are successful. Boost your business’s growth with Statsig and unlock the full potential of your data.
