In-memory databases are ideal for applications that require microsecond response times and high throughput, such as caching, gaming, session stores, geospatial services, queuing, real-time data analytics, and feature stores for machine learning (ML). In this In-Memory Database Bluebook, we provide a list of Amazon ElastiCache and Amazon MemoryDB for Redis code samples and projects, available in our GitHub repo, that offer prescriptive solutions to specific customer problems and requirements. These sample projects cover caching, real-time database monitoring, session stores, conversational chatbots, benchmarking tools for optimizing performance, online feature stores for low-latency ML model inference, and more. The core purpose of these artifacts is to give you a head start and make it simpler to get started with our services. Let’s dive in and review some of our solutions.

Caching solutions using ElastiCache

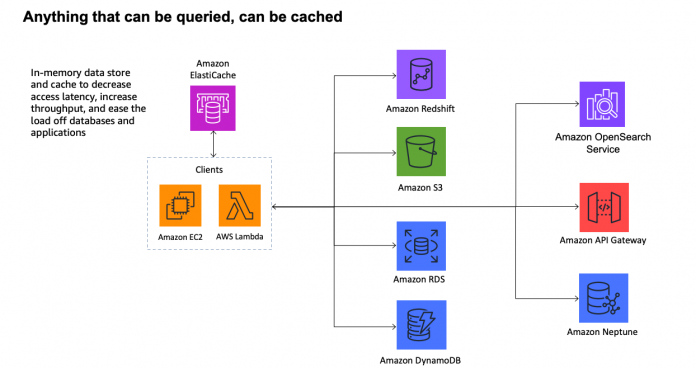

A cache is a high-speed data access layer primarily used to improve system performance, reduce latency, and minimize the load on primary data sources such as databases, storage devices, or remote servers. Caching works by storing a subset of frequently accessed data or query results in a high-speed, low-latency storage area. This allows workloads to quickly retrieve and serve this data without accessing the slower primary data store, which is often disk-based. Caching is an effective way to scale your applications at a significantly lower cost than scaling the primary data store alone.

The following diagram shows an example architecture.

Implementing caching is a valuable approach to cost-effectively improve the performance and scalability of your applications that rely on databases. ElastiCache is a fully managed, in-memory data store service that can be used to cache frequently accessed data, reducing the load on your primary database and speeding up data retrieval. To get started, refer to Implementing database caching using Amazon ElastiCache.

Use ElastiCache for Redis on top of a MySQL database

The cache-aside strategy, also known as lazy loading, is one of the most popular options for cost-effectively boosting database performance. When an application needs to read data from a database, it first queries the cache. If the data is not found, the application then queries the database and populates the cache with the result. The following diagram illustrates this architecture.

In the following database caching tutorial, you will learn how to boost the performance of your MySQL database, and reduce the burden on your underlying data sources, by adding an in-memory caching layer using Amazon ElastiCache.
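To make the lazy-loading flow concrete, here is a minimal Python sketch of a cache-aside read. It assumes a redis-py style client (`get`, and `set` with `ex`) and a DB-API 2.0 MySQL connection such as one from PyMySQL. Deriving keys by hashing the SQL text and parameters, the 300-second TTL, and the function names are illustrative assumptions, not details taken from the tutorial.

```python
import hashlib
import json


def cache_key(sql, params):
    """Build a deterministic cache key from the SQL text and its parameters."""
    payload = json.dumps([sql, list(params)], default=str)
    return "sql:" + hashlib.sha256(payload.encode("utf-8")).hexdigest()


def query_with_cache(cache, conn, sql, params=(), ttl_seconds=300):
    """Cache-aside (lazy loading) read.

    cache: redis-py style client with get(key) and set(key, value, ex=seconds).
    conn:  DB-API 2.0 connection to MySQL, for example from pymysql.connect().
    """
    key = cache_key(sql, params)

    # 1. Query the cache first; a hit skips the database entirely.
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached)

    # 2. Cache miss: read from MySQL.
    cur = conn.cursor()
    try:
        cur.execute(sql, params)
        rows = [list(row) for row in cur.fetchall()]
    finally:
        cur.close()

    # 3. Populate the cache with a TTL so stale results eventually expire.
    #    default=str stores types such as Decimal or datetime as strings.
    payload = json.dumps(rows, default=str)
    cache.set(key, payload, ex=ttl_seconds)
    # Return the decoded payload so hits and misses yield the same shape.
    return json.loads(payload)
```

With real clients, `cache` could be `redis.Redis(host="<elasticache-endpoint>", port=6379, ssl=True)` and `conn` a `pymysql.connect(...)` connection, with query placeholders in the driver's `%s` style. Because this is lazy loading, writes to MySQL should also delete or overwrite the affected keys; otherwise readers may see cached results until the TTL expires.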

ElastiCache as a session store

A session store is a crucial component in web application development. It’s responsible for maintaining and managing user-specific data and state across multiple HTTP requests, providing a seamless and personalized user experience. Session stores are essential for tracking user authentication, managing shopping carts, and preserving user-specific settings and data.

Storing session data in Redis offers significant benefits for web applications. Redis, an in-memory key-value store, provides exceptional speed, efficiency, and scalability for handling user sessions. Redis supports flexible data structures (hashes, sets, sorted sets, streams, JSON documents, and more), data persistence, and session expiry management, making it an ideal choice for fast, reliable, and scalable session management. Additionally, Redis’s pub/sub capabilities enable real-time updates, and its robust performance makes it well suited for applications with growing user bases. The following diagram illustrates an example architecture.

This ElastiCache for Redis session management code showcases a basic implementation of session management with Redis, including user authentication, shopping cart functionality, and visit tracking. It’s a useful starting point for understanding how to use Redis for session storage in a web application.
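The same ideas (an authenticated session, a shopping cart, and visit tracking) can be sketched in a few Python functions. This assumes a redis-py client created with `decode_responses=True`; the `session:`/`cart:` key names, the 30-minute sliding TTL, and the function names are hypothetical and are not taken from the sample repository.

```python
import secrets

SESSION_TTL_SECONDS = 1800  # hypothetical 30-minute sliding idle timeout


def create_session(r, user_id):
    """Create a session hash after the user authenticates; returns the session ID."""
    session_id = secrets.token_urlsafe(32)  # unguessable ID for the session cookie
    key = f"session:{session_id}"
    r.hset(key, mapping={"user_id": user_id, "visits": 0})
    r.expire(key, SESSION_TTL_SECONDS)
    return session_id


def touch_session(r, session_id):
    """Count a visit and slide the expiry forward; returns None if the session expired."""
    key = f"session:{session_id}"
    # EXPIRE returns False when the key no longer exists (timed out or logged out).
    if not r.expire(key, SESSION_TTL_SECONDS):
        return None
    r.expire(f"cart:{session_id}", SESSION_TTL_SECONDS)
    return r.hincrby(key, "visits", 1)


def add_to_cart(r, session_id, item_id, quantity=1):
    """Increment an item's quantity in the session's shopping-cart hash."""
    cart_key = f"cart:{session_id}"
    new_quantity = r.hincrby(cart_key, item_id, quantity)
    r.expire(cart_key, SESSION_TTL_SECONDS)
    return new_quantity


def get_session(r, session_id):
    """Return the session fields (user_id, visits), or an empty dict if expired."""
    return r.hgetall(f"session:{session_id}")


def end_session(r, session_id):
    """Log out by deleting both the session hash and its cart."""
    r.delete(f"session:{session_id}", f"cart:{session_id}")
```

Storing each session as a hash lets the application update one field (such as the visit counter) without rewriting the whole session, and the TTL handles expiry without a cleanup job. The expire-then-increment step in `touch_session` is not atomic; a Lua script would close that small window if it matters for your workload.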

Boosting application performance using Amazon ElastiCache

In this section, we discuss different strategies to boost application performance using ElastiCache.

Boost the performance and reduce your I/O costs for Amazon DocumentDB workloads

Amazon DocumentDB (with MongoDB compatibility) is a fully managed, native JSON document database used for storing, querying, indexing, and aggregating JSON-based data at scale. You can cache frequently accessed JSON documents in an in-memory database such as Amazon ElastiCache to significantly boost performance and cut your I/O costs. Starting with Redis 6.2, ElastiCache supports natively storing and accessing data in JSON format, so application developers can store, fetch, and update their JSON data inside Redis without writing custom serialization and deserialization code. The demo Caching for performance with Amazon DocumentDB and Amazon ElastiCache showcases how to integrate Amazon DocumentDB and Amazon ElastiCache to achieve microsecond response times. For further performance tests, refer to Amazon ElastiCache Caching for Amazon DocumentDB (with MongoDB compatibility). The following diagram shows an example architecture.
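A minimal Python sketch of this pattern follows. It assumes a redis-py 4.x or later client, whose `json()` helper wraps the JSON.GET and JSON.SET commands, and a pymongo `Collection` connected to Amazon DocumentDB. The `doc:` key format, the 600-second TTL, and the `get_document` function are hypothetical and are not taken from the demo.

```python
JSON_CACHE_TTL_SECONDS = 600  # hypothetical TTL for cached documents


def get_document(cache, collection, doc_id):
    """Cache-aside read of a DocumentDB document stored natively as JSON in Redis.

    cache:      redis-py client; cache.json() exposes JSON.GET / JSON.SET.
    collection: pymongo Collection connected to Amazon DocumentDB.
    """
    key = f"doc:{collection.name}:{doc_id}"

    # JSON.GET returns the decoded document, or None when the key is absent.
    cached = cache.json().get(key)
    if cached is not None:
        return cached

    # Exclude _id from the projection so an ObjectId never has to be JSON-encoded.
    doc = collection.find_one({"_id": doc_id}, {"_id": 0})
    if doc is None:
        return None

    # JSON.SET at the root path "$" stores the whole document as JSON, with no
    # hand-written serialization code.
    cache.json().set(key, "$", doc)
    cache.expire(key, JSON_CACHE_TTL_SECONDS)
    return doc
```

Because the cached value is a JSON document rather than an opaque string, an existing entry can also be patched in place, for example with `cache.json().set(key, "$.status", "shipped")`, instead of re-serializing and rewriting the whole document.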

Optimize cost, increase throughput and boost performance of Amazon RDS workloads using Amazon ElastiCache

With ElastiCache, you can save up to 55% in cost and gain up to 80 times faster read performance by using ElastiCache with Amazon Relational Database Service (Amazon RDS) for MySQL (compared to Amazon RDS for MySQL alone). Use the benchmarking tool Amazon ElastiCache Caching for Amazon RDS to design, test, and deploy your workloads using Amazon ElastiCache.

For performance comparison purposes, we tested the workload on two architectures: first an RDS database alone, and then the same RDS database paired with Amazon ElastiCache. The workload characteristics for both tests were identical: 80% random reads and 20% writes. The source database uses an enlarged version of the standard airportdb MySQL example dataset, approximately 85 GiB in size. The database load consisted of 75 simulated users running a total of 250,000 queries.

The following figures show the average combined (read and write queries) performance characteristics using SQL Server only. We used the DB instance type db.r5.xlarge (4 vCPUs, 32 GiB RAM) with a database cache of 820 MB.

MS SQL Server paired with Amazon ElastiCache

The following figures show the average combined (read and write queries) performance characteristics using SQL Server paired with ElastiCache. We used the DB instance type db.r5.xlarge (4 vCPUs, 32 GiB RAM), and the database cache used 820 MB. For ElastiCache, we used the cache.m6g.xlarge node type (4 vCPUs, 26 GiB RAM). ElastiCache used 35 MB of memory.

After pairing the RDS database with Amazon ElastiCache for our workload using the airportdb sample database, we observed an increase in the average number of transactions per second (TPS) from 5,300 to 7,100 (a 34% increase). The average combined read/write response time also decreased from 14 milliseconds to 9 milliseconds, a decrease of approximately 35%. Average read transactions completed in 0.51 milliseconds, which is 10 times faster than the read response time with Amazon RDS alone.

Additionally, Amazon RDS CPU utilization dropped significantly, from around 78% to 32%, a reduction of almost 59%.
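The percentages above follow directly from the reported before and after values. The short Python check below recomputes them; the only derived figure is the RDS-only read time, which is inferred from the "10 times faster" statement rather than reported directly.

```python
def pct_change(before, after):
    """Relative change from `before` to `after`, in percent (negative = decrease)."""
    return (after - before) / before * 100


# Figures reported in the benchmark above.
tps_gain = pct_change(5_300, 7_100)   # average transactions per second
latency_drop = pct_change(14, 9)      # combined read/write response time, ms
cpu_drop = pct_change(78, 32)         # RDS CPU utilization, %
implied_rds_read_ms = 0.51 * 10       # "10 times faster" implies ~5.1 ms on RDS alone

for label, value in [("TPS", tps_gain), ("Latency", latency_drop), ("RDS CPU", cpu_drop)]:
    print(f"{label}: {value:+.1f}%")
print(f"Implied RDS-only read time: {implied_rds_read_ms:.1f} ms")
```

Running it prints +34.0% for TPS, -35.7% for latency, and -59.0% for RDS CPU, consistent with the rounded figures in the text, plus an implied RDS-only read time of about 5.1 ms.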

Secure Amazon ElastiCache for Redis using IAM Authentication

AWS Identity and Access Management (IAM) securely manages who can be authenticated (signed in) and authorized (have permissions) to use ElastiCache resources. With IAM authentication, you can authenticate a connection to ElastiCache for Redis using AWS IAM identities when your cluster is configured to use Redis version 7 or above with encryption in transit enabled. You can also configure fine-grained access control for each individual ElastiCache replication group and ElastiCache user, and follow the principle of least privilege. For more information, refer to Identity and Access Management for Amazon ElastiCache. The ElastiCache IAM authentication demo application is a sample Java application that uses the Lettuce Redis client to demonstrate IAM-based authentication to your ElastiCache for Redis cluster. Refer to Simplify managing access to Amazon ElastiCache for Redis clusters with IAM to learn how to set up IAM with ElastiCache for your workload.
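For least-privilege access, the IAM identity needs permission for the `elasticache:Connect` action on both the replication group and the ElastiCache user it connects as. The Python helper below builds such a policy document as a sketch. The Region, account ID, cluster name, and user ID are placeholders, and the helper itself is hypothetical; it is not part of the Java demo application.

```python
import json


def elasticache_connect_policy(region, account_id, replication_group_id, user_id):
    """Build an IAM policy that allows elasticache:Connect to exactly one
    replication group as exactly one ElastiCache user, and nothing else."""
    arn_prefix = f"arn:aws:elasticache:{region}:{account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["elasticache:Connect"],
                "Resource": [
                    f"{arn_prefix}:replicationgroup:{replication_group_id}",
                    f"{arn_prefix}:user:{user_id}",
                ],
            }
        ],
    }


# Placeholder values: substitute your own Region, account, cluster, and IAM-enabled user.
policy = elasticache_connect_policy("us-east-1", "123456789012", "my-redis-cluster", "iam-user-01")
print(json.dumps(policy, indent=2))
```

Scoping the `Resource` list to specific ARNs, rather than using `*`, is what keeps one application’s role from connecting to other clusters or as other users.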

ElastiCache Well-Architected Lens

AWS Well-Architected is a framework and a set of best practices provided by AWS to help cloud architects build secure, high-performing, resilient, and efficient infrastructure for their applications. The framework is built around six pillars (operational excellence, security, reliability, performance efficiency, cost optimization, and sustainability) and is designed to help organizations design, build, and maintain their workloads on AWS in line with best practices and architectural principles.

Migrate Redis workloads to Amazon ElastiCache: Best practices and guidelines

The ElastiCache Well-Architected Lens is a collection of design principles and guidance for designing Well-Architected ElastiCache workloads. You can create custom lenses with your own pillars, questions, best practices, and improvement plans. Each pillar has a set of questions to help start the discussion around an ElastiCache architecture. To get started with creating an ElastiCache custom lens for AWS Well-Architected, refer to ElastiCache Lens for Well-Architected.

Monitor in-memory databases

ElastiCache and Amazon MemoryDB for Redis (a durable, in-memory database service purpose-built for modern applications with microservices architectures) provide both host-level metrics (for example, CPU usage) and metrics specific to the cache engine software (for example, cache gets and cache misses) that you can use to monitor your clusters. These metrics are measured and published for each node at 60-second intervals, and you can access them through Amazon CloudWatch. For more details, refer to the monitoring documentation: Monitoring ElastiCache usage with CloudWatch Metrics and Monitoring MemoryDB for Redis with Amazon CloudWatch.
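As an example of working with these metrics programmatically, the Python sketch below computes a cache hit ratio from the ElastiCache `CacheHits` and `CacheMisses` engine metrics using the boto3 CloudWatch `get_metric_statistics` call. The node identifiers, the one-hour window, and the function name are assumptions for illustration; for MemoryDB you would query the `AWS/MemoryDB` namespace with its own dimensions instead.

```python
from datetime import datetime, timedelta, timezone


def cache_hit_ratio(cloudwatch, cluster_id, node_id="0001", hours=1):
    """Hit ratio for one ElastiCache for Redis node over the last `hours`.

    cloudwatch: a boto3 CloudWatch client, e.g. boto3.client("cloudwatch").
    cluster_id: the node's CacheClusterId (for example "my-redis-001").
    node_id:    the CacheNodeId; Redis nodes use "0001".
    """
    end = datetime.now(timezone.utc)
    start = end - timedelta(hours=hours)

    def total(metric_name):
        resp = cloudwatch.get_metric_statistics(
            Namespace="AWS/ElastiCache",
            MetricName=metric_name,
            Dimensions=[
                {"Name": "CacheClusterId", "Value": cluster_id},
                {"Name": "CacheNodeId", "Value": node_id},
            ],
            StartTime=start,
            EndTime=end,
            Period=60,  # matches the 60-second publishing interval
            Statistics=["Sum"],
        )
        return sum(point["Sum"] for point in resp["Datapoints"])

    hits = total("CacheHits")
    misses = total("CacheMisses")
    lookups = hits + misses
    # Return None rather than dividing by zero when the node served no reads.
    return hits / lookups if lookups else None
```

ElastiCache for Redis also publishes a precomputed `CacheHitRate` metric, so a calculation like this is mainly useful when you want a custom window or aggregation, or want to combine several nodes.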

DBTop Monitoring is a lightweight application that performs real-time monitoring of AWS database resources. Built on the same simple concept as the Unix top utility, it provides a quick view of database performance, all on one page. For details on setting up and configuring the application, refer to DBTop Monitoring Solution for AWS Database Services.

The DBTop Monitoring Solution supports the following database engines:

Amazon RDS for MariaDB

Amazon RDS for MySQL

Amazon RDS for Oracle

Amazon RDS for PostgreSQL

Amazon RDS for SQL Server

Amazon Aurora instances and clusters:

Amazon Aurora MySQL-Compatible Edition

Amazon Aurora PostgreSQL-Compatible Edition

Amazon ElastiCache for Redis

Amazon MemoryDB for Redis

The following screenshot shows an example of the DBTop Monitoring dashboard.

ElastiCache for machine learning

In this section, we discuss ML use cases with Amazon ElastiCache.

Build a high-performance feature store serving fast and accurate predictions across millions of users

A feature store is a critical component of machine learning (ML) and data science infrastructure that helps organizations manage, store, and serve features for ML models. Features are the input variables or attributes that ML models use to make predictions, and a feature store centralizes the storage and management of these features to streamline the ML workflow. Refer to Build an ultra-low latency online feature store for real-time inferencing using Amazon ElastiCache for Redis to learn how to build online feature stores on AWS with Amazon ElastiCache for Redis that support mission-critical ML use cases requiring ultra-low latency. For a demo of using Feast (an open-source feature store that stores and serves features consistently for offline training and online inference) with ElastiCache, refer to Real-time Credit Scoring with Feast and Amazon ElastiCache.

The following diagram illustrates the architecture used in the demo.
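The two core online-store operations, writing the latest feature values for each entity and reading a feature vector at inference time, can be sketched in Python with a redis-py client created with `decode_responses=True`. This is an illustrative layout only: the `features:<entity_type>:<entity_id>` hash keys, the assumption that all features are numeric, and the function names are hypothetical, and Feast’s Redis online store uses its own key and value encoding.

```python
def write_features(r, entity_type, entity_id, features, ttl_seconds=None):
    """Materialize the latest feature values for one entity into a Redis hash."""
    key = f"features:{entity_type}:{entity_id}"
    r.hset(key, mapping={name: str(value) for name, value in features.items()})
    if ttl_seconds:
        r.expire(key, ttl_seconds)  # optional freshness bound for stale entities


def read_features(r, entity_type, entity_ids, feature_names):
    """Fetch a fixed-order feature vector per entity in one round trip."""
    # A non-transactional pipeline batches the HMGET calls into one network round trip.
    pipe = r.pipeline(transaction=False)
    for entity_id in entity_ids:
        pipe.hmget(f"features:{entity_type}:{entity_id}", feature_names)
    rows = pipe.execute()
    # Missing entities or features come back as None; the model must handle them.
    return {
        entity_id: [float(v) if v is not None else None for v in values]
        for entity_id, values in zip(entity_ids, rows)
    }
```

Keeping one hash per entity means a single HMGET returns the whole feature vector in the order the model expects, and pipelining keeps batch scoring to one network round trip.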

Build a chat application with a high-speed in-memory database to store your chat conversations and provide more accurate responses to users

Chatbots are becoming increasingly integral to our digital experiences and are one of the core large language model (LLM) use cases. These chatbots excel at maintaining conversations and providing users with the information they need. Aside from prompting and the LLM itself, memory is one of the core components of a chatbot: it allows the chatbot to remember past interactions and provide answers in context.

Amazon Bedrock helps you build and scale AI applications by providing a range of leading foundation models (FMs) through a scalable, reliable, and secure API. You can also use PartyRock, a fun and intuitive playground powered by Amazon Bedrock, to experiment with and build generative AI applications without writing code or creating an AWS account.

In the world of chatbots, security and privacy are paramount concerns. Bedrock is designed from the ground up to provide security and privacy for all your generative AI data.

ElastiCache for Redis helps you retain user chat history with sub-millisecond latency across browser reloads and application restarts, ensuring a seamless user experience.

LangChain is a framework that helps you unlock the full potential of language models by making applications context-aware. LangChain’s ConversationSummaryBufferMemory can use ElastiCache as its message store: it retrieves past conversations and summarizes older turns, which helps provide relevant responses.

The following architecture shows how you can store chat history in Amazon ElastiCache for Redis for scalability and speed. For a sample tutorial, refer to Build a generative AI Virtual Assistant with Amazon Bedrock, Langchain and Amazon ElastiCache.
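To show what retaining chat history looks like at the Redis level, here is a minimal Python sketch that keeps each conversation in a Redis list, trims it to a bounded length, and refreshes a TTL. It assumes a redis-py client; the `chat:<session_id>` key format, the retention limits, and the function names are hypothetical. LangChain’s own Redis-backed message history manages its keys itself, so treat this as an illustration of the data model rather than of LangChain internals.

```python
import json
import time

HISTORY_TTL_SECONDS = 24 * 3600  # hypothetical retention for idle conversations
MAX_MESSAGES = 50                # keep only the most recent turns


def append_message(r, session_id, role, content):
    """Append one chat turn to the session's Redis list and trim old turns."""
    key = f"chat:{session_id}"
    message = json.dumps({"role": role, "content": content, "ts": time.time()})
    pipe = r.pipeline()  # MULTI/EXEC: push, trim, and expire are applied together
    pipe.rpush(key, message)
    pipe.ltrim(key, -MAX_MESSAGES, -1)
    pipe.expire(key, HISTORY_TTL_SECONDS)
    pipe.execute()


def load_history(r, session_id, last_n=10):
    """Return the most recent `last_n` turns, oldest first, e.g. to rebuild a prompt."""
    raw = r.lrange(f"chat:{session_id}", -last_n, -1)
    return [json.loads(m) for m in raw]
```

Because the history lives in ElastiCache rather than in the application process, a browser reload or an application restart simply calls `load_history` again and the conversation continues where it left off.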

Ultra-fast performance for microservices with MemoryDB for Redis

You can use MemoryDB for Redis as the primary database for microservices, serving as a Redis-compatible, durable, in-memory database. MemoryDB makes it straightforward and cost-effective to build applications that require microsecond read and low single-digit millisecond write performance with data durability and high availability. To learn more, refer to Introducing Amazon MemoryDB for Redis – A Redis-Compatible, Durable, In-Memory Database Service, and check out a code demo of a Python microservice that uses a REST API to create, update, delete, and get customer data from a MemoryDB cluster.
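The data-access layer of such a microservice can be small. The following Python sketch assumes a redis-py client connected to a MemoryDB cluster endpoint (for example `redis.cluster.RedisCluster`, since MemoryDB runs in cluster mode) with `decode_responses=True`. The `customer:<id>` keys, JSON string values, and the `CustomerRepository` class are hypothetical and are not taken from the linked demo; a REST framework would call these methods from its create, get, update, and delete routes.

```python
import json
import uuid


class CustomerRepository:
    """Create/get/update/delete for customer records stored as JSON strings
    under customer:<id> in a Redis-compatible database such as MemoryDB."""

    def __init__(self, r):
        self.r = r

    @staticmethod
    def _key(customer_id):
        return f"customer:{customer_id}"

    def create(self, data):
        customer_id = str(uuid.uuid4())
        # NX guards against overwriting an existing record with the same ID.
        self.r.set(self._key(customer_id), json.dumps(data), nx=True)
        return customer_id

    def get(self, customer_id):
        raw = self.r.get(self._key(customer_id))
        return json.loads(raw) if raw is not None else None

    def update(self, customer_id, changes):
        current = self.get(customer_id)
        if current is None:
            return False
        current.update(changes)
        # XX writes only if the key still exists. This read-modify-write is not
        # atomic; concurrent writers would need WATCH/MULTI or a Lua script.
        return bool(self.r.set(self._key(customer_id), json.dumps(current), xx=True))

    def delete(self, customer_id):
        return self.r.delete(self._key(customer_id)) == 1
```

Because MemoryDB persists every write durably, the same simple key-value calls used for caching elsewhere in this post can serve as the microservice’s system of record.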

Summary

Over the years, our in-memory specialist solutions architecture team has been helping our customers build highly scalable modern applications using in-memory database solutions like ElastiCache and MemoryDB. In this post, we put together some of those solutions.

Our goal is to continue adding more solutions to this learning series as we help our customers in their cloud adoption journey. At AWS, we are customer-obsessed, and the majority of our product features have been developed by working backward from customer requests. As our audience, we always value your feedback. If you’re looking to build your applications using our in-memory databases, leave us your feedback, and we can include solution artifacts for your use cases in future posts in this series.

About the authors

Siva Karuturi is a Worldwide Specialist Solutions Architect for In-Memory Databases based out of Dallas, TX. Siva specializes in various database technologies (both Relational & NoSQL) and has been helping customers implement complex architectures and providing leadership for In-Memory Database & analytics solutions including cloud computing, governance, security, architecture, high availability, disaster recovery and performance enhancements. Off work, he likes traveling and tasting various cuisines Anthony Bourdain style!

Lakshmi Peri is a Sr. Solutions Architect specialized in NoSQL databases. She has more than a decade of experience working with various NoSQL databases and architecting highly scalable applications with distributed technologies. In her spare time, she enjoys traveling to new places and spending time with family.

Roberto Luna Rojas is an AWS Sr. Solutions Architect specialized in in-memory NoSQL databases, based in NY. He works with customers from around the globe to get the most out of in-memory databases one bit at a time. When not in front of the computer, he loves spending time with his family, listening to music, and watching movies, TV shows, and sports.

Steven Hancz is an AWS Sr. Solutions Architect specialized in in-memory NoSQL databases, based in Tampa, FL. He has 25+ years of IT experience working with large clients in regulated industries, specializing in high availability and performance tuning.
