Advancements in artificial intelligence (AI) and machine learning (ML) are revolutionizing the financial industry for use cases such as fraud detection, creditworthiness assessment, and trading strategy optimization. To develop models for such use cases, data scientists need access to various datasets, such as data from credit decision engines, customer transactions, risk appetite, and stress testing. Managing appropriate access control for these datasets among the data scientists working on them is crucial to meet stringent compliance and regulatory requirements. Typically, these datasets are aggregated in a centralized Amazon Simple Storage Service (Amazon S3) location from various business applications and enterprise systems. Data scientists across business units who develop models with Amazon SageMaker are granted access to the relevant data, which often requires managing access controls at the prefix level. As use cases and datasets grow, managing cross-account access for each application through bucket policy statements becomes too complex, and the statements can grow too long for a single bucket policy, which is limited to 20 KB.

Amazon S3 Access Points simplify managing and securing data access at scale for applications using shared datasets on Amazon S3. Each access point has its own unique hostname, so you can enforce distinct, secure permissions and network controls for any request made through it.

S3 Access Points simplify the management of access permissions for each application that accesses a shared dataset. They enable secure, high-speed data copy between access points in the same Region over AWS internal networks and VPCs. You can restrict an access point to a VPC to firewall data within private networks, test new access control policies without affecting existing access points, and configure VPC endpoint policies to restrict access to S3 buckets owned by specific account IDs.
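To make the per-access-point hostname concrete, the following sketch derives an access point's ARN and its virtual-hosted endpoint hostname from the Region, the owner account ID, and the access point name. The Region us-east-1 is an assumption; the account ID and name are the example values used later in this post, and the function names are illustrative only.

```python
def access_point_arn(region: str, account_id: str, name: str) -> str:
    """Build the ARN that identifies an S3 access point."""
    return f"arn:aws:s3:{region}:{account_id}:accesspoint/{name}"


def access_point_hostname(region: str, account_id: str, name: str) -> str:
    """Build the per-access-point hostname that requests are sent to."""
    return f"{name}-{account_id}.s3-accesspoint.{region}.amazonaws.com"


if __name__ == "__main__":
    # Example values from this post; the Region is an assumption.
    print(access_point_arn("us-east-1", "222222222222", "test-ap-1"))
    print(access_point_hostname("us-east-1", "222222222222", "test-ap-1"))
```

Because every access point gets its own name, and therefore its own hostname and ARN, each application can be given a separate policy without touching the others.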

This post walks through the steps involved in configuring S3 Access Points to enable cross-account access from a SageMaker notebook instance.

Solution overview

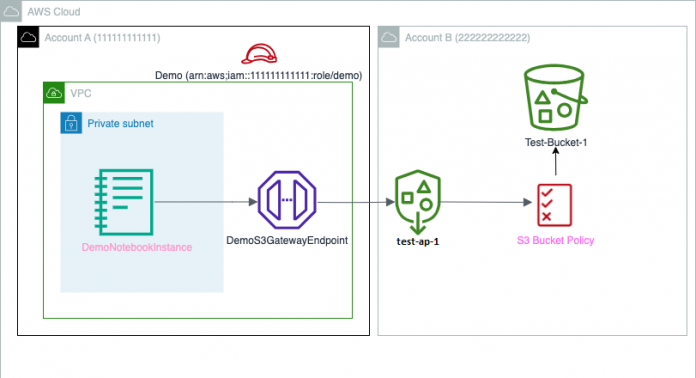

For our use case, we have two accounts in an organization: Account A (111111111111), which is used by data scientists to develop models using a SageMaker notebook instance, and Account B (222222222222), which has required datasets in the S3 bucket test-bucket-1. The following diagram illustrates the solution architecture.

To implement the solution, complete the following high-level steps:

Configure Account A, including the VPC, subnet, security group, VPC gateway endpoint, and SageMaker notebook instance.

Configure Account B, including S3 bucket, access point, and bucket policy.

Configure AWS Identity and Access Management (IAM) permissions and policies in Account A.

You should repeat these steps for each SageMaker account that needs access to the shared dataset from Account B.

The names of the resources in this post are examples; you can replace them with names that suit your use case.

Configure Account A

Complete the following steps to configure Account A:

Create a VPC called DemoVPC.

Create a subnet called DemoSubnet in the VPC DemoVPC.

Create a security group called DemoSG.

Create a VPC S3 gateway endpoint called DemoS3GatewayEndpoint.

Create the SageMaker execution role.

Create a notebook instance called DemoNotebookInstance, following the security guidelines outlined in How to configure security in Amazon SageMaker.

Specify the SageMaker execution role you created.

For the notebook network settings, specify the VPC, subnet, and security group you created.

Make sure that Direct Internet access is disabled.

You assign permissions to the role in subsequent steps after you create the required dependencies.
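The Account A resources can also be described as request parameters for the AWS SDK for Python (Boto3). The following sketch only builds the argument dictionaries, so it runs without AWS credentials. The Region (us-east-1), the instance type (ml.t3.medium), and all resource IDs are assumptions. It also makes one detail explicit that the steps above leave implicit: a gateway endpoint only routes traffic for subnets whose route tables it is associated with.

```python
REGION = "us-east-1"  # assumption: Region used throughout this sketch


def sagemaker_trust_policy() -> dict:
    """Trust policy that lets SageMaker assume the notebook execution role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "sagemaker.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def gateway_endpoint_request(vpc_id: str, route_table_ids: list) -> dict:
    """Arguments for EC2 create_vpc_endpoint that create DemoS3GatewayEndpoint."""
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{REGION}.s3",
        # The endpoint adds S3 routes to these route tables (for example, DemoSubnet's).
        "RouteTableIds": list(route_table_ids),
        "TagSpecifications": [
            {
                "ResourceType": "vpc-endpoint",
                "Tags": [{"Key": "Name", "Value": "DemoS3GatewayEndpoint"}],
            }
        ],
    }


def notebook_instance_request(subnet_id: str, security_group_id: str, role_arn: str) -> dict:
    """Arguments for SageMaker create_notebook_instance for DemoNotebookInstance."""
    return {
        "NotebookInstanceName": "DemoNotebookInstance",
        "InstanceType": "ml.t3.medium",  # assumption: any supported type works
        "SubnetId": subnet_id,
        "SecurityGroupIds": [security_group_id],
        "RoleArn": role_arn,
        # Matches the step above: no direct internet access from the notebook.
        "DirectInternetAccess": "Disabled",
    }
```

With Boto3, these dictionaries would be passed as keyword arguments, for example boto3.client("ec2").create_vpc_endpoint(**gateway_endpoint_request(vpc_id, [route_table_id])) and boto3.client("sagemaker").create_notebook_instance(**notebook_instance_request(subnet_id, sg_id, role_arn)). The trust policy would go to IAM create_role as the AssumeRolePolicyDocument after serializing it with json.dumps.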

Configure Account B

To configure Account B, complete the following steps:

In Account B, create an S3 bucket called test-bucket-1 following Amazon S3 security guidance.

Upload your file to the S3 bucket.

Create an access point called test-ap-1 in Account B.

Don’t change any Block Public Access settings for this access point; all public access should remain blocked.
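The access point from the preceding step can be expressed as arguments for the S3 Control create_access_point API. This is a minimal sketch: it keeps all four Block Public Access settings enabled, as this post requires, and leaves out a VPC network origin because the steps here don't configure one. The function name is hypothetical.

```python
def create_access_point_request(account_id: str, name: str, bucket: str) -> dict:
    """Arguments for S3 Control create_access_point with public access blocked."""
    return {
        "AccountId": account_id,  # account that owns the access point (Account B)
        "Name": name,
        "Bucket": bucket,
        "PublicAccessBlockConfiguration": {
            "BlockPublicAcls": True,
            "IgnorePublicAcls": True,
            "BlockPublicPolicy": True,
            "RestrictPublicBuckets": True,
        },
    }


if __name__ == "__main__":
    print(create_access_point_request("222222222222", "test-ap-1", "test-bucket-1"))
```

Run with Account B credentials, this would be passed as boto3.client("s3control", region_name="us-east-1").create_access_point(**request), where the Region is again an assumption.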

Attach the following policy to your access point:
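A minimal sketch of such an access point policy, built in Python and serialized to JSON, is shown here. It assumes the Region is us-east-1 and that the SageMaker execution role in Account A is named DemoSageMakerExecutionRole (a hypothetical name; use the role you created). It grants listing on the access point and read access to the objects behind it; object-level resources use the access point ARN followed by /object/.

```python
import json

REGION = "us-east-1"                      # assumption
ACCOUNT_A = "111111111111"                # SageMaker account
ACCOUNT_B = "222222222222"                # data account that owns test-ap-1
ROLE_NAME = "DemoSageMakerExecutionRole"  # hypothetical role name


def access_point_policy(region: str, owner_account: str, ap_name: str, principal_arn: str) -> dict:
    """Access point policy granting a cross-account role list and read access."""
    ap_arn = f"arn:aws:s3:{region}:{owner_account}:accesspoint/{ap_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowListThroughAccessPoint",
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": "s3:ListBucket",
                "Resource": ap_arn,
            },
            {
                "Sid": "AllowReadThroughAccessPoint",
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": ["s3:GetObject", "s3:GetObjectVersion"],
                "Resource": f"{ap_arn}/object/*",
            },
        ],
    }


if __name__ == "__main__":
    role_arn = f"arn:aws:iam::{ACCOUNT_A}:role/{ROLE_NAME}"
    print(json.dumps(access_point_policy(REGION, ACCOUNT_B, "test-ap-1", role_arn), indent=2))
```

Besides the console, the JSON could be attached with boto3.client("s3control").put_access_point_policy(AccountId=ACCOUNT_B, Name="test-ap-1", Policy=json.dumps(policy)).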

The actions defined in the preceding code are sample actions for demonstration purposes. Adjust them to your requirements or use case.

Add the following bucket policy permissions so that requests made through the access point are allowed:
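One common way to write this bucket policy is to delegate access control to access points: the bucket allows a request only when it arrives through an access point owned by the bucket's account, which the s3:DataAccessPointAccount condition key checks. The following sketch uses that pattern, narrowed to the same sample actions as the access point policy; treat the action list and the function name as assumptions to adapt.

```python
import json


def delegating_bucket_policy(bucket: str, owner_account: str) -> dict:
    """Bucket policy that defers authorization to the owner's access points."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DelegateToOwnerAccessPoints",
                "Effect": "Allow",
                # Any principal, but only via an access point owned by owner_account;
                # the access point policy then decides who is actually allowed.
                "Principal": {"AWS": "*"},
                "Action": ["s3:ListBucket", "s3:GetObject", "s3:GetObjectVersion"],
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                "Condition": {
                    "StringEquals": {"s3:DataAccessPointAccount": owner_account}
                },
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(delegating_bucket_policy("test-bucket-1", "222222222222"), indent=2))
```

From Account B, the result could be applied with boto3.client("s3").put_bucket_policy(Bucket="test-bucket-1", Policy=json.dumps(policy)). The design choice here is that new consumers only require access point changes, so the bucket policy stays small as use cases grow.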

The preceding actions are examples. Adjust them to your requirements.

Configure IAM permissions and policies

Complete the following steps in Account A:

Confirm that the SageMaker execution role has the AmazonSagemakerFullAccess custom IAM inline policy, which looks like the following code:
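As a sketch, the S3 statements of such an inline policy might grant the same sample actions on the access point ARN in Account B. Cross-account access needs both sides: this identity policy in Account A and the resource policies in Account B. The Region (us-east-1) and the function name are assumptions, and the sketch covers only the S3 access point statements, not any SageMaker permissions the role also needs.

```python
import json


def execution_role_s3_policy(region: str, owner_account: str, ap_name: str) -> dict:
    """Identity policy letting the role list and read through a cross-account access point."""
    ap_arn = f"arn:aws:s3:{region}:{owner_account}:accesspoint/{ap_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListThroughAccessPoint",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": ap_arn,
            },
            {
                "Sid": "ReadThroughAccessPoint",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:GetObjectVersion"],
                "Resource": f"{ap_arn}/object/*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(execution_role_s3_policy("us-east-1", "222222222222", "test-ap-1"), indent=2))
```

An inline policy like this could be attached with boto3.client("iam").put_role_policy(RoleName=..., PolicyName=..., PolicyDocument=json.dumps(policy)). Identity policies carry no Principal element, because the principal is the role itself.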

The actions in the policy code are sample actions for demonstration purposes.

Open the DemoS3GatewayEndpoint endpoint you created and add the following permissions to its endpoint policy:
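A sketch of an endpoint policy that allows the sample actions against access point test-ap-1 and the bucket behind it is shown here. It uses "Principal": "*" so that it limits what can pass through the endpoint rather than who the caller is. Listing both the access point ARNs and the bucket ARNs is a cautious assumption; verify it against your own requests. An endpoint policy applies to all S3 traffic through the endpoint, so any other buckets the notebook uses would also need to be allowed.

```python
import json


def gateway_endpoint_policy(region: str, owner_account: str, ap_name: str, bucket: str) -> dict:
    """Endpoint policy allowing list/read through one access point and its bucket."""
    ap_arn = f"arn:aws:s3:{region}:{owner_account}:accesspoint/{ap_name}"
    bucket_arn = f"arn:aws:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowSharedDatasetViaAccessPoint",
                "Effect": "Allow",
                "Principal": "*",
                "Action": ["s3:ListBucket", "s3:GetObject", "s3:GetObjectVersion"],
                # Assumption: allow both the access point and the underlying bucket.
                "Resource": [ap_arn, f"{ap_arn}/object/*", bucket_arn, f"{bucket_arn}/*"],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(gateway_endpoint_policy("us-east-1", "222222222222", "test-ap-1", "test-bucket-1"), indent=2))
```

The policy could be applied with boto3.client("ec2").modify_vpc_endpoint(VpcEndpointId=..., PolicyDocument=json.dumps(policy)).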

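To get the prefix list ID for Amazon S3, run the AWS Command Line Interface (AWS CLI) describe-prefix-lists command (aws ec2 describe-prefix-lists) and note the PrefixListId of the entry named com.amazonaws.<region>.s3.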
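To pick the ID out of the command's JSON output programmatically, a small helper like the following can search the PrefixLists array for the entry named com.amazonaws.<region>.s3. The helper name is hypothetical; PrefixLists, PrefixListName, and PrefixListId are the field names that describe-prefix-lists returns.

```python
import json


def s3_prefix_list_id(describe_output: str, region: str) -> str:
    """Return the Amazon S3 PrefixListId from describe-prefix-lists JSON output."""
    wanted = f"com.amazonaws.{region}.s3"
    for prefix_list in json.loads(describe_output).get("PrefixLists", []):
        if prefix_list.get("PrefixListName") == wanted:
            return prefix_list["PrefixListId"]
    # No matching entry: the Region is probably wrong or the output is incomplete.
    raise LookupError(f"no prefix list named {wanted}")
```

For example, you could save the output of aws ec2 describe-prefix-lists --output json to a file and pass its contents, together with your Region, to s3_prefix_list_id.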

In Account A, go to the security group DemoSG for the target SageMaker notebook instance.

Under Outbound rules, create an outbound rule with All traffic or All TCP, and then specify the destination as the prefix list ID you retrieved.
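In API terms, the outbound rule is an IpPermissions entry that targets the prefix list instead of a CIDR range. The sketch below builds that entry for either option in the preceding step. The function name is hypothetical, while IpProtocol, FromPort, ToPort, and PrefixListIds are field names accepted by the EC2 authorize_security_group_egress API.

```python
def s3_egress_permission(prefix_list_id: str, all_traffic: bool = True) -> dict:
    """IpPermissions entry for an outbound rule whose destination is a prefix list."""
    destination = [{"PrefixListId": prefix_list_id, "Description": "Amazon S3 via gateway endpoint"}]
    if all_traffic:
        # "All traffic": protocol -1 means every protocol and port.
        return {"IpProtocol": "-1", "PrefixListIds": destination}
    # "All TCP": every TCP port.
    return {"IpProtocol": "tcp", "FromPort": 0, "ToPort": 65535, "PrefixListIds": destination}
```

The entry would be used as boto3.client("ec2").authorize_security_group_egress(GroupId=demo_sg_id, IpPermissions=[s3_egress_permission(prefix_list_id)]). Because S3 requests use HTTPS, a tcp rule with FromPort and ToPort set to 443 would be a narrower alternative.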

This completes the setup in both accounts.

Test the solution

To validate the solution, open a terminal on the SageMaker notebook instance and run the following commands to list and retrieve objects through the access point.

To list the objects through S3 access point test-ap-1:
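From a Python cell or script on the notebook instance, the same listing could be done with Boto3 by passing the access point ARN where a bucket name would normally go. This sketch assumes Boto3 is available in the notebook environment and that the access point is in us-east-1; the function names are hypothetical.

```python
def keys_from_pages(pages) -> list:
    """Collect object keys from ListObjectsV2 response pages."""
    return [obj["Key"] for page in pages for obj in page.get("Contents", [])]


def list_keys_via_access_point(s3_client, access_point_arn: str, prefix: str = "") -> list:
    """List object keys through an S3 access point, following pagination."""
    paginator = s3_client.get_paginator("list_objects_v2")
    # The access point ARN takes the place of the bucket name.
    pages = paginator.paginate(Bucket=access_point_arn, Prefix=prefix)
    return keys_from_pages(pages)
```

For example, create the client with boto3.client("s3", region_name="us-east-1") and call list_keys_via_access_point(s3, "arn:aws:s3:us-east-1:222222222222:accesspoint/test-ap-1"). Creating the client in the access point's Region keeps the request and the ARN Region consistent.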

To retrieve the objects through S3 access point test-ap-1:
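Similarly, a Boto3 sketch for retrieving a single object through the access point reads the streaming body returned by get_object. The object key is whatever you uploaded to test-bucket-1, and the function name is hypothetical.

```python
def read_object_via_access_point(s3_client, access_point_arn: str, key: str) -> bytes:
    """Fetch one object's bytes through an S3 access point."""
    # As with listing, the access point ARN is passed in place of the bucket name.
    response = s3_client.get_object(Bucket=access_point_arn, Key=key)
    return response["Body"].read()
```

With the same client and ARN used for listing, read_object_via_access_point(s3, ap_arn, key) returns the object's contents as bytes. An AccessDenied error at this point usually means one of the policies in the chain (identity, endpoint, access point, or bucket) does not allow the request.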

Clean up

When you’re done testing, delete the S3 access points, S3 buckets, and SageMaker notebook instances you created to stop incurring charges.

Conclusion

In this post, we showed how S3 Access Points enable cross-account access to large, shared datasets from SageMaker notebook instances, avoiding the size limits of bucket policies while managing access to shared datasets at scale.

To learn more, refer to Easily Manage Shared Data Sets with Amazon S3 Access Points.

About the authors

Kiran Khambete is a Senior Technical Account Manager at Amazon Web Services (AWS). As a TAM, Kiran serves as a technical expert and strategic guide, helping enterprise customers achieve their business goals.

Ankit Soni, who has 14 years of experience, is a Principal Engineer at NatWest Group, where he has served as a Cloud Infrastructure Architect for the past six years.

Kesaraju Sai Sandeep is a Cloud Engineer specializing in Big Data Services at AWS.

AWS Machine Learning Blog