In Part 1 of this series, you learned about Amazon DynamoDB data loading strategies and the behavior of DynamoDB during short runs. In this post, you learn about query performance and the adaptive behavior of DynamoDB during sustained activity.

Querying

To drive traffic at arbitrarily large rates and simulate real-world behavior, we need a number of multi-threaded clients that each make query calls as fast as possible, sending randomly created IP addresses. These clients consume whatever query capacity the table can provide; the rest is throttled. To enable this, I created an Auto Scaling group of Amazon Elastic Compute Cloud (Amazon EC2) instances, each running the same simple query client.
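The client used for these tests isn’t shown in this post, but a minimal sketch of the idea in Python might look like the following. The names `random_ip`, `run_client`, and the injected `query_fn` are hypothetical; in a real run, `query_fn` would wrap a DynamoDB query call against the table from Part 1.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor


def random_ip(rng: random.Random) -> str:
    """Return a randomly created dotted-quad IPv4 address."""
    return ".".join(str(rng.randint(0, 255)) for _ in range(4))


def run_client(query_fn, threads: int = 8, duration_s: float = 1.0) -> int:
    """Call query_fn(ip) from `threads` workers as fast as possible.

    query_fn is a hypothetical stand-in for the real DynamoDB query.
    Returns the total number of calls made across all workers.
    """
    deadline = time.monotonic() + duration_s

    def worker(seed: int) -> int:
        rng = random.Random(seed)  # one generator per worker thread
        calls = 0
        while time.monotonic() < deadline:
            query_fn(random_ip(rng))
            calls += 1
        return calls

    with ThreadPoolExecutor(max_workers=threads) as pool:
        return sum(pool.map(worker, range(threads)))
```

Running many copies of a loop like this across a fleet of instances is what lets the offered load exceed whatever the table can currently serve.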

Querying test: On-demand table, one partition key value

For our first test, we’ll query against an on-demand table with data loaded using a single-valued partition key design. We saw earlier that this use of a single-value partition key slowed our load speed. Now let’s examine what it does to our query speed.

You might expect a steady ongoing rate of 6,000 reads per second: All the data is in one partition, each partition has a maximum read rate of 3,000 read units per second, and each of our eventually consistent (EC) queries consumes 0.5 read units. Therefore, math says we’ll achieve 6,000 query lookups per second against one very hot, throttling partition. The actual result is nothing like that.

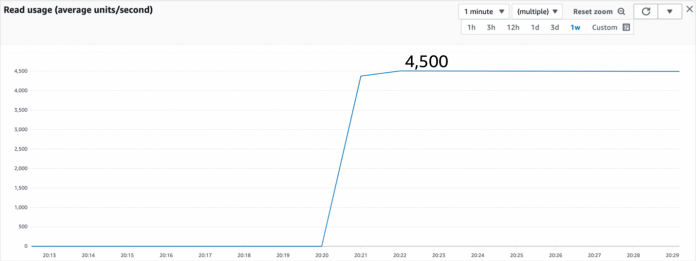

Figure 1 shows what we see at the start of the testing and for the first 10 minutes.

Figure 1: Query test of one on-demand table with one partition key value

As Figure 1 shows, the query is consuming 4,500 read units, which is 9,000 of our EC queries per second. We’re exceeding expectations. Here’s what’s going on: Every partition has its data spread across three nodes for redundancy—a leader node that takes all writes, and two follower nodes that follow quickly behind. A strongly consistent (SC) read always goes to the leader node to get the latest data. The leader node can handle 3,000 reads per second, which is why a partition can handle 3,000 SC reads per second. An EC read can go to any of the three nodes. When all three nodes are active, a partition can theoretically handle 9,000 reads per second, which is what we find here. There will be times during normal operation when one node out of the three might be down for short durations, such as for internal maintenance, or possibly replaced with a log replica that can’t answer queries. During those times, the partition is only able to handle 6,000 EC reads per second, and that would still be considered normal behavior. This is why you should design assuming a partition can maintain 6,000 EC reads per second even though you will sometimes run at 9,000 EC reads per second.
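The arithmetic behind those numbers can be written out as a small Python sketch. The constants come from the text above; the function names are only illustrative.

```python
READS_PER_NODE = 3_000  # reads per second a single node can serve (from the text)
EC_READ_COST = 0.5      # read units consumed by each of our EC queries


def ec_reads_per_second(active_nodes: int) -> int:
    """EC reads per second one partition can serve with this many active nodes."""
    return active_nodes * READS_PER_NODE


def read_units_consumed(ec_reads: int) -> float:
    """Read units consumed per second by that many EC queries."""
    return ec_reads * EC_READ_COST


# Three active nodes: 9,000 EC reads per second, which is 4,500 read units.
# Two active nodes:   6,000 EC reads per second, which is 3,000 read units.
```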

Continuing the query barrage, after 10 minutes we get a jump in throughput, as shown in Figure 2 that follows.

Figure 2: Continuing the test shows a doubling in throughput

The throughput exactly doubled. DynamoDB noticed the single hot partition and decided to split it into two new partitions, doubling the throughput capacity (and at no additional cost).

DynamoDB has a feature called adaptive capacity that, among other things, can isolate frequently accessed items. Colloquially, this isolating capability is called split for heat. When DynamoDB observes a partition receiving sustained high read or write throughput, it might split the partition into two new partitions, each holding a subset of the items from the original partition. This doubles the read and write capacity available to these items.

The split for heat logic chooses the sort key split point based on recent traffic patterns, aiming to spread the heat evenly across the two new partitions. The split point is rarely in the exact center. With the IP address use case, the split would aim to separate IP ranges that were seeing the most queries.
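DynamoDB’s actual split logic isn’t public, so the following Python sketch is only a toy illustration of what "spreading the heat evenly" could mean. It assumes we have request counts for consecutive sort key ranges (a made-up input) and picks the boundary that best balances the two sides. The function name `balanced_split_index` is hypothetical.

```python
from itertools import accumulate


def balanced_split_index(heat: list[int]) -> int:
    """Return i so that sum(heat[:i]) and sum(heat[i:]) are as close as possible.

    `heat` holds recent request counts for consecutive sort key ranges,
    listed in sort key order. This is a toy model, not DynamoDB's algorithm.
    """
    if len(heat) < 2:
        raise ValueError("need at least two ranges to split")
    total = sum(heat)
    running = list(accumulate(heat))  # running[i - 1] == sum(heat[:i])
    return min(range(1, len(heat)), key=lambda i: abs(2 * running[i - 1] - total))
```

With heat concentrated at the high end, for example `[5, 5, 5, 5, 40, 40]`, the chosen boundary lands well past the middle of the key range, which matches the observation that the split point is rarely in the exact center.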

When choosing a split point, DynamoDB has to consider whether the table has a local secondary index (LSI). If it does, then the split point can only be between item collections (items sharing the same partition key). If it does not have an LSI, then DynamoDB has the option to split within an item collection using a sort key value as part of the split point location. This means that items with the same partition key might be assigned to different partitions according to their sort key values. The hash of the partition key provides the first part of the partition placement and the value of the sort key further refines the placement. Notice that if our IP table had been constructed with an LSI, split for heat could not have improved our query performance here, because our single item collection could not have been split further.

Split for heat applies to reads and writes, and applies any time high traffic is sustained. In fact, when loading the randomized CSV file with a single partition key back in Part 1, I observed partition splitting near the end of the load. While I appreciate DynamoDB’s ability to adapt, I didn’t want a split when benchmarking because it would have unduly influenced the query tests by starting them with more partitions. To keep the split from happening, I chose to load from the sequential CSV file. That prevented a split because DynamoDB’s split for heat logic can detect a steadily increasing line of heat (such as a single partition key with an always increasing sort key value) and will not initiate a split, because it knows that if it did, all the new updates after the split would go to the second partition anyway.

Back to the test. After a while, we find the throughput doubles again, as shown in Figure 3 that follows.

Figure 3: Continuing the test shows another doubling

At this point, the two partitions with data have both split and now four partitions are handling the queries, doubling the maximum rate.
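As a rough model of this doubling, the Python sketch below computes the read ceiling after a number of split rounds. It is idealized: it assumes every partition splits in each round, that the heat divides evenly, and that all three nodes of each partition are serving EC reads. The function name is illustrative.

```python
PARTITION_EC_READ_UNITS = 4_500  # per-partition read units seen with three active nodes


def read_unit_ceiling(split_rounds: int, starting_partitions: int = 1) -> int:
    """Idealized read units per second after `split_rounds` rounds of splitting."""
    partitions = starting_partitions * 2 ** split_rounds
    return partitions * PARTITION_EC_READ_UNITS


# One partition:   4,500 read units
# After one split: 9,000 read units
# After two:       18,000 read units
```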

After running for a total of 90 minutes under steady load, the graph shows the pattern in Figure 4 that follows.

Figure 4: Query performance over a 90-minute run

There’s a lot to notice in Figure 4. The initial read rates were limited by partition capacity limits, which caused split for heat to repeatedly add partitions to increase capacity. After about an hour, the partitions had split to such a degree that no partition was near its limit.

Sometimes, as part of the split process, there’s a temporary dip in the query rate, which is a reminder to design for 6,000 EC reads per second even while sometimes observing 9,000. You should also expect about one second of Internal Server Error responses for any writes (or strongly consistent reads) to a partition at the moment of cutover from old to new, as partition leadership moves to a new leader node.

Skipping the middle part for now, during the last 30 minutes of the test, the throughput eventually became limited at 120,000 RCUs by the table-level read throughput limit. Every account has a limit on how much provisioned read capacity can be granted to a table (there’s another limit for write). Implicitly, it’s also used to control the maximum throughput of on-demand tables. The default is 40,000 read units in most AWS Regions. Before testing, I increased the test account’s table-level read throughput limit to 80,000 read units, because it gave us more time to observe the splitting and doubling before the table limit came into effect. A table with 80,000 read units running EC queries can achieve up to 120,000 read units steady state, thanks to the 50 percent boost discussed above.

Now let’s discuss that odd peak in the middle. It’s possible for throughput to go above the table’s limit for a short duration thanks to burst capacity, another feature of adaptive capacity that allows a table to borrow capacity (on a best effort basis) to provide temporary capacity above the table capacity limit. That’s what creates that large peak to 220,000 read units (440,000 queries per second). The partitions had split to the point they weren’t a bottleneck anymore, and the burst capacity started being provided and consumed.

The burst capacity allowed is finite (sized to the equivalent of 5 minutes at maximum capacity, which for this table would be 80,000*300 = 24,000,000 read units), after which traffic is limited based on the table-level read throughput limit.
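The sizing above can be expressed as a short Python sketch. The bucket size follows the text directly. The drain-time function assumes a simple token-bucket accounting, which is my simplification for illustration and not a documented DynamoDB formula.

```python
BURST_WINDOW_S = 300  # five minutes of capacity, per the text


def burst_bucket(table_limit: int) -> int:
    """Read units of burst capacity a table at this limit can accumulate."""
    return table_limit * BURST_WINDOW_S


def seconds_of_burst(table_limit: int, consumed_rate: int) -> float:
    """Seconds a full bucket lasts while consuming above the limit.

    Assumes a plain token bucket: only the excess over the limit drains it.
    """
    excess = consumed_rate - table_limit
    if excess <= 0:
        return float("inf")  # at or below the limit, the bucket never drains
    return burst_bucket(table_limit) / excess
```

Under that assumption, a table running at twice its limit would use up a full bucket in five minutes, and a table running only slightly over its limit could burst for much longer.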

If we had let the query load lighten below the limit, the burst capacity would accumulate again for later use, but the query rate was always kept at the maximum the table could support, so the line continues onward flat, hovering at the table-level read throughput limit.

If we were doing SC reads, we’d be limited to exactly 80,000 reads per second. Because we’re doing EC reads, we have an additional 50 percent, allowing us to run at 120,000 read units per second ongoing, just as the chart shows.
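To keep read units and queries per second straight, here is the conversion as a small Python sketch. The 50 percent allowance and the 0.5 read unit cost per query come from the text; the function names are illustrative.

```python
EC_READ_COST = 0.5  # read units consumed by each of our EC queries


def ec_sustained_read_units(table_limit: int) -> int:
    """Steady-state read units for EC reads: the table limit plus 50 percent."""
    return table_limit * 3 // 2


def ec_queries_per_second(read_units: int) -> int:
    """EC queries per second that a given read unit rate represents."""
    return round(read_units / EC_READ_COST)


# 80,000 limit             -> 120,000 read units sustained with EC reads
# 120,000 read units       -> 240,000 queries per second
# 220,000 read units, peak -> 440,000 queries per second
```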

What’s interesting here is that even with no special planning and only one partition key value (against best practice advice), the infrastructure could handle 440,000 queries per second after an hour and we were only throttled by account-related quotas.

Querying test: On-demand table, multiple partition key values

For our second test, let’s follow best practice advice and use multiple partition key values. We start the process again with a new on-demand table, as shown in Figure 5 that follows. What do we expect?

Figure 5: Query test using 200+ partition key values

Right away, we achieve a higher throughput at around 15,000 read units per second (30,000 queries). This is four times our previous starting point. That makes sense because we’re using all four partitions present in a newly created on-demand table. As we keep traffic high, the partitions split and split again, as shown in Figure 6 that follows.

Figure 6: Partition splits allow for higher throughput

It’s the same pattern as with one partition key, but starting with four split-ready partitions instead of one.

The final graph looks very much like the first, but the peak came after 45 minutes instead of an hour, as shown in Figure 7 that follows.

Figure 7: Throughput over an hour of allowing the query to run

The takeaway here is that it’s better to have good dispersion of partition keys. The table with 200+ partition key values scaled out faster than the table with only one.

Another takeaway is that, due to adaptive capacity, when benchmarking DynamoDB, don’t assume that what you see in the first 5 minutes is what you’ll see after an hour!

Querying test: A million requests per second

Let’s conclude with a test aiming for a million requests per second.

For this, we provision a table with 500,000 read units (this requires raising the default account quotas). That should be more than enough for a million queries per second. We can then either leave the table provisioned or switch to on-demand after creation. On-demand tables don’t have a provisioned size signal to guide them, so they often split partitions more aggressively than provisioned tables. We’ll stay with provisioned for our testing, however. One reason is that it gives us a nice red line showing the provisioned throughput of 500,000 read units, which is essentially our million requests-per-second target.

The following figure shows what we observe for the first 15 minutes.

Figure 8: Initial read traffic with 200+ partition key values, against a table provisioned at 500,000 RCUs

As Figure 8 shows, the table initially achieved about 225,000 read units, or 450,000 queries per second. Throughput wasn’t throttled at the table level, so we can deduce there were some hot partitions limiting our read throughput. Should we find a better mechanism for spreading the data across more partition key values and more evenly across the table’s partitions? Ideally, yes. But let’s test what happens if we don’t.

Figure 9: Read traffic doubles after 15 minutes

Figure 9 shows that split for heat has doubled our throughput after 15 minutes, and 470,000 RCUs consumed means we’re achieving 940,000 eventually consistent queries per second. We’re almost at the million queries per second mark. An hour more and we’ve generated the overall traffic pattern shown in Figure 10 that follows.

Figure 10: Read traffic across 90 minutes, achieving a steady 1,440,000 queries per second

That’s a steady state of almost 1.5 million queries per second. It only stopped there as a result of the table-level read throughput limit.

Oh, and at what latency? We can see that in Amazon CloudWatch metrics, shown in Figure 11 that follows.

Figure 11: CloudWatch metrics for query latency

CloudWatch reports a steady average of 1.72–1.88 milliseconds per query, even when running more than a million queries per second. That’s consistent performance at scale.
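If you want to pull the same kind of numbers programmatically, a sketch along these lines should work with the AWS SDK for Python. I’m assuming the chart is based on the `SuccessfulRequestLatency` metric for the `Query` operation, which the post doesn’t state explicitly, and the helper names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def latency_request(table_name: str, minutes: int = 90, now=None) -> dict:
    """Build get_metric_statistics parameters for average Query latency."""
    end = now or datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/DynamoDB",
        "MetricName": "SuccessfulRequestLatency",  # assumed metric, in milliseconds
        "Dimensions": [
            {"Name": "TableName", "Value": table_name},
            {"Name": "Operation", "Value": "Query"},
        ],
        "StartTime": end - timedelta(minutes=minutes),
        "EndTime": end,
        "Period": 60,  # one datapoint per minute
        "Statistics": ["Average"],
    }


def fetch_average_latency_ms(table_name: str) -> list[float]:
    """Return per-minute average latency values, oldest first."""
    import boto3  # imported lazily so latency_request works without the SDK

    cloudwatch = boto3.client("cloudwatch")
    response = cloudwatch.get_metric_statistics(**latency_request(table_name))
    points = sorted(response["Datapoints"], key=lambda p: p["Timestamp"])
    return [p["Average"] for p in points]
```

Calling `fetch_average_latency_ms` requires AWS credentials and a real table name; the request builder on its own can be inspected or unit tested offline.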

Query test: Summary

DynamoDB might split a partition if that partition receives sustained read or write traffic. This doubles the throughput available to the items in that partition. The split point is calculated to be ideal based on recent traffic patterns. If the table has an LSI present, the split point can only be between item collections.

Traffic to a provisioned table might be throttled by either its read or write capacity settings. An on-demand table has an implicit maximum provisioned capacity based on the table-level read and write throughput limits. These limits can be raised.

Burst capacity allows traffic to exceed the table’s limit for short bursts.

Using high-cardinality partition keys enables smooth assignment of items to partitions and allows all partitions to contribute to the table’s throughput. Using low-cardinality partition keys can create uneven workloads across partitions. In these situations, split for heat can be especially useful. However, split for heat can’t split within an item collection if an LSI is present, and it won’t split at all when a split is determined not to be beneficial, such as when writing an ever-increasing sort key value.

Continue to Part 3 for a bookmarkable reference guide covering DynamoDB scaling behaviors described in this post and in Part 1 and for best practice tips.

About the authors

Jason Hunter is a California-based Principal Solutions Architect specializing in DynamoDB. He’s been working with NoSQL databases since 2003. He’s known for his contributions to Java, open source, and XML.

Vivek Natarajan is a CS major at Purdue and a Solutions Architect intern at AWS.
