The general rule with Amazon DynamoDB is to choose a high cardinality partition key. But why; and what happens if you don’t? Inspired by a customer use case, we dive deep into this question and explore the performance of loading and querying DynamoDB using different partition key designs and table settings.

After each experiment, we look at the generated performance graph, explain the patterns we see, and—through repeated improving iterations—show you the fundamentals of DynamoDB internals and best practices for writing performant applications. We cover table partitions, partition key values, hot partitions, split for heat, burst capacity, and table-level throughput limits.

This three-part series starts by presenting the problem we’ll be exploring and looking at data loading strategies and the behavior of DynamoDB during short-duration runs. Part 2 covers query performance and the adaptive behavior of DynamoDB during sustained activity. The series concludes with Part 3, which is a summary of learnings and best practices.

AWS estimated that the solution we’re about to explore would not only be massively scalable, but would cost about $0.18 to load the items, $0.05 per month ongoing in storage, and with fully flexible on-demand mode they could do eight million lookups for $1. We’ll start with a slow load and by the end, we’ll be processing millions of requests per second with an average latency under 2 milliseconds.

Customer use case: IP address lookup

This post was inspired by a customer question about the importance of high cardinality partition keys. Many DynamoDB use cases have a natural and obvious high cardinality partition key (such as a customer ID or asset ID). Not this case. Accenture Federal Services contacted us and said they wanted to design an IP address metadata lookup service. Their dataset consisted of hundreds of thousands of IP address ranges, each range having a start address (such as 192.168.0.0), an end address (such as 192.168.10.255), and associated metadata (such as owner, country, security rules to apply, and so on). Their query needed to accept an IP address, find the range containing it, and return the metadata.

Accenture Federal Services wanted to know:

How well DynamoDB would work for this lookup service.

What table design would work best.

What design would be most efficient in terms of runtime, cost, and scalability.

What would be the theoretical maximum lookups per second DynamoDB could achieve.

They were also concerned that their use case didn’t seem like a classic DynamoDB use case, because there was no obvious partition key. They wanted to know if that would limit performance.

In this post, we’ll work through the problem and answer these questions.

The lookup algorithm

Before doing any table design, it’s important to have the base algorithm. It must accept an IP address and efficiently locate the range containing the address.

A DynamoDB table schema has a partition key and an optional sort key. Additional attributes might be present but they aren’t indexed (unless placed in a secondary index).

If you’re not already familiar with partition keys and sort keys, you might want to first learn the DynamoDB fundamentals. If you prefer video, I suggest DynamoDB: Its purpose, main features, and key concepts and DynamoDB: Under the hood, managing throughput, advanced design patterns.

One way to pull data from DynamoDB is to perform a Query operation. A Query can do many things, one of which is retrieve items where the partition key is specified exactly and the sort key is specified with an inequality (where partition key equals X and sort key does not equal Y). We can use a Query to look up an IP from an IP range as long as two things are true of the data:

The IP ranges in the dataset never overlap. This is inherently true, otherwise the dataset would be ambiguous (because the same IP would have multiple metadata entries).

The IP ranges fully describe all IP addresses, with no gaps. This isn’t always true, because some IP ranges are specially reserved and don’t have metadata, however synthetic ranges can fill in the gaps with a payload indicating there’s no metadata available, to make this assumption true.

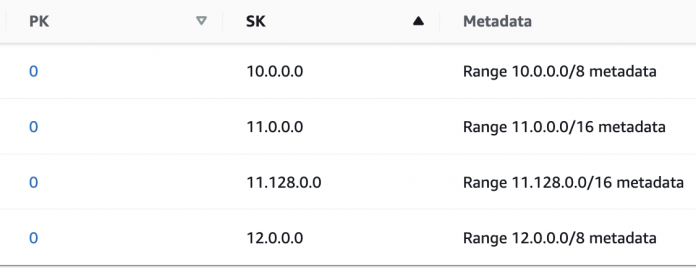

Each IP range can be stored as an item (row) within DynamoDB. For now, let’s assume a single fixed partition key value (one that’s the same for all items) and a sort key value equal to the start IP address of the range. Figure 1 that follows shows the data model. (We’ll improve this data model later.)

Figure 1: Data model using a fixed partition key

The query can then find items where the partition key equals 0 and sort key is less than or equal to the lookup IP address by scanning backward and limiting to one result. The first item that matches is the metadata to return. The end range value doesn’t have to be considered, because we know there are no overlaps or gaps. The following is the Python code for an AWS Lambda function showing a test run:

If you’re struggling to see how this algorithm works, it might help to visualize it. Picture a fence in the countryside with lots of fence posts placed at random intervals. You want to find which segment of the fence corresponds to any particular distance you might walk along the fence. To solve this, you start by walking out that distance, then walk backward until you encounter a fence post. On that fence post is all the metadata about that segment. Each fence post describes the segment following it. The fence post on the other side holds the metadata about the segment following it. If there’s a gap in the fence, the fence post right before the gap says gap here.

But wait, just one partition key value makes the query simple but goes directly against DynamoDB best practices of having high cardinality among partition key values. Let’s test the implications of this.

The data format

To run a test, we must first consider the actual data format. The source data is in a CSV file, one IP range per line, no gaps, no overlaps. Each line has start and end IP values as well as metadata. The following is a mock example with whitespace added for readability:

It’s tempting to use the start IP string as the sort key, but it’s dangerous to sort on strings as if they’re numbers. You’ll find .100 comes between .1 and .2. One solution is to zero-pad the values so 1.0.32.0 becomes 001.000.032.000. If every number is always three digits, strings and numbers sort identically.

There’s a better way: Convert each IP address to its natural numeric form. IP addresses are almost always written in a dotted-quad format such as 1.0.32.0, but this is just a human-friendly serialization. Fundamentally, an IP address (IPv4 anyway) is a single 32-bit value.

The IP address 1.0.32.0 when serialized to binary (but keeping the periods for readability) is 00000001.00000000.00100000.00000000, which if conveyed as a single decimal is 16,785,408. That’s the numbering model we use for the sort key because it’s simple and efficient for the inequality operation, plus it’s compact in storage.

Figure 2 that follows shows a snippet of what the table is going to look like after converting the IP addresses to their numeric values.

Figure 2: Table showing IP addresses converted to their numeric values

Loading

Now we’re ready to begin testing, starting with load performance. We load the CSV file using a simple looping, single-threaded Python script. Note that all test results were gathered from the us-east-1 Region.

Loading test: On-demand table, sequential CSV, using a single partition key

As a first test, let’s perform a bulk load against a newly created on-demand table. We load from the CSV file and have our Python code assign the same partition key for all items and convert IP range strings to number values. Note the CSV file data is sorted by IP range, with lower IPs at the start. This kind of ordering is common with CSV files, which will be important later in the experiment. Figure 3 that follows shows the results.

Figure 3: Results of the first load test: on-demand table, sequential CSV, single partition key

As shown in Figure 3, the first load test performs at a steady rate of 1,000 write units per second. The rest of the write requests are throttled.

Here’s what’s going on behind the scenes: Every DynamoDB table is distributed across some number of physical partitions. Each physical partition can support 3,000 read units per second and 1,000 write units per second. By using just one partition key value, all writes are being sent to one partition, and that’s created a bottleneck.

This doesn’t imply that new on-demand tables only allocate one partition. On-demand tables continuously adapt to live traffic and newly created on-demand tables are documented as able to serve up to 4,000 write request units and 12,000 read request units. For more information, see Read/write capacity mode.

Those throughput capabilities align exactly with what a table having four partitions would do. So even with four partitions available, because we’re using a single partition key value, all traffic is being allocated to one of those partitions, leaving the other three partitions inactive.

A quick refresher on how data gets assigned to partitions: Each partition is responsible for a subset of the table’s key space, akin to number ranges on a very large number line. The partition key value is hashed, which converts it to a number, and the partition whose range includes that number gets the read or write for that partition key value. If the partition key values are always the same, the same partition generally gets all the reads and writes. Note that different partition keys can hash to the same general area of the number range and, if so, will be colocated in the partition handling that range. A partition can split, moving its items to two new partitions and introducing a new split point on the number range. Partitions can also split within an item collection (among items having the same partition key value) in which case the sort key is considered in calculating the split point.

We’ll see this apply soon, but the takeaway is that using a single partition key value will initially place all the items into the same partition, which can greatly throttle writes.

Loading test: On-demand table, sequential CSV, and multiple partition keys

We can speed up the load if we spread the data across more partition key values. Perhaps we pick the partition key value that’s the first part of the IP address. For example, 192.168.0.0 gets a partition key of 192. That spreads the writes across 200+ partition key values and should spread the work more evenly across partitions.

We have to adjust our query to specify the correct partition key based on the IP range being looked up, and our loader to ensure that no range crosses partition key boundaries, because if they do then the core logic fails. The new table design looks like Figure 4 that follows.

Figure 4: Table design showing distinct partition keys for each /8 address range

Figure 5 that follows shows the performance during loading.

Figure 5: Results of second load test: Now with many partition keys

The load improved to about 1,250 writes per second. Why not more and why isn’t the work distributed better across the partitions? It’s because the CSV file is sequential. All the thousands of ranges for each partition key value are going in one right after the other. One partition takes all the traffic for a while, then another, then another. It’s not well spread. The only improvement comes when the partition key value switches and a new partition gets its turn as the throttling partition.

The takeaway here is that sequential CSV loads don’t spread traffic well.

Loading test: On-demand table, random CSV, and multiple partition keys

If we randomize the order of the CSV file entries (either by adjusting the file or doing a shuffle as an internal task within the Python loader), we can hope to spread the load more evenly and get a performance boost. Figure 6 that follows shows our test result.

Figure 6: Results of third load test: Now with a line-randomized CSV file

This test finished in under 2 minutes. The first and last timings are partial minutes about which we can’t infer a per-second rate. During the fully-measured central minute, it reached about 3,600 writes per second. This matches well with four partitions being well utilized. The simple act of randomizing the data write order greatly improved the write throughput.

For these reasons, you should randomize CSV loads whenever the CSV file has rows sorted by (and thus grouped by) partition key value.

It’s also useful to randomize the source data before loading if the source data came from a Scan of another DynamoDB table, because the items returned by the Scan are naturally grouped by partition key hash values and thus would create a steady line of heat during the load.

Loading test: Provisioned table, random CSV, and multiple partition keys

All testing so far has been on newly created on-demand tables. Let’s test a table provisioned at 10,000 write capacity units (WCUs) (to keep things simple, we won’t turn on auto scaling,). We’ll keep using the randomized CSV file. Figure 7 that follows shows what we observe.

Figure 7: Results of fourth load test: Now using a table provisioned at 10,000 WCUs

The load completed in less than a minute. All the activity was gathered in a sub-minute data point with an average of 6,000 writes per second, meaning the peak during that minute was well above that.

A newly provisioned table with 10,000 WCUs has more partitions than a new on-demand table (it needs at least 10 partitions to handle that write load), and by having a lot of partition key values spread across those extra partitions, we were able to improve performance.

Does that mean provisioned is better than on-demand? No, because on-demand quickly adjusts and grows capacity and partitions over time based on what traffic it accepts. It’s just that the default size of an on-demand table is below 10,000 WCUs. If you expect that a table will receive a high traffic load from the beginning, it’s a good idea to pre-warm a new on-demand table with a specified initial capacity by first creating the table as provisioned then switching it to on-demand.

Loading test: Summary

The maximum rate of load depends on the number of physical partitions, the number of partition key values, and the ability of the load to parallelize the work across the partitions. Having more partitions tends to increase load rate, but more partitions is most beneficial when there are enough partition key values to spread the work across the partitions and the loading logic also spreads the work across the partition key values.

In Part 2, we’ll explore query performance and the adaptive behavior DynamoDB exhibits during sustained activity.

Conclusion

In this three-part series, you learned about DynamoDB internals by reviewing the results of testing the performance of loading and querying using different partition key designs. We discussed table partitions, partition key values, hot partitions, split for heat, burst capacity, and table-level throughput limits.

As always, you are welcome to leave questions or feedback in the comments.

About the authors

Jason Hunter is a California-based Principal Solutions Architect specializing in DynamoDB. He’s been working with NoSQL Databases since 2003. He’s known for his contributions to Java, open source, and XML.

Vivek Natarajan is a CS major at Purdue and a Solutions Architect intern at AWS.

Read MoreAWS Database Blog