Running a lot of genomic pipelines can be challenging, and adding GPUs to the solution makes it harder. In this blog, we present an end-to-end solution for running multiple GPU jobs with Batch. Because Nvidia Clara Parabricks is available in the Google Cloud Platform Marketplace, you can easily spin up a single VM with the Parabricks tools to help with your genomics pipeline workloads. A Parabricks VM lets you run individual workflows manually. In a lab or healthcare-institute environment, however, you need a scale-out solution with deployment automation to run analyses in parallel. Creating multiple Parabricks VMs is not the answer either, because there is no central job management across VMs created from the Marketplace. Batch addresses this problem. Many genomic pipelines rely on HPC solutions and tooling such as Parabricks. We are going to discuss how and why to run Nvidia Clara Parabricks on Batch at scale.

Product Summary:

Batch

Fully managed batch service to schedule, queue, and execute batch jobs on Google’s infrastructure. Batch provisions and autoscales capacity for you, eliminating the need to manage third-party solutions. It is natively integrated with Google Cloud to run, scale, and monitor workloads.

Nvidia Clara Parabricks Pipelines

Parabricks is a software suite for performing secondary analysis of next-generation sequencing (NGS) DNA and RNA data. A major benefit of Parabricks is that it is designed to deliver results at very high speed and low cost: it can analyze a 30x whole human genome (WGS) in about 45 minutes, compared to about 30 hours with commonly used software. Better still, the output results exactly match those of the commonly used software, so it is fairly simple to verify the accuracy of the output.

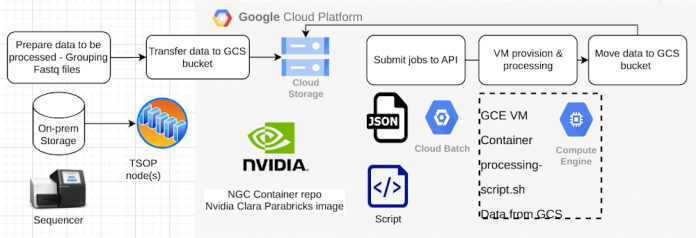

Processing flow:

Provided the data is ready in a Google Cloud Storage bucket, you can submit jobs to the Batch API. You only need to manage two files:

Batch JSON file: defines everything about the infrastructure, including machine type, number of GPUs, container image, persistent disk, GCS bucket (data) location, run script location, and commands.

Bash script: runs within the Docker container and contains all the steps to be run.

Make sure the Batch API is enabled and that you have the IAM roles and permissions required to submit the job.
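A minimal sketch of these prerequisites with the gcloud CLI, assuming you are allowed to modify IAM policy on the service project. The member strings are placeholders you supply, and the role choices are assumptions based on Batch's predefined roles: `roles/batch.jobsEditor` for whoever submits jobs and `roles/batch.agentReporter` for the service account the job VMs run as. Your setup may need more than this (for example, permission to act as that service account), so check it against your organization's policy. `enable_batch_prereqs` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: enable the Batch API and grant the roles needed to submit jobs.
# Assumption: the member values and role choices below fit your environment.

enable_batch_prereqs() {
  local project_id="$1"   # e.g. service-hpc-project2
  local submitter="$2"    # e.g. user:you@example.com (hypothetical)
  local vm_sa="$3"        # service account the job VMs run as (hypothetical)

  # Turn on the Batch API in the service project.
  gcloud services enable batch.googleapis.com --project="$project_id" || return 1

  # Let the submitting user create and manage Batch jobs.
  gcloud projects add-iam-policy-binding "$project_id" \
    --member="$submitter" --role="roles/batch.jobsEditor" || return 1

  # Let the job VMs report their state back to the Batch service.
  gcloud projects add-iam-policy-binding "$project_id" \
    --member="serviceAccount:${vm_sa}" --role="roles/batch.agentReporter"
}
```

For example: `enable_batch_prereqs service-hpc-project2 user:you@example.com batch-vm@service-hpc-project2.iam.gserviceaccount.com`, where both members are hypothetical.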

In the following example, we follow the tutorial steps from the Nvidia Clara Parabricks website:

https://docs.nvidia.com/clara/parabricks/3.8.0/Tutorials.html

Architecture and Infrastructure decisions:

Use a Compute Engine Persistent Disk: you can define both its size and its performance. Size can be up to 63TB per disk, and the performance tier can be pd-standard, pd-balanced, or pd-ssd.

Use Google Cloud Storage (GCS)

Scripts can be mounted to the Batch VM and the Docker container.

Input and output can be stored cost-effectively in GCS buckets.

For large datasets, copying data with gsutil may perform better than reading and writing through the mounted bucket.

Nvidia Clara Parabricks image: use the Parabricks container image as-is, without building a new one, so you don’t need to maintain a custom image.
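For the large-dataset case, here is a minimal sketch of staging data with `gsutil` instead of going through the Cloud Storage FUSE mount. It assumes `gsutil` is installed wherever it runs (the run script below installs the Cloud SDK inside the container) and uses the sample bucket and disk paths from this post; `stage_in` and `stage_out` are hypothetical helper names. The `-m` flag makes gsutil copy multiple files in parallel, which is typically where the gain comes from when transferring many files.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: copy data between GCS and the local persistent disk
# with gsutil rather than through the Cloud Storage FUSE mount.

BUCKET="${BUCKET:-gs://thomashk-test2}"   # sample bucket from this post
WORKDIR="${WORKDIR:-/mnt/data}"           # persistent disk mount path

# Copy everything under parabricks/input/ onto the local disk.
# The wildcard is quoted so that gsutil, not the shell, expands it.
stage_in() {
  gsutil -m cp -r "${BUCKET}/parabricks/input/*" "${WORKDIR}/"
}

# Copy the given local files or directories to parabricks/output/.
stage_out() {
  gsutil -m cp -r "$@" "${BUCKET}/parabricks/output/"
}
```

For example, `stage_out output.bam variants.vcf vcfqcbybam_output_dir` could take the place of the `cp` lines at the end of the run script.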

Sample code:

Here is a summary of the sample GCP environment, which includes a Shared VPC network use case:

GCP service project: service-hpc-project2

GCP shared VPC host project: host-hpc-project1

GCP network: test-network

GCP subnetwork: tier-1

GCS Bucket: thomashk-test2
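In the job config, the `network` and `subnetwork` fields are full resource paths in the Shared VPC host project, not bare names. The small sketch below composes those paths from the values above. The region `us-central1` comes from the sample JSON, and `network_path` and `subnetwork_path` are hypothetical helper names. Pass the network name that actually exists in your host project.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: build the Shared VPC resource paths used in
# allocationPolicy.network.networkInterfaces of the Batch job config.

HOST_PROJECT="host-hpc-project1"
REGION="us-central1"

# network_path NETWORK -> projects/<host>/global/networks/<NETWORK>
network_path() {
  printf 'projects/%s/global/networks/%s\n' "$HOST_PROJECT" "$1"
}

# subnetwork_path SUBNET -> projects/<host>/regions/<region>/subnetworks/<SUBNET>
subnetwork_path() {
  printf 'projects/%s/regions/%s/subnetworks/%s\n' "$HOST_PROJECT" "$REGION" "$1"
}
```

For example, `subnetwork_path tier-1` prints `projects/host-hpc-project1/regions/us-central1/subnetworks/tier-1`.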

Batch submission JSON example (cb-parabricks.json):

#Copyright 2023 Google. This software is provided as-is, without warranty or representation for any use or purpose. Your use of it is subject to your agreement with Google

```json
{
  "taskGroups": [
    {
      "taskSpec": {
        "runnables": [
          {
            "script": {
              "text": "distribution=$(. /etc/os-release;echo $ID$VERSION_ID); curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo apt-key add -; curl -s -L https://nvidia.github.io/nvidia-docker/$distribution/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list; sudo apt-get update && sudo apt-get install -y nvidia-container-toolkit; sudo systemctl restart docker"
            }
          },
          {
            "container": {
              "imageUri": "nvcr.io/nvidia/clara/clara-parabricks:4.0.0-1",
              "options": "--gpus all",
              "volumes": [
                "/mnt/data:/mnt/data",
                "/mnt/gcs/share:/mnt/gcs/share"
              ],
              "entrypoint": "/bin/sh",
              "commands": [
                "-c",
                "/mnt/gcs/share/parabricks/parabricks-run.sh"
              ]
            }
          }
        ],
        "volumes": [
          {
            "deviceName": "new-pd",
            "mountPath": "/mnt/data",
            "mountOptions": "rw,async"
          },
          {
            "gcs": {
              "remotePath": "thomashk-test2"
            },
            "mountPath": "/mnt/gcs/share"
          }
        ],
        "maxRetryCount": 8,
        "maxRunDuration": "86400s"
      },
      "taskCount": 1
    }
  ],
  "allocationPolicy": {
    "instances": [
      {
        "installGpuDrivers": true,
        "policy": {
          "machineType": "n1-standard-16",
          "disks": [
            {
              "newDisk": {
                "sizeGb": 250,
                "type": "pd-standard"
              },
              "deviceName": "new-pd"
            }
          ],
          "accelerators": [
            {
              "type": "nvidia-tesla-t4",
              "count": 2
            }
          ]
        }
      }
    ],
    "network": {
      "networkInterfaces": [
        {
          "network": "projects/host-hpc-project1/global/networks/shared-net",
          "subnetwork": "projects/host-hpc-project1/regions/us-central1/subnetworks/tier-1",
          "noExternalIpAddress": true
        }
      ]
    }
  },
  "labels": {
    "env": "experimental",
    "user": "thomashk"
  },
  "logsPolicy": {
    "destination": "CLOUD_LOGGING"
  }
}
```
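A config that does not parse is only reported when you submit it, so it can help to check the file first. JSON has no comment syntax, which means a check like this would also flag a `#` copyright line left at the top of the file. The sketch below assumes `python3` is available on the machine you submit from (it uses the standard library's `json.tool` module); `check_config` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical pre-submission check: fail early if the Batch config
# is not valid JSON.

check_config() {
  local config="$1"   # e.g. cb-parabricks.json
  if python3 -m json.tool "$config" > /dev/null 2>&1; then
    echo "OK: $config parses as JSON"
  else
    echo "ERROR: $config is not valid JSON" >&2
    return 1
  fi
}
```

For example, `check_config cb-parabricks.json && gcloud batch jobs submit parabricks-job-1 --config=cb-parabricks.json --location=us-central1`.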

This is the script that runs the pipeline steps inside the container (parabricks-run.sh):

```sh
#!/bin/sh
# Copyright 2022 Google. This software is provided as-is, without warranty or representation for any use or purpose. Your use of it is subject to your agreement with Google.
# Author: Thomas Leung
# This is the Parabricks run according to the sample run provided by Nvidia
# https://docs.nvidia.com/clara/parabricks/v3.6/text/getting_started.html#example-run
# This script is part of the Batch Parabricks run sample.

# Install the Google Cloud SDK for cloud operations
apt-get update
apt-get install -y curl
curl https://dl.google.com/dl/cloudsdk/release/google-cloud-sdk.tar.gz > /tmp/google-cloud-sdk.tar.gz
mkdir -p /usr/local/gcloud
tar -C /usr/local/gcloud -xvf /tmp/google-cloud-sdk.tar.gz
/usr/local/gcloud/google-cloud-sdk/install.sh
# Put gcloud and gsutil on the PATH for the rest of this script
export PATH="$PATH:/usr/local/gcloud/google-cloud-sdk/bin"

# Install wget
apt-get install -y wget

cd /mnt/data

# Download the dataset
wget -O parabricks_sample.tar.gz \
  "https://s3.amazonaws.com/parabricks.sample/parabricks_sample.tar.gz"
tar xvf parabricks_sample.tar.gz

# pbrun to get the BAM: fq2bam
pbrun fq2bam \
  --ref parabricks_sample/Ref/Homo_sapiens_assembly38.fasta \
  --in-fq parabricks_sample/Data/sample_1.fq.gz parabricks_sample/Data/sample_2.fq.gz \
  --out-bam output.bam

# pbrun to get the VCF: HaplotypeCaller
pbrun haplotypecaller \
  --ref parabricks_sample/Ref/Homo_sapiens_assembly38.fasta \
  --in-bam output.bam \
  --out-variants variants.vcf

# pbrun VCF QC by BAM
mkdir -p vcfqcbybam_output_dir
pbrun vcfqcbybam \
  --ref parabricks_sample/Ref/Homo_sapiens_assembly38.fasta \
  --in-vcf variants.vcf \
  --in-bam output.bam \
  --out-file vcfqcbybam_pileup.txt \
  --output-dir vcfqcbybam_output_dir

# Copy output to the output directory via gcsfuse. It is recommended to use gsutil to copy data out instead of gcsfuse.
cp output* /mnt/gcs/share/parabricks/output/.
cp *.vcf /mnt/gcs/share/parabricks/output/.
cp -r vcfqcbybam_output_dir /mnt/gcs/share/parabricks/output/.
```
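As written, the final `cp` commands run even if a `pbrun` step failed, so a broken run can still look finished. One option is to check that the expected outputs exist before copying, so that the task fails and Batch's `maxRetryCount` can retry it. The sketch below assumes you want that behavior. `require_outputs` is a hypothetical helper, and the file names are the ones the script above produces.

```bash
#!/usr/bin/env bash
# Hypothetical guard: return non-zero if any expected pipeline output is
# missing, so the task can fail instead of copying partial results.

require_outputs() {
  local path missing=0
  for path in "$@"; do
    if [ ! -e "$path" ]; then
      echo "missing output: $path" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

In the run script it could sit just before the `cp` lines, for example `require_outputs output.bam variants.vcf vcfqcbybam_output_dir || exit 1`.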

Store the script in the GCS bucket (gs://thomashk-test2):

```
$ gsutil ls gs://thomashk-test2/parabricks
gs://thomashk-test2/parabricks/
gs://thomashk-test2/parabricks/parabricks-run.sh
gs://thomashk-test2/parabricks/input/
gs://thomashk-test2/parabricks/output/
```
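The job config mounts the bucket `thomashk-test2` at `/mnt/gcs/share`, so each object in this listing appears inside the container under that mount path. This is why the container command points at `/mnt/gcs/share/parabricks/parabricks-run.sh`. The sketch below spells out that mapping. `to_mount_path` is a hypothetical helper, and the bucket and mount point are the sample values.

```bash
#!/usr/bin/env bash
# Hypothetical helper: translate a gs:// URI in the mounted bucket into the
# path the container sees through the Batch GCS volume.

BUCKET_NAME="thomashk-test2"   # gcs.remotePath in cb-parabricks.json
MOUNT_PATH="/mnt/gcs/share"    # mountPath of that volume

to_mount_path() {
  local uri="$1"
  local prefix="gs://${BUCKET_NAME}/"
  case "$uri" in
    # Strip the bucket prefix and re-root the rest under the mount path.
    "$prefix"*) printf '%s/%s\n' "$MOUNT_PATH" "${uri#"$prefix"}" ;;
    *) echo "not in gs://${BUCKET_NAME}: $uri" >&2; return 1 ;;
  esac
}
```

For example, `to_mount_path gs://thomashk-test2/parabricks/parabricks-run.sh` prints `/mnt/gcs/share/parabricks/parabricks-run.sh`.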

You can submit the job with the following command:

```sh
gcloud batch jobs submit parabricks-job-1 --config=cb-parabricks.json --location=us-central1
```
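Batch job names are unique within a project and location, so submitting `parabricks-job-1` a second time will be rejected while that job still exists. The sketch below derives a fresh name for each run from a sample label and a timestamp. `make_job_name` is a hypothetical helper. Its sanitizing rules (lowercase letters, digits, and hyphens, starting with a letter, at most 63 characters) are an assumption based on Batch's job-name requirements, so verify them before relying on them.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build a Batch job name such as
# parabricks-sample-1-20230101-120000 from a free-form sample label.

make_job_name() {
  local label="$1"
  local stamp="${2:-$(date +%Y%m%d-%H%M%S)}"   # optional fixed timestamp
  local name
  # Lowercase, then replace anything outside [a-z0-9-] with '-'.
  name=$(printf 'parabricks-%s-%s' "$label" "$stamp" \
    | tr '[:upper:]' '[:lower:]' | tr -c 'a-z0-9-' '-')
  # Keep at most 63 characters and drop any trailing hyphens.
  name="${name:0:63}"
  while [ "${name%-}" != "$name" ]; do name="${name%-}"; done
  printf '%s\n' "$name"
}
```

For example: `gcloud batch jobs submit "$(make_job_name sample-1)" --config=cb-parabricks.json --location=us-central1`.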

To check the job status:

```sh
gcloud batch jobs describe parabricks-job-1 --location=us-central1
```
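If you want a script to wait for a job, you can poll the job's `status.state` field instead of rerunning the describe command by hand. The sketch below assumes that `SUCCEEDED` and `FAILED` are the terminal states you care about. `wait_for_job` and its 60-second default interval are illustrative choices.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a Batch job until it reaches SUCCEEDED or FAILED.

wait_for_job() {
  local job="$1" location="${2:-us-central1}" interval="${3:-60}"
  local state
  while true; do
    # value(status.state) prints only the job's state, e.g. RUNNING.
    state=$(gcloud batch jobs describe "$job" --location="$location" \
      --format="value(status.state)") || return 1
    echo "$job: $state"
    case "$state" in
      SUCCEEDED) return 0 ;;
      FAILED) return 1 ;;
    esac
    sleep "$interval"
  done
}
```

For example, `wait_for_job parabricks-job-1 us-central1 120` checks every two minutes and returns a non-zero status if the job fails.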

With Batch and Parabricks, we can accelerate genomic runs with GPUs and operate at scale. You could also generate genomic run scripts automatically as data becomes available in storage, and use cron jobs to submit them to Batch without any manual interaction. Batch reports job status through the API, the gcloud command, and the web console.
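One way to automate this is to render a separate config for each sample and submit each one as its own job. The sketch below assumes a hypothetical template file `cb-parabricks.template.json` that contains a `@SAMPLE@` placeholder wherever a per-sample value is needed. It also assumes sample names are already valid job-name fragments (lowercase letters, digits, hyphens). `submit_samples` is a hypothetical helper, and detecting newly arrived data is left out.

```bash
#!/usr/bin/env bash
# Hypothetical scale-out helper: render one Batch config per sample from a
# template containing @SAMPLE@, then submit each as its own job.

TEMPLATE="${TEMPLATE:-cb-parabricks.template.json}"   # hypothetical template
LOCATION="${LOCATION:-us-central1}"

submit_samples() {
  local workdir sample config rc=0
  workdir="$(mktemp -d)"
  for sample in "$@"; do
    config="${workdir}/cb-parabricks-${sample}.json"
    # Replace every @SAMPLE@ in the template with this sample's name.
    sed "s/@SAMPLE@/${sample}/g" "$TEMPLATE" > "$config"
    # Stop at the first failed submission and remember its exit status.
    gcloud batch jobs submit "parabricks-${sample}" \
      --config="$config" --location="$LOCATION" || { rc=$?; break; }
  done
  rm -rf "$workdir"
  return "$rc"
}
```

A crontab entry could then run a wrapper script that calls, for example, `submit_samples sample-1 sample-2` with whatever sample names have arrived.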

Happy computing!

Cloud Blog