After the Nature article announcing that DeepMind had open-sourced AlphaFold v2.0 on GitHub, many in the scientific and research community have wanted to try DeepMind’s AlphaFold implementation firsthand. With GPU compute resources from Amazon Elastic Compute Cloud (Amazon EC2), you can quickly get AlphaFold running and try it out yourself.

In this post, I provide you with step-by-step instructions on how to install AlphaFold on an EC2 instance with Nvidia GPU.

Overview of solution

The process starts with a Deep Learning Amazon Machine Image (DLAMI). After installation, we run predictions using the AlphaFold model with CASP14 samples on the instance. I also show how to create an Amazon Elastic Block Store (Amazon EBS) snapshot for future use to reduce the effort of setting it up again and save costs.

To run AlphaFold without setting up a new EC2 instance from scratch, go to the last section of this post. You can create a new EC2 instance with the provided public EBS snapshots in a short time.

The total cost for the AWS resources used in this post is less than $100 if you finish all the steps and shut down all resources within 24 hours. If you create an EBS snapshot and store it inside your AWS account, the EBS snapshot storage cost is about $150 per month.
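As a rough sanity check on these figures, the arithmetic can be sketched as follows. Every rate below is an illustrative assumption and is not quoted from this post; look up current pricing for your Region. Snapshots are billed on stored data, so the full 3072 GiB is an upper bound.

```shell
# Illustrative rates only (assumptions, not taken from this post).
INSTANCE_RATE=3.06     # USD per hour, p3.2xlarge on-demand (assumed)
VOLUME_RATE=0.10       # USD per GB-month, EBS volume (assumed)
SNAPSHOT_RATE=0.05     # USD per GB-month, EBS snapshot (assumed)
VOLUME_GIB=$((200 + 3072))   # system volume + data volume sizes from this post

estimate_costs() {
  awk -v ir="$INSTANCE_RATE" -v vr="$VOLUME_RATE" \
      -v sr="$SNAPSHOT_RATE" -v gib="$VOLUME_GIB" 'BEGIN {
    instance = ir * 24           # 24 hours of instance time
    volumes  = gib * vr / 30     # one day of a 30-day month
    printf "one_day_total=%.2f\n", instance + volumes
    printf "snapshot_per_month=%.2f\n", 3072 * sr
  }'
}

estimate_costs
```

With these assumed rates, the one-day total stays under $100 and the monthly snapshot figure lands near $150, which is consistent with the estimates above.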

Launch an EC2 instance with a DLAMI

In this section, I demonstrate how to set up an EC2 instance using a DLAMI from AWS. It already has lots of AlphaFold’s dependencies preinstalled and saves time on the setup.

On the Amazon EC2 console, choose your preferred AWS Region.

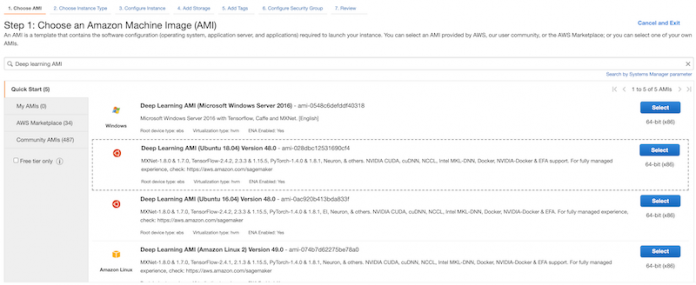

Launch a new EC2 instance with DLAMI by searching Deep Learning AMI. I use DLAMI version 48.0 based on Ubuntu 18.04. This is the latest version at the time of this writing.

Select a p3.2xlarge instance with one GPU as the instance type. If you don’t have enough quota on a p3.2xlarge instance, you can increase the Amazon EC2 quota on your AWS account.

Configure the proper Amazon Virtual Private Cloud (Amazon VPC) setting based on your AWS environment requirements. If this is your first time configuring your Amazon VPC, consider using the default Amazon VPC and review Get started with Amazon VPC.

Set the system volume to 200 GiB, and add one new data volume of 3 TB (3072 GiB) in size.

Make sure that the security group settings allow you to access the EC2 instance with SSH, and the EC2 instance can reach the internet to install AlphaFold and other packages.

Launch the EC2 instance.

Wait for the EC2 instance to become ready and use SSH to access the Amazon EC2 terminal.

Optionally, if you have other required software for the new EC2 instance, install it now.

Install AlphaFold

You’re now ready to install AlphaFold.

After you use SSH to access the Amazon EC2 terminal, first update all packages:
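A minimal sketch of this step, assuming the Ubuntu-based DLAMI and its apt package manager:

```shell
# Refresh the package index, then upgrade the installed packages.
sudo apt update
sudo apt upgrade -y
```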

Mount the data volume to the folder /data. For more details, refer to Make an Amazon EBS volume available for use on Linux.

Use the lsblk command to view your available disk devices and their mount points (if applicable) to help you determine the correct device name to use:

Determine whether there is a file system on the volume. New volumes are raw block devices, and you must create a file system on them before you can mount and use them. The device is an empty volume.

Create a file system on the volume and mount the volume to the /data folder:
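The three volume steps above can be sketched as follows. The device name /dev/xvdf and the XFS file system type are assumptions for illustration; substitute the device that lsblk reports on your instance.

```shell
# List block devices; the new data volume shows no mount point.
lsblk

# Assumption: the data volume is /dev/xvdf. Use the name lsblk reports.
DEVICE=/dev/xvdf

# An output of just "data" means the device has no file system yet.
sudo file -s "$DEVICE"

# Create an XFS file system. This erases anything already on the device.
sudo mkfs -t xfs "$DEVICE"

# Mount the volume at /data and give the login user ownership.
sudo mkdir -p /data
sudo mount "$DEVICE" /data
sudo chown -R "$(id -un)":"$(id -gn)" /data
df -h /data
```

A mount made this way does not persist across reboots. The AWS guide referenced above describes adding an /etc/fstab entry if you need that.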

Install the AlphaFold dependencies and any other required tools:
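A possible install command is sketched below. The package list is an assumption: aria2 and rsync are used by the AlphaFold download scripts, and the rest are conveniences for the later steps.

```shell
# Assumed tool list; adjust to your needs.
sudo apt install -y aria2 rsync git wget tmux
```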

Create working folders, and clone the AlphaFold code from the GitHub repo:

You use the new volume exclusively, so the snapshot you create later has all the necessary data.
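One way to lay out the working folders is sketched here. The folder names /data/af_download_data and /data/input appear elsewhere in this post; /data/output/alphafold is an assumed name for the results folder.

```shell
# Keep everything on the data volume so the later snapshot captures it.
mkdir -p /data/af_download_data   # genetic databases and model parameters
mkdir -p /data/input              # .fasta files to predict
mkdir -p /data/output/alphafold   # prediction results (assumed folder name)

# Clone the AlphaFold source into /data/alphafold.
cd /data
git clone https://github.com/deepmind/alphafold.git
```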

Download the data using the provided scripts in the background. AlphaFold needs multiple genetic (sequence) databases and model parameters.

The whole download process could take over 10 hours, so wait for it to finish. You can use the following command to monitor the download and unzip process:
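A minimal sketch of starting and monitoring the download, assuming the repository was cloned to /data/alphafold. The log file path is a hypothetical choice; any writable location works.

```shell
# Start the download in the background so it survives an SSH disconnect.
nohup /data/alphafold/scripts/download_all_data.sh /data/af_download_data \
  > /data/download.log 2>&1 &

# Check progress: disk usage per database folder and the latest log lines.
du -sh /data/af_download_data/*
tail -n 20 /data/download.log
```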

When the download process is complete, you should have the following files in your /data/af_download_data folder:
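A quick check of the download folder can be sketched as a small helper. The function name is hypothetical, and the folder names are an assumption based on the layout described in the AlphaFold v2.0 README; later releases may differ.

```shell
# Report which expected database folders are missing under a download folder.
# Folder names are assumed from the AlphaFold v2.0 README.
check_download_dirs() {
  local base="$1" missing=0 name
  for name in bfd mgnify params pdb70 pdb_mmcif uniclust30 uniref90; do
    if [ ! -d "$base/$name" ]; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_download_dirs /data/af_download_data && echo "all folders present"` prints the confirmation only when every folder exists.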

Update /data/alphafold/docker/run_docker.py so that its configuration matches the local paths:

With the folders you’ve created, the configurations look like the following. If you set up a different folder structure in your EC2 instance, set it accordingly.
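The edit can also be scripted. The sketch below assumes that run_docker.py assigns DOWNLOAD_DIR and output_dir at the start of a line, as in AlphaFold v2.0; inspect your copy first, because later versions may configure these paths differently. The helper name is hypothetical.

```shell
# Point run_docker.py at the local folders, keeping a .bak copy of the original.
# Assumption: the script contains lines starting with "DOWNLOAD_DIR = " and
# "output_dir = ".
set_alphafold_paths() {
  local script="$1" download_dir="$2" output_dir="$3"
  sed -i.bak \
    -e "s|^DOWNLOAD_DIR = .*|DOWNLOAD_DIR = '$download_dir'|" \
    -e "s|^output_dir = .*|output_dir = '$output_dir'|" \
    "$script"
  # Print the resulting assignments for a visual check.
  grep -E '^(DOWNLOAD_DIR|output_dir) = ' "$script"
}
```

With the folder names used in this post, the call would be `set_alphafold_paths /data/alphafold/docker/run_docker.py /data/af_download_data /data/output/alphafold`, where the output folder name is an assumption.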

Confirm that the NVIDIA Container Toolkit is installed:
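One common check is to run nvidia-smi inside a throwaway container. The image name below is an assumption; a small base image is enough because the NVIDIA runtime makes nvidia-smi available inside the container.

```shell
# Expose all GPUs to a temporary container and print the GPU status table.
# Prefix with sudo if your user is not in the docker group.
docker run --rm --gpus all ubuntu nvidia-smi
```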

You should see similar output to the following screenshot.

Build the AlphaFold Docker image. Make sure that the local path is /data/alphafold because a .dockerignore file is under that folder.

You should see the new Docker image after the build is complete.
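A sketch of the build step, following the command in the AlphaFold README and assuming the repository lives in /data/alphafold:

```shell
# Build from the repository root so docker/Dockerfile and .dockerignore resolve.
cd /data/alphafold
docker build -f docker/Dockerfile -t alphafold .

# List the image once the build finishes.
docker images alphafold
```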

Use pip to install all Python dependencies required by AlphaFold:
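Assuming the repository path used in this post, the install might look like this:

```shell
# run_docker.py runs on the host, so its Python dependencies are installed there.
pip3 install -r /data/alphafold/docker/requirements.txt
```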

Go to the CASP14 target list and copy the sequence from the plaintext link for T1050.

Copy the content into a new T1050.fasta file and save it under the /data/input folder.

You can use this same process to create a few more .fasta files for testing under the /data/input folder.

Install CloudWatch monitoring for GPU (Optional)

Optionally, you can install Amazon CloudWatch monitoring for GPU. This requires an AWS Identity and Access Management (IAM) role.

Create an Amazon EC2 IAM role for CloudWatch and attach it to the EC2 instance.

Change the Region in gpumon.py if your instance is in another Region, and provide a new namespace like AlphaFold as the CloudWatch namespace:

Launch gpumon and start sending GPU metrics to CloudWatch:

Use AlphaFold for prediction

We’re now ready to run predictions with AlphaFold.

Use the following command to run a prediction of a protein sequence from /data/input/T1050.fasta:

Use the tail command to monitor the prediction progress:
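The run and the monitoring step can be sketched together. The log file path is a hypothetical choice, and the --max_template_date value is taken from the T1050 example in the AlphaFold README; adjust both to your case.

```shell
# Launch the prediction in the background and capture its output in a log.
cd /data/alphafold
nohup python3 docker/run_docker.py \
  --fasta_paths=/data/input/T1050.fasta \
  --max_template_date=2020-05-14 \
  > /data/T1050.log 2>&1 &

# Follow the log. Ctrl+C stops watching; the prediction keeps running.
tail -f /data/T1050.log
```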

The whole prediction takes a few hours to finish. When the prediction is complete, you should see the following in the output folder. In this case, <target_name> is T1050.

Change the owner of the output folder from root so you can copy its contents:

Use scp to copy the output from the prediction output folder to your local folder:
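A sketch of both steps follows. The output path, key file, and host address are placeholders, and the ubuntu login assumes the Ubuntu-based DLAMI.

```shell
# On the EC2 instance: the container writes results as root, so take ownership.
# Assumed output path; use the output folder you configured.
sudo chown -R "$(id -un)":"$(id -gn)" /data/output/alphafold

# On your local machine: copy the T1050 results down.
KEY_FILE="$HOME/.ssh/my-ec2-key.pem"   # hypothetical key path
EC2_HOST="203.0.113.10"                # placeholder address
scp -i "$KEY_FILE" -r "ubuntu@$EC2_HOST:/data/output/alphafold/T1050" "$HOME/Downloads/"
```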

Use this protein 3D viewer from NIH to view the predicted 3D structure from your result folder.

Select ranked_0.pdb, which contains the prediction with the highest confidence.

The following is a 3D view of the predicted structure for T1050 by AlphaFold.

Create a snapshot from the data volume

It takes time to install AlphaFold on an EC2 instance. However, the P3 instance and the EBS volume can become expensive if you keep them running all the time. You may want an EC2 instance ready quickly without rebuilding the environment every time you need it. An EBS snapshot helps you save both time and cost.

On the Amazon EC2 console, choose Volumes in the navigation pane under Elastic Block Store.

Filter by the EC2 instance ID. Two volumes should be listed.

Select the data volume with 3072 GiB in size.

On the Actions menu, choose Create snapshot.

The snapshot takes a few hours to finish.

When the snapshot is complete, choose Snapshots, and your new snapshot should be in the list.
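If you prefer the AWS CLI to the console, the same steps can be sketched as follows. The volume ID is a placeholder, and the commands assume the CLI is configured with credentials and the correct Region.

```shell
# Placeholder: the ID of the 3072 GiB data volume.
VOLUME_ID="vol-0123456789abcdef0"

# Start the snapshot and capture its ID.
SNAPSHOT_ID="$(aws ec2 create-snapshot \
  --volume-id "$VOLUME_ID" \
  --description "AlphaFold data volume" \
  --query SnapshotId --output text)"

# Check state and progress; repeat until the state is "completed".
aws ec2 describe-snapshots --snapshot-ids "$SNAPSHOT_ID" \
  --query 'Snapshots[0].[State,Progress]' --output text
```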

You can safely shut down your EC2 instance now. At this point, all the data in the data volume is safely stored in the snapshot for future use.

Recreate the EC2 instance with a snapshot

To recreate a new EC2 instance with AlphaFold, the first steps are similar to what you did earlier when creating an EC2 instance from scratch. But instead of creating the data volume from scratch, you attach a new volume restored from the Amazon EBS snapshot.

Open the Amazon EC2 console in the AWS Region of your choice, and launch a new EC2 instance with DLAMI by searching Deep Learning AMI.

Choose the DLAMI based on Ubuntu 18.04.

Select p3.2xlarge with one GPU as the instance type. If you don’t have enough quota on a p3.2xlarge instance, you can increase the Amazon EC2 quota on your AWS account.

Configure the proper Amazon VPC setting based on your AWS environment requirements. If this is your first time configuring your Amazon VPC, consider using the default Amazon VPC and review Get started with Amazon VPC.

Set the system volume to 200 GiB, but don’t add a new data volume.

Make sure that the security group settings allow you to access the EC2 instance and the EC2 instance can reach the internet to install Python and Docker packages.

Launch the EC2 instance.

Take note of which Availability Zone the instance is in and the instance ID, because you use them in a later step.

On the Amazon EC2 console, choose Snapshots.

Select the snapshot you created earlier or use the public snapshot provided.

On the Actions menu, choose Create Volume.

For this post, we provide public snapshots in Regions us-east-1, us-west-2, and eu-west-1. You can search public snapshots by snapshot ID: snap-0d736c6e22d0110d0 in us-east-1, snap-080e5bbdfe190ee7e in us-west-2, and snap-08d06a7c7c3295567 in eu-west-1.

Set up the new data volume settings accordingly. Make sure that the Availability Zone is the same as the newly created EC2 instance. Otherwise, you can’t mount the volume to the new EC2 instance.

Choose Create Volume to create the new data volume.

Choose Volumes, and you should see the newly created data volume. Its state should be available.

Select the volume, and on the Actions menu, choose Attach volume.

Choose the newly created EC2 instance and attach the volume.
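The console steps for creating and attaching the volume have AWS CLI equivalents, sketched here. The Availability Zone and instance ID are placeholders, and the gp3 volume type and /dev/sdf device name are assumptions.

```shell
REGION="us-east-1"
SNAPSHOT_ID="snap-0d736c6e22d0110d0"   # public snapshot for us-east-1 from this post
AVAILABILITY_ZONE="us-east-1a"         # placeholder: must match the instance's zone
INSTANCE_ID="i-0123456789abcdef0"      # placeholder

# Create the data volume from the snapshot and capture its ID.
VOLUME_ID="$(aws ec2 create-volume --region "$REGION" \
  --snapshot-id "$SNAPSHOT_ID" \
  --availability-zone "$AVAILABILITY_ZONE" \
  --volume-type gp3 \
  --query VolumeId --output text)"

# Wait until the volume is available, then attach it to the instance.
aws ec2 wait volume-available --region "$REGION" --volume-ids "$VOLUME_ID"
aws ec2 attach-volume --region "$REGION" \
  --volume-id "$VOLUME_ID" --instance-id "$INSTANCE_ID" --device /dev/sdf
```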

Use SSH to access the Amazon EC2 terminal and run lsblk. You should see that the new data volume is unmounted. In this case, it is /dev/xvdf.

Determine whether there is a file system on the volume. The data volume created from the snapshot has an XFS file system on it already.

Mount the new data volume to the /data folder:
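A sketch of the check and mount, assuming the volume appears as /dev/xvdf as in this post. Do not create a file system here, because that would erase the restored data.

```shell
# Confirm the device name and that it is not yet mounted.
lsblk
DEVICE=/dev/xvdf

# This should report an existing XFS file system.
sudo file -s "$DEVICE"

# Mount the restored volume at /data.
sudo mkdir -p /data
sudo mount "$DEVICE" /data
df -h /data
```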

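Update all packages on the system and install the dependencies. You must also rebuild the AlphaFold Docker image: by default, Docker stores images on the system volume, so the image isn’t part of the data volume snapshot.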
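These steps might look like the following on the new instance. The package list is an assumption, and the paths assume the restored volume is mounted at /data with the AlphaFold repository in /data/alphafold.

```shell
# Refresh packages and install the assumed tool list.
sudo apt update
sudo apt install -y aria2 rsync git wget tmux

# Rebuild the Docker image and reinstall the host-side Python dependencies.
cd /data/alphafold
docker build -f docker/Dockerfile -t alphafold .
pip3 install -r docker/requirements.txt
```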

Confirm that the NVIDIA Container Toolkit is installed:

You should see output like the following screenshot.

Use the following command to run a prediction of a protein sequence from /data/input/T1024.fasta:

I use a different protein sequence because the snapshot contains the result from T1050 already. If you want to run the prediction for T1050 again, first delete or rename the existing T1050 result folder before running the new prediction.

Use the tail command to monitor the prediction progress:

The whole prediction takes a few hours to finish.

Clean up

When you finish all your predictions and copy your results locally, clean up the AWS resources to save cost. You can safely shut down the EC2 instance and delete the EBS data volume if it wasn’t deleted when the EC2 instance was shut down. When you need to use AlphaFold again, you can follow the same process to spin up a new EC2 instance and run new predictions in a matter of minutes. You don’t incur any additional cost other than the EBS snapshot storage cost.

Conclusion

With Amazon EC2 with Nvidia GPU and the Deep Learning AMI, you can install the new AlphaFold implementation from DeepMind and run predictions over CASP14 samples. Because you back up the data on the data volumes to point-in-time snapshots, you avoid paying for EC2 instances and EBS volumes when you don’t need them. Creating an EBS volume based on the previous snapshot greatly shortens the time needed to recreate the EC2 instance with AlphaFold. Therefore, you can start running your predictions in a short amount of time.

About the Author

Qi Wang is a Sr. Solutions Architect on the Global Healthcare and Life Science team at AWS. He has over 10 years of experience working in the healthcare and life science vertical in business innovation and digital transformation. At AWS, he works closely with life science customers transforming drug discovery, clinical trials, and drug commercialization.
