Amazon DynamoDB transactions help developers perform all-or-nothing operations by grouping multiple actions across one or more tables. These transactions provide ACID (atomicity, consistency, isolation, durability) compliance for multi-item operations in applications.

The following scenarios are common use cases for DynamoDB transactions:

Financial transactions where an all-or-nothing operation is required. For example, transactions can be used in a peer-to-peer payment application to guarantee that money is subtracted from the sender’s account and posted to the receiver’s account atomically.

Order processing on an ecommerce website. By using transactions, developers can make sure that when an order is successfully placed, inventory and catalog information, the buyer’s order history, and shipment details have all been updated.

Developers find the ability to make conditional, atomic changes to multiple items in DynamoDB very powerful. For example, transactions can be used in multiplayer games with in-game item purchases, or in games with online matchmaking. This tutorial walks you through modeling game player data for a Battle Royale game where a player can only be added to a game if all of the following operations are successful:

Confirm that there are not already 50 players in the game (each game can have a maximum of 50 players).

Confirm that the user is not already in the game.

Create a new UserGameMapping entity to add the user to the game.

Increment the people attribute on the Game entity to track how many players are in the game.
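The four steps above can be sketched as a single TransactWriteItems payload. The following is a minimal sketch, not the tutorial's exact code: the table name `battle-royale`, the `PK`/`SK` key layout, and the helper name `build_join_game_transaction` are assumptions made for illustration. Only the `people` attribute and the 50-player limit come from the text.

```python
def build_join_game_transaction(game_id, username,
                                table_name="battle-royale", max_players=50):
    """Build the TransactItems list that adds a user to a game atomically.

    The key layout (PK/SK) and table name are hypothetical.
    """
    # Assumed key of the Game entity that carries the `people` counter.
    game_key = {
        "PK": {"S": f"GAME#{game_id}"},
        "SK": {"S": f"#METADATA#{game_id}"},
    }
    # Assumed shape of the new UserGameMapping entity.
    mapping_item = {
        "PK": {"S": f"GAME#{game_id}"},
        "SK": {"S": f"USER#{username}"},
        "game_id": {"S": game_id},
        "username": {"S": username},
    }
    return [
        {
            "Put": {
                "TableName": table_name,
                "Item": mapping_item,
                # Fails if a mapping with this key exists (user already joined).
                "ConditionExpression": "attribute_not_exists(SK)",
            }
        },
        {
            "Update": {
                "TableName": table_name,
                "Key": game_key,
                # Increment the player count on the Game entity.
                "UpdateExpression": "SET people = people + :one",
                # Fails if the game is already full.
                "ConditionExpression": "people < :limit",
                "ExpressionAttributeValues": {
                    ":one": {"N": "1"},
                    ":limit": {"N": str(max_players)},
                },
            }
        },
    ]
```

With boto3, the returned list could be passed as `TransactItems` to `boto3.client("dynamodb").transact_write_items(...)`. If either condition fails, neither the Put nor the Update is applied.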

Designing high-throughput and performant transactional applications requires skill and time. Developers often need to design application control flows to optimize transaction resilience, and plan database IOPS requirements to support their transactional workloads. This can be challenging, especially with distributed applications and database loads that vary over time. Amazon DynamoDB’s built-in transactions architecture, monitoring capabilities, and features like on-demand capacity mode, adaptive capacity, and auto scaling make transactions on DynamoDB tables performant and resilient.

This post provides best practices guidance to help developers and architects build performant and cost-optimized transactional applications using Amazon DynamoDB.

DynamoDB transactions and two-phase commit protocol

DynamoDB offers three transactional APIs: TransactWriteItems, TransactGetItems and ExecuteTransaction (with PartiQL).

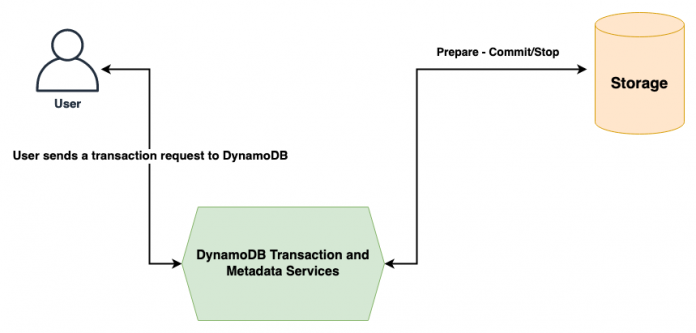

DynamoDB uses a two-phase commit protocol to process a transaction request. After a transaction request is received, DynamoDB’s internal transaction services coordinate the processing of the transaction with the underlying DynamoDB tables (see figure below). The transaction services identify the backend storage nodes that need to participate in the transaction, and execute the transaction over two phases: prepare and commit/stop.

In this two-phase commit protocol, each item that is written or read through TransactWriteItems, TransactGetItems, or ExecuteTransaction incurs two underlying write or read operations, one for each phase. In the prepare phase, the transaction is prepared and submitted to the storage nodes. After a successful prepare phase, the items enter the commit phase, in which they are either committed or the transaction stops.

Capacity planning for transactional APIs

Because DynamoDB transactional APIs perform two underlying reads or writes for every item in the transaction, it is important to plan for these additional reads and writes when you provision capacity for your tables. Doing so makes your transactions more resilient.

DynamoDB’s built-in transactions architecture optimizes how capacity is utilized in DynamoDB tables, and in turn optimizes the cost of your transactions. Before beginning the execution of the two-phase commit protocol, the DynamoDB service queries metadata of the underlying tables for availability of capacity units. The transaction request is admitted only when enough capacity units are available to prepare all items.

In the following example, a transaction is being requested for items a, b, and c across tables A, B, and C, which consume capacity in provisioned mode. Because Table C doesn’t have sufficient capacity for the prepare phase, the transaction stops without consuming any capacity units in any of the three tables.

This additional check prevents capacity from being consumed in the underlying tables in the prepare phase, if one or more tables in the transaction do not have enough capacity available to run the transaction successfully.
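The all-or-nothing nature of this check can be illustrated with a toy model. This is only a sketch of the behavior described above, not DynamoDB's internal implementation: the function names and the dictionary-based representation of capacity are assumptions.

```python
def admit_transaction(required, available):
    """Return True only if every table can cover its prepare-phase cost.

    `required` and `available` map table names to capacity units.
    """
    return all(available.get(table, 0) >= need
               for table, need in required.items())


def run_prepare(required, available):
    """Return the remaining capacity per table after the prepare phase.

    If any table lacks capacity, the transaction is not admitted and no
    capacity is consumed in any table.
    """
    if not admit_transaction(required, available):
        return dict(available)  # unchanged: nothing was consumed
    return {table: cap - required.get(table, 0)
            for table, cap in available.items()}
```

For instance, if Table C has fewer units available than item c needs, `run_prepare` returns the capacity of Tables A and B untouched as well.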

To ensure success of both the prepare and commit phases of the transaction, the capacity for underlying tables must be appropriately planned for provisioned mode tables.

It’s not always easy to predict the size of your items in applications, and your item sizes may vary significantly. Your application and database load patterns may also vary over time. DynamoDB makes this easy by using auto scaling to automatically adjust your provisioned capacity up and down to meet your actual traffic demand. Additionally, if your transactional workloads are highly spiky and unpredictable, you should consider using on-demand mode to accommodate your changing traffic patterns.

For a workload with predictable traffic and item sizes, you can calculate your read capacity unit (RCU) and write capacity unit (WCU) needs based on the size of your items and the number of actions. Let’s look at an example TransactWriteItems request that does the following:

Writes a new item (item a from the preceding example) that is 3 KB in size

Updates an item (item b) that is 2 KB in size

Deletes an item (item c) that is 10 KB in size

In DynamoDB, each WCU allows for one write action per second for an item up to 1 KB in size. Because the new item a is 3 KB, a write requires 3 WCUs for each phase, and therefore 6 WCUs in total on Table A. Similarly, the Update action on item b requires 2 WCUs for each phase and 4 WCUs in total on Table B. Finally, the Delete action on item c requires 10 WCUs for each phase and 20 WCUs in total on Table C. Calculating the correct number of WCUs for all the tables involved in a transaction is an important first step towards building a resilient transactional application.
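The arithmetic above can be captured in a small helper. This is a minimal sketch; the function name `transactional_wcus` is hypothetical, and it assumes item sizes are rounded up to the next 1 KB.

```python
import math


def transactional_wcus(item_size_kb):
    """WCUs consumed by one transactional write of an item of the given size.

    One WCU covers a write of up to 1 KB, and a transactional write is
    charged once for the prepare phase and once for the commit phase.
    """
    per_phase = max(1, math.ceil(item_size_kb))
    return 2 * per_phase
```

Applied to the example, `transactional_wcus(3)`, `transactional_wcus(2)`, and `transactional_wcus(10)` give the 6, 4, and 20 WCUs worked out for Tables A, B, and C.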

Using this capacity calculation, along with the rate at which the transaction is run by your application (TPS—transactions per second), you can calculate the number of WCUs and RCUs needed for the transaction. You can then determine the total capacity needs at the table level by summing up the capacity needs across all other transactions driven by your application. You must also plan for the additional capacity units that will be consumed by idempotent operations through SDK retries and TransactionInProgressException errors.
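A rough way to roll these numbers up to the table level is sketched below. The input format, the function name `table_wcu_requirements`, and the `retry_overhead` factor are all assumptions for illustration. In particular, the right retry allowance depends on your workload and should come from your own metrics.

```python
import math
from collections import defaultdict


def table_wcu_requirements(transactions, retry_overhead=0.0):
    """Estimate the WCUs needed per table for a set of transaction types.

    Each transaction is a dict with:
      "tps":    how many times per second the transaction runs
      "writes": a list of (table_name, item_size_kb) pairs
    `retry_overhead` is a hypothetical fractional allowance for retries
    (for example, 0.25 adds 25%).
    """
    totals = defaultdict(float)
    for txn in transactions:
        for table, size_kb in txn["writes"]:
            # Two phases per item, 1 WCU per 1 KB rounded up.
            wcus = 2 * max(1, math.ceil(size_kb))
            totals[table] += wcus * txn["tps"]
    # Round before taking the ceiling to avoid floating-point noise.
    return {table: math.ceil(round(total * (1 + retry_overhead), 6))
            for table, total in totals.items()}
```

Running the example transaction at 100 TPS with no retry allowance would require 600 WCUs on Table A, 400 on Table B, and 2,000 on Table C.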

A supplemental way to estimate the TPS load is by monitoring and projecting the capacity usage and throttling metrics via Amazon CloudWatch. For more information, see DynamoDB Metrics and Dimensions.

Avoid transaction conflicts

A transaction conflict can occur during concurrent requests on an item in any of the following three scenarios:

A PutItem, UpdateItem, or DeleteItem request for an item conflicts with an ongoing TransactWriteItems request that includes the same item.

An item within a TransactWriteItems request is part of another ongoing TransactWriteItems request.

An item within a TransactGetItems request is part of an ongoing TransactWriteItems, BatchWriteItem, PutItem, UpdateItem, or DeleteItem request.

For any of these scenarios, it’s always a good idea to test your application logic and monitor the CloudWatch TransactionConflict metric to see if there are any conflicting transactions. If the metric statistic is high, consider the following:

You can decrease the number of conflicts by simplifying your transactions and reducing the number of items per transaction. For example, if you’re trying to update or write five items within a transaction, look for ways to lower the number of items to two or three. You should always try to minimize the number of items in a single atomic action.

For the first two scenarios, consider serializing your TransactWriteItems requests to DynamoDB for the same item. To make the serialized writes more efficient, consider modularizing your application, so no two modules are writing or updating the same items. For example, each module can be in charge of accessing one shard of your data, and within a module the actions are serialized, but different modules can run transactions in parallel. Each shard can represent a segment of your data that is stored on your DynamoDB table. Let’s say Module 1 handles primary keys that start with a letter between A–C, Module 2 handles primary keys that start with a letter between D–F, and so on.

Encountering the third scenario is rare, because reading and writing the same items simultaneously is rare in practice. In such cases, consider using GetItem or BatchGetItem instead of TransactGetItems.
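The module-per-shard idea for the first two scenarios can be sketched as follows. This is an illustrative sketch under stated assumptions: keys start with a letter A–Z, each module owns three consecutive letters, and a per-module lock stands in for whatever serialization mechanism your application uses. The names `module_for_key` and `run_serialized` are hypothetical.

```python
import threading

SHARD_WIDTH = 3  # letters per module: A-C -> 1, D-F -> 2, ...


def module_for_key(primary_key):
    """Map a primary key to the module that owns its shard."""
    first = primary_key[0].upper()
    if not "A" <= first <= "Z":
        raise ValueError(f"unsupported key prefix: {primary_key!r}")
    return (ord(first) - ord("A")) // SHARD_WIDTH + 1


# One lock per module (A-Z in groups of three gives modules 1 through 9).
_module_locks = {module: threading.Lock() for module in range(1, 10)}


def run_serialized(primary_key, transaction_fn):
    """Run transaction_fn while holding the lock of the owning module.

    Transactions in the same module run one at a time, while different
    modules can run in parallel.
    """
    with _module_locks[module_for_key(primary_key)]:
        return transaction_fn()
```

Note that a lock like this only serializes work inside one process, and a transaction that touches items in more than one shard would need additional coordination.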

Further considerations

The following are additional considerations regarding transactions:

Review Best Practices for Transactions.

Consolidate attributes into a single item whenever possible. If a set of attributes is often updated across multiple items as part of a single transaction, consider grouping the attributes into a single item to reduce the scope of the transaction.

We recommend watching the following AWS talk, which provides guidance around developing modern applications that require transactions, and how DynamoDB enables developers to maintain correctness of their data at scale by adding atomicity and isolation guarantees for multi-item conditional updates.

High-throughput transaction considerations on items: The adaptive capacity of DynamoDB can isolate a single item into its own partition. This way, any single item in a DynamoDB table can support read and write throughputs up to the partition maximum values. With transactions, because the throughput consumed is doubled for the two phases, a single item would require two times as much throughput.

Consider using BatchGetItem, BatchWriteItem, and BatchExecuteStatement where ACID characteristics aren’t needed.
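To make the high-throughput consideration above concrete, the following sketch estimates how many transactional writes per second a single item can sustain. It assumes the documented per-partition maximum of 1,000 WCUs. Treat that figure, and the function name, as assumptions to verify against current DynamoDB limits.

```python
import math

PARTITION_MAX_WCU = 1000  # assumed per-partition write limit


def max_transactional_writes_per_second(item_size_kb,
                                        partition_max_wcu=PARTITION_MAX_WCU):
    """Upper bound on transactional writes per second to one item.

    Each transactional write is charged for two phases, so it costs
    twice the WCUs of a standard write of the same size.
    """
    wcus_per_write = 2 * max(1, math.ceil(item_size_kb))
    return partition_max_wcu // wcus_per_write
```

Under these assumptions, a 1 KB item tops out at 500 transactional writes per second, half the rate of non-transactional writes to the same item.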

Conclusion

The purpose of this post is to help developers and application designers better evaluate their transactional workloads on DynamoDB and optimize for performance, resiliency, and cost. We looked at the DynamoDB transactions architecture, discussed scenarios to solidify our understanding of how transactions work, and talked about DynamoDB’s built-in features and best practices to help you build scalable, performant, and cost-effective transactional applications with DynamoDB.

Learn more about DynamoDB transactions in the DynamoDB Developer Guide. Additionally, we recommend working with your AWS account team and Solutions Architects to further optimize your data design and DynamoDB performance.

About the Authors

Badi Parvaneh is a Solutions Architect with Amazon Web Services. Badi works with AWS’s strategic customers that are on the forefront of innovation, and helps them implement highly-scalable and resilient cloud architectures. Outside of work, he enjoys playing video games, cooking, and watching TV shows with his wife.

Anuj Dewangan is a Principal Solutions Architect with Amazon Web Services. Anuj works with AWS’s largest strategic customers in architecting, deploying and operating web scale distributed systems and applications on AWS.
