Babelfish for Aurora PostgreSQL includes support for the SQL Server wire-protocol and T-SQL, which is the query language used in Microsoft SQL Server. This means that developers can use Babelfish to run their existing SQL Server applications on Amazon Aurora PostgreSQL-Compatible Edition without having to switch database drivers or completely rewrite their queries.

When migrating from SQL Server to Babelfish for Aurora PostgreSQL, you have different options for data migration, such as the following:

Using AWS Database Migration Service (AWS DMS). For more information, refer to AWS Database Migration Service now supports Babelfish for Aurora PostgreSQL as a target.

Using SQL Server Integration Services (SSIS). For more information, refer to Migrate from SQL Server to Aurora PostgreSQL using SSIS and Babelfish.

Generating T-SQL insert commands (generating a script via SQL Server Management Studio).

Using the Bulk Copy Program (bcp) utility.

In this post, we show you the details as well as the limitations of using bcp for data migrations.

Solution overview

If you’re a SQL Server developer or administrator, you’ve probably heard of the bcp utility, which is a command-line tool that allows you to import or export large amounts of data into or from a SQL Server database.

With bcp, you can quickly transfer data from one SQL Server table to Babelfish for Aurora PostgreSQL. In addition, bcp is efficient, because the insert mechanism is optimized using a batch of rows instead of row by row. It also allows you to have minimal logging operations.

For more information about Babelfish for Aurora PostgreSQL, see Using Babelfish for Aurora PostgreSQL.

Prerequisites

Make sure you have the following prerequisites:

SQL Server Management Studio (SSMS) or the bcp utility must be installed

SQL Server Native Client must be installed on the machine

bcp limitations

Note the following limitations of this data migration option:

bcp doesn’t migrate the table schemas

bcp works with SQL Server databases and Babelfish with some limitations; certain options aren’t supported (-T, -G, -K, -R, -V, and -h).

It’s supported from Babelfish v2.1 (Aurora PostgreSQL 14.3) and above.

There is a known issue when the field/row terminator is contained in the data. The default field terminator is t (tab character) and default row terminator is n (new line character). If the field terminator you are using is contained in your data, for example Major,Mary and you are using , as the field terminator, you will have problems (invalid character value error message) importing this record.

For example, imagine a table with the following columns:

ID

Name

Date

1

Major,Mary

1981-01-01

2

John

1980-02-02

If we use bcp with ,as the terminator field (-t), we will have the following output:

Note that on the first line, we have an additional , which was contained in our data. The data import will fail for this record. Alternatively, you can use the -t | parameter or any other custom terminator character when exporting and importing data. When using the field terminator -t |, we should have output similar to the following:

The same applies to the row terminator. See Specify Field and Row Terminators for more details on how to use customized field/row terminators

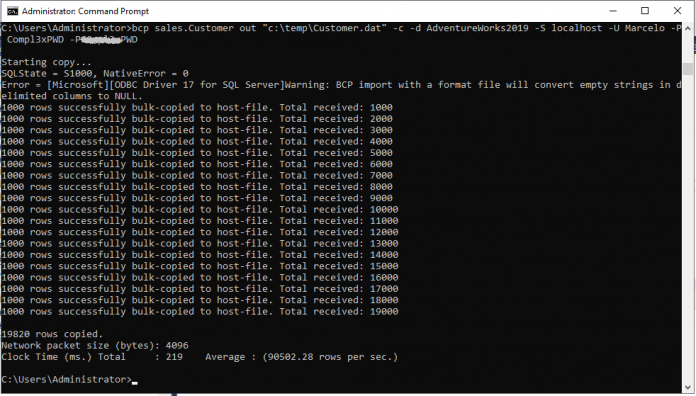

Export data from SQL Server with bcp

You can export your data from a SQL Server database with bcp using the following syntax:

You must specify the name of the source table, the name of the output file, and the database access credentials. bcp exports all table data to the specified file.

If you have hundreds of tables, you can use the following T-SQL command to generate a bcp command to export data:

Import data to Babelfish for Aurora PostgreSQL with bcp

You can import your data to Babelfish with bcp using the following syntax:

You must specify the name of the destination table, the name of the input file, and the database access credentials. bcp imports all data from the file into the specified table.

Note that when importing data, it’s very important to use the same parameter that was used to export the data in character data type (-c) or using the native format, for example binary (-n) that was used when exporting the data. Using the -c parameter is required because this is a cross-platform data migration.

If you have hundreds of tables, you can use the following T-SQL command to generate a bcp command to import data:

bcp parameters

bcp has many useful parameters, such as the ability to specify the format of the data and character encoding, among others.

The following table summarizes the commonly used parameters supported when using the bcp utility to import data into Babelfish for Aurora PostgreSQL.

Parameter

Description

Default

-b

Specifies the number of rows per batch. Each batch is imported and logged as a separate transaction

All rows

-c

Performs the operation formatting all data as text.

-d

The database name to connect to.

-e

The error file to store any row that bcp was unable to transfer.

This option will generate an error file that contains any lines that could not be imported and the corresponding error message.

-m

The maximum errors allowed before bcp cancels the operation.

By default, bcp will consider a maximum of 10 errors before the operation is canceled. To cancel the operation on the first error, use the parameter -m 1.

10

-P

Specifies the login password to connect to the Babelfish for Aurora PostgreSQL or SQL Server.

-S

Specifies the SQL Server or Babelfish for Aurora PostgreSQL endpoint.

-t

Informs the field terminator. See Specify Field and Row Terminators (SQL Server) for additional details.

t

-U

The login user to connect to Babelfish for Aurora PostgreSQL or SQL Server.

bcp error handling

In this section, we discuss some of the challenges you may face when exporting and importing data using bcp and how to handle them.

Usually, we don’t have issues when performing bcp exports, because we are just creating a text file. However, when importing this data, depending on the quality of your data, you may face some problems. The most common problems are:

Primary key violations

Bad delimiter field

Invalid data

It’s important to know that bcp, by default, considers the limit of 10 errors before canceling the operation. You can change this behavior by passing the parameter -m 1 if you want bcp to cancel the operation on the first error. You can also use the parameter -e to generate a file with detailed errors.

The following screenshot shows an example of an error when importing data.

The example shows that we had the errors and the import process continued despite the errors. The error message shows we had some invalid data, but we don’t know exactly which record is causing this issue. If you want the process to stop on the first error, you can pass the parameter m 1.

Let’s repeat the command, adding the -e parameter to generate an error file. Note that the output of the bcp command is similar, but now we have the error file SalesOrderDetail.err.

When we open the error file, we now have the details about the error and which record is causing the problem.

The first error shows that line 8 contains an invalid character. We can also see on the next line the record that bcp is trying to import. We can see that the third column is split, which could indicate an error with the terminator field. When we open the file generated by bcp and examine line 8, we can see the problematic record and row.

The next error in our error file is on line 41,079: the error message says there is an invalid date format. The error file also shows the record that has the error, and we can see that there is a typo in the field with date 213-06-30.

The third error is on line 88,898. The error message says Invalid character, and we can see the invalid character in the date field: 2014-0i-29.

These are examples of how to work with error handling with bcp. The -e parameter saves you a lot of time when trying to find the error and the record with the error.

Best practices

When using bcp to migrate data from a SQL Server database, you should consider the following best practices:

Consider creating foreign keys after data migration. This will ensure the data integrity. If you want to import data without dropping foreign keys, make sure that you load the tables in the right order, otherwise you will receive an error message informing you that you have a foreign key violation and the operation will fail.

When importing large tables and large amounts of data, consider dropping the indexes, loading the data with bcp, and then recreating the index. Dropping indexes before loading the table speeds up your data load process; then you can recreate your indexes if needed.

Because we’re are migrating SQL Server to Aurora PostgreSQL, the -c parameter is required to perform to export and import data using a character data type.

Be aware if your termination field and row are contained into your data.

Use the -e parameter to generate an error file when importing a large number of records.

Summary

In this post, we explored an alternative option for data migration, going beyond the traditional approaches of using AWS DMS, SSIS, or customized scripts. Along the way, we discovered the various parameters supported by bcp utility, allowing us to fine-tune our data migration processes. We also explored the techniques for effectively handling errors, which will help you investigate potential issues that may arise when dealing with problematic or erroneous data.

Concluding our learning journey, we delved into best practices, ensuring a smooth and successful data migration to Babelfish using the bcp utility.

For more information about this feature and Babelfish for Aurora PostgreSQL, see Using Babelfish for Aurora PostgreSQL.

If you have any questions, comments, or suggestions, leave a comment.

About the Authors

Marcelo Fernandes is a Sr. Database Architect with the AWS Professional Services Team. He has been supporting and enabling customers on their journey to migrate and modernize their database solutions from on-premises data centers to AWS.

David Carvalho Queiroz is a Senior Database Consultant on the Professional Services team at Amazon Web Services, with over 10 years of experience in databases. He works by designing migration solutions and strategies to educate and enable global companies on their journey to AWS.

Eduardo Valentim is a Senior Database Consultant within the AWS Professional Services team, bringing over 17 years of experience in the field of databases. Throughout his career, Eduardo has been dedicated to assisting customers with database-related challenges, including migration, design, and performance optimization. By staying up-to-date with the latest best practices in performance and security, Eduardo ensures that his clients’ environments are optimized for success.

Read MoreAWS Database Blog