In today’s digital world, businesses face increasing competition and tight time constraints. Whether it’s a SaaS company looking to release a new feature by week’s end or a retail employee managing inventory and looking to resolve stock issues by end of day, everyone needs actionable insights at their fingertips. Real-time insights matter most in scenarios like these, where decisions have to be made immediately.

When provided on time, these insights can empower businesses to assess and act on data quickly. For example, an employee in the retail shop can get insights in real-time to order stock for a product that’s likely to be sold out a week earlier than expected. These types of insights are made possible by real-time analytics.

Real-time analytics empowers organizations to view and analyze data as it flows into their systems. This can help them improve their decision-making, respond to the latest trends, and enhance the operational efficiency of their processes.

What is real-time analytics?

What is streaming analytics?

How does real-time analytics work?

Batch processing vs. real-time processing

Real-time analytics architecture

Characteristics of a real-time analytics system

Benefits of real-time analytics

Real-time analytics use cases in various industries

Use Striim to power your real-time analytics infrastructure

What is real-time analytics?

Real-time analytics refers to pulling real-time data from different sources, then analyzing and transforming it into a format that target users can understand. It allows users to draw conclusions or get insights immediately after data enters a company’s system. They can see this data on a dashboard, in a report, or through any other medium.

There are two types of real-time analytics:

On-demand real-time analytics

This type of analytics waits for a user to send a request, such as a SQL query, before delivering the analytics outcome. The requester can be a data analyst or anyone else in the organization who wants the latest information about some business activity. For example, a marketing manager can use on-demand real-time analytics to determine how users on social media are reacting to an online ad. On-demand real-time analytics relies on fresh data (e.g., in the data warehouse), but queries are run on an as-needed basis.
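
As a rough illustration of the on-demand pattern, the sketch below runs a SQL query only when someone asks for it. The in-memory SQLite table, the `ad_reactions` schema, and the sample rows are all assumptions made for this example; they stand in for a warehouse that is kept fresh by some ingestion process.

```python
import sqlite3


def build_demo_store() -> sqlite3.Connection:
    """Create an in-memory table standing in for a continuously loaded warehouse."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE ad_reactions (ad_id TEXT, reaction TEXT, ts INTEGER)")
    rows = [  # hypothetical sample data
        ("ad-1", "like", 100),
        ("ad-1", "share", 130),
        ("ad-1", "like", 170),
        ("ad-2", "like", 175),
        ("ad-1", "hide", 190),
    ]
    conn.executemany("INSERT INTO ad_reactions VALUES (?, ?, ?)", rows)
    return conn


def reactions_since(conn: sqlite3.Connection, ad_id: str, since_ts: int) -> dict:
    """Count reactions per type for one ad; runs only when a user requests it."""
    cur = conn.execute(
        "SELECT reaction, COUNT(*) FROM ad_reactions "
        "WHERE ad_id = ? AND ts >= ? GROUP BY reaction ORDER BY reaction",
        (ad_id, since_ts),
    )
    return dict(cur.fetchall())


conn = build_demo_store()
print(reactions_since(conn, "ad-1", 120))  # {'hide': 1, 'like': 1, 'share': 1}
```

The result is only as current as the data in the table at the moment the query runs, which is the defining trait of the on-demand style.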

Continuous real-time analytics

Unlike on-demand analytics, continuous real-time analytics isn’t reactive. It delivers analytics continuously in real-time without requiring any request. This type of analytics can be shown on a dashboard via charts or other visuals, so users can view what’s happening at the moment. For example, in cybersecurity, continuous real-time analytics can be useful for analyzing streams of network security data coming into an organization’s network for threat detection.
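
By contrast, a continuous computation can be sketched as a generator that emits an updated result after every event, with no request involved. The event shape (`source_ip`, `event_type`) and the `failed_login` label are hypothetical and chosen only to echo the cybersecurity example.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, Tuple


def continuous_counts(events: Iterable[Tuple[str, str]]) -> Iterator[Dict[str, int]]:
    """Yield an updated failed-login count per source after every incoming event."""
    counts: Counter = Counter()
    for source_ip, event_type in events:
        if event_type == "failed_login":
            counts[source_ip] += 1
        # A snapshot is pushed on every event; a dashboard would redraw from it.
        yield dict(counts)


stream = [
    ("10.0.0.5", "failed_login"),
    ("10.0.0.9", "page_view"),
    ("10.0.0.5", "failed_login"),
]
for snapshot in continuous_counts(stream):
    print(snapshot)
```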

What is streaming analytics?

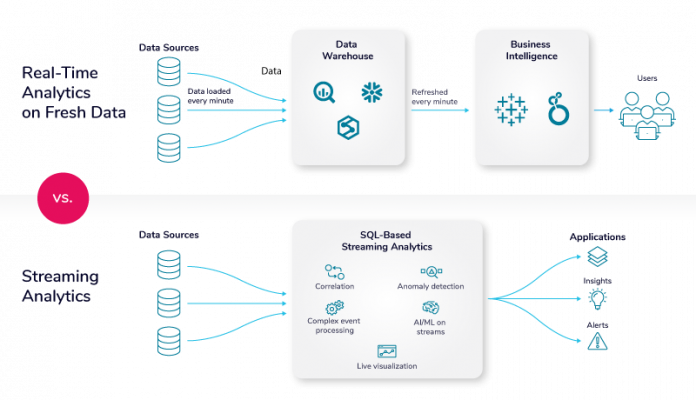

Real-time analytics two ways: on fresh data at rest vs. data in motion. Real-time analytics on fresh data can be either on-demand or continuous (e.g., dashboards refreshed every minute). Streaming analytics, or continuous analytics on data in motion, enables correlation, anomaly detection, complex event processing, and machine learning on data streams.

Streaming analytics is analytics on data in motion, as opposed to analytics on data at rest in a database or data warehouse. Streams of data are continuously queried with Streaming SQL, enabling correlation, anomaly detection, complex event processing, artificial intelligence/machine learning, and live visualization. Streaming analytics is especially useful for fraud detection, log analysis, and sensor data processing.
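
To make "analytics on data in motion" more concrete, here is a minimal sketch of one of the techniques mentioned, anomaly detection, written as a sliding-window check in plain Python rather than Streaming SQL. The window size, the three-standard-deviation threshold, and the sample readings are assumptions for illustration, not a recommended configuration.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(values, window=5, threshold=3.0):
    """Yield (index, value) for points far from the mean of the previous `window` points."""
    recent = deque(maxlen=window)
    for i, v in enumerate(values):
        if len(recent) == window:
            mu = mean(recent)
            sigma = pstdev(recent)
            # Flag the point if it deviates by more than `threshold` standard deviations.
            if sigma > 0 and abs(v - mu) > threshold * sigma:
                yield i, v
        recent.append(v)  # the window slides forward one event at a time


readings = [20.0, 21.0, 19.0, 20.0, 21.0, 20.0, 55.0, 20.0, 19.0]
print(list(detect_anomalies(readings)))  # [(6, 55.0)]
```

Each value is examined once as it arrives and only the last few values are held in memory, which is what distinguishes this from querying data at rest. One simplification to be aware of: a flagged value still enters the window, so it inflates the baseline for the next few events.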

How does real-time analytics work?

Real-time analytics can be broken down into the following steps:

Collecting useful real-time data

For every organization, there’s some type of real-time data that holds value. Some examples of this include:

Enterprise resource planning (ERP) data: Analytical or transactional data

Website application data: Top source for traffic, bounce rate, or number of daily visitors

Customer relationship management (CRM) data: General interest, number of purchases, or customer’s personal details

Support system data: Customer’s ticket type or satisfaction level

Based on your operations, look into the type of data that’s crucial for your business and find a way to collect it. For instance, if you work in a manufacturing plant and are looking to use real-time analytics to find faults in your machinery, you can collect data from machine sensors and analyze it in real-time to see if there are any signs of failure. For collection of data, you need a real-time ingestion tool that can collect data from your sources in a reliable way.

Combining data from various sources

In most cases, you need data from more than one source to complete an analysis. For instance, if you are looking to analyze customer data, you will need data from operational systems of sales, marketing, and customer support to look into ways to improve customer experience. To do this, you have to combine data from all your sources. For this purpose, you can use ETL (extract, transform, and load) tools or build a custom data pipeline of your own and send the aggregated data to a target system like a data warehouse.
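
The combining step can be sketched as a merge keyed on a shared identifier. The source names, the `customer_id` key, and the sample records below are hypothetical; a real pipeline would also have to handle mismatched keys, schemas, and late-arriving records.

```python
from collections import defaultdict


def combine_by_customer(*sources):
    """Merge records from several systems into one profile per customer_id."""
    profiles = defaultdict(dict)
    for source_name, records in sources:
        for record in records:
            # Keep each system's fields under its own name to avoid collisions.
            fields = {k: v for k, v in record.items() if k != "customer_id"}
            profiles[record["customer_id"]][source_name] = fields
    return dict(profiles)


sales = [{"customer_id": 1, "orders": 3}, {"customer_id": 2, "orders": 1}]
support = [{"customer_id": 1, "open_tickets": 2}]

combined = combine_by_customer(("sales", sales), ("support", support))
print(combined[1])  # {'sales': {'orders': 3}, 'support': {'open_tickets': 2}}
```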

Extracting insights by analyzing data

The final step is to extract actionable insights. In this step, you use statistical methods and data visualizations to analyze data by identifying underlying patterns or correlations in the data. For instance, you can use clustering to divide the data points into different groups based on their features and common properties. A model might also be used for making predictions based on the available data. These insights are then shown to users in an easy-to-understand format.
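
As one example of the clustering mentioned above, the following is a bare-bones k-means on one-dimensional values. The starting centroids and the order values are made up for illustration, and a production analysis would more likely rely on an established library than on a hand-written loop.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Basic k-means on 1-D values, starting from the given centroids."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to its cluster mean (unchanged if empty).
        centroids = [
            sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)
        ]
    return centroids, clusters


order_values = [12, 15, 14, 90, 95, 88]
centroids, clusters = kmeans_1d(order_values, centroids=[0, 100])
print(clusters)  # [[12, 15, 14], [90, 95, 88]]
```

Here the two groups could be read as low-value and high-value orders, which is the kind of pattern a dashboard might then surface to users.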

Batch processing vs. real-time processing

One of the things that makes real-time analytics possible is how the data is processed. To understand it, you need to know the difference between batch processing and real-time processing.

Batch processing stores a large amount of data for some time and then analyzes it as required. It is used when you have to analyze a large aggregate or when you can wait hours or days for results. An example is a payroll system that processes salary data at the end of the month.

Real-time processing analyzes data as soon as it enters the system, with minimal delay. It is required in scenarios where you need insights quickly. Examples include systems like flight control or ATMs, where events must be generated, processed, and analyzed quickly.
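
The difference can be seen in a small sketch that computes the same average both ways. The class and function names are illustrative only; the point is when the result becomes available, not the arithmetic.

```python
def batch_average(stored_values):
    """Batch style: wait until all values are stored, then compute once."""
    return sum(stored_values) / len(stored_values)


class RunningAverage:
    """Real-time style: update the result as each value arrives."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def update(self, value):
        self.count += 1
        # Incremental mean: shift the old mean toward the new value.
        self.mean += (value - self.mean) / self.count
        return self.mean


amounts = [10.0, 20.0, 30.0, 40.0]

running = RunningAverage()
live_results = [running.update(a) for a in amounts]
print(live_results)            # [10.0, 15.0, 20.0, 25.0]
print(batch_average(amounts))  # 25.0
```

Both approaches end at the same number, but the running version has a usable answer after every event, while the batch version has nothing to show until the whole set has been collected.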

Real-time analytics architecture

Implementing real-time analytics requires a different architecture and approach than traditional batch-based data analytics, as well as a different set of tools and technologies that can support the streaming and processing of large volumes of data. In real-time analytics, raw source data is rarely what you want delivered to your target systems. More often than not, you need a data pipeline that starts with data integration and then lets you do several things to the data in flight before it is delivered to the target.

Data integration

The data integration layer in the real-time analytics architecture provides capabilities for continuously ingesting data of varying formats and velocity from either external sources or existing cloud storage. The data integration layer is the backbone of any analytics architecture, as downstream reporting and analytics systems rely on consistent and accessible data. Therefore, the integration channel must be able to handle large volumes of data from a variety of sources with minimal impact on source systems and sub-second latency. This layer leverages data integration tools such as Striim to connect to various data sources to ingest streaming data and deliver it to various targets.

For example, the Striim platform enables the continuous movement of unstructured, semi-structured, and structured data – extracting it from a wide variety of sources such as databases, log files, sensors, and message queues, and delivering it in real-time to targets such as Big Data, Cloud, Transactional Databases, Files, and Messaging Systems for immediate processing and usage.

Event/stream processing

The event processing layer provides tools and components for processing data as it is ingested. Data coming into the system in real-time are often referred to as streams or events because each data point describes something that has occurred in a given period. Often, these events need to be cleaned, enriched, processed and transformed in flight before they can be stored or used to provide value. Therefore another essential component for real-time data analytics is the infrastructure to handle real-time event processing.

Event/stream processing with Striim

Some data integration platforms like Striim perform in-flight data processing such as filtering, transformations, aggregations, masking, and enrichment of streaming data before delivering with sub-second latency to diverse environments in the cloud or on-premises. In addition, Striim can also deliver data for advanced processing to stream processing platforms like Apache Spark and Apache Flink, which can manage and process large data volumes with advanced business logic processing.
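
The sketch below shows, in generic Python, what filtering, masking, and transforming events in flight can look like. It is not Striim's API or syntax; the field names (`status`, `card_number`, `amount_cents`) and the masking rule are assumptions made for this example.

```python
def process_in_flight(events):
    """Filter, mask, and transform events one at a time before delivery."""
    for event in events:
        # Filtering: drop events the target does not need.
        if event["status"] != "completed":
            continue
        card = event["card_number"]
        yield {
            "order_id": event["order_id"],
            # Masking: keep only the last four digits of the card number.
            "card_number": "*" * (len(card) - 4) + card[-4:],
            # Transformation: convert cents to a dollar amount.
            "amount_usd": round(event["amount_cents"] / 100, 2),
        }


incoming = [
    {"order_id": 1, "status": "completed", "card_number": "4111111111111111", "amount_cents": 1999},
    {"order_id": 2, "status": "pending", "card_number": "4000000000000002", "amount_cents": 500},
]
for delivered in process_in_flight(incoming):
    print(delivered)
```

Because the function is a generator, each event is handled as it passes through and nothing is stored along the way, so sensitive values are masked before they ever reach the target.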

Data storage

A real-time analytics infrastructure also requires a scalable, durable, and highly available storage service to support the massive volumes of data required for various analytics use cases. Data warehouses and data lakes are the most commonly used storage architectures for big data. Companies looking for a mature, structured data solution that focuses on business intelligence and data analytics use cases may consider a data warehouse. However, data lakes are suitable for enterprises looking for a flexible, low-cost big-data solution to power machine learning and data science workloads on unstructured data.

All data required for real-time analytics is rarely contained within the incoming stream. Applications deployed to devices or sensors are generally built to be very lightweight and intentionally designed to produce minimal network traffic. Therefore the data store should be able to support data aggregations and joins for different data sources and must be able to cater to a variety of data formats.
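
Since a lightweight event rarely carries all of its context, a join against stored reference data is a common way to fill in the rest. In this sketch the `device_table` dictionary stands in for a lookup in the data store, and the device IDs and attributes are hypothetical.

```python
def enrich(events, device_table):
    """Join each lightweight sensor event with stored reference data for its device."""
    for event in events:
        # Unknown devices pass through unchanged rather than being dropped.
        reference = device_table.get(event["device_id"], {})
        yield {**event, **reference}


device_table = {"d1": {"site": "plant-a", "model": "x200"}}
sensor_events = [
    {"device_id": "d1", "temp_c": 71.5},
    {"device_id": "d9", "temp_c": 20.0},
]
for enriched in enrich(sensor_events, device_table):
    print(enriched)
```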

Presentation/consumption

The core of the real-time analytics solution is a presentation layer to showcase the processed data in the data pipeline. When designing a real-time architecture, this step must be at the forefront because it’s ultimately the end goal of the real-time analytics pipeline.

The data consumption layer provides analytics across the business for all users through purpose-built analytics tools that support analysis methodologies such as SQL, batch analytics, reporting dashboards, and machine learning. This layer is essentially responsible for:

Providing visualization of large volumes of data in real time

Directly querying data from big stores, like data lakes and warehouses

Turning data into actionable insights using machine learning models that help businesses deliver quality brand experiences

Characteristics of a real-time analytics system

To truly support real-time analytics, a system must possess certain characteristics.

Low latency

Latency in a real-time analytics system is the time between an event arriving in the system and that event being processed. It includes both computer processing latency and network latency. A real-time system needs to operate with low latency to analyze data quickly.
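
One simple way to reason about latency is to record two timestamps per event and look at percentiles rather than the average, because a few slow events can hide behind a good mean. The field names (`arrived_ms`, `processed_ms`) and the nearest-rank percentile method are assumptions chosen for this sketch.

```python
import math


def latencies_ms(events):
    """Latency per event: time processed minus time arrived, in milliseconds."""
    return [e["processed_ms"] - e["arrived_ms"] for e in events]


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


events = [
    {"arrived_ms": 0, "processed_ms": 12},
    {"arrived_ms": 5, "processed_ms": 20},
    {"arrived_ms": 10, "processed_ms": 250},
    {"arrived_ms": 15, "processed_ms": 30},
]
observed = latencies_ms(events)
print(percentile(observed, 50), percentile(observed, 95))  # 15 240
```

In this made-up sample the median looks healthy while the 95th percentile exposes one event that took far longer, which is the kind of outlier a latency target usually has to account for.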

High availability

Availability refers to the ability of a real-time analytics system to perform a function when it’s needed. High availability is important because without it:

The system cannot instantly process data

The system will find it hard to store data or use a buffer for later processing, particularly with high-velocity streams

Horizontal scalability

Horizontal scalability refers to the capability of a real-time analytics system to increase capacity or enhance performance by adding servers to an existing pool. It’s important when you can’t control the rate of data ingress: instead of slowing the incoming data, you scale out the system that receives it.
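
A common building block for scaling out is to partition events by key so that each server in the pool handles a share of the stream. The sketch below uses simple hash-modulo assignment; the key names and worker counts are illustrative, and this is only one of several partitioning schemes.

```python
import hashlib


def assign_worker(key: str, worker_count: int) -> int:
    """Map a key to a worker index using a hash that is stable across runs."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % worker_count


def partition(events, worker_count):
    """Split events into one bucket per worker, keeping each key on a single worker."""
    buckets = [[] for _ in range(worker_count)]
    for event in events:
        buckets[assign_worker(event["key"], worker_count)].append(event)
    return buckets


events = [{"key": f"sensor-{i}", "value": i} for i in range(8)]
for worker_id, bucket in enumerate(partition(events, worker_count=3)):
    print(worker_id, [e["key"] for e in bucket])
```

Adding a server means raising `worker_count`. With plain modulo assignment most keys move to a different worker when that happens, which is why larger systems often use consistent hashing to limit the reshuffling.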

Benefits of real-time analytics

The ability to analyze data in real-time can offer plenty of benefits.

Optimize customer experience

According to the IBM/NRF report, after the pandemic, expectations about online shopping have changed considerably. Consumers want hybrid services that can help them move seamlessly from one channel to another, such as buy online, pickup in-store (BOPIS), or order online and get it delivered to their doorstep. As per the IBM/NRF report, one in four consumers wants to shop the hybrid way.

However, to make these transactions possible, retailers need access to real-time analytics to move data from their supply chain to the relevant departments. Organizations today need to monitor their rapidly changing contexts 24/7. They need to process and analyze cross-channel data immediately.

Another area where real-time analytics can add value is real-time personalization. Here, real-time analytics can instantly deliver personalized content to each user based on their actions on a brand channel like a website, mobile app, SMS, or email.

Detect fraud

Regardless of their size, many organizations have to deal with fraud. Real-time analytics can help organizations identify theft, fraud, and other types of malicious activities. These malicious online activities have seen a surge over the past few years. According to the Federal Trade Commission (FTC), American consumers lost almost $6 billion to fraud last year, a major increase from $3.4 billion in 2020.

For example, companies can use real-time analytics by combining it with machine learning and Markov modeling. Markov modeling is used to identify unusual patterns and make predictions on the likelihood of a transaction being fraudulent. If a transaction shows signs of unusual behavior, then it can be flagged as fraud.
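
A very small first-order Markov model can illustrate the idea: learn how often one action follows another in past legitimate sessions, then flag a new session if it contains a transition that was rarely or never seen. The action names, the training sessions, and the 0.1 threshold are all hypothetical, and real fraud systems combine many more signals than this.

```python
from collections import defaultdict


def fit_transitions(sequences):
    """Estimate P(next_state | state) from historical sequences."""
    counts = defaultdict(lambda: defaultdict(int))
    for seq in sequences:
        for current, nxt in zip(seq, seq[1:]):
            counts[current][nxt] += 1
    return {
        state: {nxt: n / sum(nexts.values()) for nxt, n in nexts.items()}
        for state, nexts in counts.items()
    }


def min_transition_probability(sequence, transitions):
    """Probability of the least likely step; unseen transitions count as 0."""
    return min(
        (transitions.get(cur, {}).get(nxt, 0.0) for cur, nxt in zip(sequence, sequence[1:])),
        default=1.0,  # a single-action session has no transitions to score
    )


def is_suspicious(sequence, transitions, threshold=0.1):
    """Flag a session whose rarest transition falls below the threshold."""
    return min_transition_probability(sequence, transitions) < threshold


history = [
    ["login", "browse", "purchase"],
    ["login", "browse", "browse", "purchase"],
    ["login", "purchase"],
]
model = fit_transitions(history)
print(is_suspicious(["login", "browse", "purchase"], model))           # False
print(is_suspicious(["login", "change_password", "purchase"], model))  # True
```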

Improve decision-making

Nearly 60% of all data businesses collect today has lost more than half of its value because it gets outdated. Real-time analytics can ensure you get to use the latest insights.

Using fresh data can help organizations see what they are doing well and build on it. It also lets them identify issues as they arise. For instance, if a piece of machinery isn’t working in a manufacturing plant, real-time analytics can collect this data from sensors and generate data-driven insights that help technicians resolve the problem.

Real-time analytics use cases in various industries

Real-time analytics can offer organizations a wide range of use cases.

Supply chain

Real-time analytics in the supply chain can be useful for integrating data in real time and making better decisions. Managers can view real-time dashboard data to oversee the supply chain and strategize demand and supply. Some of the other ways real-time analytics can help organizations include:

Feed live data to route planning algorithms. These algorithms can analyze real-time data to optimize routes and save time by going through traffic patterns on roadways, weather conditions, and fuel consumption.

Use aggregation of real-time data from fuel-level sensors to resolve fuel issues faced by drivers. These sensors can provide data on fuel level volumes, consumption, and dates of refills.

Collect real-time data from electronic logging devices (ELD) to study driver behavior and improve it.

Read more about how Blume Global uses real-time analytics to improve fleet management.

Finance

In certain industries, such as commodities trading, market fluctuations require organizations to be agile. Real-time analytics can help in these scenarios by intercepting changes and empowering organizations to adapt to rapid market fluctuations. Financial firms can use real-time analytics to analyze different types of financial data, such as trading data, market prices, and transactional data.

For example, consider the case of Inspyrus (now MineralTree). Inspyrus is a fintech company that was looking to improve accounts payable operations for businesses. The company wanted to ensure its users could get a real-time view of their transactional data from invoicing reports. However, its existing stack was unable to support real-time analytics: data updates took a whole hour, and some operations could even take weeks. There were also technical issues with moving data from an online transaction processing (OLTP) database to Snowflake in real time.

By using a real-time analytics tool, Striim, Inspyrus succeeded in ingesting real-time data from an OLTP database, which is loaded into Snowflake, where it’s transformed. A business intelligence tool is then used to visualize this data and create rich reports for users. As a result, Inspyrus users can view their reports in real time.

Use Striim to power your real-time analytics infrastructure

Your real-time analytics infrastructure can be only as good as the tool you use to support it. Striim is a unified real-time data integration and streaming platform that enables real-time analytics and offers a range of benefits in this regard. It can help you:

Collect data non-intrusively, securely, and reliably, from operational sources (databases, data warehouses, IoT, log files, applications, and message queues) in real time

Stream data to your cloud analytics platform of choice, including Google BigQuery, Microsoft Azure Synapse, Databricks Delta Lake, and Snowflake

Offer data freshness SLAs to build trust among business users

Perform in-flight data processing such as filtering, transformations, aggregations, masking, enrichment, and correlations of data streams with an in-memory streaming SQL engine

Set up custom alerts to respond to key business events in real time

Striim is a unified real-time data integration and streaming platform that connects clouds, data, and applications.

In terms of features and support, you will find it miles ahead of other tools on the market. For instance, Striim supports data enrichment – something that companies like Hevo Data and Fivetran lack. Similarly, you can build complex in-flight data transformations with Striim, whereas other tools like Qlik Replicate offer only a few predefined transformations. Sign up for a demo today.
