What the research is:

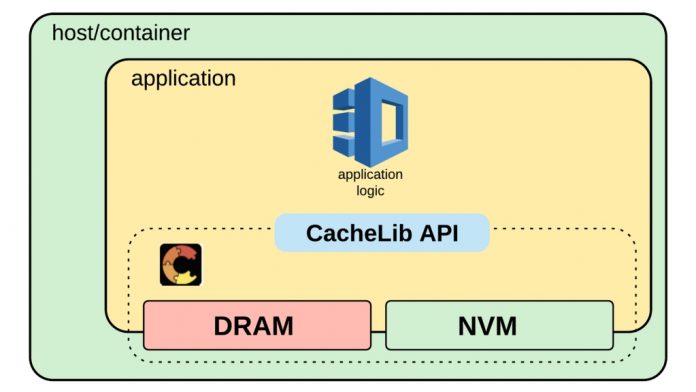

Kangaroo is a new flash cache that enables more efficient caching of tiny objects (objects that are ~100 bytes or less) and overcomes the challenges presented by existing flash cache designs. Since Kangaroo is implemented within CacheLib, Facebook’s open source caching engine, developers can use Kangaroo through CacheLib’s API to build their own customized cache services.

Our work on Kangaroo was honored with a Best Paper award at the 2021 Symposium on Operating Systems Principles (SOSP) conference.

CacheLib’s API allows developers to build and customize concurrent caches.

Optimized for tiny objects, Kangaroo minimizes dynamic random-access memory (DRAM) usage and the number of flash writes. It also introduces a new cache eviction policy that reduces cache misses with minimal DRAM overhead, further reducing load on back-end storage systems.

Kangaroo’s CacheLib implementation also allowed our researchers to quickly evaluate the impact of Kangaroo’s design on real-world production workloads. We evaluated Kangaroo using traces from production social graph caching workloads at Facebook and Twitter. Our experiments show that Kangaroo reduces misses by 29 percent under realistic system constraints (DRAM and write rate constraints) compared with prior, state-of-the-art flash cache designs. These results are corroborated by a test deployment of Kangaroo in a shadow production setup.

Miss ratio for all three systems over a seven-day Facebook trace. Kangaroo reduces cache misses by 29 percent versus set-associative (SA) caches and by 56 percent versus log-structured (LS) caches.

How it works:

Current flash caches fall into two main categories: set-associative caches and log-structured caches. Set-associative caches write many more bytes than necessary because they have to write a 4 KB flash page — the minimum write granularity — for every object. With tiny objects that are roughly 100 bytes or less in size, this leads to significant write amplification (i.e., set-associative caches write ~40x more bytes than strictly necessary).
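The ~40x figure follows directly from the page size and the object size. As a minimal sketch of that arithmetic, assuming a 4 KB page means 4,096 bytes and using a hypothetical helper named `write_amplification`:

```python
PAGE_BYTES = 4096  # assumption: a 4 KB flash page, the minimum write granularity


def write_amplification(object_bytes: int, page_bytes: int = PAGE_BYTES) -> float:
    """Bytes written to flash per byte of object data when every insert rewrites one page."""
    if object_bytes <= 0:
        raise ValueError("object_bytes must be positive")
    return page_bytes / object_bytes


print(round(write_amplification(100)))  # 41, i.e. roughly 40x for a 100-byte object
```

The smaller the object, the worse the ratio: the page cost is fixed, so halving the object size doubles the amplification.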

Log-structured caches have a large DRAM overhead because they have to keep an index entry for every on-flash object. Thus, neither set-associative nor log-structured flash caches are well designed for tiny object flash caches.

Kangaroo combines log-structured and set-associative caches to reduce both DRAM and flash-write overheads. Kangaroo has two main parts: KLog, a small log-structured flash cache, and KSet, a large set-associative flash cache.

Lookups first check the DRAM cache (1). If the object is not in the DRAM cache, requests check KLog’s index (2a) and then read the object from flash if the object was found in the index (2b). Requests finally check the per-set Bloom filter in KSet (3a) and read the set if the object could be in KSet (3b).

For inserts, Kangaroo first inserts objects into the DRAM cache (1). Objects evicted from the DRAM cache are either dropped by a pre-flash admission policy (2a) or added to KLog’s DRAM index (2b) and appended to KLog’s flash log (2c). Objects evicted from KLog are either dropped by another admission policy (3a) or inserted into KSet (3b).
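The numbered steps can be traced in a toy, in-memory model. This is only a sketch and not CacheLib’s implementation: the class and method names are hypothetical, flash is simulated with Python dictionaries, exact sets stand in for Bloom filters (so there are no false positives), both admission policies admit everything, and KSet never evicts.

```python
import zlib
from collections import OrderedDict, deque


class ToyKangaroo:
    """Toy model of the lookup and insert paths (all names are illustrative)."""

    def __init__(self, dram_capacity=2, log_capacity=4, num_sets=8):
        self.dram = OrderedDict()        # DRAM cache kept in LRU order
        self.dram_capacity = dram_capacity
        self.klog_index = {}             # DRAM index; the value stands in for the on-flash log entry
        self.klog_order = deque()        # FIFO order of entries in the flash log
        self.log_capacity = log_capacity
        self.num_sets = num_sets
        self.kset = [dict() for _ in range(num_sets)]    # one dict per on-flash set
        self.filters = [set() for _ in range(num_sets)]  # exact sets standing in for Bloom filters

    def set_id(self, key):
        return zlib.crc32(key.encode()) % self.num_sets

    def admit_to_flash(self, key):       # assumption: admit everything
        return True

    def admit_to_kset(self, key):        # assumption: admit everything
        return True

    def lookup(self, key):
        if key in self.dram:                     # (1) DRAM cache
            self.dram.move_to_end(key)
            return self.dram[key]
        if key in self.klog_index:               # (2a) KLog index
            return self.klog_index[key]          # (2b) read from the flash log
        sid = self.set_id(key)
        if key in self.filters[sid]:             # (3a) per-set filter
            return self.kset[sid].get(key)       # (3b) read the set
        return None

    def insert(self, key, value):
        self.dram[key] = value                   # (1) insert into DRAM
        self.dram.move_to_end(key)
        if len(self.dram) > self.dram_capacity:
            old_key, old_value = self.dram.popitem(last=False)
            self._insert_klog(old_key, old_value)

    def _insert_klog(self, key, value):
        if not self.admit_to_flash(key):         # (2a) dropped before flash
            return
        if key not in self.klog_index:
            self.klog_order.append(key)
        self.klog_index[key] = value             # (2b) index it, (2c) append to the log
        if len(self.klog_order) > self.log_capacity:
            victim = self.klog_order.popleft()
            victim_value = self.klog_index.pop(victim)
            if self.admit_to_kset(victim):       # (3a) second admission policy
                sid = self.set_id(victim)
                self.kset[sid][victim] = victim_value  # (3b) insert into KSet
                self.filters[sid].add(victim)


cache = ToyKangaroo(dram_capacity=1, log_capacity=1, num_sets=4)
for k in ("a", "b", "c"):
    cache.insert(k, k.upper())
print([cache.lookup(k) for k in ("a", "b", "c", "z")])  # ['A', 'B', 'C', None]
```

With capacities of one, the three inserts leave "c" in DRAM, "b" in KLog, and "a" in KSet, so the three lookups exercise each tier in turn.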

One of Kangaroo’s innovations is in how it moves objects from KLog to KSet. Kangaroo uses KLog to find multiple objects mapping to the same set in KSet, such as the pink and yellow objects shown above. Whenever an object is evicted from KLog, Kangaroo proactively evicts the other objects in KLog that map to the same set, so that all of them are written to KSet in a single set write, minimizing write amplification in KSet.
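A rough sketch of that batching step is below. It assumes KLog is a plain dictionary and uses a linear scan to find the siblings, which only stands in for whatever index structure locates them efficiently; `set_id` and `evict_with_siblings` are hypothetical names.

```python
import zlib


def set_id(key: str, num_sets: int) -> int:
    """Hypothetical mapping from a key to its set in KSet."""
    return zlib.crc32(key.encode()) % num_sets


def evict_with_siblings(klog: dict, victim: str, num_sets: int) -> dict:
    """Remove the victim and every other KLog object bound for the same set.

    Returns the batch that would be written to KSet in one set write.
    """
    target = set_id(victim, num_sets)
    batch = {k: v for k, v in klog.items() if set_id(k, num_sets) == target}
    for k in batch:
        del klog[k]
    return batch


klog = {f"key{i}": f"value{i}" for i in range(12)}
batch = evict_with_siblings(klog, "key0", num_sets=4)
print(sorted(batch))  # key0 plus any other keys that share its set
```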

Since writing a set always requires writing 4 KB, regardless of the number of objects inserted, writing two objects instead of just one cuts the write overhead per object in half. Thus, Kangaroo can amortize writes to KSet over multiple objects, decreasing the overall number of bytes written to flash. KLog accomplishes this goal with a small capacity (~5 percent of flash), so Kangaroo needs only a small amount of DRAM to index KLog’s entire capacity. Thus, Kangaroo addresses both the DRAM and flash-write overheads of caching tiny objects on flash.
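Both effects can be put into numbers with a small sketch. The 4,096-byte page, 100-byte objects, and 1 TB device are illustrative assumptions, the function names are hypothetical, and the entry count ignores any per-entry log format overhead.

```python
PAGE_BYTES = 4096  # assumption: one set occupies one 4 KB flash page


def amplification_per_object(objects_per_set_write: int,
                             object_bytes: int = 100,
                             page_bytes: int = PAGE_BYTES) -> float:
    """Flash bytes written per byte of object data when one set write carries several objects."""
    return page_bytes / (objects_per_set_write * object_bytes)


def log_index_entries(flash_bytes: int, log_percent: int, object_bytes: int = 100) -> int:
    """Number of objects, and so DRAM index entries, that a log using log_percent of flash holds."""
    return flash_bytes * log_percent // 100 // object_bytes


for n in (1, 2, 4):
    print(n, round(amplification_per_object(n), 1))  # 41.0, 20.5, 10.2

flash = 10**12  # hypothetical 1 TB device
print(log_index_entries(flash, 100) // log_index_entries(flash, 5))  # 20
```

Under these assumptions, a log covering 5 percent of flash needs one-twentieth of the index entries that a fully log-structured cache would need on the same device.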

On top of this basic design, Kangaroo introduces additional techniques to realize its hierarchical design and increase its effectiveness. In particular, since Kangaroo is a cache and not a key-value store, it can evict objects to minimize writes. Kangaroo exploits this opportunity by adding a threshold admission policy that evicts objects instead of admitting them to KSet if there are fewer than n objects to insert from KLog. This admission policy allows Kangaroo to guarantee that the write overhead for moving objects to KSet will be much lower than that of a set-associative cache. Kangaroo provides further optimizations to reduce DRAM overhead and reduce misses, as explained in our SOSP 2021 paper.
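The threshold rule itself is small enough to sketch. Here `batches` is a hypothetical mapping from a KSet set ID to the KLog keys waiting to move into that set, and `apply_threshold` is an illustrative name rather than anything from CacheLib.

```python
from typing import Dict, List, Tuple


def apply_threshold(batches: Dict[int, List[str]],
                    n: int) -> Tuple[Dict[int, List[str]], List[str]]:
    """Admit a batch to KSet only if it holds at least n objects; otherwise evict its objects."""
    admitted = {sid: keys for sid, keys in batches.items() if len(keys) >= n}
    dropped = [k for keys in batches.values() if len(keys) < n for k in keys]
    return admitted, dropped


batches = {0: ["a", "b"], 1: ["c"], 2: ["d", "e", "f"]}
admitted, dropped = apply_threshold(batches, n=2)
print(admitted)  # {0: ['a', 'b'], 2: ['d', 'e', 'f']}
print(dropped)   # ['c']
```

Because every admitted set write carries at least n objects, the page cost of that write is shared by at least n objects, which is where the guaranteed reduction in write overhead comes from.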

Together, these optimizations allow Kangaroo to overcome the limitations of log-structured caches and set-associative caches, creating a flash cache that delivers on the goal of efficient caching for tiny objects.

Why it matters:

Caching plays an important role in large-scale systems by allowing for quicker access to data and lowering the load on databases and other back-end systems. For large caches, it is far more efficient to use flash than to use DRAM. However, flash caches fall short when it comes to caching tiny objects. Flash caches require either too much DRAM, which loses the efficiency benefits of flash, or too many writes, which wears out the flash device too quickly. In either case, flash caching fails to live up to its potential as an efficient, large cache for tiny objects, ultimately resulting in smaller caches and more load on back-end systems.

Many social media and IoT services have large numbers of tiny objects, each a few hundred bytes or less. For example, edges in Facebook’s social graph, which are needed to connect friends, posts, and images, among other content, average under 100 bytes. Caching these objects efficiently on flash allows them to be quickly retrieved at scale and minimizes the load on back-end storage services. Kangaroo enables large-scale flash caching of tiny objects by having low DRAM and write overheads, leading to a lower miss ratio in large flash caches.

Read the full paper:

Kangaroo: Caching billions of tiny objects on flash

Get it on GitHub:

Acknowledgements:

The authors would like to thank Sara McAllister for leading the project and members of the Parallel Data Lab at Carnegie Mellon University for their input in guiding the research and collaboration with Facebook.

The post Kangaroo: A new flash cache optimized for tiny objects appeared first on Facebook Engineering.
