Instagram has introduced Immortal Objects – PEP-683 – to Python. Now, objects can bypass reference count checks and live throughout the entire execution of the runtime, unlocking exciting avenues for true parallelism.

At Meta, we use Python (Django) for our frontend server within Instagram. To handle parallelism, we rely on a multi-process architecture along with asyncio for per-process concurrency. However, our scale – both in terms of business logic and the volume of handled requests – can cause an increase in memory pressure, leading to efficiency bottlenecks.

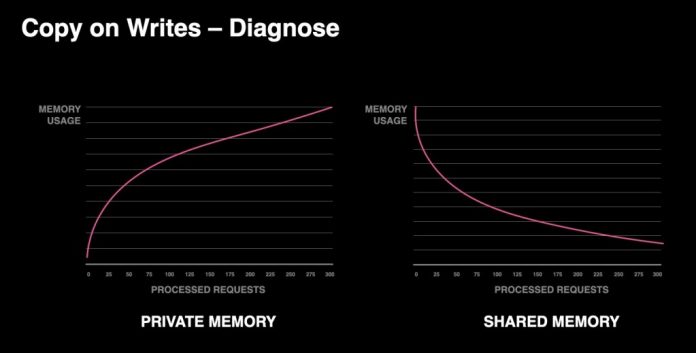

To mitigate this effect, we rely on a pre-fork web server architecture to cache as many objects as possible and have each separate process use them as read-only structures through shared memory. While this greatly helps, upon closer inspection we saw that our processes’ private memory usage grew over time while our shared memory decreased.
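As a rough illustration of the pre-fork pattern described above, the sketch below builds a cache in the parent process, forks, and has the child read from the inherited cache. It assumes a Unix-like system where `os.fork` is available. The helper name `read_in_child` and the sample cache are hypothetical and not part of Instagram’s server.

```python
import os


def read_in_child(cache, key):
    """Fork a child that reads cache[key] from memory inherited from the parent."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child process: the cache was built before the fork, so its pages
        # start out shared with the parent (copy-on-write).
        os.close(read_fd)
        try:
            # Even this plain read touches reference counts on the objects involved.
            os.write(write_fd, str(cache[key]).encode())
        finally:
            os._exit(0)
    # Parent process: collect what the child read, then reap the child.
    os.close(write_fd)
    with os.fdopen(read_fd, "rb") as reader:
        data = reader.read()
    os.waitpid(pid, 0)
    return data.decode()


if __name__ == "__main__":
    warm_cache = {"greeting": "hello"}  # hypothetical pre-fork cache
    print(read_in_child(warm_cache, "greeting"))
```

The child never copies the cache explicitly. It relies on the operating system sharing the parent’s pages until one side writes to them.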

By analyzing the Python heap, we found that while most of our Python objects were practically immutable and lived throughout the entire execution of the runtime, the runtime still modified them through reference counting and garbage collection (GC) operations, which mutate each object’s metadata on every read and GC cycle. Those writes trigger a copy-on-write in the server process.

The effect of copy-on-writes: private memory increases while shared memory from the main process decreases.
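The metadata mutation described above can be observed from Python itself. The following is a minimal sketch, assuming a standard CPython build. The helper `refcount_delta` and the sample dictionary are hypothetical names used only for illustration. It shows that merely holding more references to an object changes the reference count stored in that object, even though the object’s data is never modified.

```python
import sys


def refcount_delta(obj, extra_refs):
    """Return how much obj's reported refcount changes after adding extra_refs references."""
    before = sys.getrefcount(obj)
    holders = [obj] * extra_refs  # every list slot holds a new strong reference
    after = sys.getrefcount(obj)
    del holders  # drop the extra references again
    return after - before


if __name__ == "__main__":
    cached_config = {"feature": "enabled"}  # data we only ever read
    print(refcount_delta(cached_config, 1000))
```

On a standard CPython build this should report a change of 1000. Each of those updates is a write to the object’s header, which is the kind of write that forces a shared page to be copied.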

Immortal Objects for Python

This problem of state mutation of shared objects is at the heart of how the Python runtime works. Given that it relies on reference counting and cycle detection, the runtime requires modifying the core memory structure of the object, which is one of the reasons the language requires a global interpreter lock (GIL).

To get around this issue, we introduced Immortal Objects – PEP-683. This creates an immortal object (an object for which the core object state will never change) by marking a special value in the object’s reference count field. It allows the runtime to know when it can and can’t mutate both the reference count fields and GC header.
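One hedged way to see this from Python is to check whether an object’s reported reference count reacts to new references. The sketch below assumes CPython. The helper `is_effectively_immortal` is a hypothetical probe, not an official API. On versions that include PEP-683 (3.12 and later), `None` should behave as immortal, while an ordinary list should not.

```python
import sys


def is_effectively_immortal(obj, probes=1000):
    """Heuristic probe: True if adding references leaves the reported refcount unchanged."""
    before = sys.getrefcount(obj)
    holders = [obj] * probes  # would add `probes` references to a normal object
    after = sys.getrefcount(obj)
    del holders
    return after == before


if __name__ == "__main__":
    print("None:", is_effectively_immortal(None))
    print("list:", is_effectively_immortal([]))
```

This only observes behavior from the outside. It does not inspect the special value stored in the reference count field.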

A comparison of standard objects versus immortal objects. With standard objects, a user can guarantee that their code will not mutate an object’s type and/or data. Immortality adds an extra guarantee that the runtime will not modify the reference count or the GC header, if present, enabling full object immutability.

While implementing and releasing this within Instagram was fairly straightforward thanks to our relatively isolated environment, sharing it with the community was a long and arduous process. Most of this was due to the solution’s implementation, which had to deal with a combination of problems such as backwards compatibility, platform compatibility, and performance degradation.

First, the implementation had to guarantee that, even after changing the reference count implementation, applications wouldn’t crash if some objects suddenly had different refcount values.

Second, the change alters the core memory representation of a Python object and how its reference count is incremented. It needed to work across all the different platforms (Unix, Windows, Mac), compilers (GCC, Clang, and MSVC), architectures (32-bit and 64-bit), and hardware types (little- and big-endian).

Finally, the core implementation relies on adding explicit checks in the reference count increment and decrement routines, which are two of the hottest code paths in the entire execution of the runtime. This inevitably meant a performance degradation in the service. Fortunately, with the smart usage of register allocations, we managed to get this down to just a ~2 percent regression across every system, making it a reasonable regression for the benefits that it brings.
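The explicit checks mentioned above can be pictured with a toy model. The real routines live in CPython’s C code, so the following is only a sketch of the idea in Python. The names `ToyObject`, `incref`, `decref`, and the sentinel `IMMORTAL_REFCNT` are assumptions made for illustration and do not claim to match CPython’s actual values or layout.

```python
# Hypothetical sentinel for this sketch; CPython's real value may differ.
IMMORTAL_REFCNT = 2**32 - 1


class ToyObject:
    """Stand-in for an object header that carries a reference count."""

    def __init__(self, name, immortal=False):
        self.name = name
        self.refcnt = IMMORTAL_REFCNT if immortal else 1
        self.freed = False


def is_immortal(obj):
    return obj.refcnt == IMMORTAL_REFCNT


def incref(obj):
    if is_immortal(obj):  # the extra check on the hot path
        return            # immortal: the header is never written
    obj.refcnt += 1


def decref(obj):
    if is_immortal(obj):  # same check on the decrement path
        return
    obj.refcnt -= 1
    if obj.refcnt == 0:
        obj.freed = True  # a real runtime would deallocate here


if __name__ == "__main__":
    shared = ToyObject("cached_settings", immortal=True)
    incref(shared)
    decref(shared)
    decref(shared)
    print(shared.refcnt == IMMORTAL_REFCNT, shared.freed)
```

The branch in `incref` and `decref` is the cost being discussed: it runs on every reference count operation, including those on ordinary objects.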

How Immortal Objects have impacted Instagram

For Instagram, our initial focus was to achieve improvements in both memory and CPU efficiency of handling our requests by reducing copy on writes. Through immortal objects, we managed to greatly reduce private memory by increasing shared memory usage.

Increasing shared memory usage through immortal objects allows us to significantly reduce private memory by reducing the number of copy-on-writes.

However, the implications of these changes go far beyond Instagram and into the evolution of Python as a language. Until now, one of Python’s limitations has been that it couldn’t guarantee true immutability of objects on the heap. Both the GC and the reference count mechanism had unrestricted access to an object’s reference count field and GC header.

Contributing immortal objects into Python introduces true immutability guarantees for the first time ever. It helps objects bypass both reference counts and garbage collection checks. This means that we can now share immortal objects across threads without requiring the GIL to provide thread safety.

This is an important building block towards a multi-core Python runtime. There are two proposals that leverage immortal objects to achieve this in different ways:

PEP-684: A Per-Interpreter GIL

PEP-703: Making the Global Interpreter Lock Optional in CPython

Try Immortal Objects today

We invite the community to think of ways they can leverage immortalization in their applications as well as review the existing proposals to anticipate how to improve their applications for a multi-core environment. At Meta, we are excited about the direction in the language’s development and we are ready to keep contributing externally while we keep experimenting and evolving Instagram.

The post Introducing Immortal Objects for Python appeared first on Engineering at Meta.
