When you build applications from microservices, managing them and keeping them reliable becomes a growing challenge. You need your systems to detect a faulty condition in your applications and remediate it immediately and automatically. For that, the different layers of your infrastructure need to communicate and collaborate with each other. Health checks are an explicit way for one layer of a design to communicate with a higher-level orchestration or routing layer. For example, in Kubernetes, health checks can tell the cluster scheduler whether your container needs to be restarted, and in Google Cloud load balancing, health checks for a backend can tell the front end where to send traffic. We have introduced health checks to Cloud Run to give you more control over how we help you automate resilience in your services.

Cloud Run health checks enable you to:

Customize when your container is ready to receive requests

Customize when your container should be considered unhealthy

By default, when starting a new container, Cloud Run waits for your container to listen on a port before sending traffic to it. While this default behavior works for a majority of workloads, you might want to customize this behavior. In addition, Cloud Run now supports custom “liveness” health checks to restart a container if it is considered unhealthy.

Types of health checks

Cloud Run provides two types of custom health checks: startup checks and liveness checks. Let’s look at the difference between the two and how they can help you.

Startup

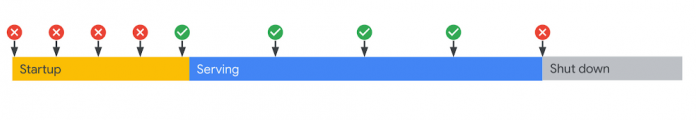

Users can configure startup probes to define when a container has started and is ready to start serving requests. If such a probe is configured, it disables liveness checks until it succeeds, making sure those probes don’t interfere with the application startup.

Imagine you have an application that takes a few minutes to load its initial configuration and start up. Even though the process is running, the application is not ready to serve until it has finished its startup operations. You will run into the same issue every time your application scales out to a new container instance. Your application should therefore not receive any traffic until the container itself is ready and healthy. With startup probes, you can make sure your containers are ready to serve requests by the time Cloud Run sends them.

Liveness

Cloud Run allows users to configure liveness check probes to know when to restart a container. For example, liveness probes could catch a deadlock, where an application is running, but unable to make progress. Restarting a container in such a state can help to make the application more available despite bugs.

Imagine a scenario where your application has deadlocked, causing it to hang indefinitely and stop serving requests. Ordinarily, Cloud Run assumes that because the process is running and the container is up, the application can serve traffic just fine. Cloud Run will continue to send traffic to this faulty app, resulting in failed requests. With Cloud Run liveness probes, you can now configure your container instance to be killed when the probe fails and started again when the next request arrives.
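To make the deadlock scenario concrete, here is a minimal sketch of one way an application might decide what to answer when a liveness probe arrives. It assumes a worker loop that calls `beat()` each time it makes progress, and a health endpoint that returns `status_code()`. The `Heartbeat` class, its method names and the threshold value are all hypothetical; they are not part of Cloud Run.

```python
import time


class Heartbeat:
    """Tracks the last time the application reported progress (illustrative only)."""

    def __init__(self, max_silence_s: float, clock=time.monotonic):
        self._max_silence_s = max_silence_s
        self._clock = clock  # injectable so the logic can be exercised without sleeping
        self._last_beat = clock()

    def beat(self) -> None:
        # Called by the worker loop whenever it completes a unit of work.
        self._last_beat = self._clock()

    def status_code(self) -> int:
        # 200 while progress is recent; 503 once the worker has been silent too long,
        # which is what a liveness probe would treat as a failure.
        stalled = self._clock() - self._last_beat > self._max_silence_s
        return 503 if stalled else 200
```

If the worker deadlocks, it stops calling `beat()`, the endpoint starts answering 503, and the liveness probe has something to act on even though the process itself is still running.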

Types of probes

Now that you have learned how each type of health check works, it’s time to configure the probing mechanism. Cloud Run now supports HTTP, TCP and gRPC probes for startup checks, and HTTP and gRPC probes for liveness checks. These probes are configured much like Kubernetes health checks, through a compatible API, which keeps your workloads portable across the two platforms.

TCP

With a TCP probe, Cloud Run tries to establish a TCP connection to a specified TCP port on the container. If the connection succeeds, the container is marked healthy and can start serving traffic; otherwise it is considered unhealthy. The TCP probe is supported only for startup checks, not for liveness checks. By default, when starting a new container, Cloud Run uses a TCP probe to wait for your container to listen on a port before sending traffic to it.
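The check itself is simple enough to sketch. The snippet below is not Cloud Run's implementation, only an illustration of what "can a TCP connection be established?" means in practice; the function name `tcp_probe` and the timeout value are assumptions made for the example.

```python
import socket


def tcp_probe(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) can be established."""
    try:
        # create_connection performs the TCP handshake; success means
        # something is listening on the port.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or otherwise unreachable.
        return False
```

Note that a probe like this only proves the port is open. It says nothing about whether the application behind it can do useful work, which is where HTTP and gRPC probes come in.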

HTTP

This is the most common type of probe for startup and liveness checks. For an HTTP probe, Cloud Run sends an HTTP GET request to the specified path to perform the check. Configured as a startup probe, it lets you mark your container as ready once the HTTP endpoint returns a success response. You will need to implement your own health check endpoint, for example /startz, within your application.
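As a rough sketch of what such an endpoint could look like, the example below uses only the Python standard library. It answers 503 on /startz until startup work has finished and 200 afterwards. The handler, the `ready` flag, the `make_server` and `run` helpers, and port 8080 are all assumptions for illustration; a real service would normally add this route to its existing web framework.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Set once the (hypothetical) startup work has finished.
ready = threading.Event()


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/startz":
            # Success only after startup has completed.
            status = 200 if ready.is_set() else 503
        else:
            status = 404
        self.send_response(status)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args):
        pass  # keep probe traffic out of the logs


def make_server(host: str = "0.0.0.0", port: int = 8080) -> HTTPServer:
    return HTTPServer((host, port), HealthHandler)


def run(load_configuration) -> None:
    """Serve immediately, and flip the ready flag when the slow startup work is done."""
    server = make_server()

    def startup():
        load_configuration()  # caller-supplied slow initialization
        ready.set()

    threading.Thread(target=startup, daemon=True).start()
    server.serve_forever()
```

The design choice here is to start listening right away and let the probe endpoint report readiness, rather than delaying the listener until initialization completes.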

Similarly, if you configure an HTTP endpoint for a liveness probe, Cloud Run will make an HTTP GET request to the service's health check endpoint. If the HTTP liveness probe fails within the specified time, the container will be stopped and then restarted by the next incoming request.

As a typical example, consider an HTTP startup probe whose failure threshold and probe period multiply out to 20*10=200s. The application has a maximum of 200s to finish its startup. Once the startup probe has succeeded once, the application is marked ready to serve traffic. If the startup probe never succeeds, the container is terminated after 200s.
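The 200s figure can be checked with a small simulation. This sketch assumes that 20 is the failure threshold and 10 is the period in seconds (the text does not say which number is which), and it simplifies by placing the first probe one full period after start. The function names are hypothetical.

```python
from typing import Callable, Optional


def startup_budget_seconds(failure_threshold: int, period_seconds: int) -> int:
    """Upper bound on startup time before the container is terminated."""
    return failure_threshold * period_seconds


def simulate_startup(
    probe: Callable[[int], bool], failure_threshold: int, period_seconds: int
) -> Optional[int]:
    """Return the elapsed seconds at which the probe first succeeds,
    or None if every attempt fails and the container would be terminated."""
    for attempt in range(1, failure_threshold + 1):
        elapsed = attempt * period_seconds
        if probe(elapsed):
            return elapsed  # one success is enough to mark the container ready
    return None


# An app that needs 45s is marked ready at the first probe after that point.
print(simulate_startup(lambda t: t >= 45, 20, 10))   # 50
# An app that needs 250s never passes within the 200s budget.
print(simulate_startup(lambda t: t >= 250, 20, 10))  # None
```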

gRPC

Cloud Run supports deploying microservices that communicate using the gRPC protocol. For such services, you may choose to use the gRPC health check probing mechanism. These probes follow the same principle as the other probe types, but the health check inside your application needs to follow the gRPC Health Checking Protocol guidelines. Once your app implements the gRPC health service endpoints, the Cloud Run service can be configured to use gRPC for its startup and liveness checks.

Service Health

Note that these checks are used to better manage containers inside Cloud Run, allowing your containers to communicate with Cloud Run itself. They do not provide a “service-level” health check at the load balancer layer to indicate whether your service is healthy overall. Instead, they focus on keeping the overall quality of your service high (lowering your potential error rate) by ensuring that the containers Cloud Run is running for you are actually available to perform the requested work.

Conclusion

Managing a large pool of microservices in a serverless environment comes with its own set of challenges. Cloud Run already makes it super easy to host and run your serverless applications, and with health checks you now have more control over making these apps robust and reliable.

You can find the instructions on how to set up health checks for your Cloud Run service in the Cloud Run documentation.
