Background

With the increasing popularity of AI-generated content (AIGC), open-source projects based on text-to-image AI models such as MidJourney and Stable Diffusion have emerged. Stable Diffusion is a diffusion model that generates realistic images from text prompts. In this GitHub repository, we provide three solutions for deploying Stable Diffusion quickly on Google Cloud: on Vertex AI, on Google Kubernetes Engine (GKE), and on Agones-based platforms, each relying on elastic infrastructure to keep the service stable. This article focuses on running Stable Diffusion on GKE and shows how to improve launch times by up to 4x.

Problem statement

The container image of Stable Diffusion is quite large, reaching approximately 10-20GB, which slows down the image pulling process during container startup and consequently affects the launch time. In scenarios that require rapid scaling, launching new container replicas may take more than 10 minutes, significantly impacting user experience.

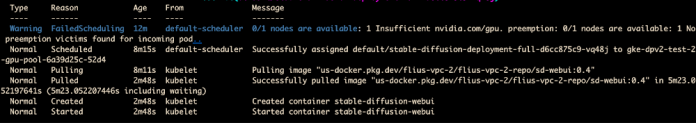

During the launch of the container, we can see the following events in chronological order:

Triggering Cluster Autoscaler for scaling + Node startup and Pod scheduling: 225 seconds

Image pull startup: 4 seconds

Image pulling: 5 minutes 23 seconds

Pod startup: 1 second

sd-webui serving: more than 2 minutes
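As a rough check on where the time goes, the phases above can be added up. The sketch below copies the durations from the timeline and treats "more than 2 minutes" as its lower bound of 120 seconds, which is an assumption; the variable names are illustrative only.

```python
# Phase durations in seconds, copied from the timeline above.
# "more than 2 minutes" is taken as its lower bound of 120 s (an assumption).
baseline_phases = {
    "Autoscaler trigger, node startup, Pod scheduling": 225,
    "Image pull startup": 4,
    "Image pulling": 5 * 60 + 23,
    "Pod startup": 1,
    "sd-webui serving": 2 * 60,
}

total_seconds = sum(baseline_phases.values())

# Fraction of the total launch time spent in each phase.
shares = {name: seconds / total_seconds for name, seconds in baseline_phases.items()}

print(f"Total: {total_seconds} s (about {total_seconds / 60:.1f} min)")
for name, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {share:.0%}")
```

With these figures the total comes to 673 seconds, a little over 11 minutes, and image pulling alone accounts for close to half of it.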

Analyzing this timeline, we can see that the slow startup of the Stable Diffusion WebUI container is primarily due to the heavy dependencies of the entire runtime, which result in a large container image and prolonged image pulling and pod initialization.

Therefore, we consider optimizing the startup time from the following three aspects:

Optimizing the Dockerfile: Selecting the appropriate base image and minimizing the installation of runtime dependencies to reduce the image size.

Separating the base environment from the runtime dependencies: Accelerating the creation of the runtime environment through Persistent Disk (PD) disk images.

Leveraging GKE Image Streaming: Optimizing image loading time with GKE Image Streaming, and using Cluster Autoscaler to speed up elastic scaling and resizing.

This article focuses on a solution that optimizes the startup time of the Stable Diffusion WebUI container by separating the base environment from the runtime dependencies and leveraging a high-performance disk image.

Optimizing the Dockerfile

First of all, here’s a reference Dockerfile based on the official installation instructions for the Stable Diffusion WebUI:

When we first built the container image for Stable Diffusion, we found that, besides the NVIDIA runtime base image, it also contained numerous installed libraries, dependencies, and extensions.

Analyzing the Dockerfile showed that the NVIDIA runtime occupies approximately 2GB, while the PyTorch library is a very large package, taking up around 5GB. Stable Diffusion and its extensions also occupy some space.

Therefore, following the principle of a minimal viable environment, we can remove unnecessary dependencies from the image.

We can use the NVIDIA runtime as the base image and separate the PyTorch library, Stable Diffusion libraries, and extensions from the original image, storing them separately in the file system. Below is the original Dockerfile snippet:

After moving out the PyTorch libraries and Stable Diffusion, we retained only the NVIDIA runtime in the base image. Here is the new Dockerfile:

Using PD disk images to store libraries

Persistent Disk (PD) disk images are the cornerstone of instance deployment in Google Cloud. Often referred to as templates or bootstrap disks, these virtual images contain the baseline operating system and all the application software and configuration your instance will have upon first boot. The idea here is to store all the runtime libraries and extensions in a disk image, which in this case has a size of 6.77GB. The advantage of using a disk image is that it can support up to 1,000 simultaneous disk recoveries, making it suitable for scenarios involving large-scale scaling and resizing.

We use a DaemonSet to mount the disk when GKE nodes start.

The specific steps are as follows:

As described in the previous sections, to speed up the initial launch we mount a persistent disk on each GKE node to hold the runtime libraries for Stable Diffusion.
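One plausible sequence for a node-level helper is: create a disk from the disk image, attach it to the node's VM, and mount it on the host. The sketch below only builds the command lines and does not run them. The function name, disk naming scheme, device name, disk type, and mount point are all hypothetical, and the repository's actual DaemonSet may do this differently. It also assumes the GKE node name matches the Compute Engine instance name and that the helper runs with enough privileges to mount on the host.

```python
from typing import List


def disk_mount_commands(
    node_name: str,
    zone: str,
    disk_image: str,
    mount_point: str = "/mnt/sd-runtime",  # hypothetical host path
) -> List[List[str]]:
    """Build the commands to create, attach, and mount a per-node disk."""
    disk_name = f"{node_name}-sd-runtime"  # hypothetical naming scheme
    device_name = "sd-runtime"             # hypothetical device name
    return [
        # 1. Create a new persistent disk from the prepared disk image.
        ["gcloud", "compute", "disks", "create", disk_name,
         f"--image={disk_image}", f"--zone={zone}", "--type=pd-ssd"],
        # 2. Attach the disk to the VM that backs this GKE node.
        ["gcloud", "compute", "instances", "attach-disk", node_name,
         f"--disk={disk_name}", f"--device-name={device_name}", f"--zone={zone}"],
        # 3. Mount it; Compute Engine exposes attached disks by device name.
        ["mkdir", "-p", mount_point],
        ["mount", "-o", "discard,defaults",
         f"/dev/disk/by-id/google-{device_name}", mount_point],
    ]


for command in disk_mount_commands("gke-node-1", "us-central1-a", "sd-runtime-image"):
    print(" ".join(command))
```

Returning the commands as argument lists keeps the sketch side-effect free, so the sequence can be reviewed or logged before anything is executed against a real project.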

Leveraging GKE Image Streaming and Cluster Autoscaler

In addition, as mentioned earlier, we have enabled GKE Image Streaming to accelerate image pulling and loading. GKE Image Streaming works by network-mounting the container’s data layer into containerd, backed by multiple cache layers on the network, in memory, and on disk. Once the Image Streaming mount is prepared, containers transition from the ImagePulling state to Running in a matter of seconds, regardless of the container size. This effectively parallelizes application startup with the transfer of the required data from the container image. As a result, you get faster container startup times and faster automatic scaling.
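As an illustration of how Image Streaming can be switched on for an existing cluster, the sketch below builds the corresponding `gcloud` command without running it. The cluster name and region are placeholders and the helper function is hypothetical. Image Streaming also has prerequisites not covered here, such as storing the image in Artifact Registry.

```python
import shlex
from typing import List


def enable_image_streaming_command(cluster_name: str, region: str) -> List[str]:
    """Build the gcloud command that enables Image Streaming on a cluster."""
    return [
        "gcloud", "container", "clusters", "update", cluster_name,
        f"--region={region}",
        "--enable-image-streaming",
    ]


# Placeholder cluster name and region, for illustration only.
print(shlex.join(enable_image_streaming_command("sd-cluster", "us-central1")))
```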

We have enabled the Cluster Autoscaler (CA) feature, which allows the GKE node pool to scale up automatically as request volume increases. Cluster Autoscaler determines the number of nodes needed to handle the additional requests. When it initiates a new scaling wave and the new GKE nodes are registered in the cluster, the DaemonSet starts working to mount the disk image that contains the runtime dependencies. The Stable Diffusion Deployment then accesses this disk through a HostPath volume. Additionally, we use the optimize-utilization profile of the Cluster Autoscaler, which prioritizes optimizing utilization over keeping spare resources in the cluster, to reduce scaling time, save costs, and improve machine utilization.
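To make the HostPath access concrete, here is a minimal sketch of a Deployment manifest built as a Python dictionary and printed as JSON, which `kubectl apply -f` accepts alongside YAML. The names, labels, image reference, container mount path, and host path are all hypothetical; the manifest in the repository may differ.

```python
import json


def sd_webui_deployment(image: str, host_path: str = "/mnt/sd-runtime") -> dict:
    """Build a Deployment whose Pod reads runtime libraries from a node path."""
    volume_name = "runtime-libs"  # hypothetical volume name
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "sd-webui"},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": {"app": "sd-webui"}},
            "template": {
                "metadata": {"labels": {"app": "sd-webui"}},
                "spec": {
                    "containers": [{
                        "name": "sd-webui",
                        "image": image,  # slim image with only the NVIDIA runtime
                        "resources": {"limits": {"nvidia.com/gpu": 1}},
                        "volumeMounts": [{
                            "name": volume_name,
                            "mountPath": "/runtime-libs",  # hypothetical path
                        }],
                    }],
                    "volumes": [{
                        "name": volume_name,
                        # Directory the DaemonSet is assumed to have mounted
                        # on the node before this Pod starts.
                        "hostPath": {"path": host_path, "type": "Directory"},
                    }],
                },
            },
        },
    }


print(json.dumps(sd_webui_deployment("example-registry/sd-webui:base"), indent=2))
```

Using `"type": "Directory"` means the Pod fails to start if the path does not yet exist on the node, which surfaces ordering problems between the DaemonSet and the Deployment early.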

Final results

The final startup timeline, in chronological order, is as follows:

Triggering Cluster Autoscaler for scaling: 38 seconds

Node startup and Pod scheduling: 89 seconds

Mounting PVC: 4 seconds

Image pull startup: 10 seconds

Image pulling: 1 second

Pod startup: 1 second

Ability to provide sd-webui service (approximately): 65 seconds

Overall, it took approximately 3 minutes to start a new Stable Diffusion container instance and begin serving on a new GKE node. Compared with the previous 12 minutes, this is a significant improvement in startup speed and a noticeably better user experience.
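The headline figure can be sanity-checked by summing the optimized phases and comparing the result with the 12-minute baseline quoted above. The durations are copied from the timeline; the variable names are illustrative only.

```python
# Optimized phase durations in seconds, copied from the timeline above.
optimized_phases = {
    "Triggering Cluster Autoscaler": 38,
    "Node startup and Pod scheduling": 89,
    "Mounting PVC": 4,
    "Image pull startup": 10,
    "Image pulling": 1,
    "Pod startup": 1,
    "sd-webui serving": 65,
}

optimized_total = sum(optimized_phases.values())

# Baseline launch time as stated in the text: 12 minutes.
baseline_total = 12 * 60

speedup = baseline_total / optimized_total

print(f"Optimized total: {optimized_total} s (about {optimized_total / 60:.1f} min)")
print(f"Speedup over the 12-minute baseline: {speedup:.2f}x")
```

The phases sum to 208 seconds, about 3.5 minutes, which is roughly 3.5x faster than the 12-minute baseline. Rounding the optimized time down to 3 minutes gives the 4x figure.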

Take a look at the full code here: https://github.com/nonokangwei/Stable-Diffusion-on-GCP/tree/main/Stable-Diffusion-UI-Agones/optimizated-init

Considerations

While the technique described above splits up dependencies so that the container image is smaller and the libraries load from PD disk images, there are some downsides to consider. Packing everything into one container image has the advantage of giving you a single immutable, versioned artifact. Separating the base environment from the runtime dependencies means you have multiple artifacts to maintain and update. You can mitigate this by building tooling to manage updates to your PD disk images.
