Parallel queries in PostgreSQL have the ability to use more than one CPU core per query. In parallel queries the optimizer breaks down the query tasks into smaller parts and spreads each task across multiple CPU cores. Each core can process the tasks at the same time thereby reducing the total time taken to complete the query task.

There are instances where parallel queries are not beneficial. For example: when only a small amount of data is needed, and it can be quickly processed by only one core. The optimizer decides whether it will be beneficial to execute the query by using multiple CPU cores or a single core.

In this post, I explain how to implement parallel queries in Amazon Aurora PostgreSQL-Compatible Edition and Amazon RDS for PostgreSQL databases, and demonstrate the performance gain that RDS Postgres can achieve using the parallel query feature.

Note: The PostgreSQL implementation of parallel query described in this blog is different than the parallel query feature implemented by Amazon Aurora MySQL-Compatible Edition.

Prerequisites

Create a new parameter group with parameters for parallel queries as follows:

max_worker_processes – Maximum number of background processes that can be launched to support parallel queries.

max_parallel_workers – Maximum number of workers that support parallel operations.

max_parallel_workers_per_gather (default 0) – Number of additional workers that can be used on a query.

parallel_tuple_cost (default 0.1) – Estimates the cost of transferring one tuple from a parallel worker process to another process

parallel_setup_cost (default 1000.0) – Estimates the cost of launching parallel worker processes

min_parallel_relation_size (default 8 MB) – A relation larger than this parameter is considered for parallel scans

force_parallel_mode (default off) – This is useful when testing parallel query scans even when there is no performance benefit

Note: For more information regarding setting these parameters as per your workload refer to the PostgreSQL documentation.

Create an RDS PostgreSQL instance

To create an RDS or Aurora PostgreSQL instance and connect to a PostgreSQL database, see the following instructions, respectively:

Create and Connect to a PostgreSQL Database with Amazon RDS

Creating a DB cluster and connecting to a database on Aurora PostgreSQL

Make sure to use the parameter group that you created when you create the instance. For instructions, see Associating a DB parameter group with a DB instance.

Overview of solution

In order to understand Parallel Query in PostgreSQL, we should first look at the fundamental structure of query processing in PostgreSQL. PostgreSQL queries are broken down into multiple steps by the optimizer and then each step is processed sequentially. The reason being PostgreSQL is a process based system and not threaded and a single connection has its own process. The different parts of query processing in PostgreSQL are:

Parser: Generates a parse tree from a SQL statement.

Analyzer: Creates a semantic analysis of a parse tree and generates a query tree.

Rewriter: Transforms a query tree using the rules stored in the rule system if such rules exist.

Planner: Generates the plan tree that can most effectively be executed from the query tree.

Executor: Executes the query via accessing the tables and indexes in the order that was created by the plan tree.

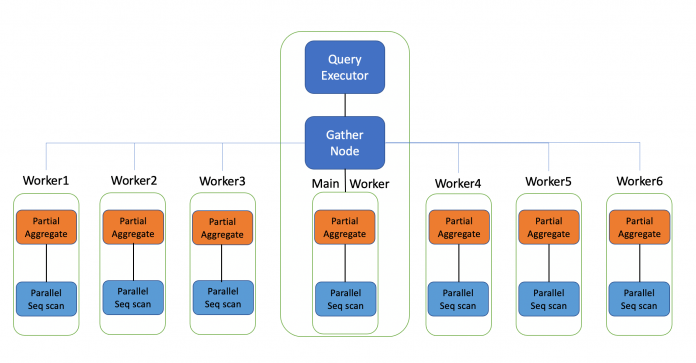

The following is an architectural diagram of how PostgreSQL uses parallel query processing.

Every parallel process query in PostgreSQL has two main components:

Gather node – Every parallel process query has a gather root node that is responsible for gathering, processing, and combining all the information provided by the sub-worker nodes. Every time the planner decides that a query must be parallelized, it creates a gather node and assigns worker nodes to the gather node for parallel processing.

Worker nodes – Multiple workers work in parallel to get chunks of information from different parts of the tables. This information is given to one gather node that combines all this information and provides it to the query output queue.

Implement parallel queries

You can also set up parallel query parameters at the session level when running a set command.

In the following example code, I create a table and generate data to test the parallel plan implementation:

Explain plan and outputs

The following explain plans are taken from an average of three runs for every iteration.

First we check the explain plan of our query without any workers provided for the parallel plan (disabling the parallel query). The following code determines if the parallel query functionality is disabled:

The parallel mode is set to off in the preceding code, which verifies that our parallel queries are currently not working. The following code demonstrates the query run time without parallel query workers:

Review the performance gain

In this section, we see how we can achieve performance gains from implementing our solution.

After we disabled parallel query workers in the preceding section, the sequential scan on the table took 6,929.438 milliseconds. The following code is the explain plan after we enable parallel query workers:

The run time for the same query has reduced from 6,929.438 milliseconds (approximately 6.9 seconds) to 2,804.390 (approximately 2.8 seconds).

This shows an improvement of up to three times faster in the parallel query performance .

When you run a parallel plan, you can use EXPLAIN (ANALYZE, VERBOSE) to display per-worker statistics for each plan node. This may be useful in determining whether the work is being evenly distributed between all plan nodes, and also helpful in understanding the performance characteristics of the plan. See the following example code:

Limitations

Keep in mind the following limitations:

A parallel plan for the query isn’t generated if a query contains a data-modifying operation. For example, if your query is a delete of an update statement and not a select statement, the query plan for parallel query isn’t generated.

A query within another query that is already running in parallel doesn’t qualify to run as a parallel query.

A system-defined function or a query within a function is marked as parallel unsafe.

A parallel plan doesn’t run if background workers can’t be obtained by the system, either due to memory or available resources on the server.

Conclusion

In this post, I showed you how parallel query support in PostgreSQL provides performance gains compared to sequential queries. This feature is built into PostgreSQL 9.6 onwards. Having the right number of worker sessions is highly recommended to fully take advantage of parallel queries, and Amazon Aurora and RDS PostgreSQL instances are a great way to start using parallel queries.

About the Author

Vikram Singh Rai is a Senior consultant with AWS Professional Services. He works with AWS DMS, SCT, DMAF, Aurora, and RDS PostgreSQL related AWS services to bring the best possible experience to customers.

Personal note from author: Work hard, play by the rules!!

Read MoreAWS Database Blog