Today, geospatial workflows typically consist of loading data, transforming it, and then producing visual insights like maps, text, or charts. Generative AI can automate these tasks through autonomous agents. In this post, we discuss how to use foundation models from Amazon Bedrock to power agents to complete geospatial tasks. These agents can perform various tasks and answer questions using location-based services like geocoding available through Amazon Location Service. We also share sample code that uses an agent to bridge the capabilities of Amazon Bedrock with Amazon Location. Additionally, we discuss the design considerations that went into building it.

Amazon Bedrock is a fully managed service that offers an easy-to-use API for accessing foundation models for text, images, and embeddings. Amazon Location offers an API for maps, places, and routing with data provided by trusted third parties such as Esri, HERE, Grab, and OpenStreetMap. If you need full control of your infrastructure, you can use Amazon SageMaker JumpStart, which lets you deploy foundation models yourself and gives you access to hundreds of them.

Solution overview

In the realm of large language models (LLMs), an agent is an entity that can autonomously reason and complete tasks with an LLM’s help. This allows LLMs to go beyond text generation to conduct conversations and complete domain-specific tasks. To guide this behavior, we employ reasoning patterns. According to the research paper Large Language Models are Zero-Shot Reasoners, LLMs excel at high-level reasoning, despite having a knowledge cutoff.

We selected Claude 2 as our foundation model from Amazon Bedrock with the aim of creating a geospatial agent capable of handling geospatial tasks. The overarching concept was straightforward: think like a geospatial data scientist. The task involved writing Python code to read data, transform it, and then visualize it on a map. We used a prompting pattern known as Plan-and-Solve Prompting for this purpose.
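For readers who have not called Amazon Bedrock before, the following is a minimal sketch of sending one prompt to Claude 2 with a boto3 `bedrock-runtime` client. The helper name `ask_claude`, the token limit, and the temperature are illustrative assumptions and are not taken from the sample project. The client is passed in as an argument so the function can be exercised without AWS credentials.

```python
import json


def ask_claude(client, prompt, max_tokens=1024):
    """Send one prompt to Claude 2 on Amazon Bedrock and return the reply text.

    `client` is expected to behave like boto3.client("bedrock-runtime").
    """
    body = json.dumps({
        # Claude 2's text-completion format expects Human/Assistant turns.
        "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
        "temperature": 0.0,  # assumption: low temperature suits code generation
    })
    response = client.invoke_model(
        modelId="anthropic.claude-v2",
        contentType="application/json",
        accept="application/json",
        body=body,
    )
    # The response body is a stream that contains a JSON document.
    return json.loads(response["body"].read())["completion"]
```

With credentials configured, a call such as `ask_claude(boto3.client("bedrock-runtime", region_name="us-east-1"), "Write a haiku about maps")` would return the model's reply as a string.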

Using a Plan-and-Solve strategy allows for multi-step reasoning, with developing a high-level plan as the first task. This works well for our load, transform, and visualize workflow, which is the high-level plan our agent will use. Each of these subtasks is sent to Claude 2 to solve separately.
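The control flow can be sketched in a few lines. This is a simplified illustration under our own assumptions, not the agent's actual implementation: `llm` is any callable that maps a prompt string to a reply string (for example, a wrapper around an Amazon Bedrock call), and the prompt wording and function name are hypothetical.

```python
def plan_and_solve(task, llm):
    """Ask the model for a numbered plan, then solve each step separately."""
    plan_text = llm(
        f"Task: {task}\n"
        "Devise a plan as a numbered list of steps. Do not solve them yet."
    )
    # Keep only lines that look like "1. do something".
    steps = []
    for line in plan_text.splitlines():
        number, dot, description = line.strip().partition(". ")
        if dot and number.isdigit():
            steps.append(description.strip())

    results = []
    for step in steps:
        # Each subtask gets its own prompt, with earlier results as context.
        context = "\n".join(results)
        results.append(
            llm(f"Task: {task}\nDone so far:\n{context}\nNow do: {step}")
        )
    return steps, results
```

Passing earlier results into each later prompt is one possible design; it lets a step such as "visualize" see what "load" and "transform" produced.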

We devised an example task to create a price heatmap of Airbnb listings in New York. To plan a path to complete the task, the agent needs to understand the dataset. The agent needs to know the columns in the dataset and the type of data in those columns. We generate a summary from the dataset so the agent can plan for the task provided by the user, in this case, generating a heatmap.
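As an illustration of the kind of summary that helps here, the sketch below reads CSV text and reports each column's name, an inferred type, and an example value. It uses only the standard library for portability, whereas the agent's generated code works with pandas. The function names and the columns in the sample data are assumptions.

```python
import csv
import io


def summarize_csv(text, sample_rows=100):
    """Return {column: {"type": ..., "example": ...}} for CSV text."""
    reader = csv.DictReader(io.StringIO(text))
    # Read at most sample_rows rows; a sample is enough to infer types.
    rows = [row for _, row in zip(range(sample_rows), reader)]
    summary = {}
    for column in reader.fieldnames or []:
        values = [row[column] for row in rows if row[column] not in ("", None)]
        summary[column] = {
            "type": _infer_type(values),
            "example": values[0] if values else None,
        }
    return summary


def _infer_type(values):
    """Classify a column as int, float, or str from its non-empty values."""
    if not values:
        return "unknown"
    for name, cast in (("int", int), ("float", float)):
        try:
            for value in values:
                cast(value)
            return name
        except ValueError:
            continue
    return "str"


# Hypothetical sample resembling a listings file.
sample = (
    "latitude,longitude,price,name\n"
    "40.71,-74.00,150,Loft\n"
    "40.73,-73.99,95,Studio\n"
)
summary = summarize_csv(sample)
print(summary["price"])
```

A summary like this can be pasted into the planning prompt so the model knows which columns hold coordinates and which hold the value to plot.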

Prerequisites

There are a few prerequisites to deploy the demo. You’ll need access to an AWS account with an access key or AWS Identity and Access Management (IAM) role with permissions to Amazon Bedrock and Amazon Location. You will need to create a map, a place index, and an Amazon Location API key using the Amazon Location console. You will also need access to either a local or virtual environment where Docker is installed. In our demonstration, we use an Amazon Elastic Compute Cloud (Amazon EC2) instance running Amazon Linux with Docker installed.

Read and summarize the data

To give the agent context about the dataset, we prompt Claude 2 to write Python code that reads the data and provides a summary relevant to our task. The following are a few of the prompts we included. The full list is available in the prompts.py file in the project.

From these prompts, Claude 2 generated the following Python code:

The agent:// prefix tells our CLI that this file is stored inside the session storage folder. Upon running the CLI, it will create a geospatial-agent-session-storage folder to store local data. The agent now recognizes the uploaded Airbnb data and its column names. Next, let’s ask Claude for some hints to generate a heatmap using these columns. We will pass in the following prompts.

Claude 2 replies with a hint:

Plan a solution

Now we can ask Claude 2 to draft a high-level plan. We can use a graph library for Python called NetworkX to map out the steps for solving our problem.

Similar to our last prompt, we pass in a few requirements to guide Claude 2:

From these prompts, Claude 2 generated the following Python code:

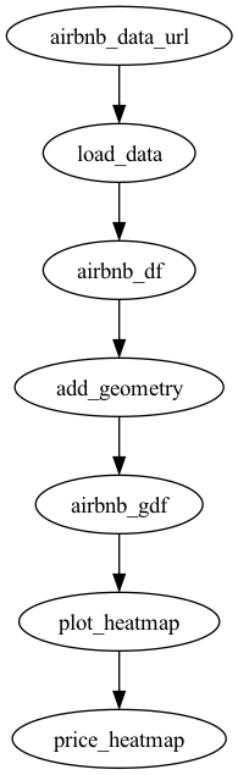

In this NetworkX graph, Claude 2 breaks down the process into three main segments:

Loading data – Importing the Airbnb listing prices from a given URL into a Pandas DataFrame

Transforming data – Creating a geometry column based on the latitude and longitude coordinates

Visualizing data – Generating a heatmap to display the pricing of Airbnb listings
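The three segments above form a small dependency graph. As a stand-in for the NetworkX graph that Claude 2 generated, the sketch below builds the same shape with the standard library's `graphlib` (Python 3.9 or later) and derives an execution order from it. The node names are hypothetical.

```python
from graphlib import TopologicalSorter

# Map each step to the set of steps it depends on (hypothetical node names).
plan = {
    "load_data": set(),
    "transform_data": {"load_data"},
    "visualize_data": {"transform_data"},
}

# static_order() yields every node after all of its dependencies.
execution_order = list(TopologicalSorter(plan).static_order())
print(execution_order)
```

In NetworkX, the equivalent would be a `DiGraph` with an edge from each step to the next, ordered with `networkx.topological_sort`.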

This approach allows for a clear and straightforward implementation of the geospatial task at hand. We can use Graphviz to visualize the following workflow.

Implement the plan

Now that Claude 2 has provided us with a plan, it’s time to bring it to life. For each step, we prompt Claude 2 to write the corresponding code. To keep Claude 2 focused, we supply high-level requirements for each task. Let’s dive into the code that Claude 2 generated for each individual phase.

Load the data

To load the Airbnb listing price data into a Pandas DataFrame, we create a prompt and pass in some parameters. The “Load Airbnb data” value in Operation_task refers to the Load Data node in the graph we created earlier.

From these prompts, Claude 2 generated the following Python code:

Transform the data

Next, Claude 2 generates the code to add a geometry column to our DataFrame using latitude and longitude. For this prompt, we pass in the following requirements:

From these prompts, Claude 2 generated the following Python code:
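A minimal hand-written sketch of this step, for illustration rather than a copy of Claude 2's output, might look like the following. It represents each listing as a dictionary and stores the geometry as a GeoJSON-style point instead of using a geospatial DataFrame library. The function name and the `latitude` and `longitude` column names are assumptions.

```python
def add_geometry(rows, lat_col="latitude", lon_col="longitude"):
    """Return copies of rows with a GeoJSON-style Point under "geometry".

    Rows with missing, non-numeric, or out-of-range coordinates are dropped.
    """
    result = []
    for row in rows:
        try:
            lat, lon = float(row[lat_col]), float(row[lon_col])
        except (KeyError, TypeError, ValueError):
            continue
        if not (-90 <= lat <= 90 and -180 <= lon <= 180):
            continue
        # GeoJSON orders coordinates as [longitude, latitude].
        point = {"type": "Point", "coordinates": [lon, lat]}
        result.append({**row, "geometry": point})
    return result
```

Validating the coordinate ranges at this stage keeps malformed rows from distorting the map later.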

Visualize the data

Finally, Claude 2 builds a heatmap visualization using pydeck, which is a Python library for spatial rendering. For this prompt, we pass in the following requirements:

From these prompts, Claude 2 generated the following Python code:
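For orientation, a hand-written sketch of a pydeck heatmap is shown below; it is not the code Claude 2 returned. It assumes each row is a dictionary with numeric `latitude`, `longitude`, and `price` keys, and it relies on pydeck's default basemap rather than configuring an Amazon Location map. The function names and zoom level are illustrative.

```python
def map_center(rows):
    """Return the mean (latitude, longitude) of the rows to center the map."""
    lats = [float(row["latitude"]) for row in rows]
    lons = [float(row["longitude"]) for row in rows]
    return sum(lats) / len(lats), sum(lons) / len(lons)


def write_price_heatmap(rows, out_path="heatmap.html"):
    """Render a price-weighted heatmap to an HTML file with pydeck."""
    import pydeck as pdk  # imported here so map_center works without pydeck

    lat, lon = map_center(rows)
    layer = pdk.Layer(
        "HeatmapLayer",
        data=rows,
        get_position=["longitude", "latitude"],
        get_weight="price",  # higher prices contribute more heat
    )
    deck = pdk.Deck(
        layers=[layer],
        initial_view_state=pdk.ViewState(latitude=lat, longitude=lon, zoom=10),
    )
    deck.to_html(out_path)
    return out_path
```

Calling `write_price_heatmap(rows)` with pydeck installed would write `heatmap.html`, which can be opened in a browser.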

When Claude 2 returns a response, it also includes some helpful notes explaining how each function meets the provided requirements. For example, for the heatmap visualization, Claude 2 noted the following:

“This function generates a heatmap of Airbnb listing prices using pydeck and saves the resulting HTML locally. It fulfills the requirements specified in the prompt.”

Assemble the generated code

Now that Claude 2 has created the individual building blocks, it’s time to put it all together. The agent automatically assembles all these snippets into a single Python file. This script calls each of our functions in sequence, streamlining the entire process.
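One way to picture this assembly step is the sketch below, which concatenates per-step function sources in plan order and appends calls that chain each function's output into the next. The snippet contents, names, and chaining convention are all hypothetical; the sample project's own assembly logic may differ.

```python
def assemble_script(snippets, order):
    """Join generated function sources and call them in the given order.

    snippets maps a function name to its source code. Each function after
    the first is assumed to take the previous function's result.
    """
    parts = [snippets[name].strip() for name in order]
    calls = []
    previous = None
    for name in order:
        argument = previous or ""
        calls.append(f"{name}_result = {name}({argument})")
        previous = f"{name}_result"
    return "\n\n\n".join(parts) + "\n\n\n" + "\n".join(calls) + "\n"


# Toy stand-ins for the generated load, transform, and visualize functions.
snippets = {
    "load": "def load():\n    return [1, 2, 3]",
    "transform": "def transform(data):\n    return [x * 2 for x in data]",
    "visualize": "def visualize(data):\n    return f'plotted {len(data)} points'",
}
script = assemble_script(snippets, ["load", "transform", "visualize"])
print(script)
```

The printed script defines the three functions and ends with three call lines, one per step, in plan order.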

The final step looks like the following code:

After the script is complete, we can see that Claude 2 has created an HTML file with the code to visualize our heatmap. The following image shows New York on an Amazon Location basemap with a heatmap visualizing Airbnb listing prices.

Use Amazon Location with Amazon Bedrock

Although our Plan-and-Solve agent can handle this geospatial task, we need to take a slightly different approach for tasks like geocoding an address. For this, we can use a strategy called ReAct, where we combine reasoning and acting with our LLM.

In the ReAct pattern, the agent reasons and acts based on customer input and the tools at its disposal. To equip this Claude 2-powered agent with the capability to geocode, we developed a geocoding tool. This tool uses the Amazon Location Places API, specifically the SearchPlaceIndexForText method, to convert an address into its geographic coordinates.

Within this brief exchange, the agent deciphers your intent to geocode an address, activates the geocoding tool, and returns the latitude and longitude.
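The pattern can be sketched as follows. The geocoding helper calls `search_place_index_for_text` on a boto3 `location` client, and a small loop lets the model alternate between reasoning and tool calls. The `Action: tool[input]` and `Final Answer:` text protocol, the function names, and the `llm` callable are assumptions made for illustration; the sample project's agent may structure this differently.

```python
import re


def geocode(client, index_name, address):
    """Return (latitude, longitude) for an address, or None if nothing matches.

    `client` is expected to behave like boto3.client("location").
    """
    response = client.search_place_index_for_text(
        IndexName=index_name, Text=address, MaxResults=1
    )
    results = response.get("Results", [])
    if not results:
        return None
    # Amazon Location returns points as [longitude, latitude].
    lon, lat = results[0]["Place"]["Geometry"]["Point"]
    return lat, lon


def react(question, llm, tools, max_turns=5):
    """Alternate model reasoning with tool calls until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_turns):
        reply = llm(transcript)
        transcript += reply + "\n"
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        action = re.search(r"Action: (\w+)\[(.*)\]", reply)
        if action:
            name, tool_input = action.groups()
            # Feed the tool result back so the model can reason over it.
            transcript += f"Observation: {tools[name](tool_input)}\n"
    return None  # no final answer within max_turns
```

A tool table such as `{"geocode": lambda text: geocode(client, "my-place-index", text)}` would connect the two functions, where `my-place-index` is a placeholder for the place index created in the prerequisites.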

Whether it’s plotting a heatmap or geocoding an address, Claude 2 combined with agent patterns like ReAct and Plan-and-Solve can simplify geospatial workflows.

Deploy the demo

To get started, complete the following steps:

Clone the following repository either to your local machine or to an EC2 instance. You may need to run aws configure --profile <profilename> and set a default Region; this application was tested using us-east-1.

Now that we have the repository cloned, we configure our environment variables.

Change directories into the cloned project folder:

Edit the .env file using your preferred text editor:

Add your map name, place index name, and API key:

Run the following command to build your container:

Run the following command to run and connect to your Docker container:

Grab the Airbnb dataset:

Run the following command to create a session. We use sessions to isolate unique chat environments.

Now you’re ready to start the application.

Run the following command to begin the chat application:

You will be greeted with a chat prompt.

You can begin by asking the following question:

The agent grabs the Airbnb_listings_price.csv file we have downloaded to the /data folder and parses it into a geospatial DataFrame. Then it generates the code to transform the data as well as the code for the visualization. Finally, it creates an HTML file that will be written in the /data folder, which you can open to visualize the heatmap in a browser.

Another example uses the Amazon Location Places API to geocode an address. If we ask the agent to geocode the address 112 E 11th St, Austin, TX 78701, we will get a response as shown in the following image.

Conclusion

In this post, we provided a brief overview of Amazon Bedrock and Amazon Location, and how you can use them together to analyze and visualize geospatial data. We also walked through Plan-and-Solve and ReAct and how we used them in our agent.

Our example only scratches the surface. Try downloading our sample code and adding your own agents and tools for your geospatial tasks.

About the authors

Jeff Demuth is a solutions architect who joined Amazon Web Services (AWS) in 2016. He focuses on the geospatial community and is passionate about geographic information systems (GIS) and technology. Outside of work, Jeff enjoys traveling, building Internet of Things (IoT) applications, and tinkering with the latest gadgets.

Swagata Prateek is a Senior Software Engineer working in Amazon Location Service at Amazon Web Services (AWS) where he focuses on Generative AI and geospatial.

Read more: AWS Machine Learning Blog