Analyzing data that originates on a blockchain can be challenging and time-consuming because smart contract structures are complex and varied. You need an in-depth understanding of each platform you wish to analyze: in the Web3 space, each protocol has its own way of storing data and calling methods, which makes implementation burdensome. Additionally, a substantial volume of transactions and blocks needs to be downloaded and fully processed for a meaningful analysis. The Graph helps address these difficulties by providing a standardized, open-source platform. In this post, we discuss how to gain insights from Web3 data by using The Graph and Amazon Managed Blockchain (AMB) Access. With The Graph, you have full control of what you are indexing and which events are relevant to you. You can also use Amazon Managed Blockchain (AMB) Query, a fully managed blockchain data offering that provides developer-friendly APIs for current and historical token balances, transactions, and events for native and standard token types on both the Ethereum and Bitcoin networks.

While a blockchain stores a comprehensive record of transactions, including the state of the ledger following smart contract interactions, it is not optimized for efficient data querying. For instance, information about the provenance of an NFT is stored within a blockchain because it has stored all ownership transfers. Retrieving this data presents a cumbersome process, requiring you to extract and inspect every block for ownership transfers of a specific NFT and then compile a list of all previous holders. Given that the blockchain is not indexed for these types of queries, establishing ownership data amounts to scanning the full blockchain for NFT transfers. This isn’t feasible for any but the most basic queries. Instead, application developers need a means of querying blockchain data efficiently. The Graph addresses this need by indexing blockchain data and making it available for rich and performant queries.
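To make the difference concrete, the following minimal sketch contrasts a full scan with a lookup against a prebuilt index. It is an in-memory illustration only: the Block and TransferEvent shapes, the addresses, and the function names are hypothetical and far simpler than real chain data or The Graph's internals.

```typescript
// Hypothetical, simplified shapes; real blocks and logs carry many more fields.
interface TransferEvent {
  tokenId: number;
  from: string;
  to: string;
}

interface Block {
  number: number;
  transfers: TransferEvent[];
}

// Naive approach: walk every block and every transfer for each question asked.
function ownersByFullScan(chain: Block[], tokenId: number): string[] {
  const owners: string[] = [];
  for (const block of chain) {
    for (const t of block.transfers) {
      if (t.tokenId === tokenId) owners.push(t.to);
    }
  }
  return owners;
}

// Indexed approach: process the chain once, then answer lookups from a map.
function buildOwnerIndex(chain: Block[]): Map<number, string[]> {
  const index = new Map<number, string[]>();
  for (const block of chain) {
    for (const t of block.transfers) {
      const history = index.get(t.tokenId) ?? [];
      history.push(t.to);
      index.set(t.tokenId, history);
    }
  }
  return index;
}

// Made-up sample chain with two tokens.
const chain: Block[] = [
  { number: 1, transfers: [{ tokenId: 7, from: "0x0", to: "0xalice" }] },
  { number: 2, transfers: [{ tokenId: 9, from: "0x0", to: "0xcarol" }] },
  { number: 3, transfers: [{ tokenId: 7, from: "0xalice", to: "0xbob" }] },
];

const ownerIndex = buildOwnerIndex(chain);
// Both calls list the holders of token 7 in order: 0xalice, then 0xbob.
console.log(ownersByFullScan(chain, 7));
console.log(ownerIndex.get(7));
```

The scan repeats its work for every query, whereas the index pays the processing cost once and serves each later lookup directly.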

The Graph is a decentralized protocol for indexing and querying smart contract events from various blockchains in a fast and efficient way. It indexes events from specific smart contracts, stores the data in a database, and provides a GraphQL interface for querying the data. A Graph Node can be run on The Graph’s decentralized network (for Ethereum, Polygon, Avalanche, Arbitrum One, Gnosis, or Celo). However, if you need to index any other EVM-compatible blockchain, you can do so with your own Graph Node.

The basics of The Graph protocol

The primary purpose of The Graph is to index, process, and store event data from a blockchain. It converts events emitted from a smart contract into data structures that are consumable by a frontend or API-utilizing logic.

After data is indexed from a blockchain, it’s organized into a predefined schema before being persisted into a PostgreSQL database. The data can be queried through GraphQL endpoints on the Graph Node. The mappings and smart contract definitions are defined as a subgraph.

Subgraph definitions specify which smart contract(s) to index as well as the specific events to track. The mapping is used to persist the data into the database. A subgraph definition consists of three parts that you specify:

GraphQL schema – This defines the target schema for storage. It also defines how you can query for data later on.

Mapping – This translates (smart contract) event data into entities. This part defines the transformation logic.

Subgraph configuration – This specifies the smart contract to index, the starting block, and similar config data.

After a subgraph has been defined, it needs to be deployed to a Graph Node. With the deployment, the node is aware of the subgraph and starts indexing the smart contract. It starts with the defined starting block and backfills the database up to the current block head. When the Graph Node is in sync with the current block head, it subscribes to future events and updates the database as they come in.
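The interplay of configuration, mapping, and backfill can be sketched in plain TypeScript. This is a simulation under stated assumptions, not Graph Node code: real mappings are written in AssemblyScript against the graph-ts library, and the SubgraphConfig, TransferLog, and Token types as well as the backfill function are hypothetical names chosen for illustration.

```typescript
// Subgraph configuration: which contract to index and where to start.
interface SubgraphConfig {
  contractAddress: string;
  startBlock: number;
}

// A simplified event as it might arrive from the blockchain node.
interface TransferLog {
  blockNumber: number;
  address: string;
  tokenId: string;
  to: string;
}

// Entity shape, standing in for a type declared in the GraphQL schema.
interface Token {
  id: string;
  owner: string;
  updatedAtBlock: number;
}

// Mapping: turn one event into an entity write.
function handleTransfer(store: Map<string, Token>, log: TransferLog): void {
  store.set(log.tokenId, { id: log.tokenId, owner: log.to, updatedAtBlock: log.blockNumber });
}

// Backfill: replay matching events from the start block up to the chain head.
function backfill(config: SubgraphConfig, logs: TransferLog[], head: number): Map<string, Token> {
  const store = new Map<string, Token>();
  const relevant = logs
    .filter((l) => l.address === config.contractAddress)
    .filter((l) => l.blockNumber >= config.startBlock && l.blockNumber <= head)
    .sort((a, b) => a.blockNumber - b.blockNumber);
  for (const log of relevant) handleTransfer(store, log);
  return store;
}

const config: SubgraphConfig = { contractAddress: "0xnft", startBlock: 10 };
const logs: TransferLog[] = [
  { blockNumber: 5, address: "0xnft", tokenId: "1", to: "0xearly" },      // before the start block
  { blockNumber: 12, address: "0xnft", tokenId: "1", to: "0xalice" },
  { blockNumber: 15, address: "0xother", tokenId: "1", to: "0xmallory" }, // different contract
  { blockNumber: 20, address: "0xnft", tokenId: "1", to: "0xbob" },
  { blockNumber: 30, address: "0xnft", tokenId: "1", to: "0xlate" },      // beyond the current head
];

const store = backfill(config, logs, 25);
console.log(store.get("1"));
```

Once the store has caught up with the head, following the chain amounts to calling the same handler for each new event as it arrives.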

Use cases and applications of The Graph

The Graph allows for an effective way of querying blockchain data. Use cases for this protocol span multiple industries:

Decentralized finance (DeFi) – Uniswap, a protocol in the DeFi space, uses a subgraph for fetching trading pair data in real time. This allows users to get the latest prices and liquidity information for different cryptocurrency pairs.

Non-fungible tokens (NFTs) – In the NFT ecosystem, a subgraph can be created to track marketplaces such as OpenSea. This allows developers the ability to track ownership and transaction history of digital assets.

Decentralized autonomous organizations (DAOs) – Governance platforms such as Aragon use a subgraph in order to keep track of voting activities and decisions made within their DAOs. This enables a transparent and democratic process, which is crucial for maintaining trust within a community.

Gaming – Blockchain games such as Aavegotchi use a subgraph to index in-game events so they can display the current state of the game in the front end.

These are just a few examples of how The Graph’s decentralized data indexing can be harnessed. As innovation continues within the Web3 ecosystem, the possibilities for utilizing The Graph will continue to expand.

Data sources: Full node or archive node

The Graph indexes smart contract event data, which is sourced from a blockchain node. A Graph Node retrieves all new data for a newly created block from the blockchain node and then maps the data to the database schema. As the input source, a Graph Node can connect to either a full or archive blockchain node. There are several differences between a full node and an archive node that are crucial to understand when deciding which specific smart contracts you wish to index. A full node stores the entire blockchain’s history of transactions, but only the most recent state of each smart contract (the latest 128 blocks), making it less storage-intensive. An archive node retains all historical states, providing a complete historical context, but requiring substantially more storage. This variation determines the data a Graph Node can use while constructing its dataset.

Full node – A full node provides all event data for transactions with the payload. For example, NFT transfers usually consist of the sender, the receiver, and the token ID. The Graph indexes this data and builds up the ownership history of a token by processing all transfer events.

Archive node – In addition to the data on a full node, an archive node stores all historical states for the blockchain. An archive node can return an answer to a query such as “What was the tokenURI for a particular token at a certain block?” A Graph Node sourcing its data from an archive node can augment its data by calling a smart contract for additional information. Whenever the Graph Node handles an event, it can call smart contracts. A newly minted token, for example, can be augmented with its tokenURI by calling the tokenURI() function at the block that emitted the event. An archive node provides the correct answer, whereas a full node cannot — state information is pruned after 128 blocks.
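The pruning behavior described above can be expressed as a small predicate. This is a sketch that assumes the 128-block state window mentioned in this section; canServeStateAt and the other names are hypothetical and not part of any client API.

```typescript
type NodeKind = "full" | "archive";

// Assumption taken from the text above: a full node keeps state for the latest 128 blocks.
const FULL_NODE_STATE_WINDOW = 128;

// Can a contract call (for example tokenURI()) be answered for the state at `atBlock`?
function canServeStateAt(kind: NodeKind, headBlock: number, atBlock: number): boolean {
  if (atBlock < 0 || atBlock > headBlock) return false; // block is not part of the chain
  if (kind === "archive") return true;                   // all historical state is retained
  return headBlock - atBlock < FULL_NODE_STATE_WINDOW;   // only recent state is retained
}

const head = 1_000_000;
console.log(canServeStateAt("full", head, head - 10));     // recent block
console.log(canServeStateAt("full", head, head - 50_000)); // long-pruned block
console.log(canServeStateAt("archive", head, head - 50_000));
```

During a backfill, almost every event lies far behind the head, which is why a subgraph that calls contracts while indexing needs an archive node.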

For a rich dataset, use an archive node as the data source: only an archive node lets a subgraph enrich events with smart contract calls at historical blocks.

Apart from blockchain data directly, The Graph can additionally query the InterPlanetary File System (IPFS), a decentralized network designed for storing and sharing data. Data on IPFS is immutable due to its content-based addressing functionality, making it a good fit for The Graph.

Deploying your own node on AWS

Running your own Graph Node on AWS presents numerous benefits, such as the following:

Scalability – You can adjust the resources (storage and computing power) allocated to your Graph Node as needed. If you have a read-heavy or write-heavy workload, you can scale out by creating additional nodes to ensure high availability.

Reliability – You can minimize your application’s downtime by utilizing AWS’s reliable and robust infrastructure.

Data integrity – You have full control over data indexing and query parameters.

Security – You can protect your data from unauthorized access by integrating with AWS Identity and Access Management (IAM). Access to your Graph Node is additionally restricted to the IP address of your development machine.

Management – Maintenance, hardware, software, and network infrastructure are all managed by AWS. This enables you to prioritize innovation rather than the underlying infrastructure.

Integration – You can integrate your Graph Node with various other services, such as Amazon Relational Database Service (Amazon RDS) and AWS Lambda.

AMB Access is a fully managed service that allows you to run your own dedicated node for Ethereum. This node is a full node and provides access to Ethereum mainnet and Goerli testnet. AMB Access can be used as the data source for a Graph Node.

If the subgraph needs an archive node, you can run one as a self-managed node on Amazon Elastic Compute Cloud (Amazon EC2). This option gives you full control over which client you want to run.

If your use case does not require custom events, and is built around token balances, transaction details, and ownership information across accounts for native (ETH, BTC) and standard token types (ERC-20, ERC-721, and ERC-1155), consider using AMB Query instead of a Graph Node. Because it is a managed service, it does not need to be deployed or maintained.

Solution overview

You can deploy the Graph Node with the AWS Cloud Development Kit (AWS CDK), which provides the configuration for the components in JavaScript. It is available in the GitHub repository. The solution uses the following services:

Amazon Elastic Container Service (Amazon ECS) is a fully managed container orchestration service to run highly secure, reliable, and scalable containerized applications. It launches, monitors, and scales applications across flexible compute options with automatic integrations to other supporting AWS services. In this post, the Amazon Elastic Compute Cloud (Amazon EC2) launch type is used to run your containerized Graph Node on an EC2 instance.

Amazon Aurora PostgreSQL-Compatible Edition makes it simple to deploy scalable PostgreSQL databases in minutes with cost-efficient and resizable hardware capacity. After a subgraph has been deployed onto a Graph Node, blockchain data will be indexed and processed before being persisted into the database.

IPFS node – Metadata associated with a subgraph deployment is stored on the InterPlanetary File System (IPFS) network. During deployment, the Graph Node interfaces with the IPFS node to retrieve the subgraph manifest along with all linked files. The IPFS node runs as a Docker container and uses Amazon Elastic File System (Amazon EFS) as its underlying storage.

Queries – The Graph Node provides GraphQL endpoints to query the subgraphs. These endpoints are exposed via Amazon API Gateway.

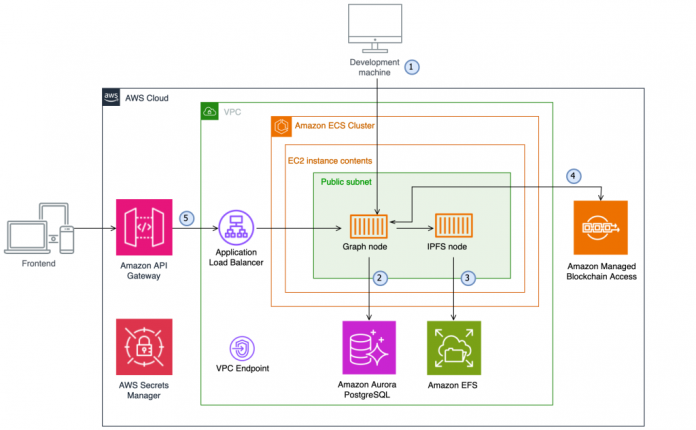

The following diagram illustrates the architecture of this solution.

The arrows indicate different data flows between the components:

From the local development machine, you can directly access the EC2 instance that hosts the containers. This path is used to deploy a subgraph and maintain the Graph Node.

The Graph Node stores the index on an Aurora PostgreSQL database.

The IPFS node is backed by an Amazon Elastic File System (Amazon EFS) to store the subgraph’s metadata.

The Graph Node indexes the blockchain provided by AMB Access.

For queries, access to the GraphQL endpoints is routed through API Gateway and an Application Load Balancer to establish stable endpoints.

This repository has the normal folder structure for an AWS CDK application. In addition, there are two sub-folders worth mentioning:

subgraph – This folder contains the definition for a subgraph that can be used for testing. Once the Graph Node is running, a subgraph needs to be deployed. Upon deployment, the Graph Node will begin to index the subgraph.

frontend – A simple React frontend that can be used to test the subgraph and display its results. It can be used to demonstrate the time-travel capabilities of the Graph Node. It works with the example subgraphs that are included in the repository.

Prerequisites

The AWS CDK accelerates cloud development using common programming languages to model your applications.

To manually install the cdk command line client on macOS and Linux and validate the installed version, run npm install -g aws-cdk followed by cdk --version.

If you haven’t done this before for your account and Region, you need to bootstrap the AWS account for usage with the AWS CDK. To do so, run cdk bootstrap.

This creates an AWS CloudFormation stack in your account/Region combination. After that, you can use the AWS CDK to manage your AWS CDK stacks.

The CDK application to deploy the self-hosted Graph Node will incur costs. For additional information, refer to AWS Pricing.

Install Docker

The AWS CDK uses a Docker-based build process. The computer running the cdk commands needs to have Docker installed in order to run the build. If you don’t have Docker installed, install it before building the AWS CDK stacks.

Deploy the Graph Node

After you clone the repository, install all the needed npm packages of the TheGraph-Service AWS CDK application by running npm install in the application folder.

We need to allow external access to the Graph Node through the security group of the EC2 instance for the deployment of the subgraph. There are two ways of deploying to the Graph Node:

From your local development machine – In order to access the Graph Node from the local machine, we need to open the Graph Node’s security group to the external IP address of the machine. Therefore, you need to look up the IP of your (external) development machine and modify cdk.json so the IP address gets exported to the AWS CDK as a context variable.

From an AWS-based instance (such as AWS Cloud9) – To permit traffic from another EC2 instance, you need to open the Graph Node’s security group to allow traffic from the AWS Cloud9 instance’s security group. If you’re developing from an EC2 instance, note the security group’s ID (it should start with sg-) and add it to cdk.json as the value of allowedSG. It will be exported to the AWS CDK.

There are three more settings in cdk.json:

clientUrl – Specifies the RPC URL for the blockchain node. When using AMB Access as your Ethereum node, use token-based access: the default Graph Node is incompatible with AWS Signature Version 4 (SigV4) authentication, and the token ensures that only authorized requests can be made.

chainID – Specifies the chain ID of the network.

apiKey – Sets an API key that will be needed to access the API Gateway for queries. This can be any string. The value of this parameter needs to be included as a header when making a request to the Graph Node.

If you are developing locally, set allowedSG to an empty string (“”).
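Before deploying, it can help to sanity-check these settings. The following sketch validates a context object that mirrors the keys described above. The allowedIP key name, the numeric type of chainID, and all sample values are assumptions made for illustration; check the cdk.json in the repository for the actual names and formats.

```typescript
// Context values as they might appear in cdk.json.
interface GraphNodeContext {
  clientUrl: string;
  chainID: number;   // assumed to be numeric here
  apiKey: string;
  allowedSG: string;
  allowedIP: string; // hypothetical key name for the development machine's IP
}

// Returns a list of human-readable problems; an empty list means the checks passed.
function validateContext(ctx: GraphNodeContext): string[] {
  const problems: string[] = [];
  if (!/^https?:\/\/\S+$/.test(ctx.clientUrl)) {
    problems.push("clientUrl must be an http(s) URL");
  }
  if (!Number.isInteger(ctx.chainID) || ctx.chainID <= 0) {
    problems.push("chainID must be a positive integer");
  }
  if (ctx.apiKey.length === 0) {
    problems.push("apiKey must not be empty");
  }
  if (ctx.allowedSG !== "" && !ctx.allowedSG.startsWith("sg-")) {
    problems.push('allowedSG must be "" or start with sg-');
  }
  if (ctx.allowedSG === "" && ctx.allowedIP === "") {
    problems.push("set either allowedIP or allowedSG");
  }
  return problems;
}

// Local development: no security group, access by IP (placeholder values).
const localDev: GraphNodeContext = {
  clientUrl: "https://example.invalid/rpc?token=placeholder",
  chainID: 1, // Ethereum mainnet
  apiKey: "my-secret-key",
  allowedSG: "",
  allowedIP: "203.0.113.10",
};

console.log(validateContext(localDev));
```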

At this point, you can list the available AWS CDK stacks with cdk ls.

You can now deploy the whole stack with the cdk deploy command (add the --all option if the application contains more than one stack).

Deploy a subgraph

To manage subgraphs, you need the Graph CLI. You can install it globally for easy access with npm install -g @graphprotocol/graph-cli.

For writing subgraph mappings, additionally install The Graph TypeScript library with npm install @graphprotocol/graph-ts.

There are two ways to create a new subgraph:

Start from scratch and have the Graph CLI scaffold a folder for you

Use an included subgraph as a starting point.

Scaffold a new folder

With graph init --allow-simple-name, you can scaffold a new folder for a subgraph. Run the command in the subgraph folder and answer all the questions. The second question asks for the “product for which to initialize”. Choose the hosted service option here. We want to deploy the subgraph to our running node, so that is the correct option.

When the command finishes, you will have a new folder with a basic template for a subgraph in it. cd into this folder.

Use the existing subgraph

If you want to start with an existing example, you can use subgraph/boredApes (or subgraph/boredApes_simple). These are subgraphs that index all transfers of the popular Bored Ape Yacht Club (BAYC) NFT collection. These subgraphs use two data sources: the blockchain itself as well as IPFS metadata files. BAYC stores its metadata and the images themselves on IPFS. The subgraph will index the collection’s metadata files for each new token it detects.

The main difference between boredApes and boredApes_simple is the reliance on an archive node: boredApes calls the smart contract during indexing and needs an archive node. boredApes_simple hardcodes some values and doesn’t need to call the smart contract during indexing because it only relies on event data. This is why it only needs a full node.

cd into the subgraph folder that you wish to deploy and install the required packages by running npm install.

Define a subgraph

In the folder there are three main files of interest:

schema.graphql – This file holds the GraphQL schema that will be used for querying the subgraph. It defines what data will be stored in the database.

subgraph.yaml – This file holds the subgraph configuration. It defines the data source to index, the starting block, and the events that should be indexed.

src/<subgraphName>.ts – This file defines how data is mapped into the GraphQL schema. The file holds functions for each event you are indexing. The functions define how the data is stored in the database.

The three files define a subgraph. Because we are defining the mapping and the functions for the mapping ourselves (the what and the how), we have considerable freedom in creating complex subgraphs. Two things are worth mentioning:

We aren’t restricted to the data we are receiving for each event. Instead, in the mapping functions, we can query different data sources (such as IPFS files) to enrich our data set. We can also call other smart contracts to query additional data.

We aren’t restricted to events for triggering our functions. We can also define block handlers, which can trigger at every block. This is possible but not advisable: subgraphs that trigger on every block are slow and costly to compute. The official Graph documentation has a comprehensive guide on creating a subgraph.

Deploy the subgraph

After creating its definition, the subgraph needs to be deployed to the node. This consists of three steps: building, creating, and deploying. The Graph CLI helps with that:

Building the subgraph – graph codegen generates the AssemblyScript types for the subgraph from the GraphQL schema and the contract ABIs. This creates a generated folder that the mappings import from. Whenever the subgraph is modified, this step needs to be repeated.

Creating the subgraph on the node – graph create --node http://<IP OF GRAPH NODE EC2>:8020/ <NAME OF SUBGRAPH> creates the subgraph on our node. This is a one-time action.

Deploying the subgraph to the node – graph deploy --node http://<IP OF GRAPH NODE EC2>:8020/ --ipfs http://<IP OF GRAPH NODE EC2>:5001/ <NAME OF SUBGRAPH> deploys the subgraph to the node. It prompts for a version label for the subgraph, which is needed when you update the subgraph and want to provide a new version. When this command has finished, the subgraph has been deployed and the Graph Node will start indexing. If a subgraph is modified and needs re-deployment, step 1 (building) and this step (deploying) need to be repeated, but not the creation of the subgraph (step 2).

The create and deploy commands need to communicate with the Graph Node on ports 8020 and 5001. The AWS CDK application allows access on these ports from the allowed IP address and the allowed security group defined in cdk.json.
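To avoid retyping the endpoints, the three commands can be assembled from the node's IP address and the subgraph name. This helper is a hypothetical convenience that only builds strings; the ports and options are the ones listed above, and the IP address is a placeholder from the documentation range.

```typescript
interface DeployTarget {
  nodeIp: string;       // public IP of the EC2 instance running the Graph Node
  subgraphName: string; // name under which the subgraph is created
}

// Returns the commands in the order they are run: build, create, deploy.
function graphCommands(target: DeployTarget): string[] {
  const admin = `http://${target.nodeIp}:8020/`;
  const ipfs = `http://${target.nodeIp}:5001/`;
  return [
    "graph codegen",
    `graph create --node ${admin} ${target.subgraphName}`,
    `graph deploy --node ${admin} --ipfs ${ipfs} ${target.subgraphName}`,
  ];
}

const commands = graphCommands({ nodeIp: "203.0.113.10", subgraphName: "boredApes" });
for (const command of commands) console.log(command);
```

For a re-deployment, only the first and the last command need to be run again.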

Query a subgraph

After the subgraph has been deployed, it can be queried via GraphQL. There are two ways for accessing the Graph Node:

For development purposes, you can query the EC2 instance directly from a specified IP. Contrary to the output of the graph deploy command, the GraphQL endpoint is reachable on port 80 (directly on the EC2 IP) and not on port 8000 (which is used internally on the Docker container). From the development machine, the GraphQL endpoint is: http://<EC2 IP>/subgraphs/name/<NAME OF SUBGRAPH>. The GraphiQL explorer, an in-browser graphical interface for creating queries, can additionally be accessed from this endpoint.

For general queries (from the front end or other applications), you can query the subgraph through API Gateway. There are two routes on the API Gateway:

POST <API Gateway Invoke URL>/subgraphs/name/<NAME OF SUBGRAPH> accepts valid subgraph names as a path element. It is used for queries on the specified subgraph.

POST <API Gateway Invoke URL>/graphql is for status queries about the syncing status of the Graph Node.

For the BAYC subgraph, a valid query to retrieve the first 100 owners of BAYC NFTs is as follows:

This query will retrieve the first 100 accounts and provide all information about each token that these accounts own. Because the subgraph stores historical data, it can return every previous owner of a token and provide provenance data.
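A request of this kind, sent through API Gateway, could be assembled as in the following sketch. Several parts are assumptions: the accounts and tokens entity names depend on the subgraph's schema, x-api-key is the header API Gateway conventionally uses for API keys (the deployed stack may expect a different one), and the invoke URL and key are placeholders.

```typescript
interface GraphQLRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Builds a POST request for the /subgraphs/name/<NAME OF SUBGRAPH> route without sending it.
function buildSubgraphRequest(
  invokeUrl: string,
  subgraphName: string,
  apiKey: string,
  query: string
): GraphQLRequest {
  const base = invokeUrl.replace(/\/+$/, ""); // drop trailing slashes
  return {
    url: `${base}/subgraphs/name/${subgraphName}`,
    method: "POST",
    headers: { "Content-Type": "application/json", "x-api-key": apiKey },
    body: JSON.stringify({ query }),
  };
}

// Hypothetical query shape; adjust entity and field names to the deployed schema.
const ownersQuery = `{
  accounts(first: 100) {
    id
    tokens { id }
  }
}`;

const request = buildSubgraphRequest(
  "https://example.invalid/prod/",
  "boredApes",
  "my-secret-key",
  ownersQuery
);
console.log(request.url);
```

The resulting object can be passed to an HTTP client, for example fetch(request.url, { method: request.method, headers: request.headers, body: request.body }).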

For checking the syncing status for a subgraph, you can use the following query. It needs to be run against the /graphql endpoint.

To query the syncing status of a subgraph, you can run the following command from your terminal:
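The response to a status query can be reduced to a single "blocks behind" number. The sketch below assumes the response shape of Graph Node's indexing status API as we understand it (an indexingStatusForCurrentVersion field with synced, health, and per-chain block pointers whose numbers arrive as strings); verify the field names against your Graph Node version. The sample response is made up.

```typescript
// Status query to send to the /graphql route; field names are an assumption to verify.
const statusQuery = `{
  indexingStatusForCurrentVersion(subgraphName: "boredApes") {
    synced
    health
    chains {
      chainHeadBlock { number }
      latestBlock { number }
    }
  }
}`;

interface BlockPointer {
  number: string; // block numbers are assumed to be serialized as strings
}

interface ChainStatus {
  chainHeadBlock: BlockPointer | null;
  latestBlock: BlockPointer | null;
}

interface IndexingStatus {
  synced: boolean;
  health: string;
  chains: ChainStatus[];
}

// Number of blocks the subgraph still has to index, or null if unknown.
function blocksBehind(status: IndexingStatus): number | null {
  const chain = status.chains[0];
  if (!chain || !chain.chainHeadBlock || !chain.latestBlock) return null;
  return Number(chain.chainHeadBlock.number) - Number(chain.latestBlock.number);
}

// Made-up response for illustration.
const sampleStatus: IndexingStatus = {
  synced: false,
  health: "healthy",
  chains: [{ chainHeadBlock: { number: "1000" }, latestBlock: { number: "940" } }],
};

console.log(statusQuery.length > 0, blocksBehind(sampleStatus));
```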

Clean up

To avoid incurring future charges, delete the resources you created by running cdk destroy from the root of the directory.

Conclusion

The Graph is a useful component of an application’s architecture in the Web3 landscape because it can serve as the backbone for data retrieval and management. With the ability to provide custom subgraphs for indexing predefined smart contracts, you have full control over the data and how it is mapped to the database.

The number of queries from a front end can be reduced by having the subgraph return richer responses. For example, to retrieve the ownership, attributes, and images for 100 NFTs from an NFT collection, you would need 300 calls when using the smart contract directly (one each for owner, metadata, and image per token). With a custom subgraph, you can reduce that to 101 calls: a single GraphQL query returns ownership and metadata for all 100 tokens, and the remaining 100 calls load the actual images from the image sources. This ultimately shifts the load from the front end to the indexer.
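The arithmetic behind these numbers can be written out as follows. The breakdown into owner, metadata, and image calls is our reading of the example; the function names are hypothetical.

```typescript
// Direct contract access: one call each for owner, metadata, and image per token.
function callsWithoutIndexer(tokens: number): number {
  return tokens * 3;
}

// With a subgraph: one GraphQL query for owners and metadata, plus one image load per token.
function callsWithSubgraph(tokens: number): number {
  return 1 + tokens;
}

console.log(callsWithoutIndexer(100), callsWithSubgraph(100)); // 300 101
```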

In this post, we showed you how to deploy a self-hosted Graph Node on AWS to index smart contracts from any EVM-compatible blockchain. The self-hosted node expands potential use cases to many more blockchains than the ones currently supported by the decentralized network. To learn more about Amazon Managed Blockchain and other customer success stories, explore our catalog of blog posts.

About the Authors

Christoph Niemann is a Senior Blockchain Solutions Architect at AWS. He likes blockchains and helps customers design and build blockchain-based solutions. If he’s not building with blockchains, he’s probably drinking coffee.

Simon Goldberg is a Blockchain/Web3 Specialist Solutions Architect at AWS. Outside of work, he enjoys music production, reading, climbing, tennis, hiking, attending concerts, and researching Web3 technologies.

Read more: AWS Database Blog