Starting today, Firestore supports database triggers for Firestore in Datastore Mode. This capability builds on the recently launched Firestore and Eventarc trigger integration, which was previously available only to Firestore in Native Mode customers.

Firestore’s Eventarc trigger integration lets you set up a change data capture system. Common uses include replicating Firestore document changes to other platform services such as BigQuery, sending push or email notifications to end users based on specific Firestore data changes, and archiving deleted Firestore documents in a different storage system. The integration supports a wide set of destinations, including Cloud Run, Google Kubernetes Engine (GKE), and Cloud Functions (2nd gen), and it uses the open and portable CloudEvents format.

Example walkthrough

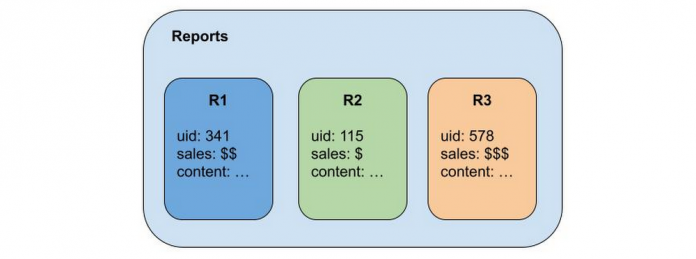

Let’s say that you need to replicate your Firestore operational data to BigQuery to perform data analytics. In this example, we’ll start with the following Firestore in Datastore Mode data model:

To set up a data replication trigger, navigate to the Eventarc section of the Cloud Console and create a new trigger for Firestore using the event types for Datastore Mode. Event types for Firestore in Datastore Mode share the prefix google.cloud.datastore.entity.v1. Here, we want to capture newly written entities, so we’ll subscribe to google.cloud.datastore.entity.v1.written events.

Next, you can specify additional filters, which ensure that only the events you want, from a specified database and kind of entity, are delivered. In this case, we filter for events from the (default) database and for entities of the kind Reports.
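To make the filtering behavior concrete, here is a minimal conceptual sketch in Python. Eventarc evaluates filters on the server side, so you would not write this yourself; the function `matches_filters` and the attribute name `kind` are hypothetical and chosen only for illustration. The sketch assumes that an event is delivered only when every configured filter matches.

```python
# Conceptual model only: Eventarc applies trigger filters server-side.
# The attribute names used here ("database", "kind") are illustrative
# placeholders, not necessarily the names Eventarc uses.

def matches_filters(event_attributes: dict, filters: dict) -> bool:
    """Return True only if every filter equals the matching event attribute."""
    return all(
        event_attributes.get(name) == expected
        for name, expected in filters.items()
    )


# Filters mirroring the walkthrough: event type, database, and kind.
trigger_filters = {
    "type": "google.cloud.datastore.entity.v1.written",
    "database": "(default)",
    "kind": "Reports",
}

# A write to an entity of kind Reports in the (default) database.
report_write = {
    "type": "google.cloud.datastore.entity.v1.written",
    "database": "(default)",
    "kind": "Reports",
}

# A write to an entity of a different (made-up) kind.
other_write = dict(report_write, kind="Users")

print(matches_filters(report_write, trigger_filters))  # True
print(matches_filters(other_write, trigger_filters))   # False
```

Because the filters combine as a conjunction, adding a filter can only narrow the set of delivered events.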

On the same screen, you also need to specify a destination. Events can be delivered to any supported Eventarc destination, such as Cloud Run, Cloud Functions (2nd gen), or Google Kubernetes Engine. Let’s say we have a Cloud Run service named analysis that exposes an HTTP endpoint to receive the events. Select that service as the destination for your trigger.

That’s it! When any write operation is applied to an entity of the kind Reports in your (default) database, a CloudEvent is delivered to the configured Cloud Run service analysis almost immediately. The Cloud Run service can then process newly written entities and write them to BigQuery using BigQuery’s Storage APIs.
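As a rough sketch of the receiving side, the Python below shows how a service such as analysis might read CloudEvents metadata from an incoming HTTP request. It assumes the event arrives in the CloudEvents HTTP binary content mode, where context attributes are carried in headers prefixed with ce-. The helper names `cloudevent_attributes` and `should_replicate` and the sample header values are hypothetical. Decoding the event payload and writing to BigQuery are out of scope here.

```python
from typing import Mapping
from urllib.parse import unquote

# Event type from the walkthrough above.
WRITTEN_EVENT_TYPE = "google.cloud.datastore.entity.v1.written"


def cloudevent_attributes(headers: Mapping[str, str]) -> dict:
    """Collect CloudEvents context attributes from binary-mode HTTP headers."""
    attributes = {}
    for name, value in headers.items():
        lowered = name.lower()  # HTTP header names are case-insensitive
        if lowered.startswith("ce-"):
            # The HTTP binding percent-encodes some characters in values.
            attributes[lowered[len("ce-"):]] = unquote(value)
    return attributes


def should_replicate(headers: Mapping[str, str]) -> bool:
    """Return True if the request carries an entity-written event."""
    return cloudevent_attributes(headers).get("type") == WRITTEN_EVENT_TYPE


# Hypothetical request headers, for illustration only.
sample_headers = {
    "Ce-Id": "example-event-id",
    "Ce-Specversion": "1.0",
    "Ce-Type": WRITTEN_EVENT_TYPE,
    "Ce-Source": "//example.invalid/hypothetical-source",
    "User-Agent": "example-agent",
}

print(should_replicate(sample_headers))  # True
```

Checking the event type in the handler is a defensive choice: the trigger already filters events, but the check keeps the service safe if another trigger is later pointed at the same endpoint.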

Next steps

For more information on how to set up and configure Firestore triggers, check out our documentation.

Thanks to both Minh Nguyen, Senior Product Manager Lead for Firestore and Juan Lara, Senior Technical Writer for Firestore, for their contributions to this blog post.
