As organizations adopt modern application architectures such as microservices, application teams tend to retrofit one-size-fits-all databases. The mortgage industry is going through unprecedented transformation due to changing generation technologies such as API adoption. In the mortgage industry, API-enabled software allows lenders, issuers, borrowers, and more to integrate different functionalities into their portal, meaning they bring together data that was previously siloed.

Mortgage-backed securities (MBS) are bonds secured by home and other real estate loans. They are created when several loans, usually with similar characteristics, are pooled together under a legal entity. In this post, we show you the use case of modeling mortgage-backed security data using a purpose-built database such as Amazon Neptune. By migrating the data to Neptune, we can dismantle barriers between data sources via a knowledge graph approach, which can provide a basis for better risk modeling and data consumption by means of several APIs.

Today, there is a need in mortgage-backed securities to provide a holistic view of the loan, borrower, property, pool, security, servicer, originator, and other related entities in a highly connected manner. The securities model is typically hierarchal in nature, with a group of entities such as loans, pools (a pool of loans), securities (“n” levels), and more, which makes the complexity of modeling the entity model rather difficult in a traditional database data store. Moreover, adding any new entity to an existing model typically requires changes in the data that’s already set up. In this post, we go through the process of unloading the source data from an existing relational data store, building the knowledge graph model, and consuming the data.

Solution overview

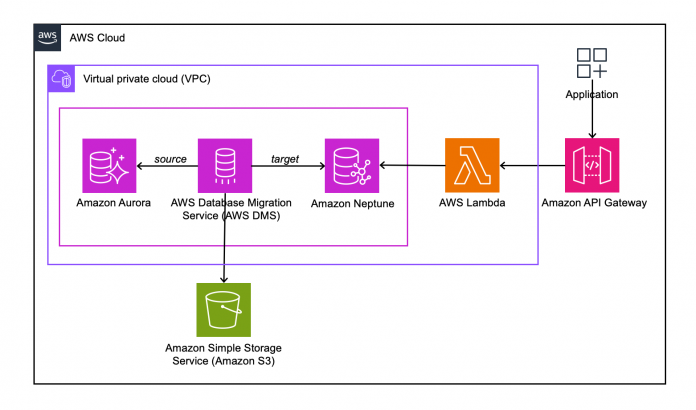

The following diagram shows the solution architecture.

The solution is comprised of the following key services:

Amazon API Gateway – A fully managed service that makes it straightforward to create, publish, maintain, monitor, and secure APIs at any scale for API consumers. API Gateway helps you manage traffic to backend systems, translate the input request into a graph language-specific query, and return the output in more readable manner for the consumer. API Gateway uses AWS Lambda as the proxy for the service implementation.

AWS Lambda – A serverless execution environment that enables you to interact with Neptune using the Gremlin query interface. Lambda provides the query function of the underlying connected data using a REST interface. We also use Lambda to load sample MBS source datasets into Amazon Aurora PostgreSQL.

Amazon Aurora PostgreSQL – Compatible Edition – A fully managed, PostgreSQL-compatible, and ACID compliant relational database engine that combines the speed, reliability, and manageability of Amazon Aurora with the simplicity and cost-effectiveness of open source databases. We show how the entities are modeled using Amazon Aurora PostgreSQL, where our source data resides.

Amazon Neptune – A fast, reliable, fully managed graph database service that makes it straightforward to build and run applications. We show how the knowledge graph is built from our source data. Neptune is our target data store.

AWS Database Migration Service – AWS DMS helps us migrate the data from a source database (Amazon Aurora PostgreSQL) to a target database (Neptune). We walk through the steps in building the mapping files needed for the migration.

Amazon Simple Storage Service – Amazon S3 is an object storage service offering industry-leading scalability, data availability, security, and performance. We use Amazon S3 for staging the source datasets and for the AWS DMS migration task.

Relational entity data model

The following entity relationship diagram depicts how different entities are related to each other with their attributes. In this post, we use the following tables as our source data for migrating them to Neptune and building a graph model.

Mortgage-backed securities are formed by pooling mortgage loans. The borrower works with a lender to complete a mortgage transaction, resulting in a mortgage loan. A mortgage loan is a loan in which the property is used as a collateral. Post-issuance of a mortgage loan, it is serviced by a servicer. The mortgage-backed securities schema is simplified for this post.

The source data is manually generated synthetic data that is similar to real-world data. The primary purpose of synthetic data is to increase the privacy and integrity of systems. It has a very limited set of loans and securities.

Graph data model

We first need to convert the relational data model into a graph data model with vertices and edges using the AWS DMS migration task. The definition of the graph mapping file used for conversion can be referenced in our source code path:

The following diagram shows equivalent entities as vertices and the relationships as edges.

Graph data visualization

Gremlin is a graph traversal language for property graphs. A query in Gremlin is a traversal made up of discrete steps, each of which follows an edge to a node. For more information, refer to the TinkerPop documentation. For this post, we use Gremlin and Amazon Neptune Workbench to create a visual diagram of data loaded into a Neptune database. For more details, refer to Graph visualization in the Neptune workbench.

An example of a Gremlin query associated with this dataset is as follows:

We can expand this query to bring the security value for the reporting period based on loan activity and many more such use cases. The following graph depicts the visualization of the same source dataset in Neptune by security (369WAU713).

At a glance, we observe many connections from the MBS vertex, in the center in blue. It consists of a bundle of mortgage loans, where each loan is collateralized by a property, the servicer who services the loan, the loan activities submitted for each mortgage loan, and so on.

The following table shows a list form of the dataset.

Data consumption through API

We have added a handful of REST APIs using the data persisted in the Neptune database and exposed them as API endpoints through API Gateway.

The following are the Gremlin queries used to build each API:

Get all loans by CUSIP (Committee on Uniform Security Identification Procedures):

Get all loans by seller:

Get all loans by servicer:

Imagine now if you want to connect Metropolitan Statistical Areas (MSA), which is a formal grouping of a city and its surrounding communities. You can do so with ease by expanding the existing dataset by adding new vertices and edges associated with the property zip code. Furthermore, supplementing with additional datasets such as Federal Reserve, macroeconomic data, and unemployment data would help the business provide meaningful inferences and risk insights. Integration of various datasets becomes easier with graph databases, allowing developers to focus on solving the business problem.

We have simplified the process of creating the infrastructure, loading the sample set of data from the source database and migrating it to the target graph database, and consuming the data through an API with a single AWS Cloud Development Kit (AWS CDK) project.

Cost considerations

The solution outlined in this post includes services such as Amazon Virtual Private Cloud (Amazon VPC), VPC endpoints, AWS Key Management Service (AWS KMS), API Gateway, Lambda, Neptune, Amazon Aurora PostgreSQL, and AWS DMS. After validating the deployment, don’t forget to destroy the resources as mentioned later in this post.

Prerequisites

For this walkthrough, you should have the following prerequisites:

Python version 3.9

Docker version 20.10.12

Postman to test your API

The AWS Command Line Interface (AWS CLI) set up to allow you to deploy your resources

The appropriate AWS credentials for interacting with resources in your AWS account

AWS CDK v2 installed

Deploy the solution

You can deploy the solution by completing the following steps:

Clone the project GitHub repository to your local machine after completing the prerequisites:

Create and activate a virtual environment:

For MacOS / Linux activation, run the following:

For Windows activation, run the following:

Install the Python-dependent packages:

Run the following commands to bootstrap the AWS environment:

Verify if the Docker daemon is running.

Deploy the AWS CDK project:

This process could take approximately 45 minutes.

Validate the deployment

To validate the mortgage backed-securities migration and its consumption of data from Neptune, complete the following steps:

On the API Gateway console, navigate to the API MbsApi.

In the navigation pane, choose API Keys.

Retrieve the API authorization key, which you need to pass accessing the APIs through Postman in subsequent steps.

Choose Stages in the navigation pane.

Retrieve the API endpoint for the dbloader POST method, which loads the source database with sample datasets into the Amazon Aurora PostgreSQL database.

Copy the invoke URL to Postman.

Set the x-api-key retrieved earlier in the Headers section.

Choose Send.

There is no payload required for this API.

Now you’re ready to load the data into Neptune using AWS DMS.

On the AWS DMS console, choose Database migration tasks in the navigation pane.

Select the mbs-rds-neptune-migration task.

On the Actions menu, choose Restart/Resume.

The status should change to Load complete upon completion of the task.

You can confirm a successful load of all rows on the Table statistics tab.

Validate the mbsget API through Postman by copying the invoke URL.

Enter the following JSON in the Request body section:

Set the x-api-key retrieved earlier in the Headers section.

Choose Send.

You can change the queryStringParameters to validate other APIs. For example, in the following screenshot, we get all loans by seller.

In the following screenshot, we get all loans by servicer.

Clean up

To avoid incurring ongoing charges, clean up the infrastructure by running the following command:

Summary

In this post, we described how to build connected datasets for mortgage-backed securities using purpose-built database such as Neptune, dismantling barriers between data sources. We also described how the data can be consumed with API Gateway. This technical choice of using Neptune as the primary database for this problem is driven by the complexity of the data objects and their relationships, which could potentially grow over time.

Reach out if you have any feedback or questions regarding the approach presented in this post. For more information on getting started with a Neptune database, refer to the Amazon Neptune User Guide.

About the Authors

Vijaykumar Pannirselvam is a Consultant at Amazon Web Services. Vijay is passionate about working with customers to understand their needs, leveraging the cloud to architect, implement innovative solutions and driving business value for them. He leverages his experience to help customers build serverless applications and big data workloads.

Supreeth Shettar is a Cloud Application Architect at Amazon Web Services. Supreeth has 19 years of experience in building and scaling distributed systems and web applications. Supreeth is passionate about helping customers adopt modern technologies such as cloud, containers, serverless and immutable infrastructure.

Tim Seyfried is a Data Specialist at Amazon Web Services. Tim is passionate about securing and getting value out of data. He has 28 years’ experience in data analytics, institutional data warehouses, and data storage technologies. He has helped several AWS customers to re-host, re-platform, and re-architect data storage migrating from on-premise to cloud technologies.

Read MoreAWS Database Blog