At the 2021 AWS re:Invent conference in Las Vegas, we demoed Read For Me at the AWS Builders Fair—a website that helps the visually impaired hear documents.

Adaptive technology and accessibility features are often expensive, if they’re available at all. Audio books help the visually impaired read. Audio description makes movies accessible. But what do you do when the content isn’t already digitized?

This post focuses on the AWS AI services Amazon Textract and Amazon Polly, which empower those with impaired vision. Read For Me was co-developed by Jack Marchetti, who is visually impaired.

Solution overview

Through an event-driven, serverless architecture and a combination of multiple AI services, we can create natural-sounding audio files in multiple languages from a picture of a document, or any image with text. Examples include a letter from the IRS, a holiday card from family, or even the opening titles to a film.

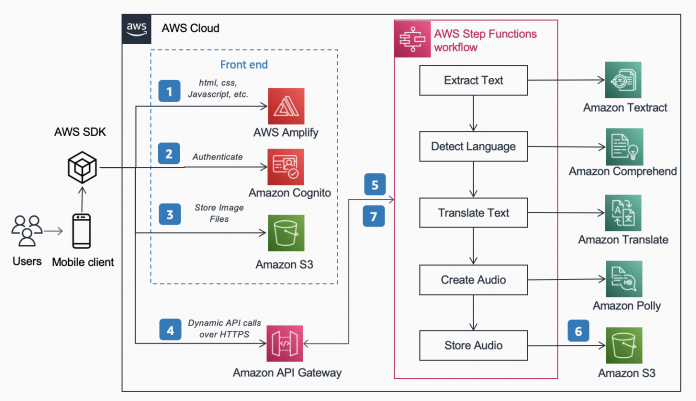

The following reference architecture, published in the AWS Architecture Center, shows the workflow of a user taking a picture with their phone and playing an MP3 of the content found within that document.

The workflow includes the following steps:

1. Static content (HTML, CSS, JavaScript) is hosted on AWS Amplify.
2. Temporary access is granted for anonymous users to backend services via an Amazon Cognito identity pool.
3. The image files are stored in Amazon Simple Storage Service (Amazon S3).
4. A user makes a POST request through Amazon API Gateway to the audio service, which proxies to an express AWS Step Functions workflow.
5. The Step Functions workflow includes the following steps:
   a. Amazon Textract extracts text from the image.
   b. Amazon Comprehend detects the language of the text.
   c. If the target language differs from the detected language, Amazon Translate translates to the target language.
   d. Amazon Polly creates an audio file as output using the text.
6. The AWS Step Functions workflow creates an audio file as output and stores it in Amazon S3 in MP3 format.
7. A pre-signed URL with the location of the audio file stored in Amazon S3 is sent back to the user’s browser through API Gateway. The user’s mobile device plays the audio file using the pre-signed URL.
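The last two steps (storing the MP3 and handing back a pre-signed URL) can be sketched with Boto3 roughly as follows. This is an illustrative sketch, not the project's actual code: the function name, bucket, key, and the five-minute expiry are assumptions, and the S3 client is passed in so the function can be exercised without AWS credentials.

```python
def store_audio_and_presign(s3_client, bucket, key, audio_bytes, expires_in=300):
    """Upload MP3 bytes to S3 and return a time-limited download URL.

    `s3_client` is expected to be a Boto3 S3 client, e.g. boto3.client("s3").
    The bucket, key, and expiry are hypothetical values chosen by the caller.
    """
    # Store the audio so the browser can fetch it directly from S3.
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=audio_bytes,
        ContentType="audio/mpeg",
    )
    # A pre-signed URL grants temporary read access to this one object.
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )
```

A short expiry fits this use case, because the audio is played right after it is created.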

In the following sections, we discuss why we chose the specific services, architecture pattern, and service features for this solution.

AWS AI services

Several AI services are wired together to power Read For Me:

- Amazon Textract identifies the text in the uploaded picture.
- Amazon Comprehend determines the language.
- If the user chooses a different spoken language than the language in the picture, we translate it using Amazon Translate.
- Amazon Polly creates the MP3 file. We take advantage of the Amazon Polly neural engine, which creates a more natural, lifelike audio recording.
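As a rough illustration of how the last three services fit together, the sketch below detects the language, translates only when needed, and asks Amazon Polly for neural MP3 output. It is a minimal sketch under assumptions, not the Read For Me source: the function name and parameters are hypothetical, the clients are passed in (for example `boto3.client("comprehend")`), and it ignores the input size limits that each API enforces.

```python
def text_to_speech(comprehend, translate, polly, text, target_language, voice_id):
    """Return MP3 bytes for `text`, translating first if the languages differ.

    All three clients are assumed to be Boto3 clients for Amazon Comprehend,
    Amazon Translate, and Amazon Polly. `voice_id` must be a Polly voice that
    supports the neural engine and matches `target_language`.
    """
    # Comprehend returns candidate languages, each with a confidence score.
    languages = comprehend.detect_dominant_language(Text=text)["Languages"]
    if languages:
        detected = max(languages, key=lambda lang: lang["Score"])["LanguageCode"]
    else:
        detected = target_language  # nothing detected: skip translation

    # Translate only when the requested language differs from the detected one.
    if detected != target_language:
        text = translate.translate_text(
            Text=text,
            SourceLanguageCode=detected,
            TargetLanguageCode=target_language,
        )["TranslatedText"]

    response = polly.synthesize_speech(
        Text=text,
        OutputFormat="mp3",
        VoiceId=voice_id,
        Engine="neural",
    )
    # AudioStream is a streaming body; read() returns the raw MP3 bytes.
    return response["AudioStream"].read()
```

Skipping the translation call when the languages already match saves both cost and latency.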

One of the main benefits of using these AI services is the ease of adoption with little or no core machine learning experience required. The services expose APIs that clients can invoke using SDKs made available in multiple programming languages, such as Python and Java.

With Read For Me, we wrote the underlying AWS Lambda functions in Python.

AWS SDK for Python (Boto3)

The AWS SDK for Python (Boto3) makes interacting with AWS services simple. For example, the following lines of Python code return the text found in the image or document you provide:
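A minimal sketch of such a call, assuming the image has already been uploaded to S3. The function name and its parameters are illustrative rather than taken from the project; `textract_client` would typically be `boto3.client("textract")`.

```python
def extract_text(textract_client, bucket, key):
    """Return the text Amazon Textract finds in an image stored in S3.

    `bucket` and `key` are hypothetical names for the uploaded picture.
    """
    response = textract_client.detect_document_text(
        Document={"S3Object": {"Bucket": bucket, "Name": key}}
    )
    # The response lists PAGE, LINE, and WORD blocks; LINE blocks already
    # contain the words of each line joined in reading order.
    lines = [
        block["Text"]
        for block in response["Blocks"]
        if block["BlockType"] == "LINE"
    ]
    return "\n".join(lines)
```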

All Python code is run within individual Lambda functions. There are no servers to provision and no infrastructure to maintain.

Architecture patterns

In this section, we discuss the different architecture patterns used in the solution.

Serverless

We implemented a serverless architecture for two main reasons: speed to build and cost. With no underlying hardware to maintain or infrastructure to deploy, we focused entirely on the business logic code and nothing else. This allowed us to get a functioning prototype up and running in a matter of days. If users aren’t actively uploading pictures and listening to recordings, nothing is running, and therefore nothing is incurring costs outside of storage. An S3 lifecycle management rule deletes uploaded images and MP3 files after 1 day, so storage costs are low.
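A lifecycle rule like the one described can be expressed with Boto3 roughly as follows. This is a sketch under assumptions: the rule ID and function names are made up for illustration, and the rule applies to every object in the bucket rather than to specific prefixes.

```python
def one_day_expiration_rule(rule_id="expire-after-one-day"):
    """Build a lifecycle configuration that deletes objects after 1 day.

    `rule_id` is a hypothetical label; any name unique within the bucket works.
    """
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": ""},  # empty prefix: match every object
                "Status": "Enabled",
                "Expiration": {"Days": 1},
            }
        ]
    }


def apply_one_day_expiration(s3_client, bucket):
    """Attach the rule to `bucket` using a Boto3 S3 client."""
    s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=one_day_expiration_rule(),
    )
```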

Synchronous workflow

When you’re building serverless workflows, it’s important to understand when a synchronous call makes more sense than an asynchronous process, from both an architecture and a user experience standpoint. With Read For Me, we initially went down the asynchronous path and planned on using WebSockets to communicate bidirectionally with the front end. Our workflow would include a step to find the connection ID associated with the Step Functions workflow and, upon completion, alert the front end. For more information about this process, refer to From Poll to Push: Transform APIs using Amazon API Gateway REST APIs and WebSockets.

We ultimately chose not to do this and instead used Step Functions Express Workflows, which can be invoked synchronously. Users understand that processing an image won’t be instant, but they also know it won’t take 30 seconds or a minute. We were in a space where a few seconds was satisfactory to the end user, so we didn’t need the benefit of WebSockets. This simplified the workflow overall.
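In Read For Me the synchronous call is made by API Gateway, but the same request-and-wait behavior can be illustrated from Python with the Step Functions `StartSyncExecution` API. The sketch below is an assumption-laden illustration: the function name, the state machine ARN, and the payload shape are hypothetical, and `sfn_client` would typically be `boto3.client("stepfunctions")`.

```python
import json


def run_express_workflow(sfn_client, state_machine_arn, payload):
    """Start an Express Workflow and block until it returns its output.

    `state_machine_arn` and `payload` are placeholders supplied by the caller.
    """
    response = sfn_client.start_sync_execution(
        stateMachineArn=state_machine_arn,
        input=json.dumps(payload),
    )
    # A synchronous execution reports SUCCEEDED, FAILED, or TIMED_OUT.
    if response["status"] != "SUCCEEDED":
        raise RuntimeError(f"Workflow ended with status {response['status']}")
    # The workflow output is returned as a JSON string.
    return json.loads(response["output"])
```

Because the caller waits for the result, no connection tracking or completion callback is needed.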

Express Step Functions workflow

The ability to break out your code into smaller, isolated functions allows for fine-grained control, easier maintenance, and the ability to scale more accurately. For instance, if we determined that the Lambda function that triggered Amazon Polly to create the audio file was running slower than the function that determined the language, we could vertically scale that function, adding more memory, without having to do so for the others. Similarly, you limit the blast radius of what your Lambda function can do or access when you limit its scope and reach.

One of the benefits of orchestrating your workflow with Step Functions is the ability to introduce decision flow logic without having to write any code.

Our Step Functions workflow isn’t complex. It’s linear until the translation step. If we don’t need to call a translation Lambda function, that’s less cost to us, and a faster experience for the user. We can use the visual designer on the Step Functions console to find the specific key in the input payload and, if it’s present, call one function over the other using JSONPath. For example, our payload includes a key called translate:

Within the Step Functions visual designer, we find the translate key, and set up rules to match.
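To make the branching concrete, the sketch below shows what such a rule might look like as an Amazon States Language Choice state, written here as a Python dictionary, together with a tiny local stand-in for how it routes a payload. The state names (`TranslateText`, `SynthesizeSpeech`) and the example payloads are hypothetical; only the `translate` key comes from the description above.

```python
# Hypothetical Choice state: branch on whether "$.translate" exists in the input.
TRANSLATE_CHOICE_STATE = {
    "Type": "Choice",
    "Choices": [
        {"Variable": "$.translate", "IsPresent": True, "Next": "TranslateText"}
    ],
    "Default": "SynthesizeSpeech",
}


def next_state(choice_state, payload):
    """Mimic the routing decision locally for top-level "$.key" variables.

    This is only an illustration of the rule; Step Functions evaluates the
    real state itself and supports far more than this presence check.
    """
    for rule in choice_state["Choices"]:
        key = rule["Variable"][2:]  # strip the leading "$."
        if (key in payload) == rule["IsPresent"]:
            return rule["Next"]
    return choice_state["Default"]


# Example payloads (made up): one asks for translation, one does not.
print(next_state(TRANSLATE_CHOICE_STATE, {"text": "Hola", "translate": "en"}))
print(next_state(TRANSLATE_CHOICE_STATE, {"text": "Hello"}))
```

When the key is absent, the workflow goes straight to speech synthesis and the translation function is never invoked.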

Headless architecture

Amplify hosts the front-end code. The front end is written in React and the source code is checked into AWS CodeCommit. Amplify solves a few problems for users trying to deploy and manage static websites. If you were doing this manually (using an S3 bucket set up for static website hosting and fronting that with Amazon CloudFront), you’d have to expire the cache yourself each time you did deployments. You’d also have to write up your own CI/CD pipeline. Amplify handles this for you.

This allows for a headless architecture, where front-end code is decoupled from the backend and each layer can be managed and scaled independently of the other.

Analyze ID

In the preceding section, we discussed the architecture patterns for processing the uploaded picture and creating an MP3 file from it. Having a document read back to you is a great first step, but what if you only want to know something specific without having the whole thing read back to you? For instance, you need to fill out a form online and provide your state ID or passport number, or perhaps its expiration date. You then have to take a picture of your ID and, while having it read back to you, wait for that specific part. Alternatively, you could use Analyze ID.

Analyze ID is a feature of Amazon Textract that extracts specific fields from identity documents. Read For Me contains a drop-down menu where you can specifically ask for the expiration date, date of issue, or document number. You can use the same workflow to create an MP3 file that provides an answer to your specific question.
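A lookup of a single field might be sketched as follows. The function name and parameters are assumptions for illustration, not the project's code; `textract_client` would typically be `boto3.client("textract")`, and `field_type` is one of the normalized field names Analyze ID returns, such as `EXPIRATION_DATE`, `DATE_OF_ISSUE`, or `DOCUMENT_NUMBER`.

```python
def find_id_field(textract_client, bucket, key, field_type):
    """Return the value of one identity-document field, or None if not found.

    `bucket` and `key` are hypothetical names for the uploaded ID picture.
    """
    response = textract_client.analyze_id(
        DocumentPages=[{"S3Object": {"Bucket": bucket, "Name": key}}]
    )
    for document in response["IdentityDocuments"]:
        for field in document["IdentityDocumentFields"]:
            if field["Type"]["Text"] == field_type:
                # An empty string means the field was not found on the ID.
                return field["ValueDetection"]["Text"] or None
    return None
```

The returned string can then be passed to the same text-to-speech step as any other extracted text.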

You can demo the Analyze ID feature at readforme.io/analyze.

Additional Polly features

Read For Me offers multiple neural voices in different languages and dialects. Amazon Polly offers several other voices that we did not implement. When a new voice becomes available, an update to the front-end code and a Lambda function is all it takes to take advantage of it.

Amazon Polly also offers other options that we have yet to include in Read For Me. These include adjusting the speaking rate of the voices and generating speech marks.
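Both options could be sketched as follows. Since they are not part of Read For Me, everything here is illustrative: the function names are made up, the `polly` argument is assumed to be `boto3.client("polly")`, and the default engine and rate are arbitrary choices. The speaking rate is set through the SSML `prosody` tag, and speech marks are requested by asking for JSON output instead of audio.

```python
import json
from xml.sax.saxutils import escape


def ssml_with_rate(text, rate="slow"):
    """Wrap plain text in SSML that sets the speaking rate (e.g. "slow", "fast")."""
    # Escape &, <, and > so the text cannot break the SSML markup.
    return f'<speak><prosody rate="{rate}">{escape(text)}</prosody></speak>'


def synthesize_at_rate(polly, text, voice_id, rate="slow", engine="neural"):
    """Return MP3 bytes spoken at the requested rate."""
    response = polly.synthesize_speech(
        Text=ssml_with_rate(text, rate),
        TextType="ssml",
        OutputFormat="mp3",
        VoiceId=voice_id,
        Engine=engine,
    )
    return response["AudioStream"].read()


def word_speech_marks(polly, text, voice_id, engine="neural"):
    """Return one dict per spoken word, including its time offset in the audio."""
    response = polly.synthesize_speech(
        Text=text,
        OutputFormat="json",
        SpeechMarkTypes=["word"],
        VoiceId=voice_id,
        Engine=engine,
    )
    # Speech marks arrive as one JSON object per line.
    body = response["AudioStream"].read().decode("utf-8")
    return [json.loads(line) for line in body.splitlines() if line]
```

Speech marks could, for example, let a front end highlight each word as it is spoken.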

Conclusion

In this post, we discussed how to use numerous AWS services, including AI and serverless, to aid the visually impaired. You can learn more about the Read For Me project and use it by visiting readforme.io. You can also find Amazon Textract examples on the GitHub repo. To learn more about Analyze ID, check out Announcing support for extracting data from identity documents using Amazon Textract.

The source code for this project will be open-sourced and added to AWS’s public GitHub soon.

About the Authors

Jack Marchetti is a Senior Solutions Architect at AWS. With a background in software engineering, Jack is primarily focused on helping customers implement serverless, event-driven architectures. He built his first distributed, cloud-based application in 2013 after attending the second AWS re:Invent conference and has been hooked ever since. Prior to AWS, Jack spent the bulk of his career in the ad agency space building experiences for some of the largest brands in the world. Jack is legally blind and resides in Chicago with his wife Erin and cat Minou. He is also a screenwriter and director with a primary focus on Christmas movies and horror. View Jack’s filmography at his IMDb page.

Alak Eswaradass is a Solutions Architect at AWS based in Chicago, Illinois. She is passionate about helping customers design cloud architectures utilizing AWS services to solve business challenges. She has a Master’s degree in computer science engineering. Before joining AWS, she worked for different healthcare organizations, and she has in-depth experience architecting complex systems, technology innovation, and research. She hangs out with her daughters and explores the outdoors in her free time.

Swagat Kulkarni is a Senior Solutions Architect at AWS and an AI/ML enthusiast. He is passionate about solving real-world problems for customers with cloud native services and machine learning. Outside of work, Swagat enjoys travel, reading and meditating.
