AWS Database Migration Service (AWS DMS) is a cloud service that makes it easy to migrate relational databases, data warehouses, NoSQL databases, and other types of data stores. You can use AWS DMS to migrate your data to the AWS Cloud or between combinations of cloud and on-premises setups. With AWS DMS, you can perform one-time migrations, and you can replicate ongoing changes to keep sources and targets in sync.

At a high level, when using AWS DMS, you do the following:

Create a replication instance

Create source and target endpoints that have connection information about your data stores

Create one or more migration tasks to migrate data between the source and target data stores

When configuring your endpoints, you may find that you need connection settings to change dynamically based on context, such as when the task runs. For example, for full load migration tasks that load data into Amazon Simple Storage Service (Amazon S3), you may want the target path in your S3 bucket to reflect the load date so that you keep a full history of data loads. AWS DMS doesn’t support this natively for full load tasks, but you can modify the target S3 endpoint with the appropriate target path before running the migration task so that the data is partitioned by date in the S3 bucket.

In this post, we walk you through a solution for configuring a workflow that runs on a cron-like schedule, modifies an AWS DMS endpoint, and starts a full load migration task. The AWS DMS endpoint modification code in the example updates the S3 target endpoint’s BucketPath with the current date, but you can adapt it to other use cases that need dynamic modification of AWS DMS endpoints. For example, you may also want to update the prefix before every full load to make cataloging your tables easier.

Although AWS DMS doesn’t support date-based partitioning for full load tasks, it does support date-based folder partitioning for tasks that migrate existing data and replicate ongoing changes (full load and CDC) and for tasks that replicate data changes only (CDC only). For more information, refer to Using Amazon S3 as a target for AWS Database Migration Service.
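For comparison, the following Python dictionary sketches the S3 endpoint settings that turn on date-based folder partitioning for a task that replicates ongoing changes. The key names DatePartitionEnabled, DatePartitionSequence, and DatePartitionDelimiter belong to the DMS S3Settings structure as we understand it; the bucket name and folder are hypothetical placeholders, so check the AWS DMS documentation before relying on these values.

```python
# Hypothetical S3Settings for a full load and CDC (or CDC-only) task.
# The bucket name and folder below are placeholders, not values from this post.
cdc_s3_settings = {
    "BucketName": "my-dms-target-bucket",  # hypothetical bucket
    "BucketFolder": "cdc",                 # hypothetical top-level folder
    "DatePartitionEnabled": True,          # write CDC files into date-based folders
    "DatePartitionSequence": "YYYYMMDD",   # order of the date parts: year, month, day
    "DatePartitionDelimiter": "SLASH",     # separate date parts with "/" (e.g. 2024/01/15)
}
```

A dictionary like this could be passed as the S3Settings argument of the DMS ModifyEndpoint call. Because these settings don’t cover full load-only tasks, the workflow in this post rewrites the endpoint’s path itself.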

Solution walkthrough

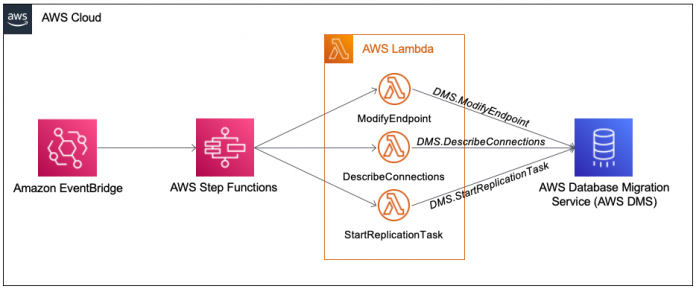

The following diagram shows the high-level architecture of the solution. We use the following AWS services:

AWS DMS – Contains the configuration for the migration components (such as endpoints and tasks) and compute environment

Amazon EventBridge – Triggers the workflow to run on a cron-like schedule

AWS Lambda – Provides the serverless compute environment for running AWS SDK calls

AWS Step Functions – Orchestrates the workflow steps

A Step Functions state machine orchestrates the invocation of three Lambda functions: ModifyEndpoint, DescribeConnections, and StartReplicationTask. The purpose of each Lambda function is as follows:

ModifyEndpoint – Runs the DMS.ModifyEndpoint SDK call. This function contains the logic for modifying the endpoint according to our requirements. In this example, we modify the target S3 endpoint’s BucketPath to contain the current date to achieve date partitioning in our target S3 bucket.
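The following is a minimal Python sketch of what a ModifyEndpoint function could look like; it is not the code shipped in the CloudFormation template. The post calls the target setting BucketPath; in the S3Settings structure of the DMS API, we assume the folder prefix is set through the BucketFolder key. The environment variable names S3_ENDPOINT_ARN and BASE_BUCKET_FOLDER and the YYYY/MM/DD folder layout are our own assumptions.

```python
import datetime
import os


def dated_bucket_folder(base_folder, day):
    """Append year/month/day to a base folder, e.g. 'exports' -> 'exports/2024/01/15'."""
    base = base_folder.strip("/")
    date_part = day.strftime("%Y/%m/%d")
    return f"{base}/{date_part}" if base else date_part


def lambda_handler(event, context, dms_client=None):
    """Point the S3 target endpoint at a folder named for today's UTC date.

    S3_ENDPOINT_ARN and BASE_BUCKET_FOLDER are hypothetical environment variables.
    dms_client can be injected for testing; otherwise a boto3 DMS client is created.
    """
    if dms_client is None:
        import boto3  # imported lazily so the folder logic runs without AWS access
        dms_client = boto3.client("dms")

    endpoint_arn = os.environ["S3_ENDPOINT_ARN"]
    today = datetime.datetime.now(datetime.timezone.utc).date()
    folder = dated_bucket_folder(os.environ.get("BASE_BUCKET_FOLDER", ""), today)

    # With ExactSettings left at its default, DMS updates only the settings named
    # here and keeps the endpoint's other S3 settings.
    dms_client.modify_endpoint(
        EndpointArn=endpoint_arn,
        S3Settings={"BucketFolder": folder},
    )
    return {"endpointArn": endpoint_arn, "bucketFolder": folder}
```

Keeping the base folder in an environment variable, rather than reading the endpoint’s current folder and appending to it, avoids stacking dates on top of each other after the first run (for example, exports/2024/01/15/2024/01/16).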

DescribeConnections – Runs the DMS.DescribeConnections SDK call. The DMS.ModifyEndpoint SDK call made in the ModifyEndpoint function triggers an automatic connection test on the modified endpoint, and a task that references that endpoint can’t be started until the connection test is successful. This function simply checks the target endpoint’s connection status.
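A DescribeConnections function could be sketched as follows. It filters the DMS DescribeConnections call by endpoint ARN and returns the status string for the state machine to branch on. The S3_ENDPOINT_ARN variable is hypothetical, and treating a missing connection record as still testing is our own design choice.

```python
import os


def lambda_handler(event, context, dms_client=None):
    """Return the connection-test status of the S3 target endpoint.

    The state machine branches on the returned "status" value
    (for example testing, successful, failed, or deleting).
    S3_ENDPOINT_ARN is a hypothetical environment variable.
    """
    if dms_client is None:
        import boto3  # imported lazily so the function can be tested with a fake client
        dms_client = boto3.client("dms")

    response = dms_client.describe_connections(
        Filters=[{"Name": "endpoint-arn", "Values": [os.environ["S3_ENDPOINT_ARN"]]}]
    )
    connections = response.get("Connections", [])
    if not connections:
        # No connection record yet: treat it like a test that is still running.
        return {"status": "testing"}
    # Assumes one replication instance per endpoint; only the first record is read.
    return {"status": connections[0]["Status"]}
```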

StartReplicationTask – Runs the DMS.StartReplicationTask SDK call. This function kicks off the data migration process by starting the AWS DMS task with the updated endpoint.
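Here is a sketch of a StartReplicationTask function. It assumes a hypothetical DMS_TASK_ARN environment variable and uses the reload-target start type, on the assumption that the full load task has already run at least once (as the prerequisites suggest); a task that has never run would use start-replication instead.

```python
import os


def lambda_handler(event, context, dms_client=None):
    """Start the full load task after the endpoint connection test succeeds.

    DMS_TASK_ARN is a hypothetical environment variable. "reload-target" assumes
    the task has completed before; use "start-replication" for a task's first run.
    """
    if dms_client is None:
        import boto3  # imported lazily so the function can be tested with a fake client
        dms_client = boto3.client("dms")

    response = dms_client.start_replication_task(
        ReplicationTaskArn=os.environ["DMS_TASK_ARN"],
        StartReplicationTaskType="reload-target",
    )
    # Report the task status DMS returns (typically "starting" right after the call).
    return {"taskStatus": response["ReplicationTask"]["Status"]}
```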

The state machine orchestrates the invocation of these functions and handles retries and waits when necessary. The following image shows a visual representation of the workflow.

The state machine includes a step that waits 60 seconds after invoking the ModifyEndpoint Lambda function, because the automatic connection test can take 30–40 seconds to succeed. You could lower this wait time, but doing so could result in additional invocations of the DescribeConnections function.

Depending on the outcome of DescribeConnections, the state machine takes different actions:

If the function returns a testing status, the state machine returns to the 60-second wait step to retry until a successful or failure status is returned

If the function returns a successful status, the state machine advances to the StartReplicationTask Lambda function invocation

If the function returns a failure status (failed or deleting), the state machine fails immediately and the StartReplicationTask function never gets invoked
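The branching described above can be expressed in Amazon States Language (ASL). The following Python function builds one possible definition as a dictionary that serializes to JSON. The state names, the retry settings, and the assumption that DescribeConnections returns an object with a status field are ours, so the template’s actual definition may differ.

```python
import json


def build_definition(modify_arn, describe_arn, start_arn, wait_seconds=60):
    """Build a hypothetical ASL definition for the DMS endpoint workflow."""
    # Retry transient Lambda service errors with exponential backoff.
    lambda_retry = [{
        "ErrorEquals": ["Lambda.ServiceException", "Lambda.TooManyRequestsException"],
        "IntervalSeconds": 2,
        "MaxAttempts": 3,
        "BackoffRate": 2.0,
    }]
    return {
        "StartAt": "ModifyEndpoint",
        "States": {
            "ModifyEndpoint": {"Type": "Task", "Resource": modify_arn,
                               "Retry": lambda_retry, "Next": "WaitForConnectionTest"},
            "WaitForConnectionTest": {"Type": "Wait", "Seconds": wait_seconds,
                                      "Next": "DescribeConnections"},
            "DescribeConnections": {"Type": "Task", "Resource": describe_arn,
                                    "Retry": lambda_retry, "Next": "CheckConnectionStatus"},
            "CheckConnectionStatus": {
                "Type": "Choice",
                "Choices": [
                    # Still testing: loop back to the wait step.
                    {"Variable": "$.status", "StringEquals": "testing",
                     "Next": "WaitForConnectionTest"},
                    # Test passed: start the DMS task.
                    {"Variable": "$.status", "StringEquals": "successful",
                     "Next": "StartReplicationTask"},
                ],
                # failed, deleting, or any unexpected value ends the run.
                "Default": "ConnectionTestFailed",
            },
            "StartReplicationTask": {"Type": "Task", "Resource": start_arn,
                                     "Retry": lambda_retry, "End": True},
            "ConnectionTestFailed": {"Type": "Fail", "Error": "ConnectionTestFailed",
                                     "Cause": "The DMS endpoint connection test did not succeed."},
        },
    }


if __name__ == "__main__":
    # Placeholder ARNs; replace them with your Lambda function ARNs.
    print(json.dumps(build_definition("arn:modify", "arn:describe", "arn:start"), indent=2))
```

Routing everything other than testing or successful to the Fail state covers failed and deleting, and it also stops the run on an unexpected value instead of looping.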

After the state machine has been deployed and AWS DMS has completed multiple full loads of the database to Amazon S3, you’ll have an S3 path for each export with the year, month, and day of that load appended. The following screenshot shows that we completed two full loads of our database, and that the AWS DMS endpoint was modified for each respective day.

Prerequisites

Before you get started, you should have the following in place:

An AWS account with access to create EventBridge rules, Step Functions state machines, Lambda functions, and AWS Identity and Access Management (IAM) roles

A working full load AWS DMS task with an S3 target endpoint

Deploy the solution in your AWS account

You can deploy the solution to your AWS account via AWS CloudFormation. Complete the following steps to deploy the CloudFormation template:

Choose Launch Stack.

For Stack name, enter a name, such as dms-endpoint-mod-stack.

For DMSTask, enter the ARN of the AWS DMS replication task to start after modifying the S3 target endpoint. You can find the ARN on the AWS DMS console. Choose Database migration tasks in the navigation pane and open the task to view its details.

For EventRuleScheduleExpression, enter the schedule on which the DMS task should start. The default is daily at 2:00 AM UTC, but you can change it to meet your needs. See the EventBridge documentation for the schedule expression syntax.
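EventBridge schedule expressions use either rate() or cron() syntax, and cron expressions are evaluated in UTC. The helper below is a hypothetical convenience that builds a once-a-day cron expression; a daily 2:00 AM schedule, for example, is cron(0 2 * * ? *). We haven’t confirmed that this is the literal default string in the template.

```python
def daily_cron(hour_utc, minute=0):
    """Build an EventBridge cron expression that fires once a day at hour_utc:minute (UTC).

    Fields: minutes hours day-of-month month day-of-week year. EventBridge requires
    '?' in either day-of-month or day-of-week, so '?' goes in day-of-week here.
    """
    if not (0 <= hour_utc <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute 0-59")
    return f"cron({minute} {hour_utc} * * ? *)"
```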

For S3Endpoint, enter the ARN of the S3 target endpoint to modify dynamically. To locate the ARN, on the AWS DMS console, choose Endpoints and then open the target endpoint you want to modify. After you fill out all the fields, the Specify stack details page should look similar to the following screenshot.

Choose Next.

Enter any tags you want to assign to the stack.

Choose Next.

Verify the parameters are correct and choose Create stack.

The stack should take 3–5 minutes to deploy.
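If you prefer to deploy from code rather than the console, the steps above map onto the CloudFormation CreateStack API. This sketch takes an injected boto3-style CloudFormation client. The template URL is a hypothetical argument (the real one sits behind the Launch Stack button), and we pass IAM capabilities on the assumption that the template creates IAM roles, which CloudFormation must be told to allow.

```python
def create_dms_endpoint_stack(cfn_client, template_url, task_arn, endpoint_arn,
                              schedule="cron(0 2 * * ? *)",
                              stack_name="dms-endpoint-mod-stack"):
    """Create the stack with the DMSTask, EventRuleScheduleExpression, and S3Endpoint parameters.

    template_url is hypothetical; schedule defaults to daily at 2:00 AM UTC.
    """
    response = cfn_client.create_stack(
        StackName=stack_name,
        TemplateURL=template_url,
        Parameters=[
            {"ParameterKey": "DMSTask", "ParameterValue": task_arn},
            {"ParameterKey": "EventRuleScheduleExpression", "ParameterValue": schedule},
            {"ParameterKey": "S3Endpoint", "ParameterValue": endpoint_arn},
        ],
        # Acknowledge that the template may create IAM resources.
        Capabilities=["CAPABILITY_IAM", "CAPABILITY_NAMED_IAM"],
    )
    return response["StackId"]
```

With a real client from boto3.client("cloudformation"), you can block until deployment finishes with cfn.get_waiter("stack_create_complete").wait(StackName="dms-endpoint-mod-stack").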

After the stack deployment is complete, the Step Functions state machine that orchestrates the workflow runs at the next scheduled time, according to the supplied EventBridge schedule expression. Alternatively, you can run it manually: on the Step Functions console, choose the state machine whose name begins with TaskWorkflowStateMachine, and then choose Start execution.
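Starting the state machine manually can also be scripted. The sketch below looks up the state machine whose name begins with TaskWorkflowStateMachine and starts an execution with an empty JSON input. The function names are hypothetical, and the empty input assumes the workflow doesn’t need any execution input.

```python
import json


def find_state_machine_arn(sfn_client, prefix="TaskWorkflowStateMachine"):
    """Return the ARN of the first state machine whose name starts with prefix."""
    kwargs = {}
    while True:
        page = sfn_client.list_state_machines(**kwargs)
        for machine in page["stateMachines"]:
            if machine["name"].startswith(prefix):
                return machine["stateMachineArn"]
        token = page.get("nextToken")
        if not token:
            raise LookupError(f"no state machine starting with {prefix!r}")
        kwargs = {"nextToken": token}  # fetch the next page of results


def start_workflow_now(sfn_client, state_machine_arn, payload=None):
    """Start one execution outside the EventBridge schedule and return its ARN."""
    response = sfn_client.start_execution(
        stateMachineArn=state_machine_arn,
        input=json.dumps(payload or {}),
    )
    return response["executionArn"]
```

With a real client from boto3.client("stepfunctions"), start_workflow_now(sfn, find_state_machine_arn(sfn)) is the scripted equivalent of choosing Start execution on the console.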

Cost of solution and cleanup

Refer to the pricing pages for EventBridge, Step Functions, and Lambda to estimate the cost of this solution for your own use case. The AWS Pricing Calculator can also help you estimate the cost of a solution.

Deploying the CloudFormation template is free, but you’re charged for the resources that the template deploys. To avoid incurring extra cost, delete the CloudFormation stack you deployed when you’re done with this post.

Summary

In this post, we covered how to dynamically modify an AWS DMS endpoint for full load AWS DMS tasks using EventBridge, Step Functions, and Lambda. We also provided a CloudFormation template that you can modify and deploy for your own workloads and use cases, like date-based folder partitioning for AWS DMS full load tasks.

For more information about the services in this solution, refer to the AWS DMS User Guide and AWS Step Functions User Guide. Additionally, please leave a comment with any questions or feedback!

About the Authors

Jeff Gardner is a Solutions Architect with Amazon Web Services (AWS). In his role, Jeff helps enterprise customers through their cloud journey, leveraging his experience with application architecture and DevOps practices. Outside of work, Jeff enjoys watching and playing sports and chasing around his three young children.

Michael Hamilton is an Analytics Specialist Solutions Architect who enjoys working with customers to solve their complex needs when it comes to data on AWS. He enjoys spending time with his wife and kids outside of work and has recently taken up mountain biking!
