As machine learning (ML) goes mainstream and gains wider adoption, ML-powered applications are increasingly used to solve a range of complex business problems. Solving these problems often requires multiple ML models. These models can be combined sequentially to perform tasks such as preprocessing, data transformation, model selection, inference generation, inference consolidation, and postprocessing. Organizations need flexible options to orchestrate these complex ML workflows. Serial inference pipelines are one design pattern for arranging these workflows into a series of steps, with each step enriching or further processing the output of the previous step and passing its own output to the next step in the pipeline.

Additionally, these serial inference pipelines should provide the following:

Flexible and customized implementation (dependencies, algorithms, business logic, and so on)

Repeatable and consistent production implementation

Reduced undifferentiated heavy lifting through minimal infrastructure management

In this post, we look at some common use cases for serial inference pipelines and walk through some implementation options for each of these use cases using Amazon SageMaker. We also discuss considerations for each of these implementation options.

The following table summarizes the different use cases for serial inference, implementation considerations and options. These are discussed in this post.

| Use Case | Use Case Description | Primary Considerations | Overall Implementation Complexity | Recommended Implementation Options | Sample Code Artifacts and Notebooks |
| --- | --- | --- | --- | --- | --- |
| Serial inference pipeline (with preprocessing and postprocessing steps included) | Inference pipeline needs to preprocess incoming data before invoking a trained model for generating inferences, and then postprocess generated inferences, so that they can be easily consumed by downstream applications | Ease of implementation | Low | Inference container using the SageMaker Inference Toolkit | Deploy a Trained PyTorch Model |
| Serial inference pipeline (with preprocessing and postprocessing steps included) | Inference pipeline needs to preprocess incoming data before invoking a trained model for generating inferences, and then postprocess generated inferences, so that they can be easily consumed by downstream applications | Decoupling, simplified deployment, and upgrades | Medium | SageMaker inference pipeline | Inference Pipeline with Custom Containers and xgBoost |
| Serial model ensemble | Inference pipeline needs to host and arrange multiple models sequentially, so that each model enhances the inference generated by the previous one, before generating the final inference | Decoupling, simplified deployment and upgrades, flexibility in model framework selection | Medium | SageMaker inference pipeline | Inference Pipeline with Scikit-learn and Linear Learner |
| Serial inference pipeline (with targeted model invocation from a group) | Inference pipeline needs to invoke a specific customized model from a group of deployed models, based on request characteristics or for cost-optimization, in addition to preprocessing and postprocessing tasks | Cost-optimization and customization | High | SageMaker inference pipeline with multi-model endpoints (MMEs) | Amazon SageMaker Multi-Model Endpoints using Linear Learner |

In the following sections, we discuss each use case in more detail.

Serial inference pipeline using inference containers

Serial inference pipeline use cases often need to preprocess incoming data before invoking a pre-trained ML model to generate inferences. Additionally, in some cases, the generated inferences need further processing so that downstream applications can easily consume them. This is a common scenario when a streaming data source must be processed in real time before a model can be applied to it. However, this use case can arise for batch inference as well.

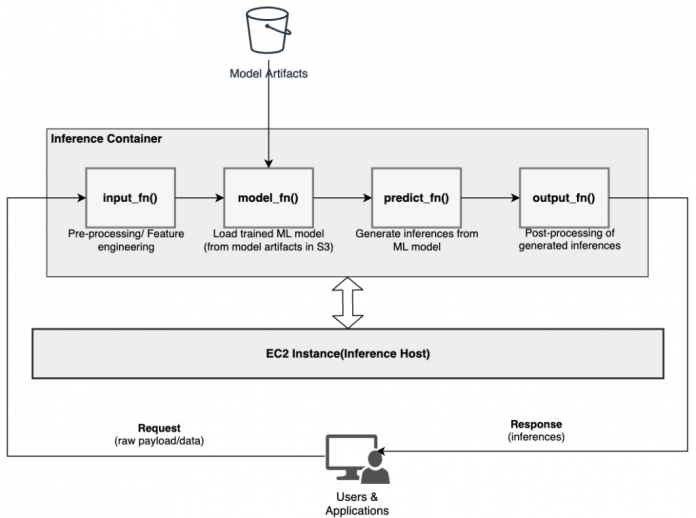

SageMaker provides an option to customize inference containers and use them to build a serial inference pipeline. Inference containers use the SageMaker Inference Toolkit and are built on SageMaker Multi Model Server (MMS), which provides a flexible mechanism to serve ML models. The following diagram illustrates a reference pattern of how to implement a serial inference pipeline using inference containers.

SageMaker MMS expects a Python script that implements the following functions to load the model, preprocess input data, get predictions from the model, and postprocess the output data:

input_fn() – Responsible for deserializing and preprocessing the input data

model_fn() – Responsible for loading the trained model from artifacts in Amazon Simple Storage Service (Amazon S3)

predict_fn() – Responsible for generating inferences from the model

output_fn() – Responsible for serializing and postprocessing the output data (inferences)

For detailed steps to customize an inference container, refer to Adapting Your Own Inference Container.
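The four handler functions listed above can be sketched as a single script. This is a minimal illustration rather than a production handler: the signatures follow the convention commonly used by SageMaker framework containers and may differ by container version, and the `model.json` artifact, the scaling step, and the 0.5 threshold are hypothetical stand-ins for a real model and real business logic.

```python
import json
import os


def model_fn(model_dir):
    """Load the trained model from the directory holding the unpacked artifacts."""
    # Hypothetical artifact: a JSON file with linear-model weights and a bias.
    with open(os.path.join(model_dir, "model.json")) as f:
        return json.load(f)


def input_fn(request_body, request_content_type):
    """Deserialize the request body and preprocess the features."""
    if request_content_type != "application/json":
        raise ValueError(f"Unsupported content type: {request_content_type}")
    features = json.loads(request_body)["features"]
    # Toy preprocessing step: scale raw values from [0, 100] into [0, 1].
    return [value / 100.0 for value in features]


def predict_fn(input_data, model):
    """Generate an inference (a weighted sum) from the preprocessed features."""
    weighted = sum(w * x for w, x in zip(model["weights"], input_data))
    return weighted + model["bias"]


def output_fn(prediction, accept):
    """Postprocess the raw score into a label and serialize the response."""
    if accept != "application/json":
        raise ValueError(f"Unsupported accept type: {accept}")
    label = "positive" if prediction >= 0.5 else "negative"
    return json.dumps({"score": round(prediction, 4), "label": label})
```

Because all four steps live in one script inside one container, a request passes through `input_fn`, `predict_fn`, and `output_fn` without leaving the process.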

Inference containers are an ideal design pattern for serial inference pipeline use cases with the following primary considerations:

High cohesion – The processing logic and corresponding model drive single business functionality and need to be co-located

Low overall latency – The elapsed time between when an inference request is made and when the response is received needs to be kept low

In a serial inference pipeline built this way, the processing logic and model are encapsulated within a single container, so most of the invocation calls remain within the container. This reduces the overall number of hops, which improves the overall latency and responsiveness of the pipeline.

Also, for use cases where ease of implementation is an important criterion, inference containers can help, because the various processing steps of the pipeline are co-located within the same container.

Serial inference pipeline using a SageMaker inference pipeline

Another variation of the serial inference pipeline use case requires clearer decoupling between the various steps in the pipeline (such as data preprocessing, inference generation, data postprocessing, and formatting and serialization). This could be due to a variety of reasons:

Decoupling – Various steps of the pipeline have a clearly defined purpose and need to be run on separate containers due to the underlying dependencies involved. This also helps keep the pipeline well structured.

Frameworks – Various steps of the pipeline use specific fit-for-purpose frameworks (such as scikit-learn or Spark ML) and therefore need to be run on separate containers.

Resource isolation – Various steps of the pipeline have varying resource consumption requirements and therefore need to be run on separate containers for more flexibility and control.

Furthermore, slightly more complex serial inference pipelines may involve multiple steps to process a request and generate an inference. Therefore, from an operational standpoint, it may be beneficial to host these steps on separate containers for better functional isolation and to facilitate easier upgrades and enhancements (change one step without impacting other models or processing steps).

If your use case aligns with some of these considerations, a SageMaker inference pipeline provides an easy and flexible option to build a serial inference pipeline. The following diagram illustrates a reference pattern of how to implement a serial inference pipeline using multiple steps hosted on dedicated containers using a SageMaker inference pipeline.

A SageMaker inference pipeline consists of a linear sequence of 2–15 containers that process requests for inferences on data. The inference pipeline provides the option to use pre-trained SageMaker built-in algorithms or custom algorithms packaged in Docker containers. The containers are hosted on the same underlying instance, which helps reduce the overall latency and minimize cost.

Multiple processing steps and models can be combined to create a serial inference pipeline in three steps.

First, we build and specify the Spark ML and XGBoost-based models that we intend to use as part of the pipeline.

Next, the models are arranged sequentially within the pipeline model definition.

Finally, the inference pipeline is deployed behind an endpoint for real-time inference by specifying the type and number of host ML instances.
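The build, arrange, and deploy steps above can be sketched with request payloads shaped like the SageMaker `CreateModel`, `CreateEndpointConfig`, and `CreateEndpoint` API calls. This is an illustrative sketch, not the post's original code: the helper names, the `image` and `model_data_url` keys, and the `-config` and `-ep` name suffixes are assumptions made for the example. The client is passed in so that the logic can be exercised without AWS credentials.

```python
def build_pipeline_model_request(model_name, role_arn, containers):
    """Build a CreateModel request; containers run in the order listed."""
    if not 2 <= len(containers) <= 15:
        raise ValueError("an inference pipeline needs between 2 and 15 containers")
    return {
        "ModelName": model_name,
        "ExecutionRoleArn": role_arn,
        "Containers": [
            {"Image": c["image"], "ModelDataUrl": c["model_data_url"]}
            for c in containers
        ],
    }


def build_endpoint_config_request(config_name, model_name, instance_type, instance_count):
    """Build a CreateEndpointConfig request with a single production variant."""
    return {
        "EndpointConfigName": config_name,
        "ProductionVariants": [
            {
                "VariantName": "AllTraffic",
                "ModelName": model_name,
                "InstanceType": instance_type,
                "InitialInstanceCount": instance_count,
            }
        ],
    }


def deploy_pipeline(sm_client, name, role_arn, containers, instance_type, instance_count):
    """Create the pipeline model, its endpoint config, and the endpoint."""
    config_name = name + "-config"      # hypothetical naming scheme
    endpoint_name = name + "-ep"        # hypothetical naming scheme
    sm_client.create_model(**build_pipeline_model_request(name, role_arn, containers))
    sm_client.create_endpoint_config(
        **build_endpoint_config_request(config_name, name, instance_type, instance_count)
    )
    sm_client.create_endpoint(EndpointName=endpoint_name, EndpointConfigName=config_name)
    return endpoint_name
```

With a real account, `sm_client` would typically be `boto3.client("sagemaker")`, and the container list would hold the Spark ML serving image first and the XGBoost image second, each with its own model artifact in Amazon S3.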

The entire assembled inference pipeline can be considered a SageMaker model that you can use to make real-time predictions or to run batch transforms directly, without any external preprocessing. Within an inference pipeline model, SageMaker handles invocations as a sequence of HTTP requests originating from an external application. The first container in the pipeline handles the initial request, performs some processing, and then dispatches the intermediate response as a request to the second container in the pipeline. This happens for each container in the pipeline, and finally SageMaker returns the final response to the calling client application.
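The request flow described above can be illustrated with a small local simulation in which each container is a function that accepts a request body and returns a response body. This only models the chaining behavior; the three handlers, the CSV input, and the threshold of 5 are hypothetical, and no SageMaker API is involved.

```python
import json


def preprocess_container(body: bytes) -> bytes:
    """First container: parse a CSV row into a JSON list of floats."""
    values = [float(v) for v in body.decode("utf-8").split(",")]
    return json.dumps(values).encode("utf-8")


def model_container(body: bytes) -> bytes:
    """Second container: a toy 'model' that sums the features."""
    features = json.loads(body)
    return json.dumps({"prediction": sum(features)}).encode("utf-8")


def postprocess_container(body: bytes) -> bytes:
    """Third container: attach a label for downstream consumers."""
    result = json.loads(body)
    result["label"] = "high" if result["prediction"] > 5 else "low"
    return json.dumps(result).encode("utf-8")


def invoke_pipeline(containers, request_body: bytes) -> bytes:
    """Pass each container's response to the next container as its request."""
    payload = request_body
    for handler in containers:
        payload = handler(payload)
    return payload


pipeline = [preprocess_container, model_container, postprocess_container]
response = invoke_pipeline(pipeline, b"3,4")
```

The client sees only the first request and the last response; the intermediate payloads stay between the containers.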

SageMaker inference pipelines are fully managed. When the pipeline is deployed, SageMaker installs and runs all the defined containers on each of the Amazon Elastic Compute Cloud (Amazon EC2) instances provisioned as part of the endpoint or batch transform job. Furthermore, because the containers are co-located and hosted on the same EC2 instance, the overall pipeline latency is reduced.

Serial model ensemble using a SageMaker inference pipeline

An ensemble model is an approach in ML where multiple ML models are combined and used as part of the inference process to generate final inferences. The motivations for ensemble models could include improving accuracy, reducing model sensitivity to specific input features, and reducing single model bias, among others. In this post, we focus on the use cases related to a serial model ensemble, where multiple ML models are sequentially combined as part of a serial inference pipeline.

Let’s consider a specific example related to a serial model ensemble where we need to group a user’s uploaded images based on certain themes or topics. This pipeline could consist of three ML models:

Model 1 – Accepts an image as input and evaluates image quality based on image resolution, orientation, and more. This model then attempts to upscale the image quality and sends the processed images that meet a certain quality threshold to the next model (Model 2).

Model 2 – Accepts images validated through Model 1 and performs image recognition to identify objects, places, people, text, and other custom actions and concepts in images. The output from Model 2 that contains identified objects is sent to Model 3.

Model 3 – Accepts the output from Model 2 and performs natural language processing (NLP) tasks such as topic modeling for grouping images together based on themes. For example, images could be grouped based on location or people identified. The output (groupings) is sent back to the client application.

The following diagram illustrates a reference pattern of how to implement multiple ML models hosted on a serial model ensemble using a SageMaker inference pipeline.

As discussed earlier, the SageMaker inference pipeline is managed, which enables you to focus on the ML model selection and development, while reducing the undifferentiated heavy lifting associated with building the serial ensemble pipeline.

Additionally, some of the considerations discussed earlier around decoupling, algorithm and framework choice for model development, and deployment are relevant here as well. For instance, because each model is hosted on a separate container, you have flexibility in selecting the ML framework that best fits each model and your overall use case. Furthermore, from a decoupling and operational standpoint, you can continue to upgrade or modify individual steps much more easily, without affecting other models.

The SageMaker inference pipeline is also integrated with the SageMaker model registry for model cataloging, versioning, metadata management, and governed deployment to production environments to support consistent operational best practices. The SageMaker inference pipeline is also integrated with Amazon CloudWatch to enable monitoring the multi-container models in inference pipelines. You can also get visibility into real-time metrics to better understand invocations and latency for each container in the pipeline, which helps with troubleshooting and resource optimization.
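As a rough sketch of how per-container latency might be queried, the following builds a request shaped like the CloudWatch `GetMetricStatistics` API. The `ContainerLatency` metric name and the `EndpointName`, `VariantName`, and `ContainerName` dimensions are assumptions based on the SageMaker CloudWatch metrics documentation and should be verified against your account; the helper name and the five-minute period are illustrative choices.

```python
from datetime import datetime, timedelta, timezone


def build_container_latency_query(endpoint_name, variant_name, container_name,
                                  end_time, minutes=60):
    """Build a GetMetricStatistics request for one container in a pipeline."""
    return {
        "Namespace": "AWS/SageMaker",
        "MetricName": "ContainerLatency",  # assumed metric name; verify in the docs
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
            {"Name": "ContainerName", "Value": container_name},
        ],
        "StartTime": end_time - timedelta(minutes=minutes),
        "EndTime": end_time,
        "Period": 300,  # seconds per data point
        "Statistics": ["Average", "Maximum"],
    }


query = build_container_latency_query(
    "serial-inference-ep",      # hypothetical endpoint name
    "AllTraffic",
    "container-1",              # hypothetical container name
    end_time=datetime(2024, 1, 1, tzinfo=timezone.utc),
    minutes=30,
)
```

The resulting dictionary could be passed to `boto3.client("cloudwatch").get_metric_statistics(**query)`. Comparing the values across containers shows which step dominates end-to-end latency.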

Serial inference pipeline (with targeted model invocation from a group) using a SageMaker inference pipeline

SageMaker multi-model endpoints (MMEs) provide a cost-effective solution to deploy a large number of ML models behind a single endpoint. The motivations for using multi-model endpoints could include invoking a specific customized model based on request characteristics (such as origin, geographic location, user personalization, and so on) or simply hosting multiple models behind the same endpoint to achieve cost-optimization.

When you deploy multiple models on a single multi-model enabled endpoint, all models share the compute resources and the model serving container. The SageMaker inference pipeline can be deployed on an MME, where one of the containers in the pipeline can dynamically serve requests based on the specific model being invoked. From a pipeline perspective, the models have identical preprocessing requirements and expect the same feature set, but are trained to align to a specific behavior. The following diagram illustrates a reference pattern of how this integrated pipeline would work.

With MMEs, the inference request that originates from the client application should specify the target model that needs to be invoked. The first container in the pipeline handles the initial request, performs some processing, and then dispatches the intermediate response as a request to the second container in the pipeline, which hosts multiple models. Based on the target model specified in the inference request, the model is invoked to generate an inference. The generated inference is sent to the next container in the pipeline for further processing. This happens for each subsequent container in the pipeline, and finally SageMaker returns the final response to the calling client application.
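A minimal sketch of how a client might select the target model follows. The request shape mirrors the SageMaker runtime `InvokeEndpoint` call, whose `TargetModel` parameter names the model artifact to invoke. The region-to-artifact mapping, the artifact file names, and the helper names are hypothetical.

```python
# Hypothetical mapping from a request characteristic (region) to a model artifact.
MODEL_BY_REGION = {
    "us": "model-us.tar.gz",
    "eu": "model-eu.tar.gz",
}
DEFAULT_MODEL = "model-global.tar.gz"


def pick_target_model(region):
    """Choose the customized model for a region, falling back to a default."""
    return MODEL_BY_REGION.get(region, DEFAULT_MODEL)


def build_invoke_request(endpoint_name, region, payload, content_type="text/csv"):
    """Build an InvokeEndpoint request that targets one model on the MME."""
    return {
        "EndpointName": endpoint_name,
        "ContentType": content_type,
        "TargetModel": pick_target_model(region),
        "Body": payload,
    }


def invoke(runtime_client, endpoint_name, region, payload):
    """Send the request through a client that exposes invoke_endpoint()."""
    return runtime_client.invoke_endpoint(
        **build_invoke_request(endpoint_name, region, payload)
    )
```

With a real endpoint, `runtime_client` would typically be `boto3.client("sagemaker-runtime")`. Because every model shares the same preprocessing container, only the `TargetModel` value changes between requests.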

Multiple model artifacts are persisted in an S3 bucket. When a specific model is invoked, SageMaker dynamically loads it onto the container hosting the endpoint. If the model is already loaded in the container’s memory, invocation is faster because SageMaker doesn’t need to download the model from Amazon S3. If instance memory utilization is high and a new model is invoked and therefore needs to be loaded, unused models are unloaded from memory. However, the unloaded models remain on the instance’s storage volume and can be loaded into the container’s memory again later without being downloaded from the S3 bucket.

One of the key considerations when using MMEs is understanding model invocation latency behavior. As discussed earlier, models are dynamically loaded into the memory of the container on the instance hosting the endpoint when invoked. Therefore, a model invocation may take longer the first time. When the model is already in the container’s memory, subsequent invocations are faster. If the instance’s memory utilization is high and a new model needs to be loaded, unused models are unloaded. If the instance’s storage volume is full, unused models are deleted from the storage volume. SageMaker fully manages the loading and unloading of models, without you having to take any specific action. However, it’s important to understand this behavior because it affects model invocation latency and therefore overall end-to-end latency.
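The latency behavior can be illustrated with a toy simulation of the two caching tiers. This is not how SageMaker is implemented internally: the least-recently-used eviction order, the slot-based capacity model, and the class name are assumptions made for the example. The source text only states that unused models are unloaded from memory or deleted from storage.

```python
from collections import OrderedDict


class ModelCacheSim:
    """Simulate where a model is served from: memory, local disk, or S3."""

    def __init__(self, memory_slots, disk_slots):
        if disk_slots < memory_slots:
            raise ValueError("disk must hold at least as many models as memory")
        self.memory_slots = memory_slots
        self.disk_slots = disk_slots
        self.memory = OrderedDict()  # least recently used first
        self.disk = OrderedDict()    # least recently used first

    def invoke(self, name):
        """Return the tier the model was loaded from for this invocation."""
        if name in self.memory:
            self.memory.move_to_end(name)
            self.disk.move_to_end(name)
            return "memory"  # fastest path: already loaded
        if name in self.disk:
            source = "disk"  # reload without another S3 download
            self.disk.move_to_end(name)
        else:
            source = "s3"    # slowest path: download, then load
            if len(self.disk) >= self.disk_slots:
                # Prefer deleting a model that is not currently in memory.
                victim = next((m for m in self.disk if m not in self.memory), None)
                if victim is None:
                    victim, _ = self.memory.popitem(last=False)
                del self.disk[victim]
            self.disk[name] = True
        if len(self.memory) >= self.memory_slots:
            self.memory.popitem(last=False)  # unload the least recently used model
        self.memory[name] = True
        return source


sim = ModelCacheSim(memory_slots=2, disk_slots=3)
trace = [sim.invoke(m) for m in ["a", "a", "b", "c", "a", "d", "b"]]
```

In this trace, the second call to `a` is a memory hit, the third call to `a` is served from disk after `a` was unloaded, and the final call to `b` goes back to S3 because `b` was deleted when the storage tier filled up.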

Pipeline hosting options

SageMaker provides multiple instance types for deploying ML models and building inference pipelines, so you can choose based on your use case, throughput, and cost requirements. For example, you can choose CPU- or GPU-optimized instances to build serial inference pipelines, on a single container or across multiple containers. However, some use cases require the flexibility to run models on both CPU- and GPU-based instances within the same pipeline.

You can now use NVIDIA Triton Inference Server to serve models for inference on SageMaker for heterogeneous compute requirements. Check out Deploy fast and scalable AI with NVIDIA Triton Inference Server in Amazon SageMaker for additional details.

Conclusion

As organizations discover and build new solutions powered by ML, the tools for orchestrating these pipelines should be flexible enough to support a given use case, while simplifying and reducing ongoing operational overhead. SageMaker provides multiple options to design and build these serial inference workflows, based on your requirements.

We look forward to hearing from you about what use cases you’re building using serial inference pipelines. If you have questions or feedback, please share them in the comments.

About the authors

Rahul Sharma is a Senior Solutions Architect at AWS Data Lab, helping AWS customers design and build AI/ML solutions. Prior to joining AWS, Rahul spent several years in the finance and insurance sector, helping customers build data and analytical platforms.

Anand Prakash is a Senior Solutions Architect at AWS Data Lab. Anand focuses on helping customers design and build AI/ML, data analytics, and database solutions to accelerate their path to production.

Dhawal Patel is a Principal Machine Learning Architect at AWS. He has worked with organizations ranging from large enterprises to mid-sized startups on problems related to distributed computing and artificial intelligence. He focuses on deep learning, including the NLP and computer vision domains. He helps customers achieve high-performance model inference on SageMaker.

Saurabh Trikande is a Senior Product Manager for Amazon SageMaker Inference. He is passionate about working with customers and making machine learning more accessible. In his spare time, Saurabh enjoys hiking, learning about innovative technologies, following TechCrunch and spending time with his family.
