Many companies need to maintain an audit trail to track updates to their data. For compliance and other reasons, they’re required to preserve a history of all updates and deletes made to any given record in any given database table.

For example, a foreign exchange transaction between two global banks includes a set of payment instructions between the buyer and seller. These instructions, such as the time and method of payment, can be modified several times before the transaction is finally settled. Without an audit trail, you only have a record of the final settled transaction, and no history of the changes that led up to it. In case of a dispute or compliance review, an auditor needs to see that history.

Conventional methods for creating an audit log table, such as described at this PostgreSQL wiki page, use a trigger function on the source table. However, this introduces additional performance overhead because the trigger logic executes on every insert, update and delete operation. This performance penalty may not be acceptable for a production database. Secondly, PostgreSQL database trigger functions cannot easily record the precise commit timestamp, which is the exact time that the record was committed. Precise commit timestamps are particularly important when auditing financial transactions.

In this post, I show you an alternate way to create an audit log for a table in an Amazon Aurora PostgreSQL-Compatible Edition database that eliminates the above drawbacks. This audit log is a table of timestamped records that captures the history of all updates and deletes made to the table. The solution uses AWS Database Migration Service (AWS DMS) to create a staging table, and PostgreSQL database triggers to create the final audit log table. AWS DMS can capture precise commit timestamps, and it can create a continuous audit log table at a separate database instance. Therefore, there is no performance overhead added to the source database due to running triggers there or due to processing queries used only for audit purposes.

Note that the objective here is to produce a history table. To do a more comprehensive security audit, please refer to Audit Aurora PostgreSQL databases using Database Activity Streams and pgAudit.

Solution overview

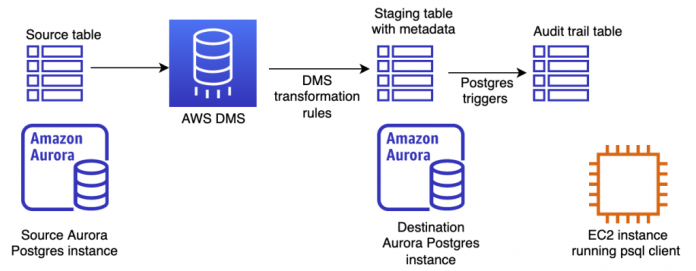

The following diagram shows the solution components. The source Aurora PostgreSQL database contains the source table with current business records.

AWS DMS transforms the source table to a staging table in the destination Aurora PostgreSQL database. AWS DMS transformation rules add metadata columns such as timestamp and operation type (insert, update, or delete) to create the staging table.

As records get created in the staging table, PostgreSQL triggers get invoked to create the final audit log table in the destination PostgreSQL database. Audit trails often need to capture the precise times at which transactions were committed in the source table. During replication, AWS DMS can record the commit times at the source table through the AR_H_COMMIT_TIMESTAMP column header. PostgreSQL triggers don’t have access to commit timestamps, so we use AWS DMS to capture them.

We also need a Linux Amazon Elastic Compute Cloud (Amazon EC2) instance to create the tables and run queries using PostgreSQL’s psql tool.

For our example use case, suppose we have a table of items for sale at our e-commerce company. Each row contains the item’s name and its current price. From time to time, prices get changed and items get removed. For audit purposes, we need to know the history of price changes and creation or removal for any given item.

The following diagram shows the schema for the items table, the staging table, and the final audit log table, which records all historical changes. AWS DMS transformation rules add audit-related metadata to the staging table. This data includes the operation type (insert, update, or delete), the commit timestamp, and the transaction ID (which helps identify atomic transactions). The PostgreSQL trigger generates the final audit log records. Each record contains the new data and the old data row (if applicable) for all updates, inserts, and deletes on the original items table.

In the following sections, we describe the steps to build the solution and deploy the audit log table.

Prerequisites

Before you begin, make sure you have a VPC with at least one public subnet and two private subnets in two different Availability Zones in your AWS account. You will need to connect using SSH, as described in the next section.

Note that this solution incurs the cost of running two Aurora PostgreSQL database instances plus a DMS replication instance.

Launch an EC2 instance to run psql

In this section, we create an EC2 instance and install the psql command line tool, which we use later to create our PostgreSQL tables and run our SQL queries.

On the Amazon EC2 console, create an SSH keypair named, for example, my-ec2-keypair.

Choose Security groups and create a new security group named postgres-audit-poc in your desired VPC.

Choose Add rule under Inbound rules.

For Type, select SSH.

For Source, choose My IP.

Choose Add rule.

This rule allows SSH access only from your IP address.

On the Amazon EC2 console, choose Launch instance.

Name the instance audit-poc.

For AMI, choose Amazon Linux.

For Instance type, choose t2.medium.

For Key pair, choose the key pair you created (my-ec2-keypair).

Choose Edit next to Network settings.

Choose the same VPC, and make sure the subnet is a public one.

Choose Select existing security group, and select the postgres-audit-poc security group you created.

Make sure Auto-assign public IP is set to Enable.

Choose Launch instance.

After the instance is in the Running state, find its public DNS name, and SSH to it using your key pair:

On your EC2 instance, look for the latest supported version of PostgreSQL:

Install the latest version shown (which, as of this writing, is PostgreSQL 13):

Test that you now have psql installed:

Create the Aurora PostgreSQL source and destination database clusters

In this section, we launch an Aurora PostgreSQL cluster with a single database instance for our items table. This is the source table for the audit log. We then launch a second single-instance database cluster for our staging and audit log tables.

On the Amazon EC2 console, choose Security Groups and create a new security group named aurora-poc-sg in the same VPC.

Choose Add rule under Inbound rules.

For Type, select PostgreSQL.

For Custom source, choose the postgres-audit-poc security group.

This allows your EC2 instance to access your database.

Create a parameter group for the source database

To enable database replication, we first need to create a new parameter group for the source database. For more information, refer to Using a PostgreSQL database as an AWS DMS source.

On the Amazon RDS console, choose Parameter groups.

Choose Create parameter group.

Choose aurora-postgresql13 (or the version you are using) for Parameter group family.

For Type, choose DB Cluster Parameter Group.

For Group name, enter a name, such as postgres-replication.

Enter a description in the Description field.

Choose Create.

Edit this parameter group by choosing Edit.

In the Parameters search field, find the rds.logical_replication parameter, and set it to 1.

Find the wal_sender_timeout parameter and set it to 0.

Choose Preview changes to preview your changes, then choose Save changes.

Create the source and destination databases

To create your source and destination databases, complete the following steps:

On the Amazon RDS console, choose Create database.

Choose the Standard create, Amazon Aurora, and PostgreSQL-Compatible Edition options.

Choose the Dev/Test option and enter source-db as your DB cluster identifier.

Leave the Master username as postgres and enter a strong password.

For instance configuration, choose Burstable classes and select the db.t3.medium instance type.

Select Don’t create an Aurora replica and then choose the same VPC as the one where the EC2 instance resides.

Select No under Public access.

For VPC Security Group, choose Choose existing and choose the aurora-poc-sg as the only security group for this database instance.

For the source database, choose Additional configuration and choose the postgres-replication DB cluster parameter group that you created earlier.

Choose Create database.

When the database status shows as Available, test that you can connect to it by first finding its endpoint name by choosing the instance souce-db-instance-1.

Copy the name under Endpoint to your clipboard.

Return to the EC2 instance where you installed psql, and enter the following:

Enter your password when prompted. If you see psql display the connection information and a postgres=> prompt, you have successfully connected to the database.

In a separate terminal window, repeat steps 1– 14 to launch a second Aurora PostgreSQL database cluster, except specify audit-log-db as your DB cluster identifier.

Create the database tables

You should now have two separate SSH terminal windows, one running psql on the source database, and one running psql on the audit log database.

In this section, we create the items table in the source database and then create the staging and audit log tables in the audit log database. We also add the trigger code for the staging table, and enable ongoing replication for the source database.

In the terminal window running psql on the source database, enter the following SQL statement at the postgres=> prompt to create an empty items table:

In the terminal window running psql on the audit log (destination) database, enter the following SQL statement to create the items_audit_log table:

Then enter the following to create the items_staging table:

Using a text editor, copy and enter the following code into a file named create-trigger.sql. This is the trigger that creates records in the items_audit_log table as new records arrive in the items_staging table.

Enter the following command in psql to run the preceding code:

We’re now ready to set up the AWS DMS replication instance, and create the AWS DMS replication tasks to replicate the source items table to the staging table.

Create the AWS DMS replication instance

To create your replication instance, complete the following steps:

On the AWS DMS console, choose Create replication instance.

Enter a name such as postgres-audit-poc-dms-instance and a description.

Choose the same VPC as the one in which you created your EC2 instance and Aurora PostgreSQL clusters.

Choose Dev or test workload (Single-AZ) under Multi-AZ.

Under Advanced security and network configuration, choose the postgres-audit-poc security group under VPC security group(s).

Leave all other choices as default, and choose Create.

Create AWS DMS endpoints

Next, we create AWS DMS endpoints. We start with an endpoint for our source database.

In the navigation pane, choose Endpoints.

Select Source endpoint and select Select RDS DB instance.

For RDS Instance, choose the instance source-db-instance-1.

For Access to endpoint database, select Provide access information manually.

In the Password field, enter the database password you specified earlier when you created the database.

Enter postgres in the Database name field.

Leave all other choices as default.

To test that the endpoint can connect to the source database, choose Run after selecting the VPC.

When the status shows as successful, choose Create endpoint.

To create an AWS DMS endpoint for our destination database, follow the same steps, but select Target endpoint, and the RDS instance should be audit-log-db-instance-1.

Create an AWS DMS migration task

To create your migration task, complete the following steps:

On the AWS DMS console, choose Database migration tasks.

Choose Create task.

Enter a task identifier, such as audit-poc-dms-task.

Choose the replication instance and the source and destination endpoints you created.

Under Migration type, select Migrate existing data and replicate ongoing changes.

Select Do Nothing under Target table preparation mode.

Select Don’t stop under Stop task after full load completes.

Under Table mappings, select JSON editor, and enter the following rules in the editor:

Select the option to run the task automatically, and choose Create task.

After some time, the task status shows as Load complete, replication ongoing.

Test with sample data

We now have our audit trail system up and running, and can test it by inserting some sample data into the items table and making sure that any updates and deletes are captured in the items_audit_log table.

In the terminal window running psql on the source database, insert some new items as follows:

In the terminal window running psql on the destination audit-log-db database, check that the items_audit_log table now has the inserted records: select * from items_audit_log;

Let’s delete the third item from our items table in the source database: delete from items where item_id=3;

The item is now gone from the items table, but the delete operation (D) remains captured in the items_audit_log table along with the old row data.

Update the price of the keyboard to $29.95:

update items set price=29.95 where item_id=2;

The price is now updated in the items table, but the items_audit_log table has a new record that shows both the new price and the old price in the old_row _data field.

Cleanup

To clean up and terminate the instances you have created above, proceed as follows.

Delete the DMS replication instance

To delete the DMS replication instance, complete the following steps:

In the AWS DMS console, choose Database migration tasks.

Select the DMS task you created, and choose Stop under Actions.

After the status shows “Stopped”, choose Delete under Actions.

Choose Replication Instances.

Select the DMS instance you created, and select Delete under Actions.

Choose Endpoints, select both the endpoints you created, and select Delete under Actions.

Delete the Aurora PostgreSQL source and destination clusters

To delete the Aurora PostgreSQL source and destination clusters, complete the following steps:

In the AWS RDS console, choose Databases.

Select the source-db-instance-1 database instance and choose Delete under Actions.

Type “delete me” to confirm deletion, and click Delete.

Repeat the above steps for the audit-log-db-instance-1 database instance.

Conclusion

In this post, we demonstrated how to create an audit trail for an Aurora PostgreSQL table using AWS DMS. Unlike conventional methods of creating PostgreSQL audit log tables, this solution does not impose a performance penalty on the source database because it avoids running database triggers there on every update operation; records the precise commit timestamps available in AWS DMS into the audit log table; and avoids burdening the source database with processing queries just for audit purposes.

You can also use this general approach for Amazon Relational Database Service (Amazon RDS) for PostgreSQL tables. If you have multiple tables requiring audit trails, you can automate these steps by using AWS CloudFormation or AWS Cloud Development Kit (AWS CDK).

About the author

Rana Dutt is a Principal Solutions Architect at Amazon Web Services. He has a background in architecting scalable software platforms for financial services, healthcare, and telecom companies, and is passionate about helping customers build on AWS.

Read MoreAWS Database Blog