Database performance monitoring is critical for application availability and productivity. A good monitoring practice can ensure a small issue is identified in time before it develops into a big problem and causes service disruption.

In the AWS Cloud, you can use analytical and monitoring tools like Amazon RDS Performance Insights and Amazon CloudWatch metrics and logs to get insights about a database’s internal and external states. This means you must visit different service pages to get a full picture of your database. You need a comprehensive view of the data collected by these tools in one place.

The second requirement is customization. Each application has different monitoring needs. A write-heavy application may focus more on write I/Os and commit latencies than a read-heavy application. A good monitoring tool should allow you to add and remove contents easily.

In the first post of this series, we showed how you can monitor Amazon Relational Database Service (Amazon RDS) for MySQL and Amazon Aurora MySQL-Compatible Edition databases by building a custom CloudWatch dashboard with database activities and its related server statistics.

In this post, we focus on building a dynamic dashboard to monitor Amazon Relational Database Service (Amazon RDS) for PostgreSQL and Amazon Aurora PostgreSQL–Compatible Edition databases.

Solution overview

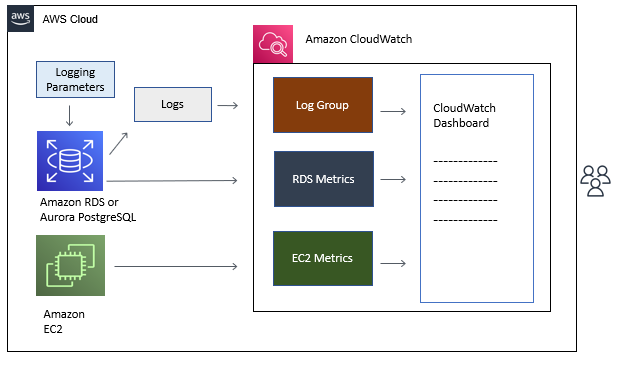

The monitoring dashboard first fetches data from CloudWatch Logs (published PostgreSQL logs) and CloudWatch metrics, then displays them as widgets by subject.

We cover the following database or application server metrics as part of this post. However, you can modify the dashboard to add the required metrics accordingly:

Top 20 slow queries

Execution plans for slow queries

Error log

Autovacuum and Autoanalyze

Deadlocks

Temporary file usage and others

Performance Insights metrics: DBLoad, DBLoadCPU, and DBLoadNonCPU

Amazon RDS server metrics: CPUUtilization, FreeableMemory, ReadIOPS, and WriteIOPS

Application server (Amazon EC2) metrics: CPUUtilization, NetworkIn, NetworkOut, and BytesRead

The following diagram shows the architecture and the technical components in use:

The solution contains the following steps:

Set parameters for the PostgreSQL database to turn on necessary logging.

Build a CloudWatch dashboard.

Customize the dashboard.

Prerequisites

Complete the following prerequisite steps:

Create an RDS for PostgreSQL database instance or an Aurora PostgreSQL cluster – Make sure you select the option to publish PostgreSQL logs to CloudWatch. Although you can view and download the logs from the Amazon RDS console, to build a CloudWatch dashboard to analyze the logs, we need to publish them to CloudWatch.

Enable Performance Insights – If you already have an RDS instance, you can enable log exports and Performance Insights by modifying the instance.

Verify the CloudWatch log group is created and logs are published to CloudWatch.

The log group name is different for an RDS for PostgreSQL database and an Aurora PostgreSQL database, as shown in the following table. You can find it on the CloudWatch console by choosing Logs and then Log groups.

Log Group

Amazon RDS for PostgreSQL

Aurora PostgreSQL

PostgreSQL log

/aws/rds/instance/<your DB identifier>/postgresql

/aws/rds/cluster/<your DB identifier>/postgresql

Note: You may want to set log retention to a non-default value for your log group to save money. You can do this by choosing Actions and Edit retention setting(s). The default is Never expire.

Tag the monitored resources – The Explorer type metrics use tag key-value pairs to filter, classify, aggregate, and group server statistics as a collection. Make a common key for the database instances in an Aurora cluster or EC2 instances that support the same application.

For example, we use a common key for the two instances in the Aurora cluster:

instance1 – Key db-name-cluster1, value db-instance1

instance2 – Key db-name-cluster1, value db-instance2

Similarly, we use a common key called name for the two application servers:

Application server1 – Key name, value app-instance1

Application server2 – Key name, value app-instance2

You can encrypt log data using AWS Key Management Service (AWS KMS). For instructions, refer to Encrypt log data in CloudWatch Logs using AWS Key Management Service.

Set parameters for the PostgreSQL database to turn on necessary logging

The PostgreSQL log is a major source of database information. Because a PostgreSQL database with default configuration doesn’t provide all we need, we must modify certain parameters to turn on relevant logging.

For example, we need the server to log all the SQL statements with a runtime greater than or equal to x milliseconds. We achieve this by setting the log_min_duration_statement parameter to x. This is a customizable number you choose based on your use case.

The following table lists the database parameters and values we set for this post. You should monitor the log volume generated with the settings and adjust the parameter values accordingly. If you have different tuning priorities or concerns and are looking for guidance on the logging parameters, refer to the Working with RDS and Aurora PostgreSQL logs blog post series.

Parameter

Values

Function

log_temp_files

1024

Logs SQL queries that use more than this value (kb) of temporary files. DBAs should run explain analyze on such queries. Most times, it involves a full table scan on a large table with hash join. Tune the queries appropriately.

Log_min_messages

error

Controls log volume, log only error, and preceding events.

log_lock_waits

1

Logs if a session is stuck in a locked state and waits longer than deadlock_timeout (1 second by default). Investigate if you should stop the blocking or blocked session.

log_min_duration_statement

1000

Logs the queries with runtimes greater than or equal to the specified milliseconds. This is how we define and capture the slow queries. Tune the queries as needed.

rds.force_autovacuum_logging_level

log

Logs autovacuum and autoanalyze activity as per the threshold that the parameter log_autovacuum_min_duration set. It answers the question of which table was being vacuumed.

log_autovacuum_min_duration

1000

Logs autovacuum and autoanalyze that runs more than the specified time in milliseconds. The default is 10,000.

shared_preload_libraries

auto_explain

Enables logging execution plans automatically. You can then tune the slow queries to use better execution plans.

auto_explain.log_min_duration

1000

Logs the statement’s execution plan if the query runtime is greater than or equal to the given amount of time in milliseconds.

To set these parameters for an RDS for PostgreSQL or Aurora PostgreSQL database, you first need to create a clone of the default DB parameter group. Choose the cloned parameter group and edit the parameters one by one. Attach it to the PostgreSQL instances we monitor. For specific instructions, refer to Modifying parameters in a DB parameter group.

Build a CloudWatch dashboard

Now you’re ready to build the CloudWatch dashboard. For this post, we use an Aurora PostgreSQL cluster as an example, but the same procedure applies to RDS for PostgreSQL databases.

We walk through three methods to create the dashboard: manually, using a template, and via the AWS Command Line Interface (AWS CLI).

Create the CloudWatch dashboard manually

Our dashboard is composed of multiple widgets that stand for different subject areas we listed earlier in the solution overview. In this post, we use Logs table, Line, and Explorer type widgets.

On the CloudWatch console, under Dashboards, choose Create dashboard.

For Name, enter a name, for example test.

Choose Create widget.

Choose Logs table as your widget type.

Choose Next.

You’re redirected to Logs insights, as shown in the following screenshot:

Choose your log group from the drop-down menu.

Search by your database identifier. For Amazon RDS, your log group is /aws/rds/instance/<database identifier>/postgresql. For Aurora, your log group is /aws/rds/cluster/<database identifier>/postgresql.

In the text field, replace the text with an insights query. For example, the following query retrieves the top 20 slow queries from the CloudWatch log streams published by the database server:

Choose Create widget.

To rename the widget, hover your mouse over the widget and choose the pencil icon.

For Rename Widget, enter a new name, for example, Top 20 Slow Queries.

Choose Apply.

Choose Save dashboard.

You can refer to the following table to create different widgets. For the first widget, you choose Create widget; choose Add widget for subsequent widgets. The log group stays the same for this type of widget.

Action

Log Group

Widget Type

Insights Query

Widget Title

Create widget

/aws/rds/instance/<database identifier>/postgresql for Amazon RDS for PostgreSQL or /aws/rds/cluster/<database identifier>/postgresql for Aurora PostgreSQL

Logs table

Top 20 Slow Queries

Add widget

Same as above

Same as above

Execution Plans for Slow Queries

Add widget

Same as above

Same as above

Error log

Add widget

Same as above

Same as above

Auto-vacuum & Auto-analyze

Add widget

Same as above

Same as above

Dead locks

Add widget

Same as above

Same as above

Temp File Usage and Others

Next, we add a Line type widget to display Performance Insights metrics for Amazon RDS.

Choose Add widget, then choose Line, then Metrics, RDS, and Per-Database metrics.

The metrics we use for this post are DBLoad, DBLoadCPU, and DBLoadNonCPU. DBLoad denotes the number of average active sessions. DBLoadCPU is the number of average active sessions waiting for CPU resources, and DBLoadNonCPU is the number of average active sessions waiting for non-CPU resources, for example, buffer content locks.

Select the three metrics for your database instances and choose Create widget.

Now we add one Explorer type metric to the dashboard for Amazon RDS. The Explorer type metric is a pre-filled collection of server metrics based on the tag key and values we input.

Choose Add widget, Explorer, Pre-filled Explorer widget, RDS, then Create.

Choose the metrics from the list in the Metrics section.

Enter a tag name in the From section and choose a tag value.

Leave the rest at default.

For example, we choose CPUUtilization, FreeableMemory, ReadIOPS, and WriteIOPS using the tag db-name-cluster1: All values(*) for our Aurora cluster.

Now we add one Explorer type metric to the dashboard for Amazon EC2.

Choose Add widget, Explorer, Pre-filled Explorer widget, EC2, then Create.

Choose the metrics from the list in the Metrics section.

Enter a tag name in the From section and choose a tag value.

Leave the rest at default.

For example, we choose CPUUtilization, NetworkIn, NetworkOut, and BytesRead with tags Name:app-instance1 and Name:app-instance2.

Save the dashboard.

The following screenshots show what the dashboard looks like:

You can generate a template from the dashboard for later use by choosing Actions and View/edit source.

Create the CloudWatch dashboard using a template

This section shows how to create a new CloudWatch dashboard using a template provided.

Collect the following information for the target database:

Database CloudWatch log group

Resources Region

RDS or Aurora instance tag

DB instance identifier

EC2 instance tag value

Edit the following template code, and replace each placeholder with its value. For this post, we use the following values:

<your CloudWatch log group name> – /aws/rds/cluster/database-pg-1/postgresql

<your region> – us-east-1

<your DB instance tag> – db-name-cluster1

<your DB instance1 id> – database-pg-1-instance-1

<your DB instance2 id> – database-pg-1-instance-2

<your EC2 instance1 tag value for Name> – app-instance1

<your EC2 instance2 tag value for Name> – app-instance2

On the CloudWatch console, choose Dashboards, then Create dashboard.

Enter a name. For example, new-pg-dashboard.

Choose Create dashboard.

This creates an empty dashboard. The Add widget window pops up automatically, which you can close.

Choose Actions, then choose View/edit source.

Replace the default code with your script from earlier.

Choose Update and save the dashboard.

Create the CloudWatch dashboard using the AWS CLI

In this section, we show you how to create the dashboard using the AWS CLI.

Save the template code from the previous section as a JSON file on your machine. For example, we name it my-dashboard.json.

Run the following AWS CLI command, using your dashboard name and template file name:

For example, we use the following command:

You may see a warning message like the following. You can safely ignore it. Your dashboard is created as expected.

Customize the CloudWatch dashboard

In this section, we provide some tips on customizing the dashboard.

First, you can display data for a specific time range or time zone format by adjusting the time range. Choose a value from the predefined time ranges or choose Custom for more ranges. To set the time zone format, choose UTC and choose either UTC or Local time zone.

You can also view a specific pane for log data. The dashboard displays single lines of logged activities by default. Choose the expand icon by the line number to expand the line for details. Choose the square icon in the upper-right corner to switch to full-screen view.

Summary

The CloudWatch dashboard provides a practical view of your database and server activities and state based on actual data. It allows you to zoom in to a subject area with a specific time range for quick analysis. When you integrate with Performance Insights metrics, developers and database administrators can get a better idea of database workloads and identify bottlenecks. With the database server and application server statistic metrics displayed in the same view, you can link events more easily.

We hope that we provided enough information for you to start building your own customized dashboard. Your feedback is greatly appreciated.

About the Authors

Shunan Xiang is a Senior Database Consultant with the Professional Services team at AWS. He works as a database migration specialist to help Amazon customers in design and implementing scalable, secure, performant, and robust database solutions on the cloud.

Li Liu is a Senior Database Consultant with the Professional Services team at Amazon Web Services. She helps customers to migrate traditional on-premise databases to AWS cloud. She specializes in database design, architecture and performance tuning.

Read MoreAWS Database Blog