In Part 1 of this series, we discussed database migration components, migration cost and its factors, and how to select the right type of AWS DMS instance, which determines the capacity of DMS jobs. In this post, we focus on how to regularly evaluate the AWS DMS instance size and scale up or down based on requirements, and how to cost-optimize migrations with AWS DMS features and configurations.

Regularly evaluate the instance size and scale up or down based on requirements

You should conduct regular capacity planning exercises to ensure that the replication instance aligns with the current workload requirements. Adjust the instance size as necessary to maintain optimal performance and cost-efficiency. Consider the following strategies:

Right-sizing AWS DMS resources to align with workload demands

Scaling up or down based on migration phases and throughput requirements

Using AWS DMS Serverless auto scaling to dynamically adjust resources and save costs during peak and idle periods

In this section, we discuss various scenarios when you may want to reevaluate your AWS DMS instance size.

Migration transitions from full load to CDC or vice versa

During the full load phase of an AWS DMS migration, the AWS DMS instance caches all the changes from the source endpoint. This can cause the instance to consume more memory and disk space. However, during the CDC phase of the migration, the instance is less likely to cache changes from the source endpoint for a long duration. Therefore, it’s important to evaluate and resize the instance during the CDC phase to save costs. Consider the following factors when evaluating the AWS DMS instance’s resource usage during CDC phase:

The number of changes that are being made to the source endpoint

The size of the changes that are being made to the source endpoint

The amount of memory and disk space that is available on the instance

After you have evaluated the AWS DMS instance’s resource usage, you can resize the instance up or down as needed. Right-sizing the instance can help you save costs without impacting the performance of the migration.

Enable or disable debug mode

When troubleshooting an AWS DMS migration, it’s sometimes necessary to enable debug mode to get detailed logs. This can cause the AWS DMS instance to require more Amazon Elastic Block Store (Amazon EBS) storage to store the logs. Therefore, it’s important to reevaluate the instance sizing whenever debug mode is enabled or disabled.

The amount of EBS storage that debug mode requires depends on the level of detail you need: the more detailed the logs, the more storage they consume. Evaluate the storage requirement before enabling debug mode, and resize the instance up or down as needed. When troubleshooting is complete, disable debug mode and reevaluate the sizing so that you save costs without impacting the performance of the migration.

AWS DMS data validation jobs

AWS DMS offers data validation to ensure that your data was migrated accurately from the source to the target. However, it’s important to note that data validation can consume additional resources at the source and target, as well as additional network resources. Therefore, it’s important to weigh the cost of data validation against the importance of ensuring that the data is migrated accurately.

Consider the following when deciding whether to enable data validation:

The size of the data that is being migrated

The importance of ensuring that the data is migrated accurately

The amount of resources that are available

If the data is small and the accuracy is not critical, then data validation may not be necessary. However, if the data is large or the accuracy is critical, then data validation may be a worthwhile investment.

Amazon CloudWatch metrics

To periodically reevaluate and scale the AWS DMS replication instance size up or down based on performance requirements, you can follow an action plan using CloudWatch metrics, monitoring and alerting features, and automation capabilities.

By following this action plan, you can continuously monitor and optimize the AWS DMS replication instance size, ensuring that it aligns with the performance requirements while minimizing costs and ensuring a smooth and efficient database migration process.

You can configure CloudWatch alarms and event notifications to proactively monitor the replication instance’s performance and resource utilization. Set up alarms to trigger when specific performance thresholds are breached or when the replication lag exceeds acceptable limits. These alerts can help you identify and address any performance issues promptly.

For more information, refer to Automating database migration monitoring with AWS DMS.

Metrics for the replication instance

Monitoring the CloudWatch metrics for your replication instance is always recommended to ensure that the instance doesn’t become overloaded and degrade the performance of the AWS DMS replication task. Metrics such as CPU, memory, and IOPS usage play a crucial role in a successful migration. The source and target DB engine, as well as the type of data, can all have an impact on the metrics. For more information, refer to AWS DMS key troubleshooting metrics and performance enhancers.

The following are critical metrics to monitor when running multiple tasks under the same instance:

CPUUtilization

FreeableMemory

SwapUsage

ReadIOPS

WriteIOPS

ReadThroughput

WriteThroughput

ReadLatency

WriteLatency

DiskQueueDepth

The following code is an example of the CPUUtilization settings:
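A minimal sketch of such a CPUUtilization alarm definition, written in Python, might look like the following. It only builds the parameters and does not call AWS. The instance identifier, alarm name, and the 80% threshold are illustrative assumptions, not recommended values.

```python
import json


def cpu_alarm_params(instance_id, threshold_percent=80.0):
    """Build CloudWatch alarm parameters for a DMS replication instance.

    The threshold, period, and evaluation periods are placeholder values;
    tune them to your own workload.
    """
    return {
        "AlarmName": f"dms-{instance_id}-high-cpu",  # hypothetical naming scheme
        "Namespace": "AWS/DMS",
        "MetricName": "CPUUtilization",
        "Dimensions": [
            {"Name": "ReplicationInstanceIdentifier", "Value": instance_id}
        ],
        "Statistic": "Average",
        "Period": 300,            # seconds per datapoint
        "EvaluationPeriods": 3,   # consecutive datapoints that must breach
        "Threshold": threshold_percent,
        "ComparisonOperator": "GreaterThanThreshold",
    }


# "my-dms-instance" is a hypothetical replication instance identifier.
params = cpu_alarm_params("my-dms-instance")
print(json.dumps(params, indent=2))
```

With the AWS SDK for Python installed and credentials configured, a dictionary like this could be passed to the CloudWatch client as `put_metric_alarm(**params)`.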

Metrics for the replication task

Performance is determined by metrics that relate to replication tasks. To ensure a successful migration, it’s important to monitor the following metrics periodically:

FullLoadThroughputBandwidthTarget

FullLoadThroughputRowsTarget

CDCIncomingChanges

CDCLatencySource

CDCLatencyTarget

CDCChangesMemorySource

CDCChangesMemoryTarget

CDCChangesDiskSource

CDCChangesDiskTarget

Metrics for task debugging

To troubleshoot performance issues, you may need to raise the logging level of the following log components:

SOURCE_UNLOAD

SOURCE_CAPTURE

TARGET_LOAD

TARGET_APPLY

TASK_MANAGER

See the following example code:
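As a hedged illustration, the Python sketch below builds the `Logging` section of a task settings document that raises the components listed above to debug severity. The choice of `LOGGER_SEVERITY_DEBUG` for every component is an assumption for the example; in practice you would raise only the components relevant to the problem.

```python
import json

# Log components named in the list above.
COMPONENTS = [
    "SOURCE_UNLOAD",
    "SOURCE_CAPTURE",
    "TARGET_LOAD",
    "TARGET_APPLY",
    "TASK_MANAGER",
]


def logging_settings(severity="LOGGER_SEVERITY_DEBUG"):
    """Return the Logging section of DMS task settings at one severity."""
    return {
        "Logging": {
            "EnableLogging": True,
            "LogComponents": [
                {"Id": component, "Severity": severity}
                for component in COMPONENTS
            ],
        }
    }


settings = logging_settings()
print(json.dumps(settings, indent=2))
```

Calling `logging_settings("LOGGER_SEVERITY_DEFAULT")` produces the settings to restore once troubleshooting is finished, which keeps log storage from growing longer than necessary.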

Benchmark the data migration

You should evaluate the performance metrics gathered during the benchmarking phase. If the replication instance is consistently operating below the desired performance thresholds, consider scaling up to a larger instance size. During the initial round of testing, benchmark the migration timeline for both larger and smaller tables, and use the results to size the instances for the actual production migration. When testing is complete, scale down (or delete) the AWS DMS replication instances until the production migration starts, so that you don’t pay for larger replication instances than you need.

Choose between a Multi-AZ and a Single-AZ instance

The choice between a Multi-AZ and a Single-AZ configuration for an instance in AWS DMS depends on the specific requirements and characteristics of your migration tasks. Single-AZ instances are typically more cost-effective than Multi-AZ instances, so when minimizing costs is a priority and the migration task doesn’t involve critical or sensitive data, you can choose a Single-AZ configuration.

The following table compares when a Single-AZ AWS DMS deployment and a Multi-AZ AWS DMS deployment are suitable.

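| AWS DMS deployment type | Multi-AZ | Single-AZ |
| --- | --- | --- |
| Data protection and business continuity | Yes | No |
| Transient migrations | No | Yes |
| Long-running and ongoing replication tasks | Yes | No |
| Full load tasks | No | Yes |
| Critical production migration | Yes | No |
| Task restart for incomplete tables | No | Yes |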

A Multi-AZ configuration is recommended in the following cases:

Data protection and business continuity – If your migration involves critical data that can’t afford any downtime or data loss, a Multi-AZ configuration is necessary. It provides redundancy by maintaining a standby replica in a different Availability Zone. In case of storage failures or other issues, failover to the standby replica ensures continuous operation without interruption, safeguarding your data and business continuity. A Multi-AZ configuration costs more because a standby instance is deployed as part of the AWS DMS instance, so you should carefully decide when it’s required and when it’s optional.

Long-running and ongoing replication tasks – For migrations intended to run for extended periods, especially for ongoing replication tasks, a Multi-AZ configuration is advisable. It enhances availability and makes sure that the replication process continues seamlessly even if a storage problem occurs, preventing any disruptions in data synchronization between the source and target databases.

Critical production migrations – These migrations can’t tolerate the failure of an AWS DMS instance just before or during the cutover.

A Single-AZ configuration is suitable in the following cases:

Transient migrations – If your migration is temporary, short-lived, or transient, and the downtime or data loss during a failover is acceptable, a Single-AZ configuration might be sufficient. Transient migrations, such as one-time data transfers or short-term projects, may not require the added complexity and cost of a Multi-AZ setup.

Full load tasks – During a full load task, where the entire source dataset is migrated to the target, a failover or host replacement can cause the task to fail, because interrupting the transfer of a large volume of data leaves it incomplete. Any interruption therefore restarts the load from scratch for the affected table in the AWS DMS task, so a standby instance may offer limited benefit during this phase.

Task restart for incomplete tables – In the event of a failure during a full load task, you have the option to restart the task from the point of failure. This means the remaining tables that didn’t complete or are in an error state can be migrated in subsequent attempts, ensuring data completeness.

In summary, the decision to choose a Multi-AZ or Single-AZ configuration in AWS DMS should be based on factors such as the criticality of the data, required availability, duration of the migration task, and budget constraints. Multi-AZ configurations are ideal for long-running tasks, critical data, and ongoing replication, ensuring high availability and data protection. On the other hand, Single-AZ configurations can be suitable for short-term, non-critical migrations where cost optimization takes precedence over high availability. So, there is a trade-off!

Selective data migration

You should analyze the data in your source database and identify any unnecessary or irrelevant data that can be excluded from the migration process. By selectively migrating only the required data, you can reduce migration time, network bandwidth usage, and associated costs. Consider the following:

Migrate only a subset of data using AWS DMS filters – If you only need to migrate the most recent data from the source database, you can use AWS DMS filters to migrate only the data that meets certain criteria. For example, you can filter the data by date range, column value, or other criteria. See the following example code:
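A minimal sketch of such a filter, written in Python, builds a table mapping with a source filter on a date column. The schema, table, and column names and the date range are hypothetical.

```python
import json


def date_range_mapping(schema, table, column, start, end):
    """Build a table mapping that includes only rows whose column
    value falls between start and end."""
    return {
        "rules": [
            {
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": "recent-rows-only",
                "object-locator": {"schema-name": schema, "table-name": table},
                "rule-action": "include",
                "filters": [
                    {
                        "filter-type": "source",
                        "column-name": column,
                        "filter-conditions": [
                            {
                                "filter-operator": "between",
                                "start-value": start,
                                "end-value": end,
                            }
                        ],
                    }
                ],
            }
        ]
    }


# Hypothetical names: schema "sales", table "orders", column "order_date".
mapping = date_range_mapping(
    "sales", "orders", "order_date", "2024-01-01", "2024-12-31"
)
print(json.dumps(mapping, indent=2))
```

The printed JSON is the kind of document supplied as the table mappings of a replication task.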

Migrate only specific tables or views – If you only need to migrate a subset of the tables or views from the source database, you can use the AWS DMS table mapping feature. This feature allows you to specify the specific tables or views that you want to migrate. See the following example code:
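One way to sketch this in Python is to generate one selection rule per table to include. The schema and table names are placeholders.

```python
import json


def include_tables_mapping(schema, tables):
    """Build one 'include' selection rule per table name."""
    rules = []
    for index, table in enumerate(tables, start=1):
        rules.append(
            {
                "rule-type": "selection",
                "rule-id": str(index),  # rule IDs must be unique
                "rule-name": f"include-{table}",
                "object-locator": {"schema-name": schema, "table-name": table},
                "rule-action": "include",
            }
        )
    return {"rules": rules}


# Hypothetical schema and tables.
mapping = include_tables_mapping("sales", ["customers", "orders"])
print(json.dumps(mapping, indent=2))
```

Tables that no rule selects are left out of the task, so the list passed in is the full set of tables to migrate.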

Identify non-mandatory parts of your database schema – A source database often contains archived tables or other schema objects that are no longer needed for regular operations and may not be required by the application in the target environment. Carefully selecting the data to migrate is one way to optimize costs: by identifying and excluding irrelevant or outdated tables and schema objects, you minimize unnecessary storage and processing costs. After you’ve identified these tables, you can exclude them from the migration task in AWS DMS, reducing both the migration time and associated costs. See the following example code:
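As an illustration, the Python sketch below includes every table in a schema and then excludes tables whose names match given patterns, using the `%` wildcard. The schema name and the `archive_%` and `tmp_%` patterns are assumptions about how such tables might be named.

```python
import json


def exclude_pattern_mapping(schema, exclude_patterns):
    """Include all tables in a schema, then exclude name patterns."""
    rules = [
        {
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": "include-all",
            "object-locator": {"schema-name": schema, "table-name": "%"},
            "rule-action": "include",
        }
    ]
    for index, pattern in enumerate(exclude_patterns, start=2):
        rules.append(
            {
                "rule-type": "selection",
                "rule-id": str(index),
                "rule-name": f"exclude-{index}",
                "object-locator": {"schema-name": schema, "table-name": pattern},
                "rule-action": "exclude",
            }
        )
    return {"rules": rules}


# Hypothetical schema and naming patterns for archived or temporary tables.
mapping = exclude_pattern_mapping("sales", ["archive_%", "tmp_%"])
print(json.dumps(mapping, indent=2))
```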

Selective database migration in AWS DMS can significantly optimize the overall cost of database migration in several ways:

Reduced data transfer volume – Selective migration allows you to migrate only the essential data, omitting unnecessary tables, schemas, or historical records. By transferring a smaller volume of data, you reduce the network bandwidth usage and associated data transfer costs. This can lead to significant cost savings, especially if you are migrating a large database.

Lower storage costs – By excluding non-essential data from the migration, you minimize the storage requirements in the target database. AWS charges for storage usage, so reducing the volume of stored data directly reduces storage costs over time.

Minimized downtime – Selective migration allows you to focus on migrating critical data first, ensuring that essential services are back online quickly. This can reduce downtime costs associated with the migration process. If you’re migrating a production database, you can use selective database migration to minimize downtime. For example, you can migrate the most critical data first, and then migrate the remaining data later, when the downtime is less disruptive.

Lower transformation costs – When performing data transformations (for example, schema mapping or data type conversions), working with a smaller dataset simplifies the transformation logic. Complexity and processing time are reduced, leading to potential savings in transformation costs.

Optimized target database resources – By migrating only necessary data, you can optimize the configuration of the target database, such as selecting appropriate instance types and storage capacities. Right-sizing these resources ensures you’re not overprovisioning, preventing unnecessary costs.

Faster disaster recovery setup – Smaller databases are quicker to back up, restore, and replicate. In the event of a disaster, having a smaller dataset reduces the time and costs associated with disaster recovery processes.

Incremental and iterative approach – Selective migration enables an incremental approach, allowing you to migrate data in stages. This phased migration approach can help you assess costs at each stage, optimizing the process iteratively as you progress, preventing unnecessary spending.

Focused testing and validation – Testing efforts can be focused on the specific data and features that are being migrated, saving time and resources. By validating only the necessary functionalities, you optimize the testing process and ensure that costs related to extensive testing are minimized.

Selective database migration in AWS DMS leads to a more efficient, streamlined, and focused migration process. By concentrating efforts and resources on essential data, businesses can achieve significant cost savings while ensuring a smooth transition to the target database environment. Proper planning, analysis, and ongoing optimization are key to realizing the full potential of cost optimization through selective database migration in AWS DMS.

Cost optimize with AWS DMS features and configurations

There are a number of settings that you can configure to optimize the performance and cost of your AWS DMS migration. For example, you can configure the number of threads that are used for full load, the number of tasks that are used for CDC, and the size of the replication buffer. Consider the following:

Properly configuring replication tasks to optimize cost and performance

Scheduling and automating tasks based on workload patterns

Using AWS DMS features like CDC and ongoing replication optimization

Use AWS DMS Serverless to optimize cost

AWS DMS Serverless is a fully managed service that automatically provisions, scales, and manages migration resources. This can help you reduce the cost of your database migrations by eliminating the need to provision and manage your own migration infrastructure. AWS DMS Serverless is a pay-per-use option that can be a more cost-effective way to migrate data than using on-demand instances. For example, let’s say you have an AWS DMS Serverless deployment with the following usage pattern:

During peak hours, the data transaction volume increases significantly

At off-peak times, the data transaction volume decreases

With AWS DMS Serverless, you are only charged for the capacity used during each hour based on the data transaction volume at that time. This provides cost savings compared to having a fixed provisioned capacity that may remain underutilized during low-activity periods.
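The effect of the usage pattern above can be sketched with simple arithmetic. The Python example below compares capacity-hours billed by usage with capacity-hours for a fixed capacity sized for the peak. The hourly profile (8 peak hours at 8 capacity units, 16 off-peak hours at 2) is entirely hypothetical, and the sketch deliberately ignores actual prices, rate differences between serverless and provisioned capacity, and scaling delays.

```python
def usage_based_hours(hourly_capacity):
    """Capacity-hours when billed for what each hour actually used."""
    return sum(hourly_capacity)


def fixed_peak_hours(hourly_capacity):
    """Capacity-hours when capacity is fixed at the peak level all day."""
    return max(hourly_capacity) * len(hourly_capacity)


# Hypothetical 24-hour profile: 8 peak hours at 8 units, 16 off-peak at 2.
profile = [8] * 8 + [2] * 16

usage = usage_based_hours(profile)
fixed = fixed_peak_hours(profile)
reduction = 1 - usage / fixed

print(usage)      # 96
print(fixed)      # 192
print(reduction)  # 0.5
```

In this made-up profile, usage-based capacity is half of the fixed peak capacity. The real saving depends on your workload shape and on current pricing.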

The capacity of AWS DMS Serverless is measured in DMS Capacity Units (DCUs). Each DCU represents 2 GB of RAM. To meet various workload needs, AWS DMS Serverless offers different increments of DCU, from 1–384.

For instance, you can configure your AWS DMS Serverless deployment with 8 DCUs to handle a workload that requires 16 GB of RAM. This flexibility enables you to align the capacity with the demands of your specific data replication tasks while optimizing costs.

By using AWS DMS Serverless and its capacity-based pricing, you can efficiently manage costs, seamlessly scale capacity, and ensure optimal performance for your continuous data replication or migration needs.

The maximum DCU is the upper limit on the amount of CPU and memory that AWS DMS Serverless will use for your migration. Setting the maximum DCU too high can result in unnecessary costs, whereas setting it too low can cause your migration to run slowly. See the following example code:
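A minimal Python sketch of such a capacity configuration follows. It builds a compute configuration with `MaxCapacityUnits` and an optional `MinCapacityUnits`, and converts DCUs to RAM at 2 GB per DCU as stated earlier. The list of allowed DCU values is an assumption to verify against the current AWS DMS documentation, and the 8 and 2 DCU values are illustrative.

```python
import json

# Assumed set of allowed DCU values; check the current AWS DMS documentation.
VALID_DCUS = (1, 2, 4, 8, 16, 32, 64, 128, 192, 256, 384)
GB_PER_DCU = 2  # each DCU represents 2 GB of RAM


def ram_gb(dcu):
    """Return the RAM in GB that a DCU count represents."""
    return dcu * GB_PER_DCU


def compute_config(max_dcu, min_dcu=None):
    """Build a serverless compute configuration with capacity limits."""
    for value in (max_dcu, min_dcu):
        if value is not None and value not in VALID_DCUS:
            raise ValueError(f"unsupported DCU value: {value}")
    if min_dcu is not None and min_dcu > max_dcu:
        raise ValueError("min_dcu must not exceed max_dcu")
    config = {"MaxCapacityUnits": max_dcu}
    if min_dcu is not None:
        config["MinCapacityUnits"] = min_dcu
    return config


config = compute_config(max_dcu=8, min_dcu=2)
print(json.dumps(config))
print(ram_gb(config["MaxCapacityUnits"]))  # 16
```

A dictionary like this corresponds to the compute configuration supplied when creating a serverless replication configuration, for example through the `ComputeConfig` argument of `create_replication_config` in the AWS SDK for Python.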

AWS DMS Serverless automatically scales as the need of compute capacity changes between full load and CDC under the limits of the maximum DCU configuration. If your replication isn’t using its resources at full capacity, AWS DMS will gradually deprovision resources to save you costs. However, because provisioning and deprovisioning resources takes time, we recommend that you set MinCapacityUnits to a value that can handle any sudden spikes you expect in your replication workload. This will keep your replication from being under-provisioned while AWS DMS provisions resources for the higher workload level.

AWS DMS Serverless can help reduce costs for database migrations in a number of ways:

Pay-as-you-go pricing – AWS DMS Serverless is a serverless option, which means that you only pay for the resources that you use. This eliminates the need for upfront costs or capacity planning.

Automatic scaling – AWS DMS Serverless automatically scales the resources that it needs to complete your migration. This means that you don’t have to worry about overprovisioning or underprovisioning resources, which can lead to wasted costs.

Short-lived workloads – AWS DMS Serverless is a good option for short-lived workloads, such as database migrations. This is because you only pay for the resources that you use, and you don’t have to worry about managing servers or infrastructure.

Unpredictable workloads – AWS DMS Serverless is a good option for unpredictable workloads, such as database migrations that may experience spikes in traffic. This is because AWS DMS Serverless will automatically scale the resources that it needs to complete your migration, even if there is a sudden spike in traffic.

Intermittent migrations that run only on some days of the week or month – Serverless computing is a good option for workloads that are intermittent or unpredictable. The service automatically allocates and deallocates resources as needed, so you pay only for the time that you use the service and don’t have to manage servers or infrastructure.

Fire exits – Serverless computing can be a good option for fire exits. A fire exit is a system that allows you to quickly scale up your workload in response to unexpected demand. For example, if you have a website that experiences a sudden spike in traffic, you can use a serverless computing service to scale up your website. This allows you to handle the increased traffic without having to worry about managing servers or infrastructure.

Use AWS DMS homogeneous migration to optimize cost

Migrating databases often presents challenges, especially when tasks like data loading and CDC are managed manually. There’s a significant risk of missing crucial configurations related to security, performance, and reliability in such cases. For instance, when migrating PostgreSQL from on premises to AWS, it takes effort to determine the appropriate configurations for the full load and CDC phases of migration. Additionally, selecting the right size for AWS DMS instances is crucial, and native tools like pg_dump for full load and PostgreSQL logical replication methods for CDC are preferred. However, without automation, these choices mean heavy lifting for customers who want to perform a homogeneous migration.

When considering a homogeneous database migration, where both the source and target endpoints use the same database engine, it’s advisable to use the native methods provided by that database engine. This approach greatly simplifies the migration process by reducing the complexity associated with handling the AWS DMS native full load method. There are several reasons why native database methods are preferred over third-party tools in homogeneous migrations, including optimized performance, data consistency, compatibility, and feature availability. For instance, using mysqldump in MySQL or pg_dump in PostgreSQL ensures a more streamlined and efficient migration process.

Because native methods are specifically tailored for the respective database engine, organizations can avoid additional costs associated with third-party migration tools or services. This cost efficiency is particularly important for budget-conscious projects and businesses.

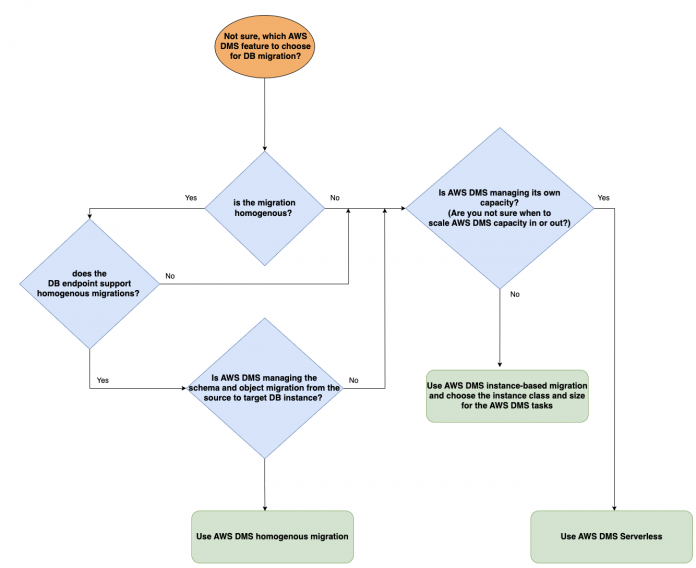

The following diagram can help you decide when to use AWS DMS homogeneous migration, AWS DMS Serverless migration, or AWS DMS provisioned migration configuration.

The following diagram illustrates an AWS DMS homogeneous migration environment.

AWS DMS homogeneous migration offers a number of advantages that can help reduce the cost of your database migration project:

Simplified migration process – Homogeneous migration makes sure that both source and target endpoints use the same database engine. Using native methods provided by the database engine simplifies the process and reduces the complexity associated with the AWS DMS full load method.

Cost-efficiency – Native methods eliminate additional costs associated with third-party migration tools or services. Budget-conscious projects benefit from avoiding extra expenses, and organizations pay only for necessary resources, optimizing costs.

Automatic resource management – AWS DMS manages compute and storage resources automatically. Dynamic scaling based on workload eliminates manual provisioning efforts. Resources scale up during peak usage and scale down during low demand, optimizing costs.

Focus on migration, not infrastructure – Automatic provisioning and deprovisioning of resources simplifies the migration process. You can focus on migration tasks without worrying about infrastructure management. It provides the right amount of resources at all times, aligning costs with actual usage.

Optimized performance and efficiency – It provides optimal performance while controlling costs. The pay-as-you-go model aligns costs with resource usage. It allows organizations to achieve high availability, scalability, and enhanced security in the AWS Cloud.

In summary, the AWS DMS homogeneous migration feature automates the process end to end: it starts the full load, establishes the CDC job, and keeps replication running until you stop these jobs at migration cutover. AWS DMS homogeneous migrations simplify the migration of open-source databases to managed services like Amazon RDS and Amazon Aurora. This approach not only reduces operational complexities, but also opens the door to using advanced features, ensuring high availability, scalability, and enhanced security for your applications and databases in the AWS Cloud environment.

Conclusion

In this blog series, we discussed database migration components, migration cost and its factors, and how to select the right type of AWS DMS instance, which determines the capacity of DMS jobs. In Part 2, we discussed how to regularly evaluate the AWS DMS instance size and scale up or down based on requirements, and how to cost-optimize migrations with AWS DMS features and configurations. By understanding these AWS DMS replication task configurations and their potential cost implications, you can make informed decisions to optimize resource allocation and manage costs effectively.

We welcome your feedback. Please leave your comments.

About the Authors

Shailesh K Mishra is a Sr. Solutions Architect with the FSI team at Amazon Web Services, with an area of depth in databases and migrations. He focuses on database migrations to AWS and on helping customers build well-architected solutions.

Babaiah Valluru is working as Lead Consultant – Databases with the Professional Services team at AWS based out of Hyderabad, India and specializes in database migrations. In addition to helping customers in their transformation journey to cloud, his current passion is to explore and learn ML services. He has a keen interest in open source databases like MySQL, PostgreSQL and MongoDB. He likes to travel, and spend time with family and friends in his free time.

Read more: AWS Database Blog