In the realm of database migration, achieving cost optimization is paramount. It’s not just about moving data; it’s about doing so in a way that ensures every resource is utilized efficiently, infrastructure expenses are minimized, and the return on investment is maximized. Careful consideration of cost implications and the implementation of strategic measures are key. Balancing the need to minimize expenses with the requirement for optimal performance and reliability is crucial to enhance the value derived from the migration investment. As we delve into the intricacies of designing a database migration architecture, it becomes evident that several components must be meticulously weighed to ensure a seamless and efficient migration process.

In this post, we show how to cost optimize database migrations on AWS using AWS Database Migration Service (AWS DMS) and discuss the methods, configurations, considerations, and approaches of database migrations.

This is a two-part series post. In Part 1 (this post), we discuss database migration components, migration cost and its factors, and how to select right type of AWS DMS instance which determines the capacity of DMS jobs. In Part 2, we discuss how to regularly evaluate the AWS DMS instance size and scale up or down based on requirements and how to cost optimize the migrations with AWS DMS features and configurations.

Database migration components

Database migration typically includes the following components:

Source database – Identify the existing database system that needs to be migrated. This could be PostgreSQL, MySQL, or Oracle, for example. Understand the schema, data volume, and dependencies within the source database.

Target database – Determine the destination database system where the data will be migrated. This could be Amazon Relational Database Service (Amazon RDS), Amazon Aurora, a NoSQL database, a data lake, or another option. Consider the compatibility and features of the target database.

Data migration tool or service – Select an appropriate tool to extract data from the source database and load it into the target database followed by change data capture (CDC) from the source database to target database, such as AWS DMS, AWS Partner solutions, or open source tools like Debezium.

Schema conversion – In heterogenous migrations the source and target databases have different schema structures or data types in heterogenous database migration, AWS DMS Schema Conversion (SC) or the AWS Schema Conversion Tool (AWS SCT) is required, and it may also be required in homogenous migration depending on the objectives of migration. This component can help transform the schema and data to align with the target database requirements.

For technical enthusiasts seeking to optimize costs in database schema migration, employing AWS SCT is a strategic choice. This tool, available at no charge, adeptly transforms your existing database schema from one engine to another. Its supports a variety of source databases such as Oracle, Microsoft SQL Server, MySQL, Sybase, and IBM Db2 LUW. AWS SCT stands out as a cost-effective solution, simplifying the database schema migration process significantly, minimizing manual efforts.

AWS DMS also provide feature of schema conversion, as AWS DMS Schema Conversion (SC) is a fully managed feature that allows automatic assessment and conversion of database schemas and code objects between different database platforms. This helps streamline migrations by reducing the manual effort required. AWS DMS Schema Conversion enables the conversion of source database schemas to the target engine, allowing users to select specific schema items. Transformation rules can be applied to modify data types, move objects, or change object names before the conversion. Users can adjust conversion settings for improved code performance, and in cases where certain source database features lack equivalent target database endpoint like Amazon RDS counterparts, an extension pack can be used to emulate these features. The converted code and extension pack schema can then be applied to the target database. DMS Schema Conversion supports features in the latest AWS SCT release, ensuring compatibility and integration with evolving AWS technologies.

Database migration cost and its factors

To optimize database migration costs effectively, you must thoroughly understand and manage various factors. This includes carefully considering the data volume being migrated, selecting appropriate instance types and storage options, using automation and compression techniques, and optimizing network bandwidth utilization. In this post, we discuss how to effectively manage these factors within AWS DMS to achieve cost-efficient database migrations while maintaining high performance and reliability. You should consider the following:

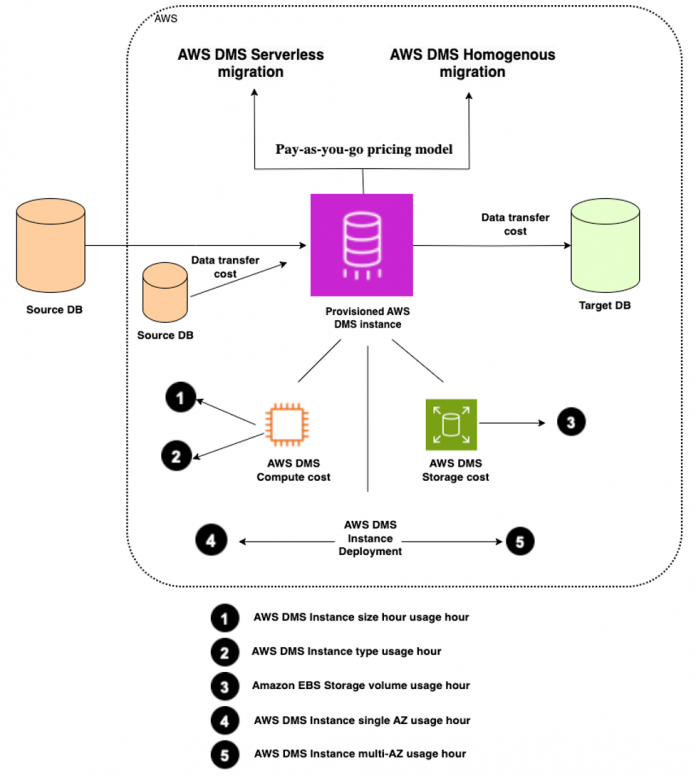

AWS DMS replication instance hours usage – This is the primary factor that influences AWS DMS usage. AWS DMS instances are billed based on the total hours the instances are provisioned and running. Each hour incurs a specific cost based on the instance type selected.

AWS DMS replication instance type – The choice of replication instance class affects pricing. Different instance classes have varying performance characteristics and associated costs. For example, higher-performance instance classes like R or C will cost more compared to lower-performance classes like t2 or t3.

AWS DMS replication instance deployment type – Depending on your specific requirements, you can choose to deploy your AWS DMS instance in a single Availability Zone or multiple Availability Zones. If your environment does not require the AWS Database Migration Service (DMS) instance to be always available and can tolerate some data replication lag, using a single availability zone (AZ) DMS instance can save you money compared to a multi-AZ configuration.

AWS DMS replication instance storage volume – Every compute-optimized (C) or memory-optimized (R) instance type comes with 100 GB of GP2 network-attached storage allocated for swap space, replication logs, and data cache. Similarly, each burstable performance (T) instance type includes 50 GB of GP2 network-attached storage. If you require additional storage to store logs for an extended duration, you have the flexibility to extend the included storage and pay for the additional storage as needed.

Data transfer – Data transfer into AWS DMS incurs no additional charges, and data transfer between AWS DMS and databases in Amazon RDS and Amazon Elastic Compute Cloud (Amazon EC2) instances within the same Availability Zone is also free of cost. However, when migrating a source database to a target database located in a different Availability Zone, Region, or outside of AWS, standard AWS data transfer rates apply.

The following diagram illustrates different factors to consider regarding AWS DMS costs.

It’s recommended to use the AWS Pricing Calculator for AWS DMS to estimate the size of running an AMS DMS instance with all the preceding cost factors.

The following are two key features of AWS DMS to help reduce the complexity of provisioning the underlying AWS DMS instances and operate under a pay-as-you-go pricing model:

AWS DMS Serverless data migration – AWS DMS Serverless offers a flexible pricing model. Instead of assuming and continuously consuming a fixed compute capacity for the AWS DMS replication instance, you’re charged based on the actual capacity you use on an hourly basis. This eliminates the need to provision and manage instance types according to assumptions. You have the flexibility to use it for continuous data replication as well. AWS DMS Serverless adapts to your requirements for example, imagine a scenario where your data replication tasks experience varying levels of activity throughout the day. With AWS DMS Serverless, you will only be charged for the capacity you require during each hour based on the actual workload. During peak times, the service scales up to accommodate higher transaction volumes, and during off-peak hours, it scales down to minimize costs.

AWS DMS homogenous data migrations – From a cost optimization perspective, homogenous data migrations involve the utilization of instance profiles, data providers, and migration projects. By setting up a migration project with compatible source and target data providers of the same type, AWS DMS establishes a serverless environment for your data migration to run efficiently. This approach adheres to a pay-as-you-go model, ensuring you only pay for the resources you consume. AWS DMS connects to the source data provider, reads the data, stores the files on disk, and restores the data using native database tools.

Select the right instance type for your AWS DMS instance

You should assess the workload and resource requirements of your source database. Choose an appropriate instance type for the AWS DMS replication instance based on the workload characteristics, data volume, and performance requirements. Avoid over-provisioning resources to minimize costs. The instance type you choose will have a significant impact on the cost of your migration. For example, a C4 instance is more powerful than a T2 instance, but it will also cost more. Choose the instance type that best meets your needs and budget. The instance type you choose will have a big impact on the cost of your migration. If you’re migrating a small amount of data, you can use a smaller instance type. For larger migrations, you may need to use a larger instance type. If burstable instances make sense for development or test environments, you can use smaller replication instances in lower environments with less throughput. You can use the AWS Pricing Calculator to estimate the cost of different instance types.

In this section, we discuss different aspects of database migration that can help you choose the size and type of your AWS DMS instance.

Memory-intensive workloads

To run tasks, memory-intensive workloads require a significant amount of memory in the AWS DMS instance. Distributed databases and caches of real-time streaming data analytics are typical examples of this. Various factors can determine if a database migration is memory intensive.

For example, are you migrating LOB data? Database migration can be memory intensive if it involves large object (LOB) values in the table. LOB data type columns can use more memory on an AWS DMS instance because LOBs are large objects that can store a significant amount of data. For example, a LOB column that can store up to 1 GB of data would require 1 GB of memory on the AWS DMS instance. In addition, AWS DMS needs to store a copy of the LOB data in memory while it’s being migrated. This means that if there are a large number of LOB columns in a table, the AWS DMS instance could require a significant amount of memory, depending on the LOB settings in the AWS DMS task.

Choosing the right LOB configuration involves a balance of factors. In Full LOB mode, the process begins by creating a row in the target database with an empty LOB column. The LOB data is then populated over multiple iterations, because AWS DMS lacks foreknowledge of the maximum LOB data size in each row and column. This approach might slow down the migration process compared to Limited LOB mode, where LOB data is migrated up to a preconfigured maximum size. Inline LOB mode switches to Full LOB mode when LOB data size exceeds a configured maximum size limit. The best LOB mode to use depends on the specific migration requirements. If you’re migrating a large number of LOB columns, you might need to use Full LOB mode. If you’re limited on memory, you might need to use Limited LOB mode. And if you’re migrating a small number of LOB columns, you might use Inline LOB mode.

The following are some other factors that can impact memory or consume more memory, and therefore the AWS DMS instance might require more memory:

The amount of data you are migrating during AWS DMS full load and CDC tasks – The larger the amount of data, the more memory you will need. This is because while in full load, if you have any inflight or ongoing transactions, the AWS DMS replication instance will first cache the changes in memory. When memory is full, it swaps to the disk until full load completes. Consider the trade-off of what amount of swapping cache changes will be appropriate.

The number of transactions per second – The higher the transactions per second (TPS), the more memory you will need because the sorter component in the AWS DMS instance will keep track and order of running and completed transactions before it sends them to target endpoints. This requires memory on the AWS DMS instance before it swaps to disk.

The number of concurrent replication tasks – If you’re running multiple concurrent replication tasks, you need more memory because each task will occupy AWS DMS instance memory individually.

You can upgrade to a larger instance class if you need more memory. You can use Amazon CloudWatch metrics to monitor the performance of your replication instance. If you see memory pressure, you can increase the instance class size or make other changes to the configuration. We discuss monitoring CloudWatch metrics in more detail later in this post.

If your migration includes any of these factors, you should take steps to mitigate memory usage, such as using a database migration tool that is optimized for memory usage, breaking the migration down into smaller batches, and using a temporary staging database to reduce the load on the production database.

CPU-intensive workloads

Database migration could be CPU intensive with high computational requirements in applications such as multiplayer gaming apps that are highly scalable, big data analytics, 3D modeling, and video encodings.

Firstly, is your migration homogenous or heterogeneous? In a homogenous database migration, the source and target databases are the same database engine. This means that the data can be transferred directly from the source to the target without any conversion. In a heterogeneous database migration, the source and target databases are different database engines. This means that the data needs to be converted from the source format to the target format. This conversion process can be CPU intensive, especially if the data is large or complex.

The following are some examples of database migrations that can consume more CPU:

Migrating from a relational database to a NoSQL database – NoSQL databases typically store data in a different way than relational databases. This means that the data needs to be converted before it can be migrated to the NoSQL database.

Migrating from a mainframe database to a cloud-native database – Mainframe databases are typically very large and complex. This means that migrating them to a cloud database can be CPU intensive.

Migrating from a legacy database to a modern database – Legacy databases are often outdated and inefficient. Migrating them to a modern database can require a lot of CPU resources to convert the data and update the schema.

Secondly, are you planning to parallelize the migration process? Sometimes the migration needs to be completed more quickly, which can also save CPU resources. AWS DMS can consume more CPU when data migration is parallelized because the AWS DMS instance needs to process the data in multiple streams or threads. This can require more CPU resources, especially if the data is large or complex. When a table is partitioned into separate AWS DMS tasks, each task needs to process its own partition of the data. This can require more CPU resources than if the entire table were processed by a single task.

Similarly, when an AWS DMS task is running with multiple threads, each thread needs to process its own stream of data. This can also require more CPU resources than if the task were running with a single thread.

The amount of CPU required for parallelized data migration will depend on specific factors, such as the following:

The amount of data that is being migrated

The number of partitions or threads that are being used

The complexity of the data

The type of data migration that is being performed

Additionally, consider migrating the data in batches. This can help reduce the amount of data that needs to be processed at once, which can also save CPU resources.

By following these tips, you can reduce the CPU requirements for your database migration and ensure a smooth and successful migration.

Non-critical migration

Identify if you are going to run a non-critical migration. There are a few different conditions when a database migration may not be critical:

The data is not mission-critical – If the data isn’t essential to the business, then a database migration may not be necessary. For example, if the data is for historical purposes or research, then it may not be necessary to migrate it to a new database. Another example is if you’re migrating a development database to a test database, or migrating a staging database to a non-production database.

The migration can be done during off-peak hours – If you can perform the migration during off-peak hours, then it’s likely to consume fewer compute resources and is likely to complete the migration task in less time and lower the impact on the business. This is because there will be less traffic on the database during these hours, so the migration will be less disruptive. For example, you might migrate a database to a new server at night when there are no active users.

The migration can be performed incrementally – If the migration can be performed incrementally, then it can be done in smaller batches. This can help reduce the impact on the users. For example, you might migrate a table at a time, or you might migrate a subset of the data.

A backup plan is in place – If there is a backup plan in place in case of problems with the migration, then the migration is less critical. This is because the data can be restored from the backup if the migration fails.

The migration is not urgent – If the migration is not urgent, then it can be done at a later date when there is more time and resources available. This can help reduce the risk of problems during the migration.

The following are some additional factors to consider when deciding whether to migrate a database:

The cost of the migration

The time required for the migration

The risk of data loss or corruption during the migration

The impact of the migration on the business

Ultimately, the decision of whether to migrate a database should be made based on the specific needs of the business or organization.

Burstable database migration

A burstable CPU is a type of virtual CPU that provides a baseline level of CPU performance with the ability to burst to a higher level to support occasional spikes in usage. Burstable CPUs are a good option for workloads that have variable CPU requirements. These workloads are only used at certain times, maybe a few days in a month, such as for an electricity bill payment app.

When the migration is being performed on a budget or non-production database, burstable CPUs can be a more cost-effective option than dedicated CPUs, especially for migrations that don’t require a lot of CPU resources all the time.

Decision-making process to choose your instance class

The following is an example of how to determine the appropriate AWS DMS instance class. Although not a definitive method, this framework can be used as foundational guidance when selecting the most appropriate instance class for database migration. It takes into account factors such as the characteristics of the source and target endpoints, as well as the specific environmental conditions. Note that although this process provides valuable insights, it might not cover every unique scenario.

To ensure a smooth and successful database migration process, it’s highly advisable to conduct thorough testing of your environment, especially during the early stages of migration planning. We strongly recommend initiating a small-scale test migration. This preliminary step serves multiple crucial purposes. Quick benchmarking and a proof of concept of your database migration can help in assessing the database workload to determine the optimum size for your AWS DMS instance.

For target database size advise, you can use AWS DMS Fleet Advisor. Fleet Advisor offers recommendations based on either database capacity or utilization metrics. When opting for capacity-based recommendations, Fleet Advisor aligns the existing database capacity with the specifications of the most closely matching instance class. On the other hand, if you prefer recommendations based on resource utilization, Fleet Advisor calculates the 95th percentile value for metrics like CPU, memory, I/O throughput, and IOPS. This percentile signifies that 95% of the gathered data falls below this value. Fleet Advisor correlates these values with the specifications of the most similar instance class.

Running a small test migration allows you to pinpoint potential issues within your existing setup. By doing so, you can identify and resolve any hiccups or challenges that might arise during the migration process. These could range from compatibility issues to data transfer problems. Addressing these concerns preemptively significantly reduces the risk of encountering major obstacles later on.

Additionally, conducting a test migration provides invaluable insights into the realistic timeline required for the entire migration process. It helps you gauge the time and effort needed to migrate your database fully. Accurately estimating the migration timeline is essential for proper project planning and resource allocation.

In addition to the small-scale test migration, it’s also advisable to perform a comprehensive, full-scale test migration. This extensive test serves a critical purpose: evaluating whether AWS DMS can efficiently handle the data throughput of your database across your network infrastructure. During this phase, it’s essential to meticulously benchmark and optimize both the initial full load and ongoing replication processes.

By benchmarking and optimizing, you gain a deep understanding of various aspects of your migration, including your network latency and overall performance metrics. This knowledge is invaluable in ensuring the seamless transfer of data from your existing database to the new environment.

Moreover, the full-scale test migration presents an opportune moment to delve into the specifics of your data profile. This includes gaining insights into the size and nature of your database. You can ascertain vital information such as the number of tables categorized by size—whether they are large, medium, or small. Additionally, you can analyze how AWS DMS handles intricate tasks like data type and character set conversions, which are essential for maintaining data integrity during migration.

Furthermore, this testing phase enables you to identify tables that include LOB columns. Understanding how these specific data elements are handled is crucial, because their migration often presents unique challenges.

Lastly, a full-scale test migration allows you to measure the time required to complete the migration process comprehensively. This data provides essential input for refining your migration strategy, enabling you to make informed decisions about the timing and implementation of the actual migration. Additionally, it’s important to thoroughly test the application migration cutover, ensuring a smoother transition during the actual migration.

In summary, conducting both small-scale and full-scale test migrations is pivotal for a successful database migration project. These tests empower you with in-depth knowledge about your existing data environment, enabling you to anticipate challenges, optimize processes, and ultimately ensure a seamless transition to your new database infrastructure.

The following diagram showcases the decision-making steps for selecting an AWS DMS instance. If you prefer to avoid managing DMS compute capacity, consider the AWS DMS Serverless option elaborated in part 2 of this series. Additionally, for homogenous migration, the AWS DMS homogenous migration feature offers a serverless alternative, which is also detailed in part 2 of this blog series.

For more information, refer to Selecting the best size for a replication instance.

Memory requirements for your migration task

Depending on the schema attributes and table data type columns, your memory requirements could be quite different. You can use the following parameters to estimate the amount of memory required for your migration task:

Number of LOB columns in each table – The average number of LOB columns in each table in your migration scope.

Maximum number of tables to load in parallel – The maximum number of tables that AWS DMS loads in parallel in one task. The default value is 8.

Size of the LOB chunks – The size of the LOB chunks, in KB, that AWS DMS uses to replicate data to the target database.

Commit rate during full load – The maximum number of records that AWS DMS can transfer in parallel. The default value is 10,000.

Maximum size of an individual LOB – The maximum size of an individual LOB, in KB.

Bulk array size – The maximum number of rows that are fetched or processed by your endpoint driver. This value depends on the driver settings. The default value is 1,000.

After you have figured out these values, you can apply one of the following approaches to estimate the memory needed for your migration task. The method you choose for the LOB column settings in your migration task determines which methods to use.

Full LOB mode – Required memory = (LOB columns) * (Maximum number of tables to load in parallel) * (LOB chunk size) * (Commit rate during full load)

Limited LOB mode – Required memory = (LOB columns) * (Maximum number of tables to load in parallel) * (LOB size) * (Bulk array size)

Let’s look at an example of a migration scenario. For this example, migrating a table with 1.5 billion records on Oracle took 60 hours. With the following parameter settings, we reduced the full load time from 60 hours to 3 hours and 45 minutes:

parallel-load – We used the parallel-load feature to divide the table into logical partitions by specifying column value ranges with 15 million values in each segment. This logical partition is only on the AWS DMS replication instance and not on the source or target database. See the following code:

MaxFullLoadSubTasks – We increased MaxFullLoadSubTasks from the default of 8 to 46 in the full load task settings.

CommitRate – We increased CommitRate from the default of 10,000 to 30,000 in the full load task settings.

Learn more about how you can speed up database migrations by using AWS DMS parallel load and filter options, in this blog post.

CPU requirements for your migration task

The following configurations directly affect CPU utilization on the AWS DMS instance. Therefore, it’s advisable to take these factors into account when determining whether a compute-heavy instance is necessary. Regular reviews are recommended.

Number of tables to load in parallel

Parallelism

AWS DMS validation jobs

Storage requirements

The following configurations directly affect disk utilization on the AWS DMS instance. You should take these factors into account when determining how much storage is required for your AWS DMS instance. Regular reviews are recommended whenever there is a relevant configuration change.

Full load is running for longer duration

AWS DMS debug mode (detailed logging level enabled)

When more IOPS is required (rare case)

Summary

In this post, we discussed database migration components, migration cost & its factors and how to select right type of AWS DMS instance which determines the capacity of DMS jobs.

In Part 2 of this series, we’ll focus on how to regularly evaluate the AWS DMS instance size and scale up or down based on requirements and how to cost optimize the migrations with AWS DMS features and configurations.

About the Authors

Shailesh K Mishra is working as Sr. Solutions Architect with the FSI team at Amazon Web Services and Area of Depth in Database and migrations. He focuses on database migrations to AWS and helping customers to build well-architected solutions.

Babaiah Valluru is working as Lead Consultant – Databases with the Professional Services team at AWS based out of Hyderabad, India and specializes in database migrations. In addition to helping customers in their transformation journey to cloud, his current passion is to explore and learn ML services. He has a keen interest in open source databases like MySQL, PostgreSQL and MongoDB. He likes to travel, and spend time with family and friends in his free time.

Read MoreAWS Database Blog