This is a guest post by Sudip Roy, Manager of Technical Staff at Cohere.

It’s an exciting day for the development community. Cohere’s state-of-the-art language AI is now available through Amazon SageMaker. This makes it easier for developers to deploy Cohere’s pre-trained generation language model to Amazon SageMaker, an end-to-end machine learning (ML) service. Developers, data scientists, and business analysts use Amazon SageMaker to build, train, and deploy ML models quickly and easily using its fully managed infrastructure, tools, and workflows.

At Cohere, the focus is on language. The company’s mission is to enable developers and businesses to add language AI to their technology stack and build game-changing applications with it. Cohere helps developers and businesses automate a wide range of tasks, such as copywriting, named entity recognition, paraphrasing, text summarization, and classification. The company builds and continually improves its general-purpose large language models (LLMs), making them accessible via a simple-to-use platform. Companies can use the models out of the box or tailor them to their particular needs using their own custom data.

Developers using SageMaker will have access to Cohere’s Medium generation language model. The Medium generation model excels at tasks that require fast responses, such as question answering, copywriting, or paraphrasing. The Medium model is deployed in containers that enable low-latency inference on a diverse set of hardware accelerators available on AWS, providing different cost and performance advantages for SageMaker customers.

“Amazon SageMaker provides the broadest and most comprehensive set of services that eliminate heavy lifting from each step of the machine learning process. We’re excited to offer Cohere’s general purpose large language model with Amazon SageMaker. Our joint customers can now leverage the broad range of Amazon SageMaker services and integrate Cohere’s model with their applications for accelerated time-to-value and faster innovation.”

-Rajneesh Singh, General Manager AI/ML at Amazon Web Services.

“As Cohere continues to push the boundaries of language AI, we are excited to join forces with Amazon SageMaker. This partnership will allow us to bring our advanced technology and innovative approach to an even wider audience, empowering developers and organizations around the world to harness the power of language AI and stay ahead of the curve in an increasingly competitive market.”

-Saurabh Baji, Senior Vice President of Engineering at Cohere.

The Cohere Medium generation language model, available through SageMaker, provides developers with three key benefits:

Build, iterate, and deploy quickly – Cohere empowers any developer (no NLP, ML, or AI expertise required) to quickly get access to a pre-trained, state-of-the-art generation model that understands context and semantics at unprecedented levels. This high-quality, large language model reduces the time-to-value for customers by providing an out-of-the-box solution for a wide range of language understanding tasks.

Private and secure – With SageMaker, customers can spin up containers serving Cohere’s models without having to worry about their data leaving these self-managed containers.

Speed and accuracy – Cohere’s Medium model offers customers a good balance across quality, cost, and latency. Developers can easily integrate the Cohere Generate endpoint into apps using a simple API and SDK.

Get started with Cohere in SageMaker

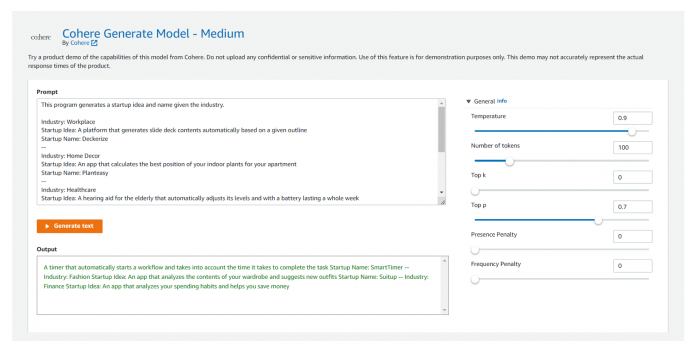

Developers can use the visual interface of SageMaker JumpStart foundation models to test Cohere’s models without writing a single line of code. You can evaluate the model on your specific language understanding task and learn the basics of using generative language models. See Cohere’s documentation and blog for tutorials and tips and tricks related to language modeling.

Deploy the SageMaker endpoint using a notebook

Cohere has packaged Medium models, along with an optimized, low-latency inference framework, in containers that can be deployed as SageMaker inference endpoints. Cohere’s containers can be deployed on a range of different instances (including ml.p3.2xlarge, ml.g5.xlarge, and ml.g5.2xlarge) that offer different cost/performance trade-offs. These containers are currently available in two Regions: us-east-1 and eu-west-1. Cohere intends to expand its offering in the near future, including adding to the number and size of models available, the set of supported tasks (such as the endpoints built on top of these models), the supported instances, and the available Regions.
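To make the deployment targets above concrete, the following sketch records the instance types and Regions this post names and checks a requested target before any deployment call is made. The constant names and the `check_deployment_target` helper are hypothetical. The sets reflect only what the post lists at the time of writing, and Cohere plans to expand them, so treat Cohere’s AWS Marketplace listing as the source of truth.

```python
# Hypothetical constants: only the instance types and Regions named in this post.
# The supported set is expected to grow; check Cohere's Marketplace listing.
SUPPORTED_INSTANCE_TYPES = frozenset({"ml.p3.2xlarge", "ml.g5.xlarge", "ml.g5.2xlarge"})
SUPPORTED_REGIONS = frozenset({"us-east-1", "eu-west-1"})


def check_deployment_target(region: str, instance_type: str) -> None:
    """Raise ValueError if the Region or instance type is not one listed in the post."""
    if region not in SUPPORTED_REGIONS:
        raise ValueError(
            f"Region {region!r} is not one of {sorted(SUPPORTED_REGIONS)}"
        )
    if instance_type not in SUPPORTED_INSTANCE_TYPES:
        raise ValueError(
            f"Instance type {instance_type!r} is not one of {sorted(SUPPORTED_INSTANCE_TYPES)}"
        )


# A supported combination passes silently; an unsupported one raises ValueError.
check_deployment_target("us-east-1", "ml.g5.xlarge")
```

Failing fast on the client side is cheaper than waiting for SageMaker to reject an endpoint configuration after it has started provisioning.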

To help developers get started quickly, Cohere has provided Jupyter notebooks that make it easy to deploy these containers and run inference on the deployed endpoints. With the notebook’s preconfigured set of constants, deploying the endpoint takes only a couple of lines of code.
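The notebook’s own deployment code is not reproduced here. As a rough sketch of what such a deployment involves, the following code uses the standard boto3 SageMaker API to turn a Marketplace model package into a real-time endpoint. This is not Cohere’s deployment helper: the model package ARN, IAM role ARN, and endpoint name are placeholders you would take from the notebook or your own account, and the `build_deployment_requests` and `deploy_endpoint` helpers are hypothetical.

```python
from typing import Any, Dict


def build_deployment_requests(
    model_package_arn: str,
    role_arn: str,
    endpoint_name: str,
    instance_type: str = "ml.g5.xlarge",
    instance_count: int = 1,
) -> Dict[str, Dict[str, Any]]:
    """Build keyword arguments for the three SageMaker calls that create an endpoint."""
    model_name = f"{endpoint_name}-model"
    config_name = f"{endpoint_name}-config"
    return {
        "create_model": {
            "ModelName": model_name,
            "ExecutionRoleArn": role_arn,
            # The container is created from the Marketplace model package.
            "PrimaryContainer": {"ModelPackageName": model_package_arn},
            # Network isolation blocks inbound and outbound network calls
            # from the model container.
            "EnableNetworkIsolation": True,
        },
        "create_endpoint_config": {
            "EndpointConfigName": config_name,
            "ProductionVariants": [
                {
                    "VariantName": "AllTraffic",
                    "ModelName": model_name,
                    "InstanceType": instance_type,
                    "InitialInstanceCount": instance_count,
                    "InitialVariantWeight": 1.0,
                }
            ],
        },
        "create_endpoint": {
            "EndpointName": endpoint_name,
            "EndpointConfigName": config_name,
        },
    }


def deploy_endpoint(requests: Dict[str, Dict[str, Any]], region: str) -> None:
    """Issue the calls in order and block until the endpoint is InService."""
    import boto3  # imported lazily so the request builder works without AWS access

    sm = boto3.client("sagemaker", region_name=region)
    sm.create_model(**requests["create_model"])
    sm.create_endpoint_config(**requests["create_endpoint_config"])
    sm.create_endpoint(**requests["create_endpoint"])
    sm.get_waiter("endpoint_in_service").wait(
        EndpointName=requests["create_endpoint"]["EndpointName"]
    )
```

With placeholder values, a call looks like `deploy_endpoint(build_deployment_requests(package_arn, role_arn, "cohere-medium"), region="us-east-1")`. Keeping the request construction separate from the AWS calls makes the configuration easy to inspect before anything is provisioned. A running endpoint is billed while it is up, so remove it with the SageMaker client’s `delete_endpoint` call when you are finished.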

After the endpoint is deployed, users can run inference with Cohere’s SDK, which can be installed from PyPI.

It can also be installed from the source code in Cohere’s public SDK GitHub repository.

Once the endpoint is running, users can call the Cohere Generate endpoint for a range of generative tasks, such as text summarization, long-form content generation, entity extraction, or copywriting. The Jupyter notebook and GitHub repository include examples demonstrating some of these use cases.
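The post does not spell out the request format the Cohere container expects, so the following is only an outline. It fills a prompt template for one of the tasks mentioned above, serializes it as JSON, and sends it with the generic SageMaker runtime `invoke_endpoint` call. The prompt templates, the helper names, and the payload fields (`prompt`, `max_tokens`, `temperature`) are assumptions for illustration; use Cohere’s SDK or notebook for the actual request schema.

```python
import json
from typing import Any, Dict

# Hypothetical prompt templates for a few of the tasks named above.
PROMPT_TEMPLATES = {
    "summarize": "Summarize the following text in two sentences:\n\n{text}\n\nSummary:",
    "extract_entities": "List the people, organizations, and places mentioned in:\n\n{text}\n\nEntities:",
    "copywrite": "Write a short product description for:\n\n{text}\n\nDescription:",
}


def build_generate_body(
    task: str, text: str, max_tokens: int = 100, temperature: float = 0.7
) -> bytes:
    """Fill a task template and serialize an (assumed) generation request as JSON bytes."""
    if task not in PROMPT_TEMPLATES:
        raise ValueError(f"unknown task {task!r}; expected one of {sorted(PROMPT_TEMPLATES)}")
    payload: Dict[str, Any] = {
        "prompt": PROMPT_TEMPLATES[task].format(text=text),  # assumed field name
        "max_tokens": max_tokens,  # assumed field name
        "temperature": temperature,  # assumed field name
    }
    return json.dumps(payload).encode("utf-8")


def invoke(endpoint_name: str, body: bytes, region: str) -> Dict[str, Any]:
    """Send the body to a deployed endpoint and decode its JSON response."""
    import boto3  # lazy import: building request bodies needs no AWS access

    runtime = boto3.client("sagemaker-runtime", region_name=region)
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Accept="application/json",
        Body=body,
    )
    return json.loads(response["Body"].read())
```

For example, `invoke("cohere-medium", build_generate_body("summarize", article_text), region="us-east-1")` would request a summary from an endpoint with that (placeholder) name. Switching tasks only changes the prompt, which is the point the post makes about a single Generate endpoint serving many use cases.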

Conclusion

The availability of Cohere natively on SageMaker via the AWS Marketplace represents a major milestone in the field of NLP. The Cohere model’s ability to generate high-quality, coherent text makes it a valuable tool for anyone working with text data.

If you’re interested in using Cohere for your own SageMaker projects, you can now access it on SageMaker JumpStart. Additionally, you can reference Cohere’s GitHub notebook for instructions on deploying the model and accessing it from the Cohere Generate endpoint.

About the authors

Sudip Roy is Manager of Technical Staff at Cohere, a provider of cutting-edge natural language processing (NLP) technology. Sudip is an accomplished researcher who has published and served on program committees for top conferences like NeurIPS, MLSys, OOPSLA, SIGMOD, VLDB, and SIGKDD, and his work has earned Outstanding Paper awards from SIGMOD and MLSys.

Karthik Bharathy is the product leader for the Amazon SageMaker team with over a decade of product management, product strategy, execution, and launch experience.

Karl Albertsen leads product, engineering, and science for Amazon SageMaker Algorithms and JumpStart, SageMaker’s machine learning hub. He is passionate about applying machine learning to unlock business value.
