We have never seen a tool spark a proliferation of open source products the way Kubernetes has. These building blocks are what make Kubernetes the de facto container orchestrator for running services securely and reliably. Organizations rarely run Kubernetes on its own; it is usually deployed alongside other tools that solve real business challenges Kubernetes doesn’t natively address. Google offers a managed collection of these products under the Anthos umbrella.

In this article, we will demonstrate how organizations can leverage Anthos to centralize the management of internet traffic using Multi Cluster Ingress (MCI) and Anthos Service Mesh (ASM).

Problem statement

For large organizations, separation of duties is a major concern, and it drives the design of the cloud foundation. Projects are the boundary used to build a least-privilege strategy for most of the resources Google Cloud Platform provides. Hence, it’s fair to assume that separate teams have separate projects to run their workloads.

This separation of duties also applies to network management. Shared VPC is one of the tools teams can use to manage networking centrally: it allows multiple projects to share the same VPC. Sharing a VPC is also required for some Anthos features.

Applications running in Kubernetes sometimes need to be exposed to the internet, which can be achieved with a load balancer. The lifecycle and configuration of the load balancer are managed through annotations and custom resource definitions (CRDs). MCI is a managed controller that manages the lifecycle of Google Cloud Global Load Balancer (GLBC) in order to expose services running on Kubernetes to the internet. Unfortunately, GLBC doesn’t support cross-project backends. Since projects are the foundation of a least-privilege strategy, one solution is to deploy a GLBC per project, but this creates substantial management overhead for the network and security teams. The second solution is to deploy one GLBC using MCI and use ASM to route the traffic across projects. This also lets security teams achieve the required separation of duties, with a clean split of responsibilities that minimizes confusion and accidental configuration errors.

Now that we have covered the problem we are solving and the tools we are going to use, let’s dive into how we are going to do it.

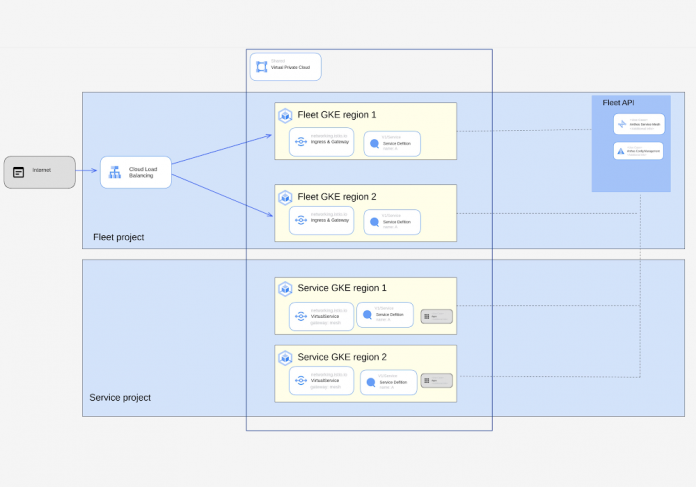

Architecture Diagram

This architecture shows the suggested solution to support MCI in a multi-project setup. Both the fleet project and the service project share the same VPC. The shared VPC host project is not depicted in the picture, but it’s a separate project. The fleet project is the project where the Fleet API is enabled. All the clusters that are part of the same fleet are also part of the same mesh. One of the fleet GKE clusters hosts the MCI configuration, but the configuration is deployed in both fleet GKE clusters for high availability. Deploying the same configuration in both clusters makes it easy to swap the configuration cluster.

The service projects, on the other hand, host workloads. Development teams have access to a namespace where they deploy the services they manage.

How to Implement this Solution

To implement this solution, a deep understanding of how the traffic flows is key. The following steps describe the process to make sure everything is working. Before starting, take the following actions:

Make sure MCI is already set up.

Make sure managed ASM is enabled.

```shell
$ kubectl -n istio-system get controlplanerevision
NAME                 RECONCILED   STALLED   AGE
asm-managed-stable   True         False     34d
```
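The readiness check above can also be scripted. The following is a minimal sketch, not part of the original procedure: the helper name `asm_is_healthy` is hypothetical, and it only assumes the four-column table layout shown in the sample output (NAME, RECONCILED, STALLED, AGE).

```shell
# Succeeds only if every control plane revision in the table read from
# stdin reports RECONCILED=True and STALLED=False. An empty table
# (header only, or no output at all) is treated as a failure.
asm_is_healthy() {
  awk 'NR > 1 { seen = 1; if ($2 != "True" || $3 != "False") bad = 1 }
       END { exit (seen && !bad) ? 0 : 1 }'
}

# Usage against a live cluster (needs cluster access, so it is commented out):
# kubectl -n istio-system get controlplanerevision | asm_is_healthy \
#   && echo "managed ASM is ready"
```

Reading the table from stdin keeps the check independent of how the output was obtained, so it can be reused for each cluster in the fleet.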

Create a namespace for ingress gateway and other resources.

```shell
$ export INGRESS_NS=ingress
$ export ASM_REVISION=$(kubectl -n istio-system get controlplanerevision | awk '/asm/ {print $1}')
$ cat > ns_ingress.yaml << EOF
apiVersion: v1
kind: Namespace
metadata:
  labels:
    istio.io/rev: ${ASM_REVISION}
  name: ${INGRESS_NS}
EOF
```

In the ingress namespace, create the ingress deployment and the ingress gateway. These should only be deployed in the Fleet GKE clusters.
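The gateway manifests themselves are not reproduced in this article. As a rough illustration only, an Istio `Gateway` for this setup might look like the sketch below. The name `ingressgateway` is an assumption taken from the gateway reference used by the virtual service later on, the `asm: ingressgateway` selector mirrors the one used by the multi cluster service, and the file name `gateway.yaml`, the port, and the wildcard host are hypothetical choices. The gateway deployment is not shown and must carry the same `asm: ingressgateway` label.

```shell
export INGRESS_NS=ingress

# Write a minimal Gateway that accepts plain HTTP on port 80.
cat > gateway.yaml << EOF
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: ingressgateway        # assumed name
  namespace: ${INGRESS_NS}
spec:
  selector:
    asm: ingressgateway       # must match the labels on the gateway pods
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "*"                     # any host; virtual services narrow this down
EOF
```

Whatever namespace and name are chosen here, the `gateways` entry of the ingress virtual service has to reference the same `namespace/name` pair.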

Deploy the multi cluster service resource and use the selector that sends the traffic to the ingress controller that was deployed. The cluster spec should only link Fleet GKE clusters because this is where the ASM ingress resources are deployed. The multi cluster service resource creates Network Endpoint Groups (NEG).

```shell
$ export INGRESS_NS=ingress
$ cat > mcs.yaml << EOF
apiVersion: networking.gke.io/v1
kind: MultiClusterService
metadata:
  annotations:
    networking.gke.io/app-protocols: '{"http":"HTTP"}'
  name: mcs-service
  namespace: ${INGRESS_NS}
spec:
  clusters:
  - link: us-east4/fleetclustereast
  - link: us-central1/fleetclustercentral
  template:
    spec:
      ports:
      - name: https
        port: 80
        protocol: TCP
        targetPort: 80
      selector:
        asm: ingressgateway # Same selector defined in the ingress controller
EOF
```

To create the GLBC, a multicluster ingress resource should be deployed.

```shell
$ cat > mci.yaml << EOF
apiVersion: networking.gke.io/v1
kind: MultiClusterIngress
metadata:
  name: mci
spec:
  template:
    spec:
      backend:
        serviceName: mcs-service # name of the Multi Cluster Service
        servicePort: 80
      rules:
      - host: "store.store.svc.cluster.local"
        http:
          paths:
          - backend:
              serviceName: mcs-service
              servicePort: 80
EOF
```

Create a namespace for the store workload, labeled with the ASM revision.

```shell
$ export STORE_NS=store
$ export ASM_REVISION=$(kubectl -n istio-system get controlplanerevision | awk '/asm/ {print $1}')
$ cat > ns_store.yaml << EOF
apiVersion: v1
kind: Namespace
metadata:
  labels:
    istio.io/rev: ${ASM_REVISION}
  name: ${STORE_NS}
EOF
```

Create a virtual service bound to the gateway deployed for MCI. The gateways field combines the namespace and the name of the gateway to use, in the form namespace/name. Create this virtual service in the fleet GKE clusters.

```shell
$ export STORE_NS=store
$ cat > ingress_virtual_service.yaml << EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: frontend
  namespace: ${STORE_NS}
spec:
  hosts:
  - "store.store.svc.cluster.local"
  gateways:
  - asmingress/ingressgateway
  http:
  - route:
    - destination:
        host: store
        port:
          number: 80
EOF
```

Create the second virtual service in the cluster where the workload is running. Unlike the first virtual service, it uses the mesh gateway, because the ingress gateway is deployed in a different project.

```shell
$ export STORE_NS=store
$ cat > virtual_service.yaml << EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: frontend
  namespace: ${STORE_NS}
spec:
  hosts:
  - store.store.svc.cluster.local
  gateways:
  - mesh
  http:
  - route:
    - destination:
        host: store
        port:
          number: 80
EOF
```

Deploy the workload in the service GKE clusters.

```shell
$ export STORE_NS=store
$ cat > store.yaml << EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: store
  namespace: ${STORE_NS}
spec:
  replicas: 2
  selector:
    matchLabels:
      app: store
      version: v1
  template:
    metadata:
      labels:
        app: store
        version: v1
    spec:
      containers:
      - name: whereami
        image: us-docker.pkg.dev/google-samples/containers/gke/whereami:v1.2.11
        ports:
        - containerPort: 8080
EOF
```

Deploy the service for the deployment in both the Fleet GKE clusters and the service clusters. This is required for service discovery.

```shell
$ export STORE_NS=store
$ cat > store_svc.yaml << EOF
apiVersion: v1
kind: Service
metadata:
  name: store
  namespace: ${STORE_NS}
spec:
  selector:
    app: store
  ports:
  - port: 80
    targetPort: 8080
EOF
```
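The steps in this article write manifests to disk, and several of them have to be applied to more than one cluster. A small helper along the following lines could do that. It is only a sketch: the function name `apply_to_contexts` and the kubectl context names are hypothetical, and it prints the commands by default (`DRY_RUN=1`) instead of running them.

```shell
# Apply one manifest to every kubectl context passed as an argument.
# With DRY_RUN=1 (the default here) the commands are only printed.
apply_to_contexts() {
  local manifest="$1"
  shift
  local ctx
  for ctx in "$@"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "kubectl --context ${ctx} apply -f ${manifest}"
    else
      kubectl --context "${ctx}" apply -f "${manifest}"
    fi
  done
}

# Hypothetical context names: two fleet clusters and one service cluster.
apply_to_contexts store_svc.yaml fleet-east fleet-central service-east
```

Setting `DRY_RUN=0` runs the same commands for real. Resources that belong only in the fleet clusters, such as the ingress virtual service, would be passed only the fleet contexts.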

The last step is to test that the service responds, for example with curl:

```shell
$ curl --header "Host: store.store.svc.cluster.local" http://${GLBC_IP} -v
```
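The curl command assumes that `GLBC_IP` already holds the virtual IP of the load balancer, which can take a while to be provisioned. One way to populate it is sketched below. The helper names `wait_for_value` and `get_vip` are hypothetical, and the sketch assumes that the MultiClusterIngress named `mci` was applied in the ingress namespace and reports its address in the `status.VIP` field.

```shell
# Retry a command until it prints a non-empty value, then echo that value.
# $1 = maximum number of attempts; remaining arguments = the command to run.
wait_for_value() {
  local attempts="$1"
  shift
  local i=0
  local value=""
  while [ "$i" -lt "$attempts" ]; do
    value="$("$@" 2>/dev/null)" || value=""
    if [ -n "$value" ]; then
      echo "$value"
      return 0
    fi
    i=$((i + 1))
    sleep "${POLL_INTERVAL:-10}"
  done
  return 1
}

# Assumption: the MultiClusterIngress lives in the ingress namespace and
# exposes its address as .status.VIP.
get_vip() {
  kubectl -n "${INGRESS_NS:-ingress}" get multiclusteringress mci \
    -o jsonpath='{.status.VIP}'
}

# Usage (needs cluster access, so it is left commented out):
# export GLBC_IP="$(wait_for_value 30 get_vip)"
```

Polling rather than reading the field once avoids running curl against an empty address while the load balancer is still being created.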

Conclusion

In this blog post, we have seen that even though MCI doesn’t support backends in different projects, we can overcome this limitation using ASM. The combination of both products makes it easy to centralize traffic management and reuse the same GLBC for workloads running in different projects. Make sure to check the GKE Network Recipes repository for more GKE ingress examples.
