One of the best ways to share, reuse, and scale your ML workflows is to run them as pipelines. To get the most out of them, build these pipelines so that runs are easy to reproduce and produce similar results, as described in the paper “Hidden Technical Debt in Machine Learning Systems”.

We are excited to announce support for pipeline templates on Vertex AI Pipelines. This blog post demonstrates how to create, upload, and (re)use end-to-end pipeline templates using the Kubeflow Pipelines (KFP) SDK registry client (RegistryClient), Artifact Registry, and Vertex AI Pipelines.

Understanding Pipeline Templates

A pipeline template is a resource that you can use to publish a workflow definition so that it can be reused. The KFP RegistryClient is a new client interface that you can use with a compatible registry server, such as Artifact Registry, for version control of your Kubeflow Pipelines templates. Using Artifact Registry makes it easier for your organization to:

Publish workflow definitions, which can be executed by other users

Store, manage, and control workflow definitions

Discover workflows

Building a Reusable Pipeline Template

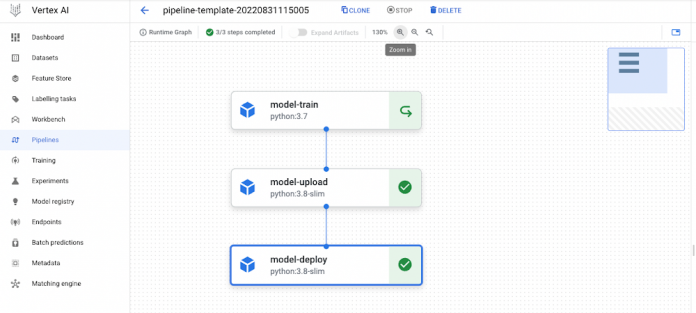

You can build, upload, and use a template in just a few steps. Let’s take a look at building a simple, three-step pipeline. We will then register and upload the template to Artifact Registry, from where we can run it using Vertex AI Pipelines. The Notebook can be found on our Google Cloud Github repository. The end result will look like this:

Step 1: Create an Artifact Repository

The first step is to create a repository in Artifact Registry. This is where you store and track template artifacts. When you create the repository, make sure that you set its format to Kubeflow Pipelines. Once the repository is created, it appears in the repository list in the Artifact Registry console.
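If you prefer to script this step, the sketch below creates a KFP-format repository with the Artifact Registry Python client. It assumes the google-cloud-artifact-registry package is installed and that your credentials can create repositories; the project, region, and repository names are placeholders, and the description text is illustrative.

```python
def kfp_repository_parent(project_id: str, region: str) -> str:
    """Returns the Artifact Registry parent resource name for a region."""
    return f"projects/{project_id}/locations/{region}"


def create_kfp_repository(project_id: str, region: str, repo_name: str) -> str:
    """Creates an Artifact Registry repository in Kubeflow Pipelines (KFP) format.

    Returns the full resource name of the new repository.
    """
    # Imported here so the path helper above works without the client library.
    from google.cloud import artifactregistry_v1

    client = artifactregistry_v1.ArtifactRegistryClient()
    repository = artifactregistry_v1.Repository(
        # KFP format is what makes the repository accept pipeline templates.
        format_=artifactregistry_v1.Repository.Format.KFP,
        description="Kubeflow Pipelines templates",
    )
    operation = client.create_repository(
        parent=kfp_repository_parent(project_id, region),
        repository_id=repo_name,
        repository=repository,
    )
    # create_repository is a long-running operation; block until it finishes.
    return operation.result().name
```

For example, `create_kfp_repository("my-project", "us-central1", "quickstart-kfp-repo")` would create the repository and return its resource name.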

Step 2: Build a Pipeline

Now it’s time to build and compile an end-to-end pipeline using the KFP DSL. The pipeline in this example has two steps (custom components):

Train a TensorFlow model.

Upload the trained model to the Vertex AI Model Registry.

(Of course, you can build your own, more advanced pipelines depending on your use case.) Once you have finalized your pipeline, use the KFP compiler to generate the workflow YAML file, template-pipeline.yaml.

Step 3: Upload the Template

After you have compiled the pipeline, configure a RegistryClient that points at the repository you created in Artifact Registry.
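A minimal sketch of this configuration step, assuming kfp 2.x is installed and Application Default Credentials are available (for example, after running `gcloud auth application-default login`). The host follows the `https://REGION-kfp.pkg.dev/PROJECT_ID/REPO_NAME` pattern that Artifact Registry uses for KFP repositories; the project, region, and repository names are placeholders.

```python
def kfp_registry_host(project_id: str, region: str, repo_name: str) -> str:
    """Builds the KFP host URL of an Artifact Registry repository."""
    return f"https://{region}-kfp.pkg.dev/{project_id}/{repo_name}"


def make_registry_client(project_id: str, region: str, repo_name: str):
    """Returns a kfp RegistryClient pointed at the repository."""
    # Imported here so the URL helper above works without kfp installed.
    from kfp.registry import RegistryClient

    return RegistryClient(host=kfp_registry_host(project_id, region, repo_name))
```

Keeping the URL construction in its own function makes it easy to reuse the same host string when you later build template URIs for runs.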

Now you’re ready to upload the workflow YAML, in this case template-pipeline.yaml, to the repository that you created earlier. To do this, call client.upload_pipeline(), which uploads the file as a template version and can optionally attach tags to it.
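A sketch of the upload call, assuming `client` is a RegistryClient configured as described above. `upload_pipeline()` accepts the YAML file name, optional tags, and optional extra headers such as a description, and returns the package (template) name and the new version name; the tag values and description here are illustrative.

```python
def upload_template(client, file_name: str = "template-pipeline.yaml", tags=("v1", "latest")):
    """Uploads a compiled pipeline YAML and returns (template_name, version_name)."""
    template_name, version_name = client.upload_pipeline(
        file_name=file_name,
        tags=list(tags),
        # Optional human-readable description stored with the template.
        extra_headers={"description": "Train a TensorFlow model and register it."},
    )
    return template_name, version_name
```

Tags give runners a stable, readable reference (such as `latest`) in addition to the immutable version name.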

To find the template that you uploaded, navigate to the Pipelines tab in Vertex AI Pipelines, where it is listed with your other templates.

You can use this central repository to store, manage, and track all of your pipeline templates in one place.
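To manage templates programmatically as well, the sketch below inventories the repository with the RegistryClient `list_packages()` and `list_tags()` methods. It assumes `client` is a configured RegistryClient and that the returned dictionaries carry Artifact Registry’s `name` field as a full resource path; verify the response shapes against your kfp version.

```python
def describe_templates(client) -> dict:
    """Maps each template (package) in the repository to its sorted tag names."""
    summary = {}
    for package in client.list_packages():
        # Resource names end in .../packages/<package>; keep only the short name.
        package_name = package["name"].split("/")[-1]
        tags = client.list_tags(package_name)
        summary[package_name] = sorted(tag["name"].split("/")[-1] for tag in tags)
    return summary
```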

Step 4: Reuse the Template in Vertex AI Pipelines Using the Vertex AI SDK

The main advantage of storing your pipeline templates in a central repository is that they are easy to share and reuse, for example, in Vertex AI Pipelines.

To run (reuse) your pipeline template in Vertex AI Pipelines, you first need to create a Cloud Storage bucket for staging pipeline runs. Then, use the Vertex AI SDK to create a pipeline run from your template in the artifact repository.
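A minimal sketch of creating a run from a template with the Vertex AI SDK, assuming google-cloud-aiplatform is installed and the staging bucket already exists. `template_path` accepts an Artifact Registry URI ending in either a tag (such as `latest`) or a version name; the display name and pipeline root layout are illustrative choices, and `parameter_values` must match the parameters your pipeline declares.

```python
def template_uri(project_id, region, repo_name, package_name, tag="latest"):
    """Builds the Artifact Registry URI of a template tag or version."""
    return f"https://{region}-kfp.pkg.dev/{project_id}/{repo_name}/{package_name}/{tag}"


def run_template(project_id, region, repo_name, package_name, staging_bucket,
                 parameter_values, tag="latest"):
    """Submits a Vertex AI pipeline run from a template and returns the job."""
    from google.cloud import aiplatform

    aiplatform.init(project=project_id, location=region, staging_bucket=staging_bucket)
    job = aiplatform.PipelineJob(
        display_name=f"{package_name}-run",
        template_path=template_uri(project_id, region, repo_name, package_name, tag),
        pipeline_root=f"{staging_bucket}/pipeline_root",  # where run artifacts are written
        parameter_values=parameter_values,
    )
    # submit() returns immediately; use job.run() instead to wait for completion.
    job.submit()
    return job
```

For example, `run_template("my-project", "us-central1", "quickstart-kfp-repo", "template-pipeline", "gs://my-staging-bucket", {"project": "my-project"})` would start a run of the `latest` tag, assuming the pipeline takes a `project` parameter.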

You can view the runs created from a specific template version using the Vertex AI SDK for Python. To list those pipeline runs, call the PipelineJob.list() method.
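The sketch below narrows `PipelineJob.list()` to a single template version. The filter string, which matches on `template_uri` and `template_metadata.version`, follows the pattern used in the Vertex AI documentation for listing runs by template version; treat the exact field names as an assumption to double-check. The `version` argument is the version name returned by the upload (for example, `sha256:...`).

```python
def runs_filter(project_id, region, repo_name, package_name, version):
    """Builds a filter that matches runs created from one template version."""
    prefix = f"https://{region}-kfp.pkg.dev/{project_id}/{repo_name}/{package_name}"
    return f'template_uri:"{prefix}/*" AND template_metadata.version="{version}"'


def list_template_runs(project_id, region, repo_name, package_name, version):
    """Returns the PipelineJob objects created from the given template version."""
    from google.cloud import aiplatform

    aiplatform.init(project=project_id, location=region)
    return aiplatform.PipelineJob.list(
        filter=runs_filter(project_id, region, repo_name, package_name, version)
    )
```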

You can also see the pipeline run by navigating to the Runs tab in the Vertex Pipelines UI. Click the pipeline run to see its topology and progress.

Optional: Reuse the Template in Vertex AI Pipelines Via the UI

If you prefer, you can also reuse your pipeline templates from the Vertex Pipelines UI. First, navigate to the Pipelines tab.

Next, select the pipeline that you want to run and click Create run. In the Create Pipeline Run window, set the details and runtime configuration, and then submit your pipeline run.

What’s Next

Now that you know how to build, upload, and reuse a pipeline template, you’re ready to start deploying some pipeline templates of your own! You can find more information in our docs, or learn more about Vertex AI Pipelines in this Codelab.

Thank you for reading! Have a question or want to chat? Find Erwin on Twitter or LinkedIn.
