In the first three posts of this four-part series, you learned how the choice of zonal or Regional services affects availability, and about some important characteristics of Amazon DynamoDB when used in a multi-Region context with global tables. Part 1 covered the motivation for using multiple Regions, Part 2 discussed important characteristics of DynamoDB, and in Part 3, I presented a design pattern for building a resilient multi-Region application using global tables. In this post, you’ll learn about operational concerns for a multi-Region deployment, including observability, deployment pipelines, and runbooks.

Observability

In this section, I discuss operational concerns regarding observability, including canary metrics and dashboards.

Canaries

In any multi-Region design, observability is a key requirement. You need to understand how your application is behaving in order to decide when to fail over or shift traffic. The volume of observability information available to you through built-in Amazon CloudWatch metrics is quite large. The problem for an operations team during an event is often too much data, not too little, and the problem is exacerbated when AWS services see intermittent failures or elevated error rates. During a period when an event causes your workload to be degraded, you might see periods of higher response latency and elevated error rates from AWS services. Things just seem off and it’s hard to get a complete and clear picture of the situation.

That’s why synthetic metrics, or canaries, are so useful. CloudWatch Synthetics lets you emulate what users do on the platform and see how the platform responds. Canaries can give you an indication of what your end-users are seeing when they actually use your application. For example, say you get an alarm that the memory usage in an AWS Lambda function is anomalously high. Should you be concerned? If a canary alarm reports that users are seeing excessively high response times from the application, then yes: the memory usage alarm is giving you a potential clue to the root cause. If everything looks fine to the end-users, then high Lambda memory usage is a more routine item to track down later. (It poses a cost concern if nothing else.)
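To make the idea of a synthetic metric concrete, here is a minimal sketch of what a canary measures: it repeats one scripted user action and summarizes the success rate and latency a user would have experienced. This is not the CloudWatch Synthetics runtime API; `run_canary` and the `probe` callable are hypothetical names, and `probe` stands in for a real request to your application endpoint.

```python
import statistics
import time
from typing import Callable


def run_canary(probe: Callable[[], bool], runs: int = 20) -> dict:
    """Call `probe` repeatedly and summarize what a user would have seen.

    `probe` is a hypothetical stand-in for one scripted user action (for
    example, an HTTPS request to the application endpoint). It returns True
    on success and False on failure; an exception also counts as a failure.
    """
    latencies_ms = []
    successes = 0
    for _ in range(runs):
        start = time.perf_counter()
        try:
            ok = bool(probe())
        except Exception:
            ok = False  # an error seen by the user is a failed run
        latencies_ms.append((time.perf_counter() - start) * 1000)
        successes += ok
    return {
        "success_rate": successes / runs,
        "p50_ms": statistics.median(latencies_ms),
        "max_ms": max(latencies_ms),
    }
```

In a real deployment, the success rate and latency would be published as metrics and alarmed on, so that a component alarm (like high Lambda memory) can be read against what users are actually experiencing.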

The need for an external point of view

One tricky problem that’s not often discussed is how to handle a Region-scoped problem that impacts your monitoring system itself. If your observability tools are offline or behaving erroneously, you’re operating without your diagnostic tools.

That’s why it’s useful to deploy some of your monitoring suite in a Region that your application doesn’t use. Let’s say that your application runs in us-east-1 and us-west-2, and you deploy canaries in both Regions. Now let’s say that us-west-2 is experiencing a Region-scoped problem that impacts the canary in that Region, making it report a healthy experience even though the application is actually operating in a degraded state. Should you evacuate traffic from us-west-2?

If you also deploy a canary in us-east-2 that measures latency and success rates for calls from us-east-2 to the Regional application endpoints in us-west-2 and us-east-1, and to the Amazon Route 53 DNS endpoint used globally, you’ll get a clearer picture. Suppose the canaries in your observer Region report that the application is healthy in us-east-1, but that the Regional endpoint in us-west-2 gives unusually high latency and intermittent failures. That gives you more confidence to decide that the application is degraded in us-west-2, and you can either troubleshoot or evacuate that Region.
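The reasoning above can be expressed as a small decision rule: when judging a Region, trust the canaries that call it from outside more than the canary running inside it. The following is a minimal sketch under assumed thresholds; `CanaryResult`, `region_looks_degraded`, and the default limits are hypothetical and would need tuning against your own baseline.

```python
from dataclasses import dataclass


@dataclass
class CanaryResult:
    source_region: str   # Region where the canary runs
    target_region: str   # Regional endpoint the canary calls
    success_rate: float  # 0.0 to 1.0
    p99_ms: float        # 99th percentile latency in milliseconds


def region_looks_degraded(results, target, min_success=0.99, max_p99_ms=500.0):
    """Return True if canaries outside `target` see it as unhealthy.

    Canaries running inside the target Region are ignored here, because a
    Region-scoped event might make them report a false "healthy".
    Thresholds are hypothetical examples.
    """
    external = [r for r in results
                if r.target_region == target and r.source_region != target]
    if not external:
        return False  # no outside view; don't act on this signal alone
    bad = [r for r in external
           if r.success_rate < min_success or r.p99_ms > max_p99_ms]
    # Require a majority of external observers to agree.
    return len(bad) * 2 > len(external)
```

With the scenario above, the in-Region canary in us-west-2 reports healthy, but the us-east-2 observer sees failures and high latency, so the function flags us-west-2 and not us-east-1.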

Supporting metrics

Canaries provide a user-level picture of how an application is working. But you still need to gather more detailed metrics. In the sample application, you want to collect error rates and response times from Amazon API Gateway. You want to gather memory usage, CPU usage, response times, and error rates from the Lambda functions. From DynamoDB, you want to collect metrics like ReplicationLatency and SuccessfulRequestLatency for read and write operations. That’s just a partial list. You can also create custom CloudWatch metrics that monitor the table read and write times as observed by the database client. For more information about client-level metrics, refer to Instrumenting distributed systems for operational visibility.
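A custom client-level metric can be as simple as timing each database call in the client and publishing the samples. The sketch below records latencies with a decorator and shapes them as `MetricData` entries in the format accepted by CloudWatch `PutMetricData`. The metric name `ClientLatency`, the dimension names, and the helper names are assumptions for illustration; the code only builds the payload and does not call AWS.

```python
import time
from functools import wraps

# Operation name -> client-observed latency samples in milliseconds.
LATENCIES_MS: dict[str, list[float]] = {}


def timed(operation: str):
    """Decorator that records client-observed latency for a database call."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            finally:
                # Record the latency whether the call succeeded or raised.
                elapsed = (time.perf_counter() - start) * 1000
                LATENCIES_MS.setdefault(operation, []).append(elapsed)
        return wrapper
    return decorator


def metric_data(region: str) -> list[dict]:
    """Shape collected samples as MetricData entries for PutMetricData."""
    return [
        {
            "MetricName": "ClientLatency",  # hypothetical metric name
            "Dimensions": [{"Name": "Operation", "Value": op},
                           {"Name": "Region", "Value": region}],
            "Values": samples,
            "Unit": "Milliseconds",
        }
        for op, samples in sorted(LATENCIES_MS.items())
    ]
```

You would then pass the result to `put_metric_data(Namespace=..., MetricData=...)` on a boto3 CloudWatch client, batching entries to stay within the API’s per-request limits.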

Amazon Route 53 Application Recovery Controller (Route 53 ARC) also does some basic monitoring of resources through its readiness checks. These checks look for inconsistencies between resources deployed in two fault domains (Regions in our case). For example, Route 53 ARC checks to see if the AWS service quotas in one Region are different from another Region. That’s the type of surprise that can cause real difficulty during an event. Although the Route 53 ARC readiness checks are quite useful, they shouldn’t be used as a standalone solution or to drive automatic rebalancing.
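To illustrate the kind of inconsistency a readiness check looks for, here is a minimal sketch that compares quota values across Regions and reports any that differ. This is not how Route 53 ARC is implemented; `quota_mismatches` is a hypothetical helper, and the quota values in the example are made up. In practice, you might collect real values with the Service Quotas `get_service_quota` API.

```python
from typing import Optional


def quota_mismatches(
    quotas_by_region: dict[str, dict[str, float]],
) -> dict[str, dict[str, Optional[float]]]:
    """Return quotas whose values differ between Regions.

    `quotas_by_region` maps Region -> {quota name: value}. A quota that is
    missing from a Region is reported as None for that Region.
    """
    names = set().union(*(q.keys() for q in quotas_by_region.values()))
    mismatches: dict[str, dict[str, Optional[float]]] = {}
    for name in sorted(names):
        values = {region: q.get(name) for region, q in quotas_by_region.items()}
        if len(set(values.values())) > 1:
            mismatches[name] = values
    return mismatches
```

For example, if us-east-1 allows 3,000 concurrent Lambda executions but us-west-2 allows only 1,000, the mismatch surfaces before an event forces all traffic into the Region with the lower limit.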

The number of alarms you should have in place can be surprisingly large. If you run the example application through AWS Resilience Hub, for example, you’ll get a list of 46 recommended alarms. Use them along with alarms based on your canaries and custom metrics, and practice how to respond. Run a chaos engineering exercise to make sure you know what those alarms mean and when your application reaches the tipping point of needing to fail over (or at least when to disable one Region).

A combined picture

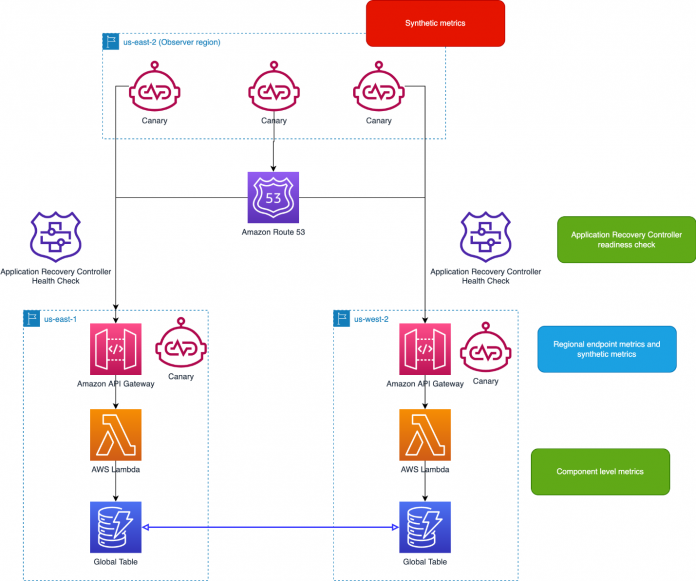

Figure 1 shows a combined picture of the different types of monitoring I’ve discussed in this section. The synthetic metrics are the most useful for making traffic rebalancing decisions. Regional endpoint metrics and synthetic metrics (in the blue box) are important for evaluating the health of a single Region. The Route 53 ARC readiness checks and component-level metrics (in the green boxes) are useful for more in-depth diagnostics.

Figure 1: Sample application deployed in two Regions with multiple types of metrics

Dashboards

The sample code in GitHub builds a CloudWatch dashboard in each Region showing metrics such as ReplicationLatency and successful canary runs. You can put an anomaly detection band on the charts to help you identify outliers visually, and set alarms on anomaly detection bands as well.
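CloudWatch anomaly detection trains a model on a metric’s history, including seasonal patterns. As a much simpler stand-in that only shows the concept of a band, the sketch below computes a static mean plus or minus k standard deviations and flags points outside it. This is a swapped-in technique, not CloudWatch’s algorithm; the function names and the value of `k` are assumptions.

```python
import statistics


def anomaly_band(history: list[float], k: float = 3.0) -> tuple[float, float]:
    """Return (low, high) = mean -/+ k population standard deviations."""
    mean = statistics.fmean(history)
    sd = statistics.pstdev(history)
    return mean - k * sd, mean + k * sd


def outliers(history: list[float], recent: list[float], k: float = 3.0) -> list[float]:
    """Return the recent data points that fall outside the band."""
    low, high = anomaly_band(history, k)
    return [x for x in recent if x < low or x > high]
```

For example, if a metric such as ReplicationLatency has hovered around 100 ms, a sudden 250 ms data point lands outside the band and stands out on the chart, which is the visual cue the anomaly detection band gives you.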

You can add other metrics you want to monitor. For a more in-depth discussion of building useful dashboards, refer to Building dashboards for operational visibility. The article also discusses building dashboards for higher-level metrics, like canary runs, compared to dashboards for lower-level metrics.

Deployment pipeline

You must have a way to deploy the application into all Regions. This implies that your supporting systems, like artifact repositories, are available in all Regions. It also implies that your basic infrastructure is managed using infrastructure as code tools like AWS CloudFormation or AWS Cloud Development Kit (AWS CDK); manual changes are a recipe for subtle errors in a multi-Region environment.

There are tools to simplify setting up a basic multi-Region deployment pipeline. Some modern tools like Spinnaker support multi-Region patterns natively, but running pipelines in multiple Regions can be done with almost any modern continuous integration/continuous delivery (CI/CD) system. Consider putting the CI/CD toolchain in a separate AWS account and deploying it in the observer Region.

As the article Automating safe, hands-off deployments describes, you shouldn’t deploy into all Regions at the same time. If a deployment goes wrong and must be rolled back, you need to limit the blast radius to a single Region at a time. You can either use an entire Region as the target for canary and blue/green deployments, or use canary deployments in API Gateway to test new code on a small percentage of the traffic in a single Region.
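The one-Region-at-a-time rule can be sketched as a loop over deployment waves that stops at the first unhealthy wave, leaving the remaining Regions on the old version. This is a minimal sketch: `deploy_in_waves`, `deploy`, and `healthy` are hypothetical callables standing in for your pipeline’s deployment step and health check, and a real pipeline would also wait out a bake time before checking health.

```python
from typing import Callable, Sequence


def deploy_in_waves(
    waves: Sequence[Sequence[str]],
    deploy: Callable[[str], None],
    healthy: Callable[[str], bool],
) -> dict:
    """Deploy one wave of Regions at a time; halt at the first unhealthy wave.

    Returns the Regions deployed so far (the rollback candidates) and any
    Regions that failed their health check.
    """
    deployed: list[str] = []
    for wave in waves:
        for region in wave:
            deploy(region)
            deployed.append(region)
        # A real pipeline would wait for a bake period here before checking.
        unhealthy = [r for r in wave if not healthy(r)]
        if unhealthy:
            return {"status": "halted", "deployed": deployed, "unhealthy": unhealthy}
    return {"status": "complete", "deployed": deployed, "unhealthy": []}
```

Because the loop halts before the next wave, a bad release in us-west-2 never reaches us-east-1, and only one Region needs to be rolled back.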

Figure 2 shows an example deployment sequence that uses API Gateway stages and canary deployments, plus grouping production Regions into staggered waves. The numbered boxes in the figure highlight some key points. First, use the CI/CD pipeline to build the deployment artifacts and run first-level tests like unit tests. Second, deploy into a test environment, and use API Gateway stages to run increasingly realistic tests. Third, deploy into production environments in a staggered fashion using API Gateway canary deployments and Regional waves.
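With an API Gateway canary deployment, a small percentage of a stage’s traffic goes to the new code, and you then either promote the canary or remove it. The decision itself can be sketched as a comparison of canary and baseline error rates. This is a minimal sketch with hypothetical thresholds (`min_requests`, `tolerance`); it only makes the decision and does not call the API Gateway API.

```python
def promote_canary(baseline_errors: int, baseline_requests: int,
                   canary_errors: int, canary_requests: int,
                   min_requests: int = 500, tolerance: float = 0.005) -> str:
    """Return "wait", "promote", or "rollback" for a canary release.

    Thresholds are hypothetical examples: the canary must serve at least
    `min_requests`, and its error rate may exceed the baseline's by at most
    `tolerance` (an absolute difference in error rate).
    """
    if canary_requests < min_requests:
        return "wait"  # not enough canary traffic to judge yet
    baseline_rate = baseline_errors / max(baseline_requests, 1)
    canary_rate = canary_errors / canary_requests
    if canary_rate > baseline_rate + tolerance:
        return "rollback"
    return "promote"
```

Requiring a minimum request count matters because, at a small traffic percentage, a single early error can make the canary’s error rate look alarming.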

Figure 2: Example deployment using API Gateway stages and canary deployments

The article Automating safe, hands-off deployments also has good tips on other deployment best practices, like not doing deployments overnight or on weekends, when fewer engineers are available to help with troubleshooting.

Finally, on the topic of deployment pipelines, consider how to safely roll back. You need effective metrics to determine whether a deployment has failed, and you need to be able to initiate the rollback. Be careful when introducing backward-incompatible changes, particularly at the database level. If you plan to introduce database changes that might cause compatibility problems for users connecting through other Regions, use schema versioning and indicate which new database fields can be safely ignored by older database clients. For more information about safe rollbacks, refer to Ensuring rollback safety during deployments.
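One way to indicate which new fields older clients can safely ignore is to have writers list them on the item itself. The sketch below shows a hypothetical version 1 reader that drops unknown fields marked as ignorable but refuses items with unknown fields that aren’t, rather than silently misinterpreting them. The attribute names (`schema_version`, `ignorable_fields`, `gift_note`, and so on) and the reader function are assumptions for illustration, not a DynamoDB feature.

```python
class IncompatibleItem(Exception):
    """Raised when an item contains fields this reader must not ignore."""


# Fields that a version 1 client understands (hypothetical item layout).
KNOWN_FIELDS_V1 = {"pk", "sk", "status", "schema_version", "ignorable_fields"}


def read_item_v1(item: dict) -> dict:
    """Return the fields a v1 client understands, or raise if unsafe.

    Newer writers list any added fields that older readers may skip in
    `ignorable_fields`; any other unknown field blocks the read.
    """
    unknown = set(item) - KNOWN_FIELDS_V1
    ignorable = set(item.get("ignorable_fields", []))
    blocking = unknown - ignorable
    if blocking:
        raise IncompatibleItem(f"v1 reader cannot interpret: {sorted(blocking)}")
    return {k: v for k, v in item.items() if k in KNOWN_FIELDS_V1}
```

Because global tables replicate items between Regions, a client still running the old version in one Region may read items written by the new version in another; this pattern makes that situation explicit instead of silent.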

Load shedding

An application in a single Region will have some upper limits on performance. These might be related to Regional AWS service quotas. You can use stage or method throttling in API Gateway to shed load and protect the resources behind API Gateway, or you can prioritize traffic from certain clients by using API Gateway API keys and setting higher limits for some clients. For more information on load shedding techniques, refer to Using load shedding to avoid overload.
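API Gateway describes its request throttling in terms of a token bucket, with a steady-state rate and a burst limit. If you also shed load in your own code, the same idea can be sketched as follows, with a separate bucket per client. The class, the `admit` helper, and the per-key limits are hypothetical, loosely mirroring the idea of higher limits for some API keys.

```python
import time


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `burst`."""

    def __init__(self, rate: float, burst: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = float(burst)
        self.tokens = float(burst)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at the bucket size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # shed this request (for example, respond with HTTP 429)


# Hypothetical per-client limits: (requests per second, burst size).
LIMITS = {"premium-key": (100.0, 200), "default": (10.0, 20)}
buckets: dict[str, TokenBucket] = {}


def admit(api_key: str) -> bool:
    """Admit or shed one request for the given client."""
    if api_key not in buckets:
        rate, burst = LIMITS.get(api_key, LIMITS["default"])
        buckets[api_key] = TokenBucket(rate, burst)
    return buckets[api_key].allow()
```

The burst size lets a client absorb short spikes, while the rate caps its sustained share of capacity, so one noisy client can’t starve the others.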

Adding backoff and retry strategies at the database client level also helps, because it avoids a flood of simultaneous requests when the database recovers after being temporarily unavailable. For an application that serves requests with low latency, retrying at the user level is the simplest approach.
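A common backoff strategy is capped exponential backoff with full jitter: each retry waits a random time between zero and an exponentially growing cap, so clients that failed together don’t all retry together. The following is a minimal sketch; `call_with_backoff` and its defaults are hypothetical, and the set of retryable exceptions would depend on your database client.

```python
import random
import time


def call_with_backoff(fn, max_attempts=5, base=0.05, cap=2.0,
                      retryable=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call `fn`, retrying retryable errors with capped exponential backoff.

    Delays are in seconds. The final failure is re-raised to the caller.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except retryable:
            if attempt == max_attempts - 1:
                raise
            # Full jitter: a random delay in [0, min(cap, base * 2**attempt)]
            # spreads recovering clients out instead of retrying in lockstep.
            sleep(random.uniform(0, min(cap, base * 2 ** attempt)))
```

If you use an AWS SDK, check its built-in retry behavior first; for example, boto3 accepts a `botocore.config.Config(retries={...})` setting, and stacking your own retries on top of the SDK’s can multiply load during recovery.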

Operational runbooks

We’d all like the operational experience to be fully automated, and indeed, after you decide to initiate a Region evacuation, your response plan should be fully automated so that you do nothing manually. However, the decision to evacuate a Region is not to be taken lightly, so you need a runbook that guides your response up until the point that you initiate traffic rebalancing.

A basic traffic rebalancing runbook might have the following steps:

Step 0: Event begins – Again, let’s say that you deploy your application in us-east-1 and us-west-2, and you use us-east-2 as an observer Region. A Region-scoped outage starts in us-west-2 and causes intermittent failures and elevated response times for your application in that Region. Your canaries in us-east-2 and us-west-2 enter alarm states.

Step 1: Evaluation – Your first task is to understand what’s happening at a high level. You can start by checking two data sources: your canaries and the AWS Health API. The canaries tell you that the application is seeing problems in us-west-2 and is running normally in us-east-1. The AWS Health API might tell you that certain AWS services in us-west-2 are reporting elevated error rates, and you might hear similar information from your AWS technical account managers if you have an Enterprise Support plan. Based on this information, you conclude that there’s a Region-scoped AWS service event happening in us-west-2. If the Health API didn’t show a problem with AWS services in us-west-2, you might need to dig deeper. For example, you might look for operational events like recent deployments in us-west-2 or unusually high traffic volumes in that Region.
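The evaluation in Step 1 follows a simple order of questions, which can be sketched as a triage function. This is a hypothetical summary of the runbook logic, not an automated decision-maker; the inputs (for example, `health_events`, standing in for event descriptions you might gather from the AWS Health API) and the returned labels are assumptions.

```python
def triage(canary_alarm: bool, health_events: list[str],
           recent_deployment: bool, traffic_spike: bool) -> str:
    """Suggest the next runbook action for one Region (hypothetical labels)."""
    if not canary_alarm:
        # Users aren't affected; component alarms can be investigated later.
        return "monitor"
    if health_events:
        # AWS reports service issues in this Region: a Region-scoped event.
        return "consider-evacuation"
    if recent_deployment:
        # No AWS event, but our own change is a likely cause.
        return "consider-rollback"
    if traffic_spike:
        # Possible overload: check throttling and load shedding.
        return "check-load"
    return "investigate"
```

The ordering mirrors the runbook: user impact first, then external causes, then your own operational events.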

Step 2: Decision to evacuate a Region – Because in this scenario you suspect that there’s an AWS service event impacting your application in us-west-2, you decide to evacuate that Region until the event is resolved or you receive better information. You change the Route 53 ARC routing controls to disable traffic to us-west-2. If you’re concerned about the time it takes for DNS changes to propagate, you can also cut off traffic in us-west-2 at the API Gateway level by setting the allowed incoming traffic rate to 0. That’s a helpful step if you don’t want to take any chance that your application is making changes in us-west-2 that might not be replicated. However, you can’t count on this working all the time, because the API Gateway control plane itself might be affected by the event.

Step 3: Observation – At this point, you sit back and watch. The application should be able to handle all the traffic in us-east-1, as long as you’ve already raised service quotas there to handle the combined load. You’ll see higher levels of traffic in us-east-1 and should monitor the lower-level metrics closely for the duration of the incident.
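Whether us-east-1 can absorb the evacuated traffic comes down to simple arithmetic that is worth checking before an event: the surviving Region’s quota must cover both Regions’ peak load plus some headroom. The sketch below assumes all values use the same unit (for example, requests per second); the function name and the 20% margin are hypothetical.

```python
def can_absorb(target_quota: float, target_peak: float,
               evacuated_peak: float, safety_margin: float = 0.2) -> bool:
    """Check whether one Region's quota covers both Regions' peak load.

    `safety_margin` adds headroom for retries and growth; 0.2 (20%) is an
    arbitrary example value.
    """
    required = (target_peak + evacuated_peak) * (1 + safety_margin)
    return target_quota >= required
```

For example, with peaks of 1,000 requests per second in each Region, a 20% margin requires a quota of about 2,400 in us-east-1, so a quota of 3,000 passes and a quota of 2,000 does not.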

Step 4: Restore service in affected Region – When you receive word that the event in us-west-2 is over, you can start slowly restoring service to that Region. You can remove any API Gateway restrictions you put in place, change the Route 53 ARC routing control to allow traffic to us-west-2 again, and use regular Route 53 weighted routing policies to slowly restore traffic.
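Route 53 weighted routing splits traffic in proportion to each record’s weight (an integer from 0 to 255) divided by the sum of the weights. A gradual restore can therefore be planned as a schedule of weight pairs. This is a minimal sketch; `restore_ramp` and its defaults are hypothetical, and applying each step (for example, with the Route 53 `change_resource_record_sets` API) is left out.

```python
def restore_ramp(steps: int, target_share: float = 0.5,
                 total: int = 100) -> list[tuple[int, int]]:
    """Return (recovered_region_weight, healthy_region_weight) per step.

    Weights always sum to `total`, so each weight is also a percentage when
    total is 100. The recovered Region ramps up to `target_share` of traffic.
    """
    if steps < 1 or not 0 < total <= 255 or not 0 <= target_share <= 1:
        raise ValueError("need steps >= 1, 0 < total <= 255, 0 <= target_share <= 1")
    ramp = []
    for i in range(1, steps + 1):
        recovered = round(total * target_share * i / steps)
        ramp.append((recovered, total - recovered))
    return ramp
```

With five steps, us-west-2 goes from 10% to 50% of traffic in 10-point increments; between steps, you would check the canaries and lower-level metrics before moving on.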

This runbook is purposefully simple. Your runbook will likely be more detailed, but this example captures the most important decisions.

As a prerequisite to using a runbook in a real event, you should commit to reviewing the runbook during a periodic operational readiness review. You need to document the escalation paths, timelines, and resources needed. In addition to the traffic rebalancing runbook, you should have runbooks for routine operational activities such as security audits.

Conclusion

In this post, we covered observability, deployment pipelines, and runbooks in some detail. These are three of the most important operational aspects of building a resilient multi-Region application, and they help you collect enough data to make informed decisions about Region evacuation. Start applying these resilience patterns and best practices to your business-critical applications that use DynamoDB by visiting the DynamoDB global tables Getting Started page and reviewing Parts 1–3 of this series.

Special thanks to Todd Moore, Parker Bradshaw and Kurt Tometich who contributed to this post.

About the author

Randy DeFauw is a Senior Principal Solutions Architect at AWS. He holds an MSEE from the University of Michigan, where he worked on computer vision for autonomous vehicles. He also holds an MBA from Colorado State University. Randy has held a variety of positions in the technology space, ranging from software engineering to product management. He entered the Big Data space in 2013 and continues to explore that area. He is actively working on projects in the ML space and has presented at numerous conferences, including Strata and GlueCon.
