More and more organizations are adopting graph databases for various use cases, such as legal entity lookup tools in the public sector, drug-drug interaction checkers in the healthcare sector, and customer insights and analytics tools in marketing.

If your application's data is highly connected, modeling and querying those relationships in a relational database can be difficult. Amazon Neptune, a fully managed graph database, is purpose-built to store and navigate relationships. You can use Neptune to build popular graph applications such as knowledge graphs, identity graphs, and fraud graphs. We also recently released AWS CloudFormation templates for a sample chatbot application that uses knowledge graphs.

AWS Amplify is a popular way for developers to build web applications, and you may want to combine the power of graph applications with the ease of building web applications with Amplify. AWS AppSync makes it easy to develop GraphQL APIs, which provide an access layer to your data, and to build a flexible backend that uses AWS Lambda to connect to Neptune.

In this post, we show you how to connect an Amplify application to Neptune.

Solution overview

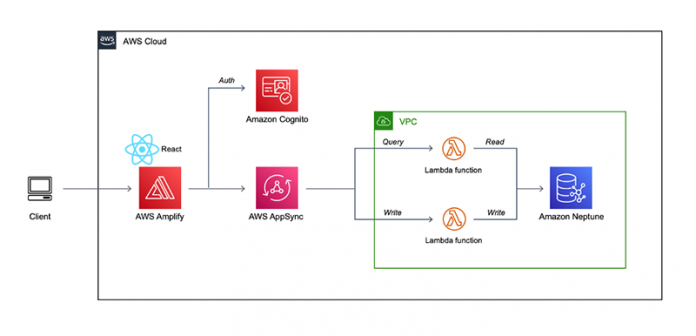

The architecture diagram of this solution is as follows.

This solution uses Amplify to host the application, and all access to Neptune is handled by Lambda functions that are invoked by AWS AppSync. The front end is created with React, and the backend is powered by Lambda functions built with Node.js. This application uses Apache TinkerPop Gremlin to access the graph data in Neptune.

The following video demonstrates the UI that you can create by following this tutorial.

In the navigation pane of the UI, you can navigate to the following services and features:

Dashboard – By entering information such as person name, product name, or affiliated academic society, you can retrieve information related to the input.

Amazon Neptune – This link redirects you to Neptune on the AWS Management Console.

Amazon SageMaker – This link redirects you to Amazon SageMaker on the console.

Add Vertex/Edge – You can add vertex or edge data to Neptune.

Visualizing Graph – You can visualize the graph data in Neptune. Choose a vertex on the graph data to display its properties.

The following tables show the vertices and edges of the graph schema used in this solution.

| Vertex | Description |
|---|---|
| person | Doctor or MR (medical representative) |
| paper | Paper authored by a person |
| product | Medicine used by a person |
| conference | Affiliated academic society |
| institution | Hospital, university, or company |

| Edge | Source | Target |
|---|---|---|
| knows | person | person |
| affiliated_with | person | institution |
| authored_by | paper | person |
| belong_to | person | conference |
| made_by | product | institution |
| usage | person | product |
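Neptune does not enforce a schema, so the tables above are a convention that the application has to follow itself. The following is a minimal sketch, assuming you want to check input (for example, on the Add Vertex/Edge page) before writing it to Neptune. The names `VERTEX_LABELS`, `EDGE_SCHEMA`, `isValidVertex`, and `isValidEdge` are hypothetical and are not part of the downloadable application code.

```javascript
// Vertex labels from the schema table.
const VERTEX_LABELS = new Set(["person", "paper", "product", "conference", "institution"]);

// Edge labels from the schema table, each mapped to its source and target vertex labels.
const EDGE_SCHEMA = {
  knows: { source: "person", target: "person" },
  affiliated_with: { source: "person", target: "institution" },
  authored_by: { source: "paper", target: "person" },
  belong_to: { source: "person", target: "conference" },
  made_by: { source: "product", target: "institution" },
  usage: { source: "person", target: "product" },
};

// True if the label is one of the schema's vertex labels.
function isValidVertex(label) {
  return VERTEX_LABELS.has(label);
}

// True if an edge labeled edgeLabel may point from a sourceLabel vertex to a targetLabel vertex.
function isValidEdge(edgeLabel, sourceLabel, targetLabel) {
  const rule = EDGE_SCHEMA[edgeLabel];
  return rule !== undefined && rule.source === sourceLabel && rule.target === targetLabel;
}

console.log(isValidEdge("usage", "person", "product")); // true
console.log(isValidEdge("usage", "product", "person")); // false: wrong direction
```

Keeping edge direction in the schema object matters because Gremlin edges are directed: `authored_by` points from a paper to a person, not the other way around.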

Prerequisites

For this walkthrough, you should have the following prerequisites.

An AWS account – You can create a new account if you don’t have one yet.

AWS Region – This solution uses the Region us-east-1.

IAM role and Amazon S3 access permissions for bulk loading – This solution loads graph data into Neptune using the bulk loader. You need an AWS Identity and Access Management (IAM) role and an Amazon Simple Storage Service (Amazon S3) VPC endpoint. For more information, see Prerequisites: IAM Role and Amazon S3 Access. You attach this role to Neptune in a later step.

Create an AWS Cloud9 environment

We start by creating an AWS Cloud9 environment.

On the AWS Cloud9 console, create an environment with the following parameters:

Instance type – m5.large

Network (VPC) – A VPC in us-east-1

Subnet – A subnet in us-east-1a

Next, copy and save the following script as resize.sh, which is used to modify the Amazon Elastic Block Store (Amazon EBS) volume size attached to AWS Cloud9.

#!/bin/bash
# Specify the desired volume size in GiB as a command-line argument. If not specified, default to 20 GiB.
SIZE=${1:-20}

# Get the ID of the environment host Amazon EC2 instance.
INSTANCEID=$(curl http://169.254.169.254/latest/meta-data/instance-id)

# Get the ID of the Amazon EBS volume associated with the instance.
VOLUMEID=$(aws ec2 describe-instances \
  --instance-id $INSTANCEID \
  --query "Reservations[0].Instances[0].BlockDeviceMappings[0].Ebs.VolumeId" \
  --output text)

# Resize the EBS volume.
aws ec2 modify-volume --volume-id $VOLUMEID --size $SIZE

# Wait for the resize to finish.
while [ \
  "$(aws ec2 describe-volumes-modifications \
    --volume-id $VOLUMEID \
    --filters Name=modification-state,Values="optimizing","completed" \
    --query "length(VolumesModifications)" \
    --output text)" != "1" ]; do
  sleep 1
done

# Check if we're on an NVMe filesystem
if [ $(readlink -f /dev/xvda) = "/dev/xvda" ]
then
  # Rewrite the partition table so that the partition takes up all the space that it can.
  sudo growpart /dev/xvda 1
  # Expand the size of the file system.
  # Check if we are on AL2
  STR=$(cat /etc/os-release)
  SUB="VERSION_ID=\"2\""
  if [[ "$STR" == *"$SUB"* ]]
  then
    sudo xfs_growfs -d /
  else
    sudo resize2fs /dev/xvda1
  fi
else
  # Rewrite the partition table so that the partition takes up all the space that it can.
  sudo growpart /dev/nvme0n1 1
  # Expand the size of the file system.
  # Check if we're on AL2
  STR=$(cat /etc/os-release)
  SUB="VERSION_ID=\"2\""
  if [[ "$STR" == *"$SUB"* ]]
  then
    sudo xfs_growfs -d /
  else
    sudo resize2fs /dev/nvme0n1p1
  fi
fi

For more information about the preceding script, see Moving an environment and resizing or encrypting Amazon EBS volumes.

Run the following commands to change the volume size to 20 GiB.

$ touch resize.sh
$ bash resize.sh 20
$ df -h
Filesystem Size Used Avail Use% Mounted on
devtmpfs 3.8G 0 3.8G 0% /dev
tmpfs 3.8G 0 3.8G 0% /dev/shm
tmpfs 3.8G 440K 3.8G 1% /run
tmpfs 3.8G 0 3.8G 0% /sys/fs/cgroup
/dev/nvme0n1p1 20G 8.5G 12G 43% /
tmpfs 777M 0 777M 0% /run/user/1000

Create Neptune resources

You can create Neptune resources via the AWS Command Line Interface (AWS CLI).

Run the following commands to create a new Neptune DB cluster and instances.

$ aws neptune create-db-cluster --db-cluster-identifier ws-database-1 --engine neptune --engine-version 1.0.4.1
$ aws neptune create-db-instance --db-instance-identifier ws-neptune --db-instance-class db.r5.xlarge --engine neptune --db-cluster-identifier ws-database-1
$ aws neptune create-db-instance --db-instance-identifier ws-read-neptune --db-instance-class db.r5.xlarge --engine neptune --db-cluster-identifier ws-database-1

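Associate the IAM role that you created in the prerequisites section with the Neptune cluster ws-database-1, either on the Neptune console or with the aws neptune add-role-to-db-cluster command.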

Add the following rule to the inbound rules in the security group of all of the database instances in the Neptune cluster, so that you can bulk load the graph data from AWS Cloud9 into Neptune in the next step.

Type – Custom TCP

Port range – 8182

Source – Custom, with the security group of the AWS Cloud9 instance
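If you prefer to script this rule instead of using the console, the following minimal sketch builds the input for the EC2 AuthorizeSecurityGroupIngress API: TCP port 8182 into the Neptune security group from members of the AWS Cloud9 security group. The function name `neptuneIngressInput` and the placeholder security group IDs are hypothetical; the SDK call is shown only as a comment and assumes the AWS SDK for JavaScript v3 package `@aws-sdk/client-ec2`.

```javascript
// Builds the AuthorizeSecurityGroupIngress input: allow TCP traffic on the Neptune
// port into the Neptune security group from instances in the Cloud9 security group.
function neptuneIngressInput(neptuneSgId, cloud9SgId, port = 8182) {
  return {
    GroupId: neptuneSgId,
    IpPermissions: [
      {
        IpProtocol: "tcp",
        FromPort: port,
        ToPort: port,
        UserIdGroupPairs: [{ GroupId: cloud9SgId }], // source = another security group
      },
    ],
  };
}

// Example with the AWS SDK for JavaScript v3 (not run here; IDs are placeholders):
// const { EC2Client, AuthorizeSecurityGroupIngressCommand } = require("@aws-sdk/client-ec2");
// await new EC2Client({ region: "us-east-1" }).send(
//   new AuthorizeSecurityGroupIngressCommand(neptuneIngressInput("sg-neptune-placeholder", "sg-cloud9-placeholder")));
console.log(JSON.stringify(neptuneIngressInput("sg-neptune-placeholder", "sg-cloud9-placeholder")));
```

Referencing the Cloud9 security group, rather than an IP range, keeps the rule valid even if the Cloud9 instance's private IP address changes.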

Create an S3 bucket and load data into Neptune

In this step, you create an S3 bucket and upload graph data into the bucket.

Create an S3 bucket in us-east-1 and upload the following files. Because the solution uses Gremlin, the files are in the Gremlin load data format. The following code is the sample vertex data (vertex.csv).

~id, name:String, speciality:String, publish_date:String, ~label
Doctor1,"John","psychosomatic medicine", ,person
Doctor2,"Bob","respiratory medicine", ,person
Doctor3,"Alice","gastroenterology", ,person
Doctor4,"Terry","neurology", ,person
Doctor5,"Ken","cardiology", ,person
Doctor6,"Peter","neurology", ,person
MR1,"Mashiko", , ,person
MR2,"Koizumi", , ,person
MR3,"Ishio", , ,person
Paper1,"Example1", ,"2000-05-13",paper
Paper2,"Example2", ,"2005-01-20",paper
Paper3,"Example3", ,"2010-06-20",paper
Paper4,"Example4", ,"2018-11-13",paper
Paper5,"Example5", ,"2020-08-13",paper
Prod1,"A medicine", , ,product
Prod2,"B medicine", , ,product
Prod3,"C medicine", , ,product
Prod4,"D medicine", , ,product
Prod5,"E medicine", , ,product
Conf1,"X conference", , ,conference
Conf2,"Y conference", , ,conference
Conf3,"Z conference", , ,conference
Inst1,"A hospital", , ,institution
Inst2,"B hospital", , ,institution
Inst3,"C Univ", , ,institution
Inst4,"A pharma", , ,institution
Inst5,"B pharma", , ,institution
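In the Gremlin load data format, header columns that start with `~` (such as `~id` and `~label`) are system columns, and the other columns are property names, optionally followed by `:Type`. The following minimal sketch splits a header line into those two groups; `parseHeader` is a hypothetical helper, and it deliberately does not handle cardinality or array suffixes in column names.

```javascript
// Splits a Gremlin load data format header into system columns (~id, ~label,
// ~from, ~to) and property columns with their optional ":Type" suffix.
function parseHeader(line) {
  const system = [];
  const properties = [];
  for (const raw of line.split(",")) {
    const col = raw.trim(); // the sample header has a space after each comma
    if (col.startsWith("~")) {
      system.push(col);
    } else {
      const idx = col.lastIndexOf(":");
      properties.push(
        idx === -1
          ? { name: col, type: null } // no explicit type in the header
          : { name: col.slice(0, idx), type: col.slice(idx + 1) }
      );
    }
  }
  return { system, properties };
}

const header = "~id, name:String, speciality:String, publish_date:String, ~label";
console.log(parseHeader(header));
```

Note that publish_date is declared as a String in this sample, so Neptune stores the dates as text rather than as a date type.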

The following code is the sample edge data (edge.csv).

~id, ~from, ~to, ~label, weight:Double
e1,Doctor1,Doctor2,knows,
e2,Doctor2,Doctor4,knows,
e3,MR1,Doctor2,knows,
e4,MR1,Doctor1,knows,
e5,MR2,MR1,knows,
e6,MR1,MR2,knows,
e7,MR3,MR1,knows,
e8,Doctor3,Doctor1,knows,
e9,Doctor4,Doctor5,knows,
e10,Doctor4,Doctor6,knows,
e11,Doctor3,Doctor4,knows,
e12,Doctor5,Doctor6,knows,
e13,Doctor5,Doctor3,knows,
e14,Doctor6,Doctor5,knows,
e15,Doctor6,Doctor2,knows,
e16,Doctor2,Doctor5,knows,
e17,Doctor5,Doctor2,knows,
e18,Doctor6,Doctor3,knows,
e19,Doctor3,Doctor6,knows,
e20,MR2,Doctor3,knows,
e21,MR2,Doctor6,knows,
e22,MR1,Inst4,affiliated_with,
e23,MR2,Inst4,affiliated_with,
e24,MR3,Inst5,affiliated_with,
e25,Doctor1,Inst1,affiliated_with,
e26,Doctor2,Inst1,affiliated_with,
e27,Doctor3,Inst2,affiliated_with,
e28,Doctor4,Inst2,affiliated_with,
e29,Doctor5,Inst3,affiliated_with,
e30,Doctor6,Inst3,affiliated_with,
e31,Paper1,Doctor1,authored_by,
e32,Paper1,Doctor3,authored_by,
e33,Paper2,Doctor2,authored_by,
e34,Paper2,Doctor5,authored_by,
e35,Paper3,Doctor3,authored_by,
e36,Paper3,Doctor6,authored_by,
e37,Paper4,Doctor1,authored_by,
e38,Paper5,Doctor5,authored_by,
e39,Paper5,Doctor6,authored_by,
e40,Doctor1,Conf1,belong_to,
e41,Doctor2,Conf1,belong_to,
e42,Doctor3,Conf2,belong_to,
e43,Doctor3,Conf3,belong_to,
e44,Doctor4,Conf2,belong_to,
e45,Doctor5,Conf2,belong_to,
e46,Doctor5,Conf3,belong_to,
e47,Doctor6,Conf1,belong_to,
e48,Prod1,Inst4,made_by,
e49,Prod2,Inst5,made_by,
e50,Prod3,Inst4,made_by,
e51,Prod4,Inst5,made_by,
e52,Prod5,Inst4,made_by,
e53,Doctor1,Prod1,usage,1.0
e54,Doctor1,Prod2,usage,0.5
e55,Doctor1,Prod3,usage,0.2
e56,Doctor2,Prod1,usage,0.5
e57,Doctor2,Prod3,usage,0.5
e58,Doctor3,Prod4,usage,0.9
e59,Doctor3,Prod5,usage,0.2
e60,Doctor4,Prod4,usage,0.6
e61,Doctor5,Prod5,usage,0.9
e62,Doctor5,Prod4,usage,0.2
e63,Doctor5,Prod3,usage,0.4
e64,Doctor5,Prod2,usage,0.3
e65,Doctor6,Prod1,usage,1.0
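Every `~from` and `~to` value in edge.csv must match a `~id` in vertex.csv. Before uploading edited files, you can catch typos with a quick local check. The following is a minimal sketch under stated assumptions: `splitRow` and `findDanglingEdges` are hypothetical helpers, the columns are read by position (the column order of the sample files), and no field may contain an embedded comma. Row `e99` below is deliberately broken for illustration and is not part of the sample data.

```javascript
// Minimal CSV row splitter for the sample files: assumes no field contains an
// embedded comma, trims spaces, and strips surrounding double quotes.
function splitRow(line) {
  return line.split(",").map((f) => f.trim().replace(/^"(.*)"$/, "$1"));
}

// Returns the IDs of edges whose ~from or ~to does not match any vertex ~id.
// Assumes ~id is the first vertex column and ~id, ~from, ~to are the first edge columns.
function findDanglingEdges(vertexCsv, edgeCsv) {
  const [, ...vertexRows] = vertexCsv.trim().split(/\r?\n/); // skip the header
  const ids = new Set(vertexRows.map((row) => splitRow(row)[0]));
  const [, ...edgeRows] = edgeCsv.trim().split(/\r?\n/);
  return edgeRows
    .map(splitRow)
    .filter(([, from, to]) => !ids.has(from) || !ids.has(to))
    .map(([id]) => id);
}

const vertexCsv = `~id, name:String, speciality:String, publish_date:String, ~label
Doctor1,"John","psychosomatic medicine", ,person
Prod1,"A medicine", , ,product`;

const edgeCsv = `~id, ~from, ~to, ~label, weight:Double
e53,Doctor1,Prod1,usage,1.0
e99,Doctor1,Prod9,usage,0.5`;

console.log(findDanglingEdges(vertexCsv, edgeCsv)); // [ 'e99' ]
```

For real files, read them with `fs.readFileSync(path, "utf8")` and pass the contents to `findDanglingEdges`.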

In the AWS Cloud9 environment, enter the following command to bulk load the vertex data into Neptune.

$ curl -X POST \
    -H 'Content-Type: application/json' \
    https://Your-Amazon-Neptune-Writer-Endpoint-Name:8182/loader -d '
    {
      "source" : "s3://Your-Bucket-name/vertex.csv",
      "format" : "csv",
      "iamRoleArn" : "IAM-Role-Arn-you-made",
      "region" : "us-east-1",
      "failOnError" : "FALSE",
      "parallelism" : "MEDIUM",
      "updateSingleCardinalityProperties" : "FALSE",
      "queueRequest" : "TRUE"
    }'

#Response
{
    "status" : "200 OK",
    "payload" : {
        "loadId" : "xxxxxxxxxxxxxxxxx"
    }
}
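The same request can be sent from Node.js. The following minimal sketch mirrors the options in the curl command above. It assumes Node.js 18 or later (for the global `fetch`), network access to the writer endpoint from inside the VPC, and a cluster without IAM database authentication (requests are not SigV4-signed, as with the curl command). `buildLoadRequest` and `startLoad` are hypothetical names.

```javascript
// Builds the JSON body for the Neptune bulk loader (POST /loader) with the
// same options as the curl command.
function buildLoadRequest({ source, iamRoleArn, region = "us-east-1" }) {
  return {
    source,
    format: "csv",
    iamRoleArn,
    region,
    failOnError: "FALSE",
    parallelism: "MEDIUM",
    updateSingleCardinalityProperties: "FALSE",
    queueRequest: "TRUE",
  };
}

// Sends the load request to the writer endpoint and resolves to the loadId.
async function startLoad(writerEndpoint, body) {
  const res = await fetch(`https://${writerEndpoint}:8182/loader`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(body),
  });
  if (!res.ok) {
    throw new Error(`Loader request failed: ${res.status} ${await res.text()}`);
  }
  const json = await res.json();
  return json.payload.loadId;
}

// Example (not run here; the endpoint, bucket, and role ARN are placeholders):
// const loadId = await startLoad("Your-Amazon-Neptune-Writer-Endpoint-Name",
//   buildLoadRequest({ source: "s3://Your-Bucket-name/vertex.csv", iamRoleArn: "IAM-Role-Arn-you-made" }));
```

Because `queueRequest` is `"TRUE"`, you can submit the vertex load and the edge load back to back. The loader queues the second request instead of rejecting it.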

Enter the following command to verify that the bulk load succeeded (status LOAD_COMPLETED). Replace xxxxxxxxxxxxxxxxx with the loadId returned by the previous command.

$ curl -G 'https://Your-Amazon-Neptune-Reader-Endpoint-Name:8182/loader/xxxxxxxxxxxxxxxxx'

#Response
{
    "status" : "200 OK",
    "payload" : {
        "feedCount" : [
            {
                "LOAD_COMPLETED" : 1
            }
        ],
        "overallStatus" : {
            "fullUri" : "s3://Your-Bucket-name/vertex.csv",
            "runNumber" : 1,
            "retryNumber" : 0,
            "status" : "LOAD_COMPLETED",
            "totalTimeSpent" : 3,
            "startTime" : 1612877196,
            "totalRecords" : 78,
            "totalDuplicates" : 0,
            "parsingErrors" : 0,
            "datatypeMismatchErrors" : 0,
            "insertErrors" : 0
        }
    }
}
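Because `failOnError` is `"FALSE"`, it is worth checking the error counters as well as the status. The following minimal sketch summarizes a status response like the one above and polls until the job leaves a pending state. The set of pending states is an assumption taken from the loader status names (`LOAD_NOT_STARTED`, `LOAD_IN_QUEUE`, `LOAD_IN_PROGRESS`); `summarizeLoadStatus` and `waitForLoad` are hypothetical names, and polling assumes Node.js 18 or later for the global `fetch`.

```javascript
// Loader states treated as "not finished yet" (assumed list).
const PENDING = new Set(["LOAD_NOT_STARTED", "LOAD_IN_QUEUE", "LOAD_IN_PROGRESS"]);

// Reduces a GET /loader/{loadId} response to the fields worth checking.
function summarizeLoadStatus(response) {
  const o = response.payload.overallStatus;
  const errors = (o.parsingErrors || 0) + (o.datatypeMismatchErrors || 0) + (o.insertErrors || 0);
  return {
    status: o.status,
    finished: !PENDING.has(o.status),
    succeeded: o.status === "LOAD_COMPLETED" && errors === 0,
    totalRecords: o.totalRecords,
    errors,
  };
}

// Polls the loader status endpoint until the job is no longer pending.
async function waitForLoad(endpoint, loadId, intervalMs = 2000) {
  for (;;) {
    const res = await fetch(`https://${endpoint}:8182/loader/${loadId}`);
    if (!res.ok) throw new Error(`Status request failed: ${res.status}`);
    const summary = summarizeLoadStatus(await res.json());
    if (summary.finished) return summary;
    await new Promise((resolve) => setTimeout(resolve, intervalMs));
  }
}

// The sample response shown above.
const sample = {
  status: "200 OK",
  payload: {
    feedCount: [{ LOAD_COMPLETED: 1 }],
    overallStatus: {
      fullUri: "s3://Your-Bucket-name/vertex.csv", runNumber: 1, retryNumber: 0,
      status: "LOAD_COMPLETED", totalTimeSpent: 3, startTime: 1612877196,
      totalRecords: 78, totalDuplicates: 0, parsingErrors: 0,
      datatypeMismatchErrors: 0, insertErrors: 0,
    },
  },
};
console.log(summarizeLoadStatus(sample));
```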

To bulk load the edge data, repeat this procedure with source set to the S3 URI of the edge.csv file.

This completes loading the graph data into Neptune.

Build a graph application using Amplify

In this step, you use Amplify to build an application that interacts with Neptune. After you configure Amplify with AWS Cloud9, you add components such as a user authentication mechanism and backend Lambda functions that communicate with Neptune and a GraphQL API.

Configure Amplify with AWS Cloud9

Now that you’ve created an AWS Cloud9 environment, let’s configure Amplify with AWS Cloud9.

Open the environment in your web browser again and run the following commands.

When running $ amplify configure, you are asked to create a new IAM user to allow Amplify to deploy your application on your behalf. In the final step of the configuration, save the current configuration using the profile name default. For more information about the configuration, see Configure the Amplify CLI.

$ npm install -g @aws-amplify/cli@latest # install Amplify CLI
$ amplify configure # initial configuration of Amplify
Initializing new Amplify CLI version…
Done initializing new version.
Scanning for plugins…
Plugin scan successful
Follow these steps to set up access to your AWS account:
Sign in to your AWS administrator account:
https://console.aws.amazon.com/
Press Enter to continue
Specify the AWS Region
? region: us-east-1
Specify the username of the new IAM user:
? user name: amplify-user
Complete the user creation using the AWS console
https://console.aws.amazon.com/iam/home?region=us-east-1#/users$new?step=final&accessKey&userNames=amplify-user&permissionType=policies&policies=arn:aws:iam::aws:policy%2FAdministratorAccess
Press Enter to continue
Enter the access key of the newly created user:
? accessKeyId: ********************
? secretAccessKey: ****************************************
This would update/create the AWS Profile in your local machine
? Profile Name: default
Successfully set up the new user.

Run the following commands to create your React application template.

The create-react-app command is used to quickly create a template of your React application. After creating the template, you should have a new directory named react-amplify-neptune-workshop. Move to the directory and set up Amplify.

$ npx create-react-app react-amplify-neptune-workshop
$ cd react-amplify-neptune-workshop
$ npm install aws-amplify @aws-amplify/ui-react @material-ui/core @material-ui/icons clsx react-router-dom react-vis-force

In the same directory (react-amplify-neptune-workshop), run $ amplify init to initialize your new Amplify application using the default profile you’ve created earlier.

$ amplify init
Note: It is recommended to run this command from the root of your app directory
? Enter a name for the project neptune
? Enter a name for the environment dev
? Choose your default editor: None
? Choose the type of app that you're building javascript
Please tell us about your project
? What javascript framework are you using react
? Source Directory Path: src
? Distribution Directory Path: build
? Build Command: npm run-script build
? Start Command: npm run-script start
Using default provider awscloudformation
For more information on AWS Profiles, see:
https://docs.aws.amazon.com/cli/latest/userguide/cli-configure-profiles.html
? Do you want to use an AWS profile? Yes
? Please choose the profile you want to use default

Amplify automatically creates your application’s backend using AWS CloudFormation. The process takes a few minutes to complete.

In your AWS Cloud9 environment, open another terminal and run the following command.

$ cd react-amplify-neptune-workshop
$ npm start

On the menu bar, under Preview, choose Preview Running Application.

You should see a preview of your React application on the right half of your AWS Cloud9 environment. Going forward, you can preview the application right after modifying and saving the code.

Authentication

Next, run the following command to add a user authentication mechanism to your application. After running the command, Amplify uses AWS CloudFormation to create an Amazon Cognito user pool as the authentication backend. Answer the questions as in the following example to enable users to log in with their usernames and passwords.

$ amplify add auth
Using service: Cognito, provided by: awscloudformation
The current configured provider is Amazon Cognito.
Do you want to use the default authentication and security configuration? Default configuration
Warning: you will not be able to edit these selections.
How do you want users to be able to sign in? Username
Do you want to configure advanced settings? No, I am done.
Successfully added auth resource neptuneff8d594a locally

Now that you have an authentication backend, let's create the authentication front end. Run the following command to download the front-end application code and replace the existing App.js file.

$ wget https://aws-database-blog.s3.amazonaws.com/artifacts/DBBLOG-1462/App.js -O ~/environment/react-amplify-neptune-workshop/src/App.js

Note that App.js compiles successfully only after you add the components directory in a later step. After that, the application preview on the right half of your AWS Cloud9 environment shows a login window.

Functions

In this step, you create Lambda functions using Amplify so that they can communicate with the Neptune database. Before creating Lambda functions, run the following commands to create a new Lambda layer and import the Gremlin library. For more information, see Create a Lambda Layer.

Create your Lambda layer with the following code.

$ amplify add function
? Select which capability you want to add: Lambda layer (shared code & resource used across functions)
? Provide a name for your Lambda layer: gremlin
? Select up to 2 compatible runtimes: NodeJS
? The current AWS account will always have access to this layer.
Optionally, configure who else can access this layer. (Hit <Enter> to skip) Specific AWS accounts
? Provide a list of comma-separated AWS account IDs: ************ # Your own AWS Account
✅ Lambda layer folders & files updated:
amplify/backend/function/gremlin

Next steps:
Include any files you want to share across runtimes in this folder:
amplify/backend/function/gremlin/opt

# Install the gremlin package into the Lambda layer
$ cd amplify/backend/function/gremlin/opt/nodejs/ # create the nodejs/ directory first if it doesn't exist
$ npm init # only if there is no package.json file here yet
$ npm install gremlin
$ cd ~/environment/react-amplify-neptune-workshop/

Create a Lambda function named getGraphData.

$ amplify add function
Select which capability you want to add: Lambda function (serverless function)
? Provide an AWS Lambda function name: getGraphData
? Choose the runtime that you want to use: NodeJS
? Choose the function template that you want to use: Hello World
Available advanced settings:
– Resource access permissions
– Scheduled recurring invocation
– Lambda layers configuration
? Do you want to configure advanced settings? Yes
Do you want to access other resources in this project from your Lambda function? Yes
? Select the category
You can access the following resource attributes as environment variables from your Lambda function
ENV
REGION
? Provide existing layers or select layers in this project to access from this function (pick up to 5): gremlin
? Select a version for gremlin: 1
? Do you want to edit the local lambda function now? Yes
Successfully added resource getGraphData locally.

After you add the Lambda function, run the following commands to modify the function's CloudFormation template.

Amplify deploys the Lambda function's settings and environment from this template, so editing the template saves you from changing these settings on the console. Replace the placeholders with the reader endpoint, security group ID, and subnet IDs of your Neptune cluster.

$ sed -i 's/"REGION":{"Ref":"AWS::Region"}/"NEPTUNE_ENDPOINT": "your-neptune-reader-endpoint","NEPTUNE_PORT": "8182"/g' amplify/backend/function/getGraphData/getGraphData-cloudformation-template.json

$ sed -i '/"Environment"/i "MemorySize": 1024,"VpcConfig": {"SecurityGroupIds": ["your-neptune-sg-id"],"SubnetIds": ["your-neptune-subnet-id","your-neptune-subnet-id","your-neptune-subnet-id","your-neptune-subnet-id","your-neptune-subnet-id","your-neptune-subnet-id"]},' amplify/backend/function/getGraphData/getGraphData-cloudformation-template.json

$ sed -i '/"AssumeRolePolicyDocument"/i "ManagedPolicyArns": ["arn:aws:iam::aws:policy/service-role/AWSLambdaVPCAccessExecutionRole","arn:aws:iam::aws:policy/NeptuneReadOnlyAccess"],' amplify/backend/function/getGraphData/getGraphData-cloudformation-template.json
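The sed commands depend on the exact text layout of the generated template. The following is a minimal sketch of the same three changes applied to the parsed JSON instead. It rests on an assumption you should check against your own file: that the Amplify-generated template names the function resource `LambdaFunction` and its role `LambdaExecutionRole`. `patchFunctionTemplate` and `patchTemplateFile` are hypothetical helpers.

```javascript
const fs = require("fs");

// ASSUMPTION: the template defines Resources.LambdaFunction (with Environment.Variables)
// and Resources.LambdaExecutionRole. Applies the same edits as the three sed commands.
function patchFunctionTemplate(template, { endpoint, securityGroupIds, subnetIds }) {
  const fn = template.Resources.LambdaFunction.Properties;
  const vars = fn.Environment.Variables;
  delete vars.REGION; // the first sed command replaces REGION with the Neptune settings
  vars.NEPTUNE_ENDPOINT = endpoint;
  vars.NEPTUNE_PORT = "8182";
  fn.MemorySize = 1024;
  fn.VpcConfig = { SecurityGroupIds: securityGroupIds, SubnetIds: subnetIds };
  // Overwrites any ManagedPolicyArns already present on the role.
  template.Resources.LambdaExecutionRole.Properties.ManagedPolicyArns = [
    "arn:aws:iam::aws:policy/service-role/AWSLambdaVPCAccessExecutionRole",
    "arn:aws:iam::aws:policy/NeptuneReadOnlyAccess",
  ];
  return template;
}

// Reads, patches, and rewrites a template file in place.
function patchTemplateFile(path, options) {
  const template = JSON.parse(fs.readFileSync(path, "utf8"));
  patchFunctionTemplate(template, options);
  fs.writeFileSync(path, JSON.stringify(template, null, 2));
}

// Example (not run here; values are placeholders):
// patchTemplateFile("amplify/backend/function/getGraphData/getGraphData-cloudformation-template.json",
//   { endpoint: "your-neptune-reader-endpoint", securityGroupIds: ["your-neptune-sg-id"], subnetIds: ["your-neptune-subnet-id"] });
```

Working on parsed JSON also fails loudly (with a TypeError) if a resource is missing, whereas a sed pattern that doesn't match silently leaves the file unchanged.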

Run the following command to replace the index.js file of the Lambda function.

$ wget https://aws-database-blog.s3.amazonaws.com/artifacts/DBBLOG-1462/lambda/getGraphData/index.js -O amplify/backend/function/getGraphData/src/index.js

The following is the Lambda code of getGraphData. This function is invoked by AWS AppSync and uses Gremlin to access Neptune and get the graph information such as vertices and edges.

const gremlin = require('gremlin');
const DriverRemoteConnection = gremlin.driver.DriverRemoteConnection;
const Graph = gremlin.structure.Graph;

exports.handler = async (event) => {
  // Open a WebSocket connection to the Neptune endpoint set in the function's environment variables.
  const dc = new DriverRemoteConnection(`wss://${process.env.NEPTUNE_ENDPOINT}:${process.env.NEPTUNE_PORT}/gremlin`, {});
  const graph = new Graph();
  const g = graph.traversal().withRemote(dc);
  try {
    // Fetch all vertices and keep only the fields that the GraphInfo type needs.
    const result = await g.V().toList();
    const vertex = result.map(r => ({ id: r.id, label: r.label }));
    // Fetch all edges; outV and inV are the edge's source and target vertices.
    const result2 = await g.E().toList();
    const edge = result2.map(r => ({ source: r.outV.id, target: r.inV.id, value: r.label }));
    return { nodes: vertex, links: edge };
  } catch (error) {
    console.error(error);
    return { error: error.message };
  } finally {
    // Close the connection so that it isn't left open after the invocation.
    await dc.close();
  }
};
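The mapping from Gremlin results to the `GraphInfo` shape (`nodes` with `id` and `label`, `links` with `source`, `target`, and `value`) does not depend on Neptune. Factoring it out lets you unit test it locally. The following is a minimal sketch; `toGraphInfo` is a hypothetical helper, and the test data uses plain objects shaped like the gremlin-javascript `Vertex` and `Edge` results that the handler reads (`id`, `label`, `outV`, `inV`), not real driver objects.

```javascript
// Converts Gremlin vertices and edges into the GraphInfo shape that the
// getGraphInfo query returns: { nodes: [{ id, label }], links: [{ source, target, value }] }.
function toGraphInfo(vertices, edges) {
  return {
    nodes: vertices.map((v) => ({ id: v.id, label: v.label })),
    // An edge points from its out-vertex (~from) to its in-vertex (~to).
    links: edges.map((e) => ({ source: e.outV.id, target: e.inV.id, value: e.label })),
  };
}

// Stand-ins shaped like gremlin-javascript Vertex and Edge objects.
const vertices = [
  { id: "Doctor1", label: "person" },
  { id: "Prod1", label: "product" },
];
const edges = [{ id: "e53", label: "usage", outV: { id: "Doctor1" }, inV: { id: "Prod1" } }];

console.log(JSON.stringify(toGraphInfo(vertices, edges)));
```

Because the handler reads every vertex and edge, this approach suits the small sample graph. With a larger graph, you would likely want to limit or filter the traversal before returning it to the browser.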

Now that you're done setting up the getGraphData Lambda function, repeat the same procedure (amplify add function, the sed commands, and the wget command that replaces index.js) for each of the following Lambda functions, replacing getGraphData with the respective function name in each command:

getInfoNeptune

queryNeptune

registerNeptune (in the first sed command, also replace your-neptune-reader-endpoint with your Neptune writer endpoint, because this function writes to the database)

Next, run the following command to download components.zip, which contains the React components that App.js uses, and extract it into the src directory.

$ wget https://aws-database-blog.s3.amazonaws.com/artifacts/DBBLOG-1462/components.zip && unzip components.zip -d src

GraphQL API

So far, you’ve created a user pool for authentication and backend Lambda functions for querying the Neptune database. In this step, you add a GraphQL API to your Amplify application.

Run the following command to create a GraphQL API template.

$ amplify add api
? Please select from one of the below mentioned services: GraphQL
? Provide API name: neptune
? Choose the default authorization type for the API Amazon Cognito User Pool
Use a Cognito user pool configured as a part of this project.
? Do you want to configure advanced settings for the GraphQL API No, I am done.
? Do you have an annotated GraphQL schema? No
? Choose a schema template: Single object with fields (e.g., "Todo" with ID, name, description)
The following types do not have '@auth' enabled. Consider using @auth with @model
– Todo
Learn more about @auth here: https://docs.amplify.aws/cli/graphql-transformer/auth
…
GraphQL schema compiled successfully.

After you create the template, replace the contents of amplify/backend/api/neptune/schema.graphql with the following schema and compile it.

The Query type returns search results, information associated with a search term (such as institutions, products, and conferences), and the graph data for visualization. The Mutation type registers a vertex or an edge in Neptune.

type Result {
  name: String!
}

type Profile {
  search_name: String
  usage: String
  belong_to: String
  authored_by: String
  affiliated_with: String
  people: String
  made_by: String
}

type Register {
  result: String
}

type GraphInfo {
  nodes: [Nodes]
  links: [Links]
}

type Nodes {
  id: String
  label: String
}

type Links {
  source: String
  target: String
  value: String
}

type Query {
  getResult(name: String!, value: String!): [Result]
    @function(name: "queryNeptune-${env}")
    @auth(rules: [{ allow: private, provider: userPools }])
  getProfile(name: String!, value: String!): [Profile]
    @function(name: "getInfoNeptune-${env}")
    @auth(rules: [{ allow: private, provider: userPools }])
  getGraphInfo(value: String!): GraphInfo
    @function(name: "getGraphData-${env}")
    @auth(rules: [{ allow: private, provider: userPools }])
}

type Mutation {
  registerInfo(
    value: String!
    name: String
    edge: String
    vertex: String
    property: String
    source: String
    sourceLabel: String
    destination: String
    destLabel: String
  ): [Register]
    @function(name: "registerNeptune-${env}")
    @auth(rules: [{ allow: private, provider: userPools }])
}

$ amplify api gql-compile
…
GraphQL schema compiled successfully.
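With the @function directive, AppSync invokes the named Lambda function with an event that includes `typeName`, `fieldName`, and `arguments` (along with `identity`, `source`, `request`, and `prev`). In this post, each field has its own function, so a handler only needs `event.arguments`. The following minimal sketch shows how those fields could be routed if you ever served several fields from one function. The `resolvers` table and its placeholder return values are hypothetical and do not query Neptune.

```javascript
// Hypothetical placeholder resolvers keyed by "TypeName.fieldName". In this post,
// each field is served by its own Lambda function instead of a shared router.
const resolvers = {
  // Would query Neptune using args.value; returns an empty GraphInfo here.
  "Query.getGraphInfo": (args) => ({ nodes: [], links: [] }),
  // Would write to Neptune; echoes the required value argument here.
  "Mutation.registerInfo": (args) => [{ result: `received ${args.value}` }],
};

// Routes an AppSync @function event to the matching resolver.
function resolve(event) {
  const key = `${event.typeName}.${event.fieldName}`;
  const resolver = resolvers[key];
  if (!resolver) {
    throw new Error(`No resolver for ${key}`);
  }
  return resolver(event.arguments);
}

// In a Lambda source file, this would be exported as exports.handler.
const handler = async (event) => resolve(event);
```

The return value must match the field's GraphQL type: `getGraphInfo` returns a single `GraphInfo` object, while `registerInfo` returns a list of `Register` objects.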

Upload the change using amplify push.

$ amplify push
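After the push, the API is an AppSync endpoint that accepts GraphQL requests over HTTPS POST. In the React application, the Amplify library's API category normally builds these requests for you. The following minimal sketch shows what such a request contains for the getGraphInfo query. It assumes Cognito user pool authorization, where AppSync reads the signed-in user's JWT from the Authorization header; the endpoint would come from `aws_appsync_graphqlEndpoint` in aws-exports.js. `buildGraphqlRequest` and the variable value are hypothetical.

```javascript
// The getGraphInfo query from the schema, selecting every field of GraphInfo.
const GET_GRAPH_INFO = `query GetGraphInfo($value: String!) {
  getGraphInfo(value: $value) {
    nodes { id label }
    links { source target value }
  }
}`;

// Builds a fetch() request for an AppSync endpoint that uses Cognito user pool
// authorization: the user's JWT goes in the Authorization header as-is.
function buildGraphqlRequest(endpoint, jwt, query, variables) {
  return {
    url: endpoint,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json", Authorization: jwt },
      body: JSON.stringify({ query, variables }),
    },
  };
}

// Example (not run here; idToken and the value "example" are placeholders):
// const { url, init } = buildGraphqlRequest(awsconfig.aws_appsync_graphqlEndpoint, idToken, GET_GRAPH_INFO, { value: "example" });
// const { data } = await (await fetch(url, init)).json();
```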

Deploy the application

Run the following command to host the application. Choose Hosting with Amplify Console and then Manual deployment.

$ amplify add hosting
? Select the plugin module to execute Hosting with Amplify Console (Managed hosting with custom domains, Continuous deployment)
? Choose a type Manual deployment
You can now publish your app using the following command:
Command: amplify publish

Finally, publish the application:

$ amplify publish

After the publish completes, open the URL of the hosted application that amplify publish outputs, and sign in to try the application.

Clean up

To avoid incurring future charges, clean up the resources you made as part of this post.

Run the following command to delete the resources created with Amplify.

$ amplify delete
? Are you sure you want to continue? This CANNOT be undone. (This would delete all the environments of the project from the cloud and wipe out all the local files created by Amplify CLI) Yes
⠋ Deleting resources from the cloud. This may take a few minutes…

Run the following commands to delete the Neptune instances and cluster that you created earlier.

$ aws neptune delete-db-instance --db-instance-identifier ws-neptune
$ aws neptune delete-db-instance --db-instance-identifier ws-read-neptune
$ aws neptune delete-db-cluster --db-cluster-identifier ws-database-1 --skip-final-snapshot

On the Amazon S3 console, select the bucket that stores vertex.csv and edge.csv.

Choose Empty and then choose Delete.

On the IAM console, choose the role used for bulk loading (NeptuneLoadFromS3) and choose Delete Role.

On the Amazon VPC console, choose the VPC endpoint you created and choose Delete endpoint.

On the AWS Cloud9 console, choose the environment you created and choose Delete.

Summary

In this post, we walked you through how to use Amplify to develop an application that interacts with the graph data in Neptune. We created a Neptune database instance, and then added an authentication mechanism, backend functions, and an API to the application.

To start developing your own application using Neptune and Amplify, see the user guides for Neptune and Amplify.

About the authors

Chiaki Ishio is a Solutions Architect in Japan’s Process Manufacturing and Healthcare Life Sciences team. She is passionate about helping her customers design and build their systems in AWS. Outside of work, she enjoys playing the piano.

Hidenori Koizumi is a Prototyping Solutions Architect in Japan’s Public Sector. He is an expert in developing solutions in the research field based on his scientific background (biology, chemistry, and more). He has recently been developing applications with AWS Amplify or AWS CDK. He likes traveling and photography.
